Nov 30, 2025·8 min

AI for CRUD Apps: What It Automates vs What Needs Humans

A practical guide to what AI can reliably automate in CRUD apps (scaffolding, queries, tests) and where human judgment is vital (models, rules, security).


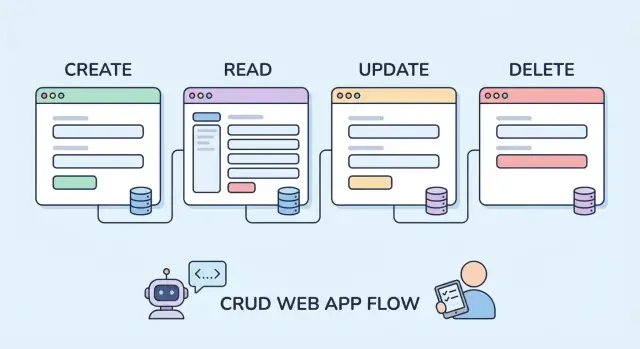

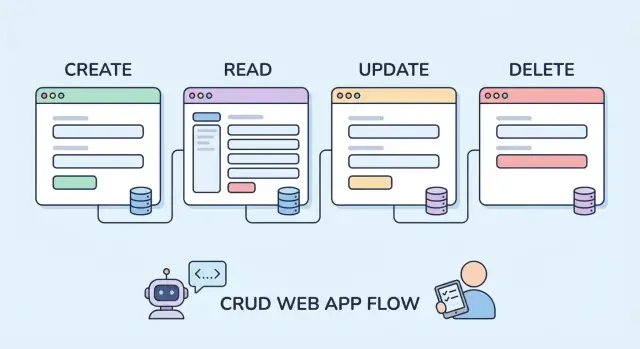

CRUD apps are the everyday tools that let people Create, Read, Update, and Delete data—think customer lists, inventory trackers, appointment systems, internal dashboards, and admin panels. They’re common because most businesses run on structured records and repeatable workflows.

When people say “AI for CRUD apps,” they usually don’t mean an AI that magically ships a finished product on its own. They mean an assistant that speeds up routine engineering work by producing drafts you can edit, review, and harden.

In practice, AI automation is closer to:

That can save hours—especially on boilerplate—because CRUD apps often follow patterns.

AI can make you faster, but it doesn’t make the result automatically correct. Generated code can:

So the right expectation is acceleration, not certainty. You still review, test, and decide.

AI is strongest where the work is patterned and the “right answer” is mostly standard: scaffolding, CRUD endpoints, basic forms, and predictable tests.

Humans remain essential where decisions are contextual: data meaning, access control, security/privacy, edge cases, and the rules that make your app unique.

CRUD apps tend to be built from the same Lego bricks: data models, migrations, forms, validation, list/detail pages, tables and filters, endpoints (REST/GraphQL/RPC), search and pagination, auth, and permissions. That repeatability is exactly why AI-assisted generation can feel so fast—many projects share the same shapes, even when the business domain changes.

Patterns show up everywhere:

Because these patterns are consistent, AI is good at producing a first draft: basic models, scaffolded routes, simple controllers/handlers, standard UI forms, and starter tests. It’s similar to what frameworks and code generators already do—AI just adapts faster to your naming and conventions.

CRUD apps stop being “standard” the moment you add meaning:

These are the areas where a small oversight creates big issues: unauthorized access, irreversible deletions, or records that can’t be reconciled.

Use AI to automate patterns, then deliberately review consequences. If the output affects who can see/change data, or whether data stays correct over time, treat it as high-risk and verify it like production-critical code.

AI is at its best when the work is repetitive, structurally predictable, and easy to verify. CRUD apps have a lot of that: the same patterns repeated across models, endpoints, and screens. Used this way, AI can save hours without taking ownership of the product’s meaning.

Given a clear description of an entity (fields, relationships, and basic actions), AI can quickly draft the skeleton: model definitions, controllers/handlers, routes, and basic pages. You still need to confirm naming, data types, and relationships—but starting from a complete draft is faster than building every file from scratch.

For common operations—list, detail, create, update, delete—AI can generate handler code that follows a conventional structure: parse input, call a data-access layer, return a response.

This is especially useful when you’re setting up many similar endpoints at once. The key is reviewing the edges: filtering, pagination, error codes, and any “special cases” that aren’t actually standard.
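To make that conventional structure concrete, here is a minimal sketch in plain Python with no web framework. The `Order` fields, the `OrderStore` class, and the `(status, body)` return shape are all hypothetical names chosen for illustration, not something a particular tool produces.

```python
from __future__ import annotations

from dataclasses import asdict, dataclass


@dataclass
class Order:
    id: int
    customer: str
    total_cents: int


class OrderStore:
    """Hypothetical in-memory data-access layer standing in for a real database."""

    def __init__(self) -> None:
        self._rows: dict[int, Order] = {}
        self._next_id = 1

    def create(self, customer: str, total_cents: int) -> Order:
        order = Order(self._next_id, customer, total_cents)
        self._rows[order.id] = order
        self._next_id += 1
        return order

    def get(self, order_id: int) -> Order | None:
        return self._rows.get(order_id)


def create_order(store: OrderStore, payload: dict) -> tuple[int, dict]:
    # 1. Parse and validate input.
    customer = payload.get("customer")
    total = payload.get("total_cents")
    if not isinstance(customer, str) or not customer.strip():
        return 400, {"error": "customer is required"}
    if not isinstance(total, int) or isinstance(total, bool) or total < 0:
        return 400, {"error": "total_cents must be a non-negative integer"}
    # 2. Call the data-access layer.
    order = store.create(customer.strip(), total)
    # 3. Return a response.
    return 201, {"data": asdict(order)}


def get_order(store: OrderStore, order_id: int) -> tuple[int, dict]:
    order = store.get(order_id)
    if order is None:
        return 404, {"error": "order not found"}
    return 200, {"data": asdict(order)}
```

The parts worth a human look are exactly the branches: which inputs are rejected, and which status code each failure returns.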

CRUD often needs internal tooling: list/detail pages, basic forms, table views, and an admin-style navigation. AI can produce functional first versions of these screens quickly.

Treat these as prototypes you harden: check empty states, loading states, and whether the UI matches how people actually search and scan data.

AI is surprisingly helpful at mechanical refactors: renaming fields across files, moving modules, extracting helpers, or standardizing patterns (like request parsing or response formatting). It can also suggest where duplication exists.

Still, you should run tests and inspect diffs—refactors fail in subtle ways when two “similar” cases aren’t truly equivalent.

AI can draft README sections, endpoint descriptions, and inline comments that explain intent. That’s useful for onboarding and code reviews—so long as you verify everything it claims. Outdated or incorrect docs are worse than none.

AI can be genuinely useful at the start of data modeling because it’s good at turning plain-language entities into a first-pass schema. If you describe “Customer, Invoice, LineItem, Payment,” it can draft tables/collections, typical fields, and reasonable defaults (IDs, timestamps, status enums).
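As an illustration, a first-pass schema for those four entities might look like the sketch below, written against SQLite through Python's standard `sqlite3` module. Every column name, the status values, and the choice to store money as integer cents are assumptions made for the example; a real draft would need checking against your own workflow.

```python
import sqlite3

# Hypothetical first-pass schema: IDs, timestamps, and a status "enum" via CHECK.
SCHEMA = """
CREATE TABLE customer (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    email TEXT NOT NULL UNIQUE,
    created_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE TABLE invoice (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(id),
    status TEXT NOT NULL DEFAULT 'draft'
        CHECK (status IN ('draft', 'sent', 'paid', 'void')),
    created_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE TABLE line_item (
    id INTEGER PRIMARY KEY,
    invoice_id INTEGER NOT NULL REFERENCES invoice(id),
    description TEXT NOT NULL,
    quantity INTEGER NOT NULL CHECK (quantity > 0),
    unit_price_cents INTEGER NOT NULL CHECK (unit_price_cents >= 0)
);
CREATE TABLE payment (
    id INTEGER PRIMARY KEY,
    invoice_id INTEGER NOT NULL REFERENCES invoice(id),
    amount_cents INTEGER NOT NULL CHECK (amount_cents > 0),
    paid_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
"""


def open_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    # SQLite does not enforce foreign keys unless this pragma is switched on.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    return conn
```

A draft like this is only a starting point: it says nothing about partial payments, voided invoices with payments attached, or whether an email really is unique per customer.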

For straightforward changes, AI speeds up the dull parts:

For example, it can suggest indexes (such as tenant_id + created_at, status, email), as long as you verify them against real queries.

This is especially handy when you’re exploring: you can iterate on a model quickly, then tighten it once the workflow is clearer.

Data models hide “gotchas” that AI can’t reliably infer from a short prompt:

These aren’t syntax problems; they’re business and risk decisions.

A migration that’s “correct” can still be unsafe. Before running anything on real data, you need to decide:

Use AI to draft the migration and the rollout plan, but treat the plan as a proposal—your team owns the consequences.

Forms are where CRUD apps meet real humans. AI is genuinely useful here because the work is repetitive: turning a schema into inputs, wiring up basic validation, and keeping client and server in sync.

Given a data model (or even a sample JSON payload), AI can quickly draft:

This speeds up the “first usable version” dramatically, especially for standard admin-style screens.

Validation isn’t just about rejecting bad data; it’s about expressing intent. AI can’t reliably infer what “good” means for your users.

You still need to decide things like:

A common failure mode is AI enforcing rules that feel reasonable but are wrong for your business (e.g., forcing a strict phone format or rejecting apostrophes in names).

AI can propose options, but you choose the source of truth:

A practical approach: let AI generate the first pass, then review each rule and ask, “Is this a user convenience, an API contract, or a hard data invariant?”
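One way to make that question explicit in code is to tag each rule with its tier, so a convenience hint can never block a save. The sketch below is only an illustration: the `Tier` names, the example fields, and the three rules are assumptions, not rules from any real product.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Tier(Enum):
    UX_HINT = "ux_hint"            # nudge the user, never block
    API_CONTRACT = "api_contract"  # reject the request
    INVARIANT = "invariant"        # should also be enforced in the database


@dataclass(frozen=True)
class Rule:
    field: str
    tier: Tier
    check: Callable[[object], bool]
    message: str


# Hypothetical rules; note that the name rule accepts apostrophes on purpose.
RULES = [
    Rule("name", Tier.API_CONTRACT,
         lambda v: isinstance(v, str) and v.strip() != "", "name is required"),
    Rule("phone", Tier.UX_HINT,
         lambda v: v is None or sum(c.isdigit() for c in str(v)) >= 7,
         "phone number looks short"),
    Rule("quantity", Tier.INVARIANT,
         lambda v: isinstance(v, int) and v > 0, "quantity must be positive"),
]


def validate(payload: dict) -> tuple[list[str], list[str]]:
    """Return (errors, warnings); only errors should block the write."""
    errors: list[str] = []
    warnings: list[str] = []
    for rule in RULES:
        if not rule.check(payload.get(rule.field)):
            target = warnings if rule.tier is Tier.UX_HINT else errors
            target.append(f"{rule.field}: {rule.message}")
    return errors, warnings
```

Reviewing a generated validator then becomes a matter of checking each rule's tier, which is a much shorter conversation than rereading every regex.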

CRUD APIs tend to follow repeatable patterns: list records, fetch one by ID, create, update, delete, and sometimes search. That makes them a sweet spot for AI assistance—especially when you need a lot of similar endpoints across multiple resources.

AI is typically good at drafting standard list/search/filter endpoints and the “glue code” around them. For example, it can quickly generate:

Typical output includes route definitions (GET /orders, GET /orders/:id, POST /orders, etc.) and consistent response shapes across resources.

Consistency matters more than it sounds: inconsistent API shapes create hidden work for front-end teams and integrations. AI can help enforce patterns like “always return { data, meta }” or “dates are always ISO-8601 strings.”
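A shared response helper is one simple way to hold that line. The sketch below assumes a `{ data, meta }` envelope with `count`, `total`, and `next_cursor` keys in `meta`; those key names are illustrative choices, not a standard.

```python
from datetime import datetime, timezone


def to_iso(value: datetime) -> str:
    """Serialize a timezone-aware datetime as an ISO-8601 string in UTC."""
    if value.tzinfo is None:
        raise ValueError("refusing to serialize a naive datetime")
    return value.astimezone(timezone.utc).isoformat()


def envelope(items: list, *, total=None, next_cursor=None) -> dict:
    """Wrap any list response in the same { data, meta } shape."""
    return {
        "data": items,
        "meta": {"count": len(items), "total": total, "next_cursor": next_cursor},
    }
```

If every list handler returns `envelope(...)`, a reviewer can spot a nonconforming endpoint by the absence of that call.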

AI can add pagination and sorting quickly, but it won’t reliably choose the right strategy for your data.

Offset pagination (?page=10) is simple, but deep pages get slow because the database still has to walk past every skipped row, and results can shift or repeat when rows are inserted or deleted between requests. Cursor pagination (using a “next cursor” token) performs better at scale, but it’s harder to implement correctly, especially when users can sort by multiple fields.

You still need to decide what “correct” means for your product: stable ordering, how far back users need to browse, and whether you can afford expensive counts.
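For the simplest case, ordering by a single unique column, cursor pagination can be sketched as follows. This uses Python's standard `sqlite3` module and a hypothetical `orders` table; the cursor here is just the last `id` seen, whereas a production API would more likely hand out an opaque token and would need extra care for multi-field sorts.

```python
import sqlite3


def make_demo_db(n: int = 5) -> sqlite3.Connection:
    """Build a throwaway in-memory `orders` table (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO orders (customer) VALUES (?)",
        [(f"customer-{i}",) for i in range(1, n + 1)],
    )
    conn.commit()
    return conn


def list_orders(conn: sqlite3.Connection, limit: int = 2, cursor=None):
    """Return (page, next_cursor), paging by id rather than by offset."""
    if limit < 1:
        raise ValueError("limit must be at least 1")
    last_id = 0 if cursor is None else cursor
    # Fetch one extra row to learn whether another page exists.
    rows = conn.execute(
        "SELECT id, customer FROM orders WHERE id > ? ORDER BY id LIMIT ?",
        (last_id, limit + 1),
    ).fetchall()
    page = rows[:limit]
    next_cursor = page[-1][0] if len(rows) > limit else None
    return page, next_cursor
```

Because each page starts from "rows after the last id I saw," deleting or inserting earlier rows between requests does not shift what the next page returns.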

Query code is where small mistakes become big outages. AI-generated API logic often needs review for:

Before you accept generated code, sanity-check it against realistic data volumes. How many records will an average customer have? What does “search” mean at 10k vs 10M rows? Which endpoints need indexes, caching, or strict rate limits?

AI can draft the patterns, but humans need to set the guardrails: performance budgets, safe query rules, and what the API is allowed to do under load.

AI is surprisingly good at producing a lot of test code quickly—especially for CRUD apps where patterns repeat. The trap is thinking “more tests” automatically means “better quality.” AI can generate volume; you still have to decide what matters.

If you give AI a function signature, a short description of expected behavior, and a few examples, it can draft unit tests fast. It’s also effective at creating happy‑path integration tests for common flows like “create → read → update → delete,” including wiring up requests, asserting status codes, and checking response shapes.

Another strong use: scaffolding test data. AI can draft factories/fixtures (users, records, related entities) and common mocking patterns (time, UUIDs, external calls) so you’re not hand-writing repetitive setup every time.
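A factory of that kind can be very small. The sketch below assumes a user record shaped as a plain dict with `id`, `email`, `role`, and `created_at` keys; the field names and the fixed timestamp are placeholders for whatever your models actually use.

```python
import itertools
from datetime import datetime, timezone

_ids = itertools.count(1)
# A frozen "now" keeps time-dependent assertions deterministic.
FIXED_NOW = datetime(2025, 1, 1, tzinfo=timezone.utc)


def make_user(**overrides) -> dict:
    """Build a valid user with unique defaults; tests override only what they care about."""
    n = next(_ids)
    user = {
        "id": n,
        "email": f"user{n}@example.com",
        "role": "customer",
        "created_at": FIXED_NOW,
    }
    user.update(overrides)
    return user
```

A test that needs a manager then reads `make_user(role="manager")`, which keeps the setup focused on the one attribute the test is about.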

AI tends to optimize for coverage numbers and obvious scenarios. Your job is to choose meaningful cases:

A practical rule: let AI draft the first pass, then review each test and ask, “What failure would this catch in production?” If the answer is “none,” delete it or rewrite it into something that protects real behavior.

Authentication (who a user is) is usually straightforward in CRUD apps. Authorization (what they’re allowed to do) is where projects get breached, audited, or quietly leak data for months. AI can speed up the mechanics, but it can’t take responsibility for the risk.

If you give AI clear requirements text (“Managers can edit any order; customers can only view their own; support can refund but not change addresses”), it can draft a first pass at RBAC/ABAC rules and map them to roles, attributes, and resources. Treat this as a starting sketch, not a decision.
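Here is roughly what such a first pass could look like for the example requirements above, as a single deny-by-default policy function. The action names and the assumption that managers and support staff may also view orders are mine; the requirements text does not say, which is exactly the kind of gap a human has to close.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    id: int
    role: str  # "manager", "customer", or "support"


@dataclass(frozen=True)
class Order:
    id: int
    owner_id: int


def can(user: User, action: str, order: Order) -> bool:
    """Return True only for explicitly allowed (role, action) pairs."""
    if user.role == "manager":
        # "Managers can edit any order"; viewing is assumed to be implied.
        return action in {"view", "edit"}
    if user.role == "support":
        # "Support can refund but not change addresses"; viewing is assumed.
        return action in {"view", "refund"}
    if user.role == "customer":
        # "Customers can only view their own."
        return action == "view" and order.owner_id == user.id
    return False  # unknown or missing roles get nothing
```

Notice how many decisions the sketch had to guess at: whether managers can refund, whether support can see every order, and what an unknown role should do.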

AI is also useful for spotting inconsistent authorization, especially in codebases with many handlers/controllers. It can scan for endpoints that authenticate users but forget to enforce permissions, or for “admin-only” actions that are missing a guard in one code path.

Finally, it can generate the plumbing: middleware stubs, policy files, decorators/annotations, and boilerplate checks.

You still have to define the threat model (who might abuse the system), least-privilege defaults (what happens when a role is missing), and audit needs (what must be logged, retained, and reviewed). Those choices depend on your business, not your framework.

AI can help you get to “implemented.” Only you can get to “safe.”

AI is helpful here because error handling and observability follow familiar patterns. It can quickly set up “good enough” defaults—then you refine them to match your product, risk profile, and what your team actually needs to know at 2 a.m.

AI can suggest a baseline package of practices:

A typical AI-generated starting point for an API error format might look like:

    {
      "error": {
        "code": "VALIDATION_ERROR",
        "message": "Email is invalid",
        "details": [{"field": "email", "reason": "format"}],
        "request_id": "..."
      }
    }

That consistency makes client apps easier to build and support.
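One small helper is usually enough to keep every handler on that format. In this sketch the function name `error_body` is hypothetical, and generating a UUID when no request ID is supplied is an assumption; many systems take the ID from an incoming header or tracing middleware instead.

```python
import uuid


def error_body(code: str, message: str, details=None, request_id=None) -> dict:
    """Build an error payload in the shape shown above."""
    return {
        "error": {
            "code": code,
            "message": message,
            "details": details or [],
            # Fall back to a fresh ID so every error can be found in the logs.
            "request_id": request_id or str(uuid.uuid4()),
        }
    }
```

For example, `error_body("VALIDATION_ERROR", "Email is invalid", [{"field": "email", "reason": "format"}])` yields the structure from the sample with a generated `request_id`.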

AI can propose metric names and a starter dashboard: request rate, latency (p50/p95), error rate by endpoint, queue depth, and database timeouts. Treat these as initial ideas, not a finished monitoring strategy.

The risky part isn’t adding logs—it’s choosing what not to capture.

You decide:

Finally, define what “healthy” means for your users: “successful checkouts,” “projects created,” “emails delivered,” not just “servers are up.” That definition drives alerts that signal real customer impact instead of noise.

CRUD apps look simple because the screens are familiar: create a record, update fields, search, delete. The hard part is everything your organization means by those actions.

AI can generate controllers, forms, and database code quickly—but it can’t infer the rules that make your app correct for your business. Those rules live in policy documents, tribal knowledge, and edge-case decisions people make daily.

A reliable CRUD workflow usually hides a decision tree:

Approvals are a good example. “Manager approval required” sounds straightforward until you define: what if the manager is on leave, the amount changes after approval, or the request spans two departments? AI can draft the scaffolding for an approval state machine, but you must define the rules.
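The scaffolding part can be as small as a transition table. The states, events, and especially the rule that changing the amount after approval sends a request back for re-approval are assumptions made for this sketch; they are precisely the decisions your team has to make.

```python
# Hypothetical approval workflow: (current_state, event) -> next_state.
TRANSITIONS = {
    ("draft", "submit"): "pending_approval",
    ("pending_approval", "approve"): "approved",
    ("pending_approval", "reject"): "rejected",
    # Assumed rule: an amount change after approval requires re-approval.
    ("approved", "change_amount"): "pending_approval",
}


def transition(state: str, event: str) -> str:
    """Return the next state, or raise if the move is not explicitly allowed."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"cannot {event!r} a request in state {state!r}") from None
```

Keeping the rules in one table makes the open questions visible: there is no entry yet for a manager on leave or a request that spans two departments.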

Stakeholders often disagree without realizing it. One team wants “fast processing,” another wants “tight controls.” AI will happily implement whichever instruction appears most recent, most explicit, or most confidently phrased.

Humans need to reconcile conflicts and write a single source of truth: what the rule is, why it exists, and what success looks like.

Small naming choices create big downstream effects. Before generating code, agree on:

Business rules force trade-offs: simplicity vs flexibility, strictness vs speed. AI can offer options, but it can’t know your risk tolerance.

A practical approach: write 10–20 “rule examples” in plain language (including exceptions), then ask AI to translate them into validations, transitions, and constraints—while you review every edge condition for unintended outcomes.

AI can draft CRUD code quickly, but security and compliance don’t work on “good enough.” A generated controller that saves records and returns JSON may look fine in a demo—and still create a breach in production. Treat AI output as untrusted until reviewed.

Common pitfalls show up in otherwise clean-looking code:

A common example is mass assignment, where a request sets fields the client should never control (e.g., role=admin, isPaid=true).

CRUD apps fail most often at the seams: list endpoints, “export CSV,” admin views, and multi-tenant filtering. AI can forget to scope queries (e.g., by account_id) or may assume the UI prevents access. Humans must verify:
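Two of these checks are easy to show in miniature: an allowlist of client-editable fields, and a listing that is always scoped by account. This is a sketch over plain dicts; the field names and the idea that `account_id` comes from the authenticated session (never from the request body) are assumptions for illustration.

```python
from __future__ import annotations

# Only these fields may be changed by a client request (hypothetical list).
ALLOWED_UPDATE_FIELDS = frozenset({"name", "email"})


def apply_update(record: dict, payload: dict) -> dict:
    """Return an updated copy, rejecting any field outside the allowlist."""
    forbidden = set(payload) - ALLOWED_UPDATE_FIELDS
    if forbidden:
        raise ValueError(f"fields not allowed: {sorted(forbidden)}")
    return {**record, **payload}


def list_for_account(rows: list[dict], account_id: int) -> list[dict]:
    """Scope a listing to one tenant; account_id should come from the session."""
    return [row for row in rows if row["account_id"] == account_id]
```

Rejecting unknown fields loudly, rather than silently dropping them, is a design choice: it surfaces client bugs and probing attempts in your error logs.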

Requirements like data residency, audit trails, and consent depend on your business, geography, and contracts. AI can suggest patterns, but you must define what “compliant” means: what gets logged, how long data is retained, who can access it, and how deletion requests are handled.

Run security reviews, vet dependencies, and plan incident response (alerts, rotation of secrets, rollback steps). Set clear “stop the line” release criteria: if access rules are ambiguous, sensitive data handling is unverified, or auditability is missing, the release pauses until resolved.

AI is most valuable in CRUD work when you treat it like a fast draft partner—not an author. The goal is simple: shorten the path from idea to working code while keeping accountability for correctness, security, and product intent.

Tools like Koder.ai fit this model well: you can describe a CRUD feature in chat, generate a working draft across UI and API, and then iterate with guardrails (like planning mode, snapshots, and rollback) while keeping humans responsible for permissions, migrations, and business rules.

Don’t ask for “a user management CRUD.” Ask for a specific change with boundaries.

Include: framework/version, existing conventions, data constraints, error behavior, and what “done” means. Example acceptance criteria: “Reject duplicates, return 409,” “Soft-delete only,” “Audit log required,” “No N+1 queries,” “Must pass existing test suite.” This reduces plausible-but-wrong code.
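Acceptance criteria like these translate directly into code and tests. As a hedged sketch, here is what “Reject duplicates, return 409” and “Soft-delete only” might look like using Python's standard `sqlite3` module; the `users` table, the `deleted_at` column, and the `(status, body)` return shape are all hypothetical.

```python
import sqlite3
from datetime import datetime, timezone


def open_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE users ("
        "id INTEGER PRIMARY KEY, email TEXT NOT NULL UNIQUE, deleted_at TEXT)"
    )
    return conn


def create_user(conn: sqlite3.Connection, email: str):
    try:
        with conn:  # commits on success, rolls back on error
            cur = conn.execute("INSERT INTO users (email) VALUES (?)", (email,))
    except sqlite3.IntegrityError:
        # The UNIQUE constraint is the source of truth for duplicates.
        return 409, {"error": "email already exists"}
    return 201, {"data": {"id": cur.lastrowid, "email": email}}


def delete_user(conn: sqlite3.Connection, user_id: int):
    # Soft delete: mark the row instead of removing it.
    with conn:
        cur = conn.execute(
            "UPDATE users SET deleted_at = ? WHERE id = ? AND deleted_at IS NULL",
            (datetime.now(timezone.utc).isoformat(), user_id),
        )
    if cur.rowcount == 1:
        return 204, None
    return 404, {"error": "user not found"}
```

Note one consequence the criteria did not spell out: with this schema, a soft-deleted email still counts as a duplicate. Whether that is right is a product decision, not a coding one.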

Use AI to propose 2–3 approaches (e.g., “single table vs join table,” “REST vs RPC endpoint shape”), and require trade-offs: performance, complexity, migration risk, permission model. Pick one option and record the reason in the ticket/PR so future changes don’t drift.

Treat some files as “always human-reviewed”:

Make this a checklist in your PR template (or in /contributing).

Maintain a small, editable spec (README in the module, ADR, or a /docs page) for core entities, validation rules, and permission decisions. Paste relevant excerpts into prompts so generated code stays aligned instead of “inventing” rules.

Track outcomes: cycle time for CRUD changes, bug rate (especially permission/validation defects), support tickets, and user success metrics (task completion, fewer manual workarounds). If those aren’t improving, tighten prompts, add gates, or reduce AI scope.

“AI for CRUD” usually means using AI to generate drafts of repetitive work—models, migrations, endpoints, forms, and starter tests—based on your description.

It’s best viewed as acceleration for boilerplate, not a guarantee of correctness or a replacement for product decisions.

Use AI where the work is patterned and easy to verify:

Avoid delegating judgment-heavy decisions like permissions, data meaning, and risky migrations without review.

Generated code can:

Treat output as untrusted until it passes review and tests.

Provide constraints and acceptance criteria, not just a feature name. Include:

The more “definition of done” you supply, the fewer plausible-but-wrong drafts you’ll get.

AI can propose a first-pass schema (tables, fields, enums, timestamps), but it can’t reliably infer:

Use AI to draft options, then validate them against real workflows and failure scenarios.

A migration can be syntactically correct and still be dangerous. Before running it on production data, check:

AI can draft the migration and rollout plan, but you should own the risk review and execution plan.

AI is great at mapping schema fields to inputs and generating basic validators (required, min/max, format). The risky part is semantics:

Review each rule and decide if it’s UX convenience, API contract, or hard invariant.

AI can quickly scaffold endpoints, filters, pagination, and DTO/serializer mappings. Then review for sharp edges:

Validate against expected data volumes and performance budgets.

AI can generate lots of tests, but you decide which ones matter. Prioritize:

If a test wouldn’t catch a real production failure, rewrite or delete it.

Use AI to draft RBAC/ABAC rules and the plumbing (middleware, policy stubs), but treat authorization as high-risk.

A practical checklist:

Humans must define the threat model, least-privilege defaults, and audit needs.