Jul 22, 2025·8 min

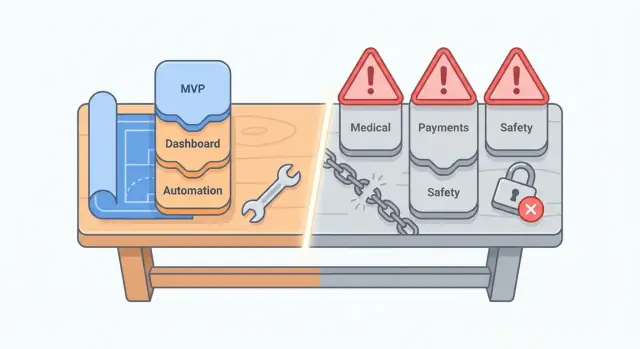

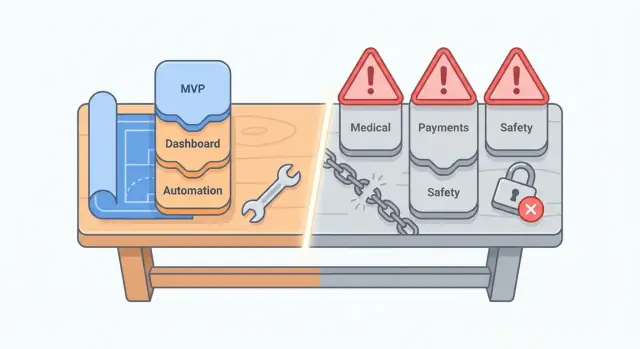

Best Products to Build With AI Coding Tools (and What to Avoid)

Learn which product types fit AI coding tools best—MVPs, internal apps, dashboards, automations—and which to avoid, like safety- or compliance-critical systems.

AI coding tools can write functions, generate boilerplate, translate ideas into starter code, and suggest fixes when something breaks. They’re especially good at speeding up familiar patterns: forms, CRUD screens, simple APIs, data transforms, and UI components.

They’re less reliable when requirements are vague, the domain rules are complex, or the “correct” output can’t be quickly verified. They may hallucinate libraries, invent configuration options, or produce code that works in one scenario but fails in edge cases.

If you’re evaluating a platform (not just a code assistant), focus on whether it helps you turn specs into a testable app and iterate safely. For example, vibe-coding platforms like Koder.ai are designed around producing working web/server/mobile apps from chat—useful when you can validate outcomes quickly and want fast iteration with features like snapshots/rollback and source-code export.

Choosing the right product is mostly about how easy it is to validate outcomes, not whether you’re using JavaScript, Python, or something else. If you can test your product with clear inputs and outputs and fast feedback loops, and mistakes are cheap, then AI-assisted coding is a strong fit.

If your product requires deep expertise to judge correctness (legal interpretations, medical decisions, financial compliance) or failures are costly, you’ll often spend more time verifying and reworking AI-generated code than you’ll save.

Before you build, define what “done” means in observable terms: screens that must exist, actions users can take, and measurable results (e.g., “imports a CSV and shows totals that match this sample file”). Products with concrete acceptance criteria are easier to build safely with AI.
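To make that concrete, here is a minimal sketch of the CSV example written as an automated acceptance check. The sample data, the `region`/`amount` column names, and the `import_totals` function are hypothetical; the point is that a person writes the expected totals down before any code is generated, so the check can actually catch wrong output.

```python
import csv
import io
from decimal import Decimal

# Hypothetical fixture: a small sample file plus totals a person computed by hand.
SAMPLE_CSV = """region,amount
north,120.50
south,80.25
north,19.50
"""
EXPECTED_TOTALS = {"north": Decimal("140.00"), "south": Decimal("80.25")}


def import_totals(csv_text: str) -> dict[str, Decimal]:
    """Parse CSV text and sum the `amount` column per `region`."""
    totals: dict[str, Decimal] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        region = row["region"].strip()
        totals[region] = totals.get(region, Decimal("0")) + Decimal(row["amount"])
    return totals


def test_import_matches_sample() -> None:
    # The acceptance criterion from the spec, as an executable check.
    assert import_totals(SAMPLE_CSV) == EXPECTED_TOTALS


if __name__ == "__main__":
    test_import_matches_sample()
    print("acceptance check passed")
```

Using `Decimal` rather than floats keeps money-like totals exact, so the comparison doesn’t fail on rounding noise.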

This article ends with a practical checklist you can run in a few minutes to decide whether a product is a good candidate—and what guardrails to add when it’s borderline.

Even with great tools, you still need human review and testing. Plan for code review, basic security checks, and automated tests for the parts that matter. Think of AI as a fast collaborator that drafts and iterates—not a replacement for responsibility, validation, and release discipline.

AI coding tools shine when you already know what you want and can describe it clearly. Treat them as extremely fast assistants: they can draft code, suggest patterns, and fill in tedious pieces—but they don’t automatically understand your real product constraints.

They’re especially good at accelerating “known work,” such as routing, forms, CRUD screens, simple APIs, data transforms, and UI components.

Used well, this can compress days of setup into hours—especially for MVPs and internal tools.

AI tools tend to break down when the problem is underspecified or when details matter more than speed:

AI-generated code often optimizes for the happy path: the ideal sequence where everything succeeds and users behave predictably. Real products live in the unhappy paths—failed payments, partial outages, duplicate requests, and users who click buttons twice.

Treat AI output as a draft. Verify correctness with code review, automated tests for the parts that matter, and basic security checks.

The more costly a bug is, the more you should lean on human review and automated tests—not just fast generation.

MVPs (minimum viable products) and “clickable-to-working” prototypes are a sweet spot for AI coding tools because success is measured by learning speed, not perfection. The goal is narrow scope: ship quickly, get it in front of real users, and answer one or two key questions (Will anyone use this? Will they pay? Does this workflow save time?).

A practical MVP is a short time-to-learn project: something you can build in days or a couple of weeks, then refine based on feedback. AI tools are great at getting you to a functional baseline fast—routing, forms, simple CRUD screens, basic auth—so you can focus your energy on the problem and the user experience.

Keep the first version centered on 1–2 core flows. For example:

Define a measurable outcome for each flow (e.g., “user can create an account and finish a booking in under 2 minutes” or “a team member can submit a request without Slack back-and-forth”).

These are strong candidates for AI-assisted MVP development because they’re easy to validate and easy to iterate:

What makes these work is not feature breadth, but clarity of the first use case.

Assume your MVP will pivot. Structure your prototype so changes are cheap:

A useful pattern is: ship a “happy path” first, instrument it (even lightweight analytics), then expand only where users get stuck. That’s where AI coding tools provide the most leverage: fast iteration cycles rather than one big build.
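One lightweight way to see where users get stuck is to count how many distinct users reach each step of the happy path. The sketch below assumes you already log `(user_id, step)` events; the step names and sample events are hypothetical.

```python
# Hypothetical event log: (user_id, step) pairs from lightweight analytics.
EVENTS = [
    ("u1", "signup"), ("u1", "pick_slot"), ("u1", "confirm"),
    ("u2", "signup"), ("u2", "pick_slot"),
    ("u3", "signup"),
    ("u4", "signup"),
]

# The happy path, in order. Step names are illustrative.
FUNNEL = ["signup", "pick_slot", "confirm"]


def funnel_counts(events: list[tuple[str, str]], funnel: list[str]) -> list[tuple[str, int]]:
    """Count distinct users who reached each step of the funnel."""
    users_per_step: dict[str, set[str]] = {step: set() for step in funnel}
    for user_id, step in events:
        if step in users_per_step:
            users_per_step[step].add(user_id)
    return [(step, len(users_per_step[step])) for step in funnel]


def biggest_drop(counts: list[tuple[str, int]]) -> tuple[str, str, int]:
    """Return (from_step, to_step, users_lost) for the transition that loses the most users."""
    drops = [
        (from_step, to_step, from_n - to_n)
        for (from_step, from_n), (to_step, to_n) in zip(counts, counts[1:])
    ]
    return max(drops, key=lambda d: d[2])


if __name__ == "__main__":
    counts = funnel_counts(EVENTS, FUNNEL)
    print(counts)                # [('signup', 4), ('pick_slot', 2), ('confirm', 1)]
    print(biggest_drop(counts))  # ('signup', 'pick_slot', 2)
```

With this sample data the largest loss is between signing up and picking a slot, so that step would be the place to spend the next iteration.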

Internal tools are one of the safest, highest-leverage places to use AI coding tools. They’re built for a known group of users, used in a controlled environment, and the “cost of being slightly imperfect” is usually manageable (because you can fix and ship updates quickly).

These projects tend to have clear requirements and repeatable screens—perfect for AI-assisted scaffolding and iteration:

Small-team internal tools typically have:

This is where AI coding tools shine: generating CRUD screens, form validation, basic UI, and wiring up a database—while you focus on workflow details and usability.

If you want this accelerated end-to-end, platforms like Koder.ai are often a good match for internal tools: they’re optimized for spinning up React-based web apps with a Go + PostgreSQL backend, plus deployment/hosting and custom domains when you’re ready to share the tool with the team.

Internal doesn’t mean “no standards.” Make sure you include user accounts, roles and permissions, and logging from the start.

Pick a single team and solve one painful process end-to-end. Once it’s stable and trusted, extend the same foundation—users, roles, logging—into the next workflow instead of starting over each time.
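As a minimal sketch of that shared foundation, assuming a simple role-to-permission map, the check below authorizes an action and writes an audit entry for every attempt. The role names, actions, and the in-memory `AUDIT_LOG` list are placeholders; a real tool would persist the log and load roles from your identity provider or database.

```python
from datetime import datetime, timezone

# Hypothetical roles for an internal request-tracking tool.
PERMISSIONS: dict[str, set[str]] = {
    "viewer": {"read"},
    "editor": {"read", "update"},
    "admin": {"read", "update", "delete"},
}

# In-memory stand-in for a persistent audit table.
AUDIT_LOG: list[dict] = []


def authorize(user: str, role: str, action: str, target: str) -> bool:
    """Allow the action only if the role grants it, and log every attempt."""
    allowed = action in PERMISSIONS.get(role, set())  # unknown roles get nothing
    AUDIT_LOG.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "target": target,
        "allowed": allowed,
    })
    return allowed
```

Logging denied attempts as well as allowed ones is what makes the log useful when someone asks why they couldn’t do something.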

Dashboards and reporting apps are a sweet spot for AI coding tools because they’re mostly about pulling data together, presenting it clearly, and saving people time. When something goes wrong, the impact is often “we made a decision a day late,” not “the system broke production.” That lower downside makes this category practical for AI-assisted builds.

Start with reporting that replaces spreadsheet busywork:

A simple rule: ship read-only first. Let the app query approved sources and visualize results, but avoid write-backs (editing records, triggering actions) until you trust the data and permissions. Read-only dashboards are easier to validate, safer to roll out broadly, and faster to iterate.
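If the reporting source is a database, one way to enforce “read-only first” is at the connection level instead of trusting the app to behave. Here is a minimal sketch with Python’s built-in `sqlite3` module, which can open a database read-only through a `mode=ro` URI; the `orders` table and its rows are made up for the demo. For server databases the usual equivalent is a dedicated user with SELECT-only grants.

```python
import os
import sqlite3
import tempfile
from pathlib import Path


def open_read_only(path: str) -> sqlite3.Connection:
    """Open an existing SQLite file read-only; any write raises OperationalError."""
    uri = Path(path).resolve().as_uri() + "?mode=ro"
    return sqlite3.connect(uri, uri=True)


def demo() -> tuple[float, bool]:
    """Build a throwaway DB, then show that reads work and writes are rejected."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "reports.db")

        # Setup only: a normal read-write connection creates some sample data.
        setup = sqlite3.connect(path)
        setup.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
        setup.execute("INSERT INTO orders (total) VALUES (10.0), (32.5)")
        setup.commit()
        setup.close()

        ro = open_read_only(path)
        try:
            total = ro.execute("SELECT SUM(total) FROM orders").fetchone()[0]
            try:
                ro.execute("DELETE FROM orders")
                write_blocked = False
            except sqlite3.OperationalError:  # "attempt to write a readonly database"
                write_blocked = True
        finally:
            ro.close()
    return total, write_blocked


if __name__ == "__main__":
    print(demo())  # (42.5, True)
```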

AI can generate the UI and query plumbing quickly, but you still need clarity on:

A dashboard that “looks right” but answers the wrong question is worse than no dashboard.

Reporting systems fail quietly when metrics evolve but the dashboard doesn’t. That’s metric drift: the KPI name stays the same while its logic changes (new billing rules, updated event tracking, different time windows).

Also beware of mismatched source data—finance numbers from the warehouse won’t always match what’s in a CRM. Make the source of truth explicit in the UI, include “last updated” timestamps, and keep a short changelog of metric definitions so everyone knows what changed and why.
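One way to keep definitions, sources, and timestamps visible is to store each metric’s definition history next to the dashboard code and render it into the tile caption. This is an illustration under assumptions: the `Metric`/`MetricVersion` structures, the `warehouse.billing` source name, and the caption format are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date, datetime, timezone


@dataclass(frozen=True)
class MetricVersion:
    version: int
    effective_from: date
    definition: str  # plain-language or SQL summary of the logic
    reason: str      # why it changed; doubles as the changelog entry


@dataclass
class Metric:
    name: str
    source_of_truth: str  # shown in the UI next to the number
    versions: list[MetricVersion] = field(default_factory=list)

    def definition_on(self, day: date) -> MetricVersion:
        """Return the definition that was in effect on a given day."""
        applicable = [v for v in self.versions if v.effective_from <= day]
        if not applicable:
            raise ValueError(f"{self.name} had no definition on {day}")
        return max(applicable, key=lambda v: v.effective_from)


def tile_caption(metric: Metric, value: float, last_updated: datetime) -> str:
    """Caption for a dashboard tile; assumes last_updated is in UTC."""
    current = metric.definition_on(last_updated.date())
    return (
        f"{metric.name}: {value:,.0f} "
        f"(source: {metric.source_of_truth}, v{current.version}, "
        f"last updated {last_updated:%Y-%m-%d %H:%M} UTC)"
    )


# Hypothetical example: the KPI keeps its name while its logic changes.
ACTIVE_CUSTOMERS = Metric(
    name="Active customers",
    source_of_truth="warehouse.billing",
    versions=[
        MetricVersion(1, date(2025, 1, 1), "paid in last 30 days", "initial definition"),
        MetricVersion(2, date(2025, 6, 1), "paid in last 30 days, excluding trials",
                      "new billing rules for trial accounts"),
    ],
)

if __name__ == "__main__":
    print(tile_caption(ACTIVE_CUSTOMERS, 1234, datetime.now(timezone.utc)))
```

Because the version number appears in the caption, a jump in the chart that coincides with a definition change is visible to readers instead of being mistaken for a real trend.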

Integrations are one of the safest “high leverage” uses of AI coding tools because the work is mostly glue code: moving well-defined data from A to B, triggering predictable actions, and handling errors cleanly. The behavior is easy to describe, straightforward to test, and easy to observe in production.

Pick a workflow with clear inputs, clear outputs, and a small number of branches. For example:

These projects fit AI-assisted coding well because you can describe the contract (“when X happens, do Y”), then verify it with test fixtures and real sample payloads.
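For instance, a “when a form is submitted, create a ticket” integration can be written as a small pure function and checked against a saved sample payload. The event name, payload shape, and ticket fields below are hypothetical stand-ins for whatever your source system actually sends.

```python
import json
from typing import Optional

# Hypothetical fixture: a real payload captured once from the source system.
SAMPLE_PAYLOAD = json.loads("""
{
  "event": "form.submitted",
  "data": {"email": "Ada@Example.com", "subject": "Laptop request", "priority": "high"}
}
""")


def handle_event(payload: dict) -> Optional[dict]:
    """Contract: when a form is submitted, return the ticket to create; ignore everything else."""
    if payload.get("event") != "form.submitted":
        return None
    data = payload.get("data") or {}
    return {
        # A missing email is a contract violation and raises KeyError on purpose.
        "requester": data["email"].strip().lower(),
        "title": (data.get("subject") or "").strip() or "(no subject)",
        "priority": data.get("priority") or "normal",
    }


if __name__ == "__main__":
    # The contract check: this fixture must always produce this ticket.
    assert handle_event(SAMPLE_PAYLOAD) == {
        "requester": "ada@example.com",
        "title": "Laptop request",
        "priority": "high",
    }
    assert handle_event({"event": "form.deleted"}) is None
    print("contract holds")
```

Keeping the transformation pure (no network calls inside) is what makes it cheap to test against every fixture you collect.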

Most automation bugs show up under retries, partial failures, and duplicate events. Build a few basics from the start:

Even if AI generates the first pass quickly, you’ll get more value by spending time on edge cases: empty fields, unexpected types, pagination, and rate limits.
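Two of those basics, idempotency and retries, fit in a few lines. This sketch assumes each incoming event carries a unique ID and that your client code raises `TransientError` (a hypothetical exception) for retryable failures such as timeouts or rate-limit responses; the in-memory `seen` set stands in for a durable store.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical: raise this for retryable failures (timeouts, HTTP 429/503)."""


def with_retries(fn: Callable[[], T], attempts: int = 4, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying TransientError with exponential backoff plus jitter."""
    for attempt in range(attempts):
        try:
            return fn()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the failure instead of hiding it
            # 0.5s, 1s, 2s, ... plus up to 100 ms of jitter so clients don't retry in lockstep
            sleep(base_delay * 2 ** attempt + random.uniform(0, 0.1))
    raise ValueError("attempts must be at least 1")


class IdempotentProcessor:
    """Process each event ID at most once, even if the source delivers it twice."""

    def __init__(self, sleep: Callable[[float], None] = time.sleep) -> None:
        self.seen: set[str] = set()  # stand-in for a durable table keyed by event ID
        self.sleep = sleep

    def handle(self, event_id: str, action: Callable[[], object]) -> bool:
        """Run action for a new event; return False for duplicates."""
        if event_id in self.seen:
            return False
        with_retries(action, sleep=self.sleep)
        self.seen.add(event_id)  # mark as done only after the action succeeded
        return True
```

Marking an event as seen only after the action succeeds means a crash mid-action leads to a retry rather than a silently dropped event; the trade-off is that the action itself should tolerate being repeated.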

Automations fail silently unless you surface them. At minimum:

A useful next step is a “replay failed job” button, so non-engineers can recover without digging into code.
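A minimal sketch of what could sit behind that button: failures are recorded with their payload and error instead of being swallowed, and replay simply reruns the stored payload. `JobRunner`, `FailedJob`, and the in-memory storage are illustrative names, not any specific queue library’s API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class FailedJob:
    job_id: str
    payload: dict
    error: str
    failed_at: datetime


@dataclass
class JobRunner:
    handler: Callable[[dict], None]
    failed: dict[str, FailedJob] = field(default_factory=dict)  # stand-in for a persistent table

    def run(self, job_id: str, payload: dict) -> bool:
        """Run one job; record any failure with its payload instead of swallowing it."""
        try:
            self.handler(payload)
        except Exception as exc:
            self.failed[job_id] = FailedJob(job_id, payload, repr(exc), datetime.now(timezone.utc))
            return False
        self.failed.pop(job_id, None)  # success clears any earlier failure record
        return True

    def replay(self, job_id: str) -> bool:
        """What a replay button would call: rerun the job with its stored payload."""
        job = self.failed[job_id]
        return self.run(job.job_id, job.payload)
```

The `failed` records double as the data for a simple “failed jobs” screen: job ID, error, and timestamp are exactly what a non-engineer needs before pressing replay.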

Content and knowledge apps are a strong fit for AI coding tools because the “job” is clear: help people find, understand, and reuse information that already exists. The value is immediate, and you can measure success with simple signals like time saved, fewer repeated questions, and higher self-serve rates.

These products work well when they’re grounded in your own documents and workflows:

The safest and most useful pattern is: retrieve first, generate second. In other words, search your data to find the relevant sources, then use AI to summarize or answer based on those sources.

This keeps answers grounded, reduces hallucinations, and makes it easier to debug when something looks wrong (“Which document did it use?”).
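The retrieve-then-generate flow can be sketched without committing to a particular model or search engine. Below, naive keyword overlap stands in for real search (full-text or embedding-based), and `generate` is a placeholder for whatever model call you use; both are assumptions. What matters is that the answer comes back together with the IDs of the sources it was built from.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Doc:
    doc_id: str
    text: str


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[Doc], k: int = 2) -> list[Doc]:
    """Placeholder search: rank docs by keyword overlap, keep the top k that match at all."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in docs]
    matches = [(score, d) for score, d in scored if score > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in matches[:k]]


def answer(query: str, docs: list[Doc], generate: Callable[[str], str]) -> dict:
    """Retrieve first; generate only from those sources; return the source IDs with the answer."""
    sources = retrieve(query, docs)
    if not sources:
        # Nothing relevant found: say so instead of letting the model improvise.
        return {"answer": "No matching documents found.", "sources": []}
    context = "\n\n".join(f"[{d.doc_id}] {d.text}" for d in sources)
    prompt = (
        "Answer using only the sources below and cite their IDs.\n\n"
        f"{context}\n\nQuestion: {query}"
    )
    return {"answer": generate(prompt), "sources": [d.doc_id for d in sources]}
```

Returning `sources` alongside the answer is what lets the UI show “which document did it use?” and lets you debug a bad answer by checking retrieval first.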

Add lightweight protections early, even for an MVP:

Knowledge tools can get popular quickly. Avoid surprise bills by building in:

With these guardrails, you get a tool people can rely on—without pretending the AI is always right.

AI coding tools can speed up scaffolding and boilerplate, but they’re a poor fit for software where a small mistake can directly harm someone. In safety-critical work, “mostly correct” isn’t acceptable—edge cases, timing issues, and misunderstood requirements can become real-world injuries.

Safety- and life-critical systems sit under strict standards, detailed documentation expectations, and legal liability. Even if the generated code looks clean, you still need proof it behaves correctly under all relevant conditions, including failures. AI outputs can also introduce hidden assumptions (units, thresholds, error handling) that are easy to miss in review.

A few common “sounds useful” ideas that carry outsized risk:

If your product truly must touch safety-critical workflows, treat AI coding tools as a helper, not an author. Minimum expectations usually include:

If you’re not prepared for that level of rigor, you’re building risk, not value.

You can create meaningful products around these domains without making life-or-death decisions:

If you’re unsure where the boundary is, use the decision checklist in /blog/a-practical-decision-checklist-before-you-start-building and bias toward simpler, reviewable assistance over automation.

Building in regulated finance is where AI-assisted coding can hurt you quietly: the app may “work,” but fail a requirement you didn’t realize existed. The cost of being wrong is high—chargebacks, fines, frozen accounts, or legal exposure.

These products often look like “just another form and database,” but they carry strict rules around identity, auditability, and data handling:

AI coding tools can produce plausible implementations that miss edge cases and controls that regulators and auditors expect. Common failure modes include:

The tricky part is that these issues may not show up in normal testing. They surface during audits, incidents, or partner reviews.

Sometimes finance functionality is unavoidable. In that case, reduce the surface area of custom code:

If your product’s value depends on novel financial logic or compliance interpretation, consider delaying AI-assisted implementation until you have domain expertise and a validation plan in place.

Security-sensitive code is where AI coding tools are most likely to hurt you—not because they “can’t write code,” but because they often miss the unglamorous parts: hardening, edge cases, threat modeling, and secure operational defaults.

Generated implementations may look correct in happy-path tests while failing under real-world attack conditions (timing differences, replay attacks, broken randomness, unsafe deserialization, confused-deputy bugs, subtle auth bypasses). These issues tend to be invisible until you have adversaries.
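Two of those failure modes, timing differences and broken randomness, can hide in code as small as a password-reset token check. A minimal Python sketch using only vetted standard-library primitives: `secrets` draws from the operating system’s cryptographically secure generator, and `hmac.compare_digest` avoids the early exit that lets `==` leak timing information. The function names are hypothetical, and this illustrates only those two pitfalls, not a complete token design.

```python
import hmac
import secrets


def new_reset_token() -> str:
    """Unguessable token from the OS CSPRNG; the `random` module is not suitable here."""
    return secrets.token_urlsafe(32)  # 32 random bytes, URL-safe base64 encoded


def token_matches(supplied: str, stored: str) -> bool:
    """Compare without the early exit of ==, which can leak how much of the token matched."""
    return hmac.compare_digest(supplied.encode(), stored.encode())
```

Both versions “work” in a happy-path test, which is exactly why reviewers need to know which primitive belongs where.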

Avoid building or “improving” these components using AI-generated code as the primary source of truth:

Even small changes can invalidate security assumptions. For example:

If your product needs security features, build them by integrating established solutions rather than inventing them:

AI can still help here—generate integration glue code, configuration scaffolding, or test stubs—but treat it like a productivity assistant, not a security designer.

Security failures often come from defaults, not exotic attacks. Bake these in from day one:

If a feature’s main value is “we securely handle X,” it deserves security specialists, formal review, and careful validation—areas where AI-generated code is the wrong foundation.

Before you ask an AI coding tool to generate screens, routes, or database tables, take 15 minutes to decide whether the project is a good fit—and what “success” looks like. This pause saves days of rework.

Score each item from 1 (weak) to 5 (strong). If your total is under ~14, consider shrinking the idea or postponing it.
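As a sketch, the scoring pass can be a tiny function. This assumes the items being scored are the four criteria named at the end of this article (clarity, risk, testability, scope), with 5 always meaning favorable, so for risk a 5 means low risk; the ~14 threshold comes from the text above.

```python
# Assumed criteria, each scored 1 (weak) to 5 (strong); for "risk", 5 means low risk.
CRITERIA = ("clarity", "risk", "testability", "scope")
THRESHOLD = 14  # from the text: under ~14, shrink the idea or postpone it


def readiness(scores: dict[str, int]) -> tuple[int, str]:
    """Validate the scores, total them, and return (total, recommendation)."""
    missing = [c for c in CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"missing scores for: {', '.join(missing)}")
    for name in CRITERIA:
        if not 1 <= scores[name] <= 5:
            raise ValueError(f"{name} must be 1-5, got {scores[name]}")
    total = sum(scores[c] for c in CRITERIA)
    verdict = "good candidate" if total >= THRESHOLD else "shrink the idea or postpone it"
    return total, verdict


if __name__ == "__main__":
    print(readiness({"clarity": 4, "risk": 4, "testability": 4, "scope": 3}))
    # (15, 'good candidate')
```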

Use this checklist as your pre-build spec. Even a half-page note is enough.

A project is “done” when it has:

If you’re using an end-to-end builder like Koder.ai, make these items explicit: use planning mode to write acceptance criteria, lean on snapshots/rollback for safer releases, and export the source code when the prototype graduates into a longer-lived product.

Use templates when the product matches a common pattern (CRUD app, dashboard, webhook integration). Hire help when security, data modeling, or scaling decisions could be expensive to undo. Pause when you can’t clearly define requirements, don’t have lawful access to data, or can’t explain how you’ll test correctness.

Prioritize products where you can quickly verify correctness with clear inputs/outputs, fast feedback loops, and low consequences for mistakes. If you can write acceptance criteria and tests that catch wrong behavior in minutes, AI-assisted coding tends to be a strong fit.

Because the bottleneck is usually validation, not syntax. If outcomes are easy to test, AI can accelerate scaffolding in any common language; if outcomes are hard to judge (complex domain rules, compliance), you’ll spend more time verifying and reworking than you save.

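They’re typically strongest at familiar, well-specified patterns: boilerplate, forms, CRUD screens, simple APIs, data transforms, and UI components.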

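Common weak spots include vague requirements, complex domain rules, hallucinated libraries or configuration options, and code that handles the happy path but fails on edge cases.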

Treat generated code as a draft and verify with tests and review.

Define “done” in observable terms: required screens, actions, and measurable results. Example: “Imports this sample CSV and totals match expected output.” Concrete acceptance criteria make it easier to prompt well and to test what AI generates.

Keep it narrow and testable:

Because they have known users, controlled environments, and fast feedback. Still, don’t skip basics:

Start read-only to reduce risk and speed validation. Define up front:

Also show “last updated” timestamps and document a source of truth to prevent silent metric drift.

Design for real-world failures, not “it worked once”:

Test with real sample payloads and fixtures for each integration.

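Avoid using AI-generated code as the foundation for safety- or life-critical systems, regulated financial and compliance-heavy products, and security-sensitive components.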

If you’re unsure, run a quick scoring pass (clarity, risk, testability, scope) and use the build-readiness checklist in /blog/a-practical-decision-checklist-before-you-start-building.