May 25, 2025·8 min

Bjarne Stroustrup and C++: Why Zero-Cost Abstractions Matter

Learn how Bjarne Stroustrup shaped C++ around zero-cost abstractions, and why performance-critical software still relies on its control, tools, and ecosystem.

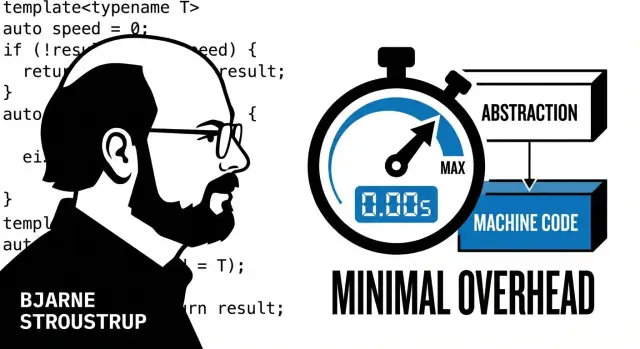

C++ was created with a specific promise: you should be able to write expressive, high-level code—classes, containers, generic algorithms—without automatically paying extra runtime cost for that expressiveness. If you don’t use a feature, you shouldn’t be charged for it. If you do use it, the cost should be close to what you’d write by hand in a lower-level style.

This post is the story of how Bjarne Stroustrup shaped that goal into a language, and why the idea still matters. It’s also a practical guide for anyone who cares about performance and wants to understand what C++ is trying to optimize for—beyond slogans.

“High-performance” isn’t just about making a benchmark number go up. In plain terms, it usually means at least one of these constraints is real:

When those constraints matter, hidden overhead—extra allocations, unnecessary copying, or virtual dispatch where it’s not needed—can be the difference between “works” and “misses the target.”

C++ is a common choice for systems programming and performance-critical components: game engines, browsers, databases, graphics pipelines, trading systems, robotics, telecom, and parts of operating systems. It’s not the only option, and many modern products mix languages. But C++ remains a frequent “inner-loop” tool when teams need direct control over how code maps to the machine.

Next, we’ll unpack the zero-cost idea in plain English, then connect it to specific C++ techniques (like RAII and templates) and the real trade-offs teams deal with.

Bjarne Stroustrup didn’t set out to “invent a new language” for its own sake. In the late 1970s and early 1980s, he was doing systems work where C was fast and close to the machine, but larger programs were hard to organize, hard to change, and easy to break.

His goal was simple to state and tricky to achieve: bring better ways to structure big programs—types, modules, encapsulation—without giving up the performance and hardware access that made C valuable.

The earliest step was literally called “C with Classes.” That name hints at the direction: not a clean-slate redesign, but an evolution. Keep what C already did well (predictable performance, direct memory access, simple calling conventions), then add the missing tools for building large systems.

As the language matured into C++, the additions weren’t just “more features.” They were aimed at making high-level code compile down to the same kind of machine code you’d write by hand in C, when used well.

Stroustrup’s central tension was—and still is—between:

Many languages pick a side by hiding details (which can hide overhead). C++ tries to let you build abstractions while still being able to ask, “What does this cost?” and, when needed, drop down to low-level operations.

That motivation—abstraction without penalty—is the thread connecting C++’s early class support to later ideas like RAII, templates, and the STL.

“Zero-cost abstractions” sounds like a slogan, but it’s really a promise about trade-offs. The everyday version is:

If you don’t use it, you don’t pay for it. And if you do use it, you should pay about the same as if you wrote the low-level code yourself.

In performance terms, “cost” is anything that makes the program do extra work at runtime. That can include:

Zero-cost abstractions aim to let you write clean, higher-level code—types, classes, functions, generic algorithms—while still producing machine code that’s as direct as hand-written loops and manual resource handling.

C++ doesn’t magically make everything fast. It makes it possible to write high-level code that compiles down to efficient instructions—but you can still choose expensive patterns.

If you allocate in a hot loop, copy large objects repeatedly, miss cache-friendly data layouts, or build layers of indirection that block optimization, your program will slow down. C++ won’t stop you. The “zero-cost” goal is about avoiding forced overhead, not about guaranteeing good decisions.

The rest of this article makes the idea concrete. We’ll look at how compilers erase abstraction overhead, why RAII can be both safer and faster, how templates generate code that runs like hand-tuned versions, and how the STL delivers reusable building blocks without sneaky runtime work—when used with care.

C++ leans on a simple bargain: pay more at build time so you pay less at run time. When you compile, the compiler doesn’t just translate your code—it tries hard to remove overhead that would otherwise show up while the program is running.

During compilation, the compiler can “pre-pay” many expenses:

The goal is that your clean, readable structure turns into machine code that looks close to what you would have written by hand.

A small helper function like:

int add_tax(int price) { return price * 108 / 100; }

often becomes no call at all after compilation. Instead of “jump to function, set up arguments, return,” the compiler may paste the arithmetic directly where you used it. The abstraction (a nicely named function) effectively disappears.
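As a rough illustration, the sketch below writes the same total twice: once through the named helper and once with the arithmetic pasted into the loop. The function names `total_with_helper`, `total_by_hand`, and `check_totals_agree` are made up for this example. With optimizations enabled, a compiler will often produce very similar machine code for both, though that depends on the compiler and flags and is worth confirming by inspecting the output.

```cpp
#include <cassert>
#include <vector>

// The helper from the text: adds 8% tax using integer arithmetic.
inline int add_tax(int price) { return price * 108 / 100; }

// Written with the named helper.
int total_with_helper(const std::vector<int>& prices) {
    int total = 0;
    for (int price : prices) total += add_tax(price);
    return total;
}

// Written "by hand", with the arithmetic pasted into the loop.
int total_by_hand(const std::vector<int>& prices) {
    int total = 0;
    for (int price : prices) total += price * 108 / 100;
    return total;
}

// Both versions must agree on the result; only the source-level structure differs.
void check_totals_agree(const std::vector<int>& prices) {
    assert(total_with_helper(prices) == total_by_hand(prices));
}
```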

Loops also get attention. A straightforward loop over a contiguous range can be transformed by the optimizer: bounds checks may be removed when provably unnecessary, repeated calculations may be hoisted out of the loop, and the loop body may be reorganized to use the CPU more efficiently.

This is the practical meaning of zero-cost abstractions: you get clearer code without paying a permanent runtime fee for the structure you used to express it.

Nothing is free. Heavier optimization and more “disappearing abstractions” can mean longer compile times and sometimes larger binaries (for example, when many call sites get inlined). C++ gives you the choice—and the responsibility—to balance build cost against runtime speed.

RAII (Resource Acquisition Is Initialization) is a simple rule with big consequences: a resource’s lifetime is tied to the lifetime of an object, and in the common case that object lives in a scope. When the object is created, its constructor acquires the resource. When the object goes out of scope, its destructor releases it automatically.

That “resource” can be almost anything you must clean up reliably: memory, files, mutex locks, database handles, sockets, GPU buffers, and more. Instead of remembering to call close(), unlock(), or free() on every path, you put the cleanup in one place (the destructor) and let the language guarantee it runs.

Manual cleanup tends to grow “shadow code”: extra if checks, duplicated return handling, and carefully placed cleanup calls after every potential failure. It’s easy to miss a branch, especially when functions evolve.

RAII usually generates straight-line code: acquire, do work, and let scope exit handle cleanup. That reduces both bugs (leaks, double-frees, forgotten unlocks) and runtime overhead from defensive bookkeeping. In performance terms, fewer error-handling branches in the hot path can mean better instruction cache behavior and fewer mispredicted branches.
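A minimal sketch of that shape follows. The resource API (`acquire_handle`, `release_handle`) and the `Handle` wrapper are hypothetical stand-ins for whatever open/close or lock/unlock pair a real system uses. The point is that `process` has several exit paths and no cleanup code on any of them.

```cpp
#include <cassert>

// Hypothetical C-style resource API; stands in for open/close, lock/unlock, etc.
static int open_handles = 0;
int acquire_handle() { return ++open_handles; }
void release_handle(int /*id*/) { --open_handles; }

// RAII wrapper: acquire in the constructor, release in the destructor.
class Handle {
public:
    Handle() : id_(acquire_handle()) {}
    ~Handle() { release_handle(id_); }
    Handle(const Handle&) = delete;             // one owner per handle
    Handle& operator=(const Handle&) = delete;
    int id() const { return id_; }
private:
    int id_;
};

// Several exit paths, no cleanup code on any of them.
bool process(int input) {
    Handle handle;
    if (input < 0) return false;    // early exit: destructor still runs
    if (input == 0) return false;   // another early exit
    return handle.id() > 0;         // normal exit
}

void check_no_leaks() {
    assert(process(5));
    assert(!process(-1));
    assert(open_handles == 0);      // every path released its handle
}
```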

Leaks and unreleased locks aren’t just “correctness issues”; they are performance time bombs. RAII makes resource release predictable, which helps systems stay stable under load.

RAII shines with exceptions because stack unwinding still calls destructors, so resources are released even when control flow jumps unexpectedly. Exceptions are a tool: their cost depends on how they’re used and on compiler/platform settings. The key point is that RAII keeps cleanup deterministic regardless of how you exit a scope.
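To make the unwinding behavior concrete, here is a small sketch. `Guard`, `risky_step`, and `run_step` are illustrative names, and the counter exists only so the cleanup can be observed. Whether the function returns normally or throws, both guards are destroyed before control reaches the caller.

```cpp
#include <cassert>
#include <stdexcept>

static int live_guards = 0;   // how many Guard objects currently exist

struct Guard {
    Guard() { ++live_guards; }
    ~Guard() { --live_guards; }
    Guard(const Guard&) = delete;
    Guard& operator=(const Guard&) = delete;
};

void risky_step(bool fail) {
    Guard first;
    Guard second;
    if (fail) throw std::runtime_error("step failed");
    // Normal path: both destructors run when the function returns.
}

// Returns true on success, false if the step threw.
bool run_step(bool fail) {
    try {
        risky_step(fail);
        return true;
    } catch (const std::runtime_error&) {
        return false;   // by now, stack unwinding has destroyed both guards
    }
}

void check_unwinding() {
    assert(run_step(false));
    assert(live_guards == 0);
    assert(!run_step(true));
    assert(live_guards == 0);
}
```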

Templates are often described as “compile-time code generation,” and that’s a useful mental model. You write an algorithm once—say, “sort these items” or “store items in a container”—and the compiler produces a version tailored to the exact types you use.

Because the compiler knows the concrete types, it can inline functions, pick the right operations, and optimize aggressively. In many cases, that means you avoid virtual calls, runtime type checks, and dynamic dispatch that you might otherwise need to make “generic” code work.

For example, a templated max(a, b) for integers can become a couple of machine instructions. The same template used with a small struct can still compile down to direct comparisons and moves—no interface pointers, no “what type is this?” checks at runtime.
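A sketch of that idea, using a hypothetical `max_of` helper (the standard library's `std::max` plays this role in real code) and a made-up `Score` struct. The compiler generates one version for `int` and one for `Score`, and each calls the matching `<` directly.

```cpp
#include <cassert>

// Hypothetical generic helper; works for any type that supports operator<.
template <typename T>
const T& max_of(const T& a, const T& b) {
    return (a < b) ? b : a;
}

// A small user-defined type for illustration.
struct Score {
    int points;
    int time_ms;
};

// Scores are ordered by points only in this sketch.
bool operator<(const Score& lhs, const Score& rhs) {
    return lhs.points < rhs.points;
}

void check_max_of() {
    assert(max_of(3, 7) == 7);                  // instantiated for int
    Score slow{10, 900};
    Score fast{12, 400};
    assert(max_of(slow, fast).points == 12);    // instantiated for Score
}
```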

The Standard Library leans heavily on templates because they make familiar building blocks reusable without hidden work:

- Containers like std::vector<T> and std::array<T, N> store your T directly.
- Algorithms like std::sort work on many data types as long as they can be compared.

The result is code that often performs like a hand-written, type-specific version, because it effectively becomes one.

Templates aren’t free for developers. They can increase compile times (more code to generate and optimize), and when something goes wrong, error messages can be long and hard to read. Teams typically cope with coding guidelines, good tooling, and keeping template complexity where it pays off.

The Standard Template Library (STL) is C++’s built-in toolbox for writing reusable code that can still compile down to tight machine instructions. It’s not a separate framework you “add on”—it’s part of the standard library, and it’s designed around the zero-cost idea: use higher-level building blocks without paying for work you didn’t ask for.

- Containers: vector, string, array, map, unordered_map, list, and more.
- Algorithms: sort, find, count, transform, accumulate, etc.

That separation matters. Instead of each container reinventing “sort” or “find,” the STL gives you one set of well-tested algorithms that the compiler can optimize aggressively.

STL code can be fast because many decisions are made at compile time. If you sort a vector<int>, the compiler knows the element type and iterator type, and it can inline comparisons and optimize loops much like handwritten code. The key is choosing data structures that match access patterns.
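As an example of containers and algorithms working together, the sketch below sorts and sums a vector of a made-up `Order` type. The comparison and the summing step are lambdas whose types are known at compile time, which is what gives the optimizer the chance to inline them.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <string>
#include <vector>

// Illustrative element type; not from any real system.
struct Order {
    std::string symbol;
    int quantity;
};

// Sort largest quantity first, using a comparator known at compile time.
void sort_by_quantity_desc(std::vector<Order>& orders) {
    std::sort(orders.begin(), orders.end(),
              [](const Order& a, const Order& b) { return a.quantity > b.quantity; });
}

// Sum the quantities with a generic algorithm instead of a hand-written loop.
int total_quantity(const std::vector<Order>& orders) {
    return std::accumulate(orders.begin(), orders.end(), 0,
                           [](int sum, const Order& o) { return sum + o.quantity; });
}

void check_orders() {
    std::vector<Order> orders{{"AAA", 5}, {"BBB", 9}, {"CCC", 1}};
    sort_by_quantity_desc(orders);
    assert(orders.front().symbol == "BBB");
    assert(orders.back().symbol == "CCC");
    assert(total_quantity(orders) == 15);
}
```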

vector vs. list: vector is often the default because elements are contiguous in memory, which tends to be cache-friendly and fast for iteration and random access. list can help when you truly need stable iterators and lots of splicing/insertion in the middle without moving elements—but it pays overhead per node and can be slower to traverse.

unordered_map vs. map: unordered_map is typically a good pick for fast average-case lookups by key (constant time on average). map keeps keys ordered, which is useful for range queries (e.g., “all keys between A and B”) and predictable iteration order, but each lookup takes a logarithmic number of comparisons, which is usually slower than a good hash table.
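The two access patterns can be sketched as follows. The helper names `lookup_or` and `values_between` are invented for this example. The point lookup uses `unordered_map::find`, and the range query relies on `map::lower_bound`, which only an ordered container can offer.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <unordered_map>
#include <vector>

// Point lookup by key: the typical unordered_map use case.
int lookup_or(const std::unordered_map<std::string, int>& table,
              const std::string& key, int fallback) {
    auto it = table.find(key);
    return it == table.end() ? fallback : it->second;
}

// Range query: values whose keys fall in [low, high], in key order.
std::vector<int> values_between(const std::map<int, int>& ordered, int low, int high) {
    std::vector<int> out;
    for (auto it = ordered.lower_bound(low); it != ordered.end() && it->first <= high; ++it) {
        out.push_back(it->second);
    }
    return out;
}

void check_lookups() {
    std::unordered_map<std::string, int> stock{{"apple", 3}, {"pear", 7}};
    assert(lookup_or(stock, "pear", 0) == 7);
    assert(lookup_or(stock, "kiwi", -1) == -1);

    std::map<int, int> by_id{{1, 10}, {5, 50}, {9, 90}, {12, 120}};
    std::vector<int> hits = values_between(by_id, 4, 10);
    assert(hits.size() == 2);
    assert(hits[0] == 50 && hits[1] == 90);
}
```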

For a deeper guide, see also: /blog/choosing-cpp-containers

Modern C++ didn’t abandon Stroustrup’s original idea of “abstraction without penalty.” Instead, many newer features focus on letting you write clearer code while still giving the compiler the opportunity to produce tight machine code.

A common source of slowness is unnecessary copying—duplicating large strings, buffers, or data structures just to pass them around.

Move semantics is the simple idea of “don’t copy if you’re really just handing something over.” When an object is temporary (or you’re done with it), C++ can transfer its internals to the new owner instead of duplicating them. For everyday code, that often means fewer allocations, less memory traffic, and faster execution—without you having to manually micromanage bytes.
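To show the difference between copying and handing over, the sketch below uses a hypothetical `Buffer` type that counts how often it is copied. The counter is only there to make the behavior observable. Storing by copy increments it, and storing via `std::move` does not.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Illustrative type that counts copies so the effect of moving is visible.
struct Buffer {
    std::vector<char> bytes;
    static int copies;

    explicit Buffer(std::size_t n) : bytes(n) {}
    Buffer(const Buffer& other) : bytes(other.bytes) { ++copies; }        // duplicates the data
    Buffer(Buffer&& other) noexcept : bytes(std::move(other.bytes)) {}    // transfers the data
};
int Buffer::copies = 0;

// The caller keeps its own buffer, so the data must be duplicated.
void keep_copy(std::vector<Buffer>& store, const Buffer& b) { store.push_back(b); }

// The caller hands the buffer over, so its storage can be reused.
void keep_moved(std::vector<Buffer>& store, Buffer&& b) { store.push_back(std::move(b)); }

void check_moves() {
    std::vector<Buffer> store;
    store.reserve(2);
    Buffer a(1024), b(1024);
    keep_copy(store, a);
    assert(Buffer::copies == 1);              // one real copy happened
    keep_moved(store, std::move(b));
    assert(Buffer::copies == 1);              // the move added no copy
    assert(store[1].bytes.size() == 1024);    // the data arrived intact
}
```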

constexpr: compute earlier so runtime does less

Some values and decisions never change (table sizes, configuration constants, lookup tables). With constexpr, you can ask C++ to compute certain results earlier—during compilation—so the running program does less work.

The benefit is both speed and simplicity: the code can read like a normal calculation, while the result may end up “baked in” as a constant.
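A small sketch of a lookup table built this way, assuming a C++14 or later compiler. `SquareTable`, `make_square_table`, `kSquares`, and `square_of` are illustrative names. The `static_assert` only compiles if the table really was computed during compilation.

```cpp
#include <cassert>

// Plain struct wrapping a fixed-size array so it can be returned by value.
struct SquareTable {
    int values[16];
};

// An ordinary-looking loop that the compiler can run at build time (C++14 and later).
constexpr SquareTable make_square_table() {
    SquareTable table{};
    for (int i = 0; i < 16; ++i) table.values[i] = i * i;
    return table;
}

// Declaring the variable constexpr forces compile-time evaluation.
constexpr SquareTable kSquares = make_square_table();
static_assert(kSquares.values[12] == 144, "table is computed during compilation");

// At run time, a lookup is just an array read.
int square_of(int n) {
    assert(n >= 0 && n < 16);
    return kSquares.values[n];
}
```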

Ranges (and related features like views) let you express “take these items, filter them, transform them” in a readable way. Used well, they can compile down to straightforward loops—without forced runtime layers.

These features support the zero-cost direction, but performance still depends on how they’re used and how well the compiler can optimize the final program. Clean, high-level code often optimizes beautifully—but it’s still worth measuring when speed truly matters.

C++ can compile “high-level” code into very fast machine instructions—but it doesn’t guarantee fast results by default. Performance usually isn’t lost because you used a template or a clean abstraction. It’s lost because small costs sneak into hot paths and get multiplied millions of times.

A few patterns show up again and again:

None of these are “C++ problems.” They’re usually design and usage problems—and they can exist in any language. The difference is that C++ gives you enough control to fix them, and enough rope to create them.

Start with habits that keep the cost model simple:

Use a profiler that can answer basic questions: Where is time spent? How many allocations happen? Which functions are called most? Pair that with lightweight benchmarks for the parts you care about.
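A lightweight benchmark can start as small as the sketch below, which times two ways of filling a vector with `std::chrono::steady_clock`. The function names are invented for this example. Treat it as a starting point rather than a rigorous harness: a trustworthy measurement also needs repeated runs, warm-up, optimized builds, and care that the optimizer does not delete the work being timed.

```cpp
#include <cassert>
#include <chrono>
#include <vector>

// Grows the vector as needed; may reallocate several times.
std::vector<int> fill_without_reserve(int n) {
    std::vector<int> v;
    for (int i = 0; i < n; ++i) v.push_back(i);
    return v;
}

// Reserves capacity once up front, then fills.
std::vector<int> fill_with_reserve(int n) {
    std::vector<int> v;
    v.reserve(n);
    for (int i = 0; i < n; ++i) v.push_back(i);
    return v;
}

// Runs `work` once and returns the elapsed wall-clock time in microseconds.
template <typename F>
long long time_microseconds(F work) {
    auto start = std::chrono::steady_clock::now();
    work();
    auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration_cast<std::chrono::microseconds>(stop - start).count();
}

void check_fill_variants() {
    // Both variants must produce the same data; only the cost differs.
    assert(fill_with_reserve(1000) == fill_without_reserve(1000));
    long long elapsed = time_microseconds([] { fill_with_reserve(100000); });
    assert(elapsed >= 0);
}
```

Comparing `time_microseconds` for the two variants over many runs gives a first impression, and a profiler can then confirm where the allocations actually happen.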

When you do this consistently, “zero-cost abstractions” becomes practical: you keep readable code, then remove the specific costs that show up under measurement.

C++ keeps showing up in places where milliseconds (or microseconds) aren’t just “nice to have,” but a product requirement. You’ll often find it behind low-latency trading systems, game engines, browser components, databases and storage engines, embedded firmware, and high-performance computing (HPC) workloads. These aren’t the only places it’s used—but they’re good examples of why the language persists.

Many performance-sensitive domains care less about peak throughput than about predictability: the tail latencies that cause frame drops, audio glitches, missed market opportunities, or missed real-time deadlines. C++ lets teams decide when memory is allocated, when it’s released, and how data is laid out in memory—choices that strongly affect cache behavior and latency spikes.

Because abstractions can compile down to straightforward machine code, C++ code can be structured for maintainability without automatically paying runtime overhead for that structure. When you do pay costs (dynamic allocation, virtual dispatch, synchronization), it’s typically visible and measurable.

A pragmatic reason C++ remains common is interoperability. Many organizations have decades of C libraries, operating-system interfaces, device SDKs, and battle-tested code they can’t simply rewrite. C++ can call C APIs directly, expose C-compatible interfaces when needed, and gradually modernize parts of a codebase without demanding an all-at-once migration.
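Both directions of that interoperability can be sketched in a few lines. `sum_ints`, `compare_ints`, and `sort_with_c_api` are made-up names; `std::qsort` is the C library's sorting function. The `extern "C"` functions have C linkage, so C code could call `sum_ints`, and `compare_ints` is a valid callback for a C API.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <vector>

// Exposed with C linkage so C code (or another language's FFI) could call it.
extern "C" int sum_ints(const int* data, std::size_t count) {
    int total = 0;
    for (std::size_t i = 0; i < count; ++i) total += data[i];
    return total;
}

// Comparison callback with C linkage, in the shape qsort expects.
extern "C" int compare_ints(const void* a, const void* b) {
    int lhs = *static_cast<const int*>(a);
    int rhs = *static_cast<const int*>(b);
    return (lhs > rhs) - (lhs < rhs);
}

// C++ code calling a C API directly, using a vector's contiguous storage.
void sort_with_c_api(std::vector<int>& values) {
    if (values.empty()) return;
    std::qsort(values.data(), values.size(), sizeof(int), compare_ints);
}

void check_interop() {
    std::vector<int> values{3, 1, 2};
    sort_with_c_api(values);
    assert((values == std::vector<int>{1, 2, 3}));
    assert(sum_ints(values.data(), values.size()) == 6);
}
```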

In systems programming and embedded work, “close to the metal” still matters: direct access to instructions, SIMD, memory-mapped I/O, and platform-specific optimizations. Combined with mature compilers and profiling tools, C++ is often chosen when teams need to squeeze performance while keeping control over binaries, dependencies, and runtime behavior.

C++ earns loyalty because it can be extremely fast and flexible—but that power has a cost. People’s criticisms are not imaginary: the language is large, old codebases carry risky habits, and mistakes can lead to crashes, data corruption, or security issues.

C++ grew over decades, and it shows. You’ll see multiple ways to do the same thing, plus “sharp edges” that punish small errors. Two trouble spots come up often:

Older patterns add to the risk: raw new/delete, manual memory ownership, and unchecked pointer arithmetic are still common in legacy code.

Modern C++ practice is largely about getting the benefits while avoiding the foot-guns. Teams do this by adopting guidelines and safer subsets—not as a promise of perfect safety, but as a practical way to reduce failure modes.

Common moves include:

- Prefer standard containers (std::vector, std::string) over manual allocation.
- Use smart pointers (std::unique_ptr, std::shared_ptr) to make ownership explicit.
- Enforce the guidelines with static analysis and clang-tidy-like rules.

The standard continues to evolve toward safer, clearer code: better libraries, more expressive types, and ongoing work around contracts, safety guidance, and tool support. The trade-off remains: C++ gives you leverage, but teams must earn reliability through discipline, reviews, testing, and modern conventions.
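As an illustration of ownership made explicit with std::unique_ptr, consider the sketch below, which assumes C++14 for `std::make_unique`. `Texture` and `TextureCache` are hypothetical types. The signature of `add` tells the reader that the cache takes ownership, and the raw pointer returned by `find` is a non-owning view.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Illustrative resource type.
struct Texture {
    std::string name;
    explicit Texture(std::string n) : name(std::move(n)) {}
};

class TextureCache {
public:
    // Taking unique_ptr by value makes the ownership transfer explicit at the call site.
    void add(std::unique_ptr<Texture> texture) { textures_.push_back(std::move(texture)); }

    std::size_t size() const { return textures_.size(); }

    // Returns a non-owning pointer, or nullptr if the name is unknown.
    const Texture* find(const std::string& name) const {
        for (const auto& texture : textures_) {
            if (texture->name == name) return texture.get();
        }
        return nullptr;
    }

private:
    std::vector<std::unique_ptr<Texture>> textures_;   // freed automatically with the cache
};

void check_ownership() {
    TextureCache cache;
    auto grass = std::make_unique<Texture>("grass");
    cache.add(std::move(grass));
    assert(grass == nullptr);                 // the caller no longer owns the texture
    assert(cache.size() == 1);
    assert(cache.find("grass") != nullptr);
    assert(cache.find("rock") == nullptr);
}
```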

C++ is a great bet when you need fine-grained control over performance and resources and you can invest in discipline. It’s less about “C++ is faster” and more about “C++ lets you decide what work happens, when, and at what cost.”

Choose C++ when most of these are true:

Consider another language when:

If you choose C++, set guardrails early:

- Avoid raw new/delete, use std::unique_ptr/std::shared_ptr intentionally, and ban unchecked pointer arithmetic in application code.

If you’re evaluating options or planning a migration, it also helps to keep internal decision notes and share them in a team space like /blog for future hires and stakeholders.

Even if your performance-critical core stays in C++, many teams still need to ship surrounding product code quickly: dashboards, admin tools, internal APIs, or prototypes that validate requirements before you commit to a low-level implementation.

That’s where Koder.ai can be a practical complement. It’s a vibe-coding platform that lets you build web, server, and mobile applications from a chat interface (React on the web, Go + PostgreSQL on the backend, Flutter for mobile), with options like planning mode, source code export, deployment/hosting, custom domains, and snapshots with rollback. In other words: you can iterate fast on “everything around the hot path,” while keeping your C++ components focused on the parts where zero-cost abstractions and tight control matter most.

A “zero-cost abstraction” is a design goal: if you don’t use a feature, it shouldn’t add runtime overhead, and if you do use it, the generated machine code should be close to what you’d write by hand in a lower-level style.

Practically, it means you can write clearer code (types, functions, generic algorithms) without automatically paying extra allocations, indirections, or dispatch.

In this context, “cost” means extra runtime work such as:

The goal is to keep these costs visible and avoid forcing them on every program.

It works best when the compiler can see through the abstraction at compile time—common cases include small functions that get inlined, compile-time constants (constexpr), and templates instantiated with concrete types.

It’s less effective when runtime indirection dominates (e.g., heavy virtual dispatch in a hot loop) or when you introduce frequent allocations and pointer-chasing data structures.
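The contrast can be sketched with two ways of summing areas. All type and function names here are illustrative. The first version calls through a virtual interface, so the target of each call is resolved at run time unless the compiler manages to devirtualize it. The second is a template, so the call target is known when the code is compiled.

```cpp
#include <cassert>
#include <vector>

// Runtime polymorphism: callers only see the interface.
struct Shape {
    virtual ~Shape() = default;
    virtual int area() const = 0;
};

struct Square : Shape {
    int side;
    explicit Square(int s) : side(s) {}
    int area() const override { return side * side; }
};

// Each call goes through the virtual interface.
int total_area_dynamic(const std::vector<const Shape*>& shapes) {
    int total = 0;
    for (const Shape* shape : shapes) total += shape->area();
    return total;
}

// Compile-time polymorphism: any type with an area() member works.
struct PlainSquare {
    int side;
    int area() const { return side * side; }
};

template <typename ShapeT>
int total_area_static(const std::vector<ShapeT>& shapes) {
    int total = 0;
    for (const ShapeT& shape : shapes) total += shape.area();
    return total;
}

void check_areas() {
    Square a(2), b(3);
    std::vector<const Shape*> dynamic_shapes{&a, &b};
    std::vector<PlainSquare> static_shapes{PlainSquare{2}, PlainSquare{3}};
    assert(total_area_dynamic(dynamic_shapes) == 13);
    assert(total_area_static(static_shapes) == 13);
}
```

The static version also stores its elements contiguously, which avoids the pointer-chasing mentioned above. Neither form is wrong; the choice depends on whether the set of types is known at compile time.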

C++ shifts many expenses to build time so runtime stays lean. Typical examples:

To benefit, compile with optimizations (e.g., -O2/-O3) and keep code structured so the compiler can reason about it.

RAII ties resource lifetime to scope: acquire in a constructor, release in a destructor. Use it for memory, file handles, locks, sockets, etc.

Practical habits:

- Prefer standard containers (std::vector, std::string).

RAII is especially valuable with exceptions because destructors run during stack unwinding, so resources still get released.

Performance-wise, exceptions are typically expensive when thrown, not when merely possible. If your hot path throws frequently, redesign toward error codes/expected-like results; if throws are truly exceptional, RAII + exceptions often keeps the fast path simple.
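For a path where failure is routine, such as parsing untrusted input, a result-style return can look like the sketch below. `ParseResult` and `parse_digit_string` are hypothetical. Newer standards offer library types for this purpose, but a plain struct keeps the example self-contained.

```cpp
#include <cassert>
#include <string>

// Minimal result type: `ok` says whether `value` is meaningful.
struct ParseResult {
    bool ok;
    int value;
};

// Parses a non-negative decimal number of at most 9 digits (so it always fits in an int).
// Failure is reported through the return value instead of an exception.
ParseResult parse_digit_string(const std::string& text) {
    if (text.empty() || text.size() > 9) return {false, 0};
    int value = 0;
    for (char c : text) {
        if (c < '0' || c > '9') return {false, 0};
        value = value * 10 + (c - '0');
    }
    return {true, value};
}

void check_parsing() {
    ParseResult good = parse_digit_string("8080");
    assert(good.ok && good.value == 8080);
    assert(!parse_digit_string("80a").ok);
    assert(!parse_digit_string("").ok);
}
```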

Templates let you write generic code that becomes type-specific at compile time, often enabling inlining and avoiding runtime type checks.

Trade-offs to plan for:

Keep template complexity where it pays off (core algorithms, reusable components) and avoid over-templating application glue.

Default to std::vector for contiguous storage and fast iteration; consider std::list only when you truly need stable iterators and cheap splicing/insertion without moving elements.

For key-value maps:

- std::unordered_map for fast average-case lookup
- std::map for ordered keys and range queries

If you want a deeper container decision guide, see /blog/choosing-cpp-containers.

Focus on costs that multiply:

- Repeated reallocation while containers grow (reserve capacity up front with reserve())

Then validate with profiling rather than intuition.

Set guardrails early so performance and safety don’t rely on heroics:

- Avoid raw new/delete
- Make ownership explicit (std::unique_ptr / std::shared_ptr used deliberately)
- Run static analysis such as clang-tidy

This helps preserve C++’s control while reducing undefined behavior and surprise overhead.