Jul 12, 2025·8 min

How to Build a Feature Flag & Rollout Management Web App

Learn how to design and build a web app to create feature flags, target users, run gradual rollouts, add a kill switch, and track changes safely.


A feature flag (also called a “feature toggle”) is a simple control that lets you turn a product capability on or off without shipping new code. Instead of tying a release to a deploy, you separate “code is deployed” from “code is active.” That small shift changes how safely—and how quickly—you can ship.

Teams use feature flags because they reduce risk and increase flexibility:

The operational value is simple: feature flags give you a fast, controlled way to respond to real-world behavior—errors, performance regressions, or negative user feedback—without waiting for a full redeploy cycle.

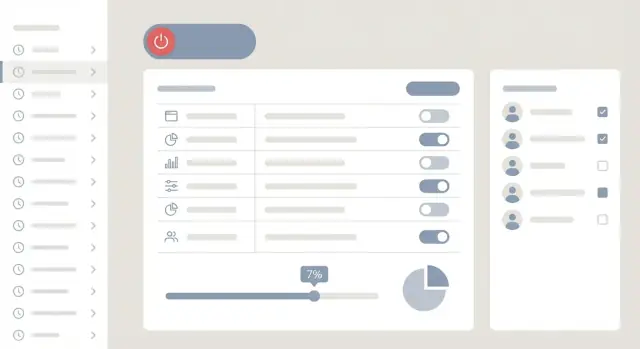

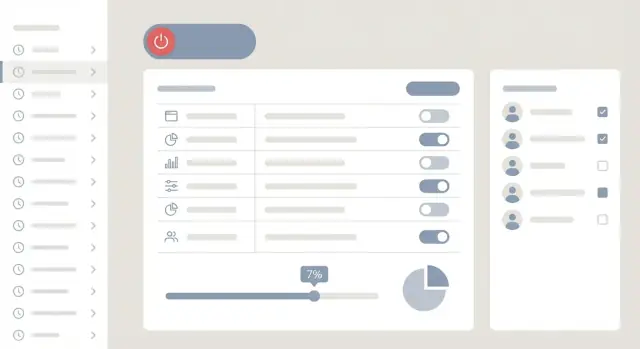

This guide walks you through building a practical feature flag and rollout management web app with three core parts:

The goal isn’t a massive enterprise platform; it’s a clear, maintainable system you can put in front of a product team and trust in production.

If you want to prototype this kind of internal tool quickly, a vibe-coding workflow can help. For example, teams often use Koder.ai to generate a first working version of the React dashboard and Go/PostgreSQL API from a structured chat spec, then iterate on the rules engine, RBAC, and audit requirements in planning mode before exporting the source code.

Before you design screens or write code, get clear on who the system is for and what “success” looks like. Feature flag tools often fail not because the rule engine is wrong, but because the workflow doesn’t match how teams ship and support software.

Engineers want fast, predictable controls: create a flag, add targeting rules, and ship without redeploying. Product managers want confidence that releases can be staged and scheduled, with clear visibility into who is affected. Support and operations need a safe way to respond to incidents—ideally without paging engineering—by disabling a risky feature quickly.

A good requirements doc names these personas and the actions they should be able to take (and not take).

Focus on a tight core that enables gradual rollout and rollback:

These aren’t “nice extras”—they’re what makes a rollout tool worth adopting.

Capture these now, but don’t build them first:

Write down safety requirements as explicit rules. Common examples: approvals for production changes, full auditability (who changed what, when, and why), and a quick rollback path that’s available even during an incident. This “definition of safe” will drive later decisions about permissions, UI friction, and change history.

A feature flag system is easiest to reason about when you separate “managing flags” from “serving evaluations.” That way your admin experience can be pleasant and safe, while your applications get fast, reliable answers.

At a high level, you’ll want four building blocks:

A simple mental model: the dashboard updates flag definitions; applications consume a compiled snapshot of those definitions for fast evaluation.

You generally have two patterns:

Server-side evaluation (recommended for most flags). Your backend calls the SDK/evaluation layer with a user/context object, then decides what to do based on the result. This keeps rules and sensitive attributes off the client and makes it easier to enforce consistent behavior.

Client-side evaluation (use selectively). A web/mobile client fetches a pre-filtered, signed configuration (only what the client is allowed to know) and evaluates locally. This can reduce backend load and improve UI responsiveness, but it requires stricter data hygiene.

To start, a modular monolith is usually the most practical:

As usage grows, the first thing to split is typically the evaluation path (read-heavy) from the admin path (write-heavy). You can keep the same data model while introducing a dedicated evaluation service later.

Flag checks happen on hot paths, so optimize reads:

The goal is consistent behavior even during partial outages: if the dashboard is down, applications should still evaluate using the last known good configuration.

A feature-flag system succeeds or fails on its data model. If it’s too loose, you can’t audit changes or safely roll back. If it’s too rigid, teams will avoid using it. Aim for a structure that supports clear defaults, predictable targeting, and a history you can trust.

Flag is the product-level switch. Keep it stable over time by giving it:

- key (unique, used by SDKs, e.g. new_checkout)
- name and description (for humans)
- type (boolean, string, number, JSON)
- archived_at (soft delete)

Variant represents the value a flag can return. Even boolean flags benefit from explicit variants (on/off) because it standardizes reporting and rollouts.

Environment separates behavior by context: dev, staging, prod. Model it explicitly so one flag can have different rules and defaults per environment.

Segment is a saved group definition (e.g., “Beta testers”, “Internal users”, “High spenders”). Segments should be reusable across many flags.
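The entities above can be sketched as Python dataclasses. This is a minimal sketch: field names follow the text, while the FlagType enum, the ENVIRONMENTS tuple, and the boolean_flag helper are illustrative assumptions rather than a required schema.

```python
from dataclasses import dataclass, field
from datetime import datetime
from enum import Enum
from typing import Any, Optional


class FlagType(Enum):
    BOOLEAN = "boolean"
    STRING = "string"
    NUMBER = "number"
    JSON = "json"


@dataclass(frozen=True)
class Variant:
    id: str     # e.g. "on"/"off", or "control"/"new-checkout-a"
    value: Any  # what the SDK returns when this variant is served


@dataclass
class Flag:
    key: str          # unique, used by SDKs, e.g. "new_checkout"
    name: str         # for humans
    description: str  # for humans
    type: FlagType
    variants: list[Variant] = field(default_factory=list)
    archived_at: Optional[datetime] = None  # soft delete: set a timestamp instead of deleting

    @property
    def archived(self) -> bool:
        return self.archived_at is not None


# Environments are modeled explicitly; each (flag, environment) pair gets its own config.
ENVIRONMENTS = ("dev", "staging", "prod")


@dataclass
class Segment:
    key: str                          # e.g. "beta_testers"
    name: str                         # e.g. "Beta testers"
    conditions: list[dict[str, Any]]  # saved definition, reusable across many flags


def boolean_flag(key: str, name: str, description: str = "") -> Flag:
    """Even boolean flags get explicit on/off variants to standardize reporting."""
    return Flag(key, name, description, FlagType.BOOLEAN,
                variants=[Variant("on", True), Variant("off", False)])
```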

Rules are where most complexity lives, so make them first-class records.

A practical approach:

- FlagConfig (per flag + environment) stores default_variant_id, enabled state, and a pointer to the current published revision.
- Rule belongs to a revision and includes:
  - priority (lower number wins)
  - conditions (JSON array like attribute comparisons)
  - serve (fixed variant, or percentage rollout across variants)
- Fallback is always the default_variant_id in FlagConfig when no rule matches.

This keeps evaluation simple: load the published revision, sort rules by priority, match the first rule, else default.
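The evaluation loop described above is short enough to show in full. This sketch makes a few assumptions: conditions are ANDed, the condition shape and its two operators ("equals", "in") are hypothetical, percentage serving is left out, and a disabled config serves the default variant.

```python
from dataclasses import dataclass
from typing import Any

# Hypothetical condition shape: {"attribute": "plan", "op": "equals", "value": "pro"}
Condition = dict[str, Any]


@dataclass
class Rule:
    priority: int                # lower number wins
    conditions: list[Condition]  # all must match (AND) in this sketch
    serve: str                   # fixed variant id; percentage serving omitted here


@dataclass
class FlagConfig:
    default_variant_id: str
    enabled: bool
    rules: list[Rule]  # rules of the published revision for this environment


def condition_matches(cond: Condition, context: dict[str, Any]) -> bool:
    actual = context.get(cond["attribute"])
    if cond["op"] == "equals":
        return actual == cond["value"]
    if cond["op"] == "in":
        return actual in cond["value"]
    return False  # unknown operators never match


def evaluate(config: FlagConfig, context: dict[str, Any]) -> str:
    """Sort rules by priority, serve the first match, else the default variant."""
    if not config.enabled:
        return config.default_variant_id  # assumption: disabled means "serve default"
    for rule in sorted(config.rules, key=lambda r: r.priority):
        if all(condition_matches(c, context) for c in rule.conditions):
            return rule.serve
    return config.default_variant_id
```

Sorting at evaluation time keeps the sketch simple. A real snapshot would store rules already sorted so the hot path skips that step.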

Treat every change as a new FlagRevision:

- status: draft or published
- created_by, created_at, optional comment

Publishing is an atomic action: set FlagConfig.published_revision_id to the chosen revision (per environment). Drafts let teams prepare changes without affecting users.

For audits and rollbacks, store an append-only change log:

- AuditEvent: who changed what, when, in which environment
- before/after snapshots (or a JSON patch) referencing the revision IDs

Rollback becomes “re-publish an older revision” rather than trying to manually reconstruct settings. This is faster, safer, and easy to explain to non-technical stakeholders using the dashboard’s history view.
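Publishing and rollback can both be sketched as the same pointer swap, with an audit event appended each time. This is an in-memory sketch under stated assumptions. With PostgreSQL, you would wrap the pointer update and the audit insert in one transaction to get the atomicity the text describes. The function names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class FlagRevision:
    id: int
    status: str  # "draft" or "published"
    created_by: str
    created_at: datetime
    comment: Optional[str] = None


@dataclass
class AuditEvent:
    actor: str
    action: str
    environment: str
    before_revision_id: Optional[int]
    after_revision_id: int
    at: datetime


@dataclass
class FlagConfig:
    environment: str
    published_revision_id: Optional[int] = None
    history: list[AuditEvent] = field(default_factory=list)  # append-only


def publish(config: FlagConfig, revision: FlagRevision, actor: str,
            action: str = "publish") -> None:
    """Publishing swaps one pointer; older revisions stay intact for rollback."""
    before = config.published_revision_id
    revision.status = "published"
    config.published_revision_id = revision.id
    config.history.append(AuditEvent(actor, action, config.environment, before,
                                     revision.id, datetime.now(timezone.utc)))


def rollback(config: FlagConfig, older: FlagRevision, actor: str) -> None:
    """Rollback = re-publish an older revision, not a manual rebuild of settings."""
    if older.status != "published":
        raise ValueError("can only roll back to a revision that was published before")
    publish(config, older, actor, action="rollback")
```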

Targeting is the “who gets what” part of feature flags. Done well, it lets you ship safely: expose a change to internal users first, then a specific customer tier, then a region—without redeploying.

Start with a small, consistent set of attributes your apps can reliably send with every evaluation:

Keep attributes boring and predictable. If one app sends plan=Pro and another sends plan=pro, your rules will behave unexpectedly.
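One way to keep attributes predictable is to normalize the context inside the SDK before any rule sees it, so every app gets the same treatment. This is a minimal sketch. The set of case-insensitive attributes is a hypothetical choice; identifiers such as user_id keep their case.

```python
from typing import Any

# Hypothetical: attributes whose values should be compared case-insensitively.
CASE_INSENSITIVE = {"plan", "region", "role"}


def normalize_context(raw: dict[str, Any]) -> dict[str, Any]:
    """Canonicalize attributes so "Pro" and " pro " hit the same rules."""
    ctx: dict[str, Any] = {}
    for key, value in raw.items():
        key = key.strip().lower()
        if isinstance(value, str):
            value = value.strip()
            if key in CASE_INSENSITIVE:
                value = value.lower()
        ctx[key] = value
    return ctx
```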

Segments are reusable groups like “Beta testers,” “EU customers,” or “All enterprise admins.” Implement them as saved definitions (not static lists), so membership can be computed on demand:

To keep evaluation fast, cache segment membership results for a short time (seconds/minutes), keyed by environment and user.
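Below is a minimal sketch of that short-lived cache. The compute callback stands in for evaluating the saved segment definition. The cache key adds the segment key to environment and user so that one entry exists per segment. Eviction of old entries is omitted, and the clock is injectable only to make the behavior easy to test.

```python
import time
from typing import Callable


class SegmentMembershipCache:
    """Caches segment membership for a short TTL, keyed by environment, user and segment."""

    def __init__(self, compute: Callable[[str, str, str], bool], ttl_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._compute = compute  # evaluates the saved segment definition on demand
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[tuple[str, str, str], tuple[bool, float]] = {}

    def is_member(self, environment: str, user_id: str, segment_key: str) -> bool:
        key = (environment, user_id, segment_key)
        now = self._clock()
        hit = self._entries.get(key)
        if hit is not None and hit[1] > now:  # cached and not yet expired
            return hit[0]
        result = self._compute(environment, user_id, segment_key)
        self._entries[key] = (result, now + self._ttl)
        return result
```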

Define a clear evaluation order so results are explainable in the dashboard:

Support AND/OR groups and common operators: equals, not equals, contains, in list, greater/less than (for versions or numeric attributes).
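Nested AND/OR groups map naturally onto a small recursive matcher. The JSON shape ("all"/"any" groups, "attribute"/"op"/"value" leaves) and the operator names are assumptions for this sketch. It also assumes that string operands of gt/lt are dotted versions, compared as integer tuples.

```python
from typing import Any, Callable


def _ordered(value: Any) -> Any:
    # Assumption: string operands of gt/lt are dotted versions like "2.10.1";
    # comparing integer tuples makes "2.10.1" > "2.9.0".
    if isinstance(value, str):
        return tuple(int(part) for part in value.split("."))
    return value


OPERATORS: dict[str, Callable[[Any, Any], bool]] = {
    "equals": lambda actual, expected: actual == expected,
    "not_equals": lambda actual, expected: actual != expected,
    "contains": lambda actual, expected: expected in actual,
    "in": lambda actual, expected: actual in expected,
    "gt": lambda actual, expected: _ordered(actual) > _ordered(expected),
    "lt": lambda actual, expected: _ordered(actual) < _ordered(expected),
}


def matches(node: dict[str, Any], ctx: dict[str, Any]) -> bool:
    """A node is {"all": [...]} (AND), {"any": [...]} (OR), or a leaf condition."""
    if "all" in node:
        return all(matches(child, ctx) for child in node["all"])
    if "any" in node:
        return any(matches(child, ctx) for child in node["any"])
    op = OPERATORS.get(node["op"])
    if op is None or node["attribute"] not in ctx:
        return False  # unknown operators and missing attributes never match
    try:
        return bool(op(ctx[node["attribute"]], node["value"]))
    except (TypeError, ValueError):
        return False  # e.g. "beta" is not a comparable version
```

Failing closed on type errors keeps one malformed attribute from crashing evaluation. Rejecting unknown operators when a rule is saved is still the better first line of defense.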

Minimize personal data. Prefer stable, non-PII identifiers (e.g., an internal user ID). When you must store identifiers for allow/deny lists, store hashed IDs where possible, and avoid copying emails, names, or raw IP addresses into your flag system.
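A plain hash of a low-entropy ID can be reversed by hashing every known ID. A keyed hash (HMAC) with a server-side secret avoids that. This is a minimal sketch. The PEPPER constant is a placeholder assumption for a secret that lives in a secret manager, outside the flag database.

```python
import hashlib
import hmac

# Placeholder: load the real secret from a secret manager, never from the flag DB.
PEPPER = b"replace-with-a-secret-from-your-secret-manager"


def hash_identifier(user_id: str, pepper: bytes = PEPPER) -> str:
    """Keyed SHA-256 so a leaked allow list cannot be brute-forced from known IDs."""
    return hmac.new(pepper, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def in_allow_list(user_id: str, hashed_allow_list: set[str], pepper: bytes = PEPPER) -> bool:
    return hash_identifier(user_id, pepper) in hashed_allow_list
```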

Rollouts are where a feature flag system delivers real value: you can expose changes gradually, compare options, and stop problems quickly—without redeploying.

A percentage rollout means “enable for 5% of users,” then increase as confidence grows. The key detail is consistent bucketing: the same user should reliably stay in (or out of) the rollout across sessions.

Use a deterministic hash of a stable identifier (for example, user_id or account_id) to assign a bucket from 0–99. If you instead pick users randomly on each request, people will “flip” between experiences, metrics become noisy, and support teams can’t reproduce issues.
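Here is a minimal bucketing sketch. The stable ID is combined with the flag key, an assumed design choice so that each flag buckets users independently. Without it, the same 5% of users would land in every early rollout. hashlib is used instead of Python's built-in hash(), because hash() on strings is randomized per process.

```python
import hashlib


def bucket(flag_key: str, unit_id: str) -> int:
    """Deterministically map (flag, stable id) to a bucket in 0..99."""
    digest = hashlib.sha256(f"{flag_key}:{unit_id}".encode("utf-8")).digest()
    # First 8 bytes as an unsigned integer; the modulo bias over 2**64 is negligible.
    return int.from_bytes(digest[:8], "big") % 100


def in_rollout(flag_key: str, unit_id: str, percentage: int) -> bool:
    return bucket(flag_key, unit_id) < percentage
```

Each user's bucket never changes, so raising the percentage from 10 to 50 only adds users. Nobody who already had the feature loses it.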

Also decide the bucketing unit intentionally:

Start with boolean flags (on/off), but plan for multivariate variants (e.g., control, new-checkout-a, new-checkout-b). Multivariate is essential for A/B tests, copy experiments, and incremental UX changes.

Your rules should always return a single resolved value per evaluation, with a clear priority order (e.g., explicit overrides > segment rules > percentage rollout > default).
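The precedence order and a multivariate split can be combined in one small resolver. This sketch makes several assumptions: variant weights are integer percentages that must sum to 100, and the override and segment results have already been computed and are passed in. The bucketing matches a deterministic SHA-256 scheme.

```python
import hashlib
from typing import Optional


def bucket(flag_key: str, unit_id: str) -> int:
    digest = hashlib.sha256(f"{flag_key}:{unit_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def pick_weighted(flag_key: str, unit_id: str, weights: dict[str, int]) -> str:
    """Split traffic across variants; weights are percentages that must sum to 100."""
    if sum(weights.values()) != 100:
        raise ValueError("variant weights must add up to 100")
    b = bucket(flag_key, unit_id)
    cumulative = 0
    for variant, weight in weights.items():  # dicts keep insertion order, so ranges are stable
        cumulative += weight
        if b < cumulative:
            return variant
    raise AssertionError("unreachable when weights sum to 100")


def resolve(flag_key: str, unit_id: str, override: Optional[str],
            segment_variant: Optional[str], weights: dict[str, int], default: str) -> str:
    """explicit overrides > segment rules > percentage rollout > default"""
    if override is not None:
        return override
    if segment_variant is not None:
        return segment_variant
    if weights:
        return pick_weighted(flag_key, unit_id, weights)
    return default
```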

Scheduling lets teams coordinate releases without someone staying up to flip a switch. Support:

Treat schedules as part of the flag config, so changes are auditable and previewable before they go live.
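A schedule can be modeled as timed steps in the flag config, resolved against a given time. Passing a future time gives you the preview the text asks for. This is a minimal sketch. The ScheduledChange shape and the choice to schedule only rollout percentages are assumptions. An alternative design has a scheduler publish a new revision at each step, which keeps every change in the normal audit trail.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ScheduledChange:
    activate_at: datetime  # store in UTC to avoid timezone surprises
    rollout_percentage: int


def effective_percentage(base: int, schedule: list[ScheduledChange], at: datetime) -> int:
    """The latest change whose time has passed wins; pass a future `at` to preview."""
    current = base
    for change in sorted(schedule, key=lambda c: c.activate_at):
        if change.activate_at <= at:
            current = change.rollout_percentage
    return current
```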

A kill switch is an emergency “force off” that overrides everything else. Make it a first-class control with the fastest path in the UI and API.
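In code, "overrides everything else" means the kill switch is checked before any rule, override, rollout, or schedule runs. This is a minimal sketch. The FlagState fields are hypothetical. Requiring a reason mirrors the required notes for risky actions described for the dashboard.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class FlagState:
    off_variant: str
    kill_switch: bool = False
    kill_reason: str = ""


def activate_kill_switch(state: FlagState, reason: str) -> None:
    if not reason.strip():
        raise ValueError("a reason is required when activating the kill switch")
    state.kill_switch = True
    state.kill_reason = reason


def evaluate(state: FlagState, context: dict[str, Any],
             evaluate_rules: Callable[[dict[str, Any]], str]) -> str:
    # Checked first, so it beats explicit overrides, segments, rollouts and schedules.
    if state.kill_switch:
        return state.off_variant
    return evaluate_rules(context)
```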

Decide what happens during outages:

Document this clearly so teams know what the app will do when the flag system is degraded. For more on how teams operate this day-to-day, see /blog/testing-deployment-and-governance.

Your web app is only half the system. The other half is how your product code reads flags safely and quickly. A clean API plus a small SDK for each platform (Node, Python, mobile, etc.) keeps integration consistent and prevents every team from inventing their own approach.

Your applications will call read endpoints far more often than write endpoints, so optimize these first.

Common patterns:

- GET /api/v1/environments/{env}/flags: list all flags for an environment (often filtered to “enabled” only)
- GET /api/v1/environments/{env}/flags/{key}: fetch a single flag by key
- GET /api/v1/environments/{env}/bootstrap: fetch flags + segments needed for local evaluation

Make responses cache-friendly (ETag or updated_at version), and keep payloads small. Many teams also support ?keys=a,b,c for batch fetch.
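For the cache-friendly responses mentioned above, a framework-agnostic handler can derive an ETag from canonical JSON and return 304 Not Modified when the client already has that version. This sketch simplifies If-None-Match to a single exact tag, with no weak tags or lists. The snapshot shape and the handler name are assumptions.

```python
import hashlib
import json
from typing import Any, Optional


def _canonical(snapshot: dict[str, Any]) -> bytes:
    # Sorted keys and fixed separators: the same flags always serialize identically.
    return json.dumps(snapshot, sort_keys=True, separators=(",", ":")).encode("utf-8")


def handle_flags_request(snapshot: dict[str, Any], if_none_match: Optional[str],
                         keys: Optional[str] = None) -> tuple[int, dict[str, str], bytes]:
    """Returns (status, headers, body) for GET .../flags, optionally filtered by ?keys=a,b,c."""
    if keys:
        wanted = set(keys.split(","))
        snapshot = {k: v for k, v in snapshot.items() if k in wanted}
    body = _canonical(snapshot)
    etag = '"' + hashlib.sha256(body).hexdigest()[:32] + '"'
    # no-cache: clients may store the response but must revalidate before reuse.
    headers = {"ETag": etag, "Cache-Control": "no-cache"}
    if if_none_match == etag:
        return 304, headers, b""
    headers["Content-Type"] = "application/json"
    return 200, headers, body
```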

Write endpoints should be strict and predictable:

- POST /api/v1/flags: create (validate key uniqueness, naming rules)
- PUT /api/v1/flags/{id}: update draft config (schema validation)
- POST /api/v1/flags/{id}/publish: promote draft to an environment
- POST /api/v1/flags/{id}/rollback: revert to last known good version

Return clear validation errors so the dashboard can explain what to fix.
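The create endpoint's validation can return field-level errors that the dashboard displays next to the input. The naming rule here (lowercase snake_case, 3 to 64 characters, starting with a letter) is a hypothetical convention, not a standard.

```python
import re

# Hypothetical naming rule: lowercase snake_case, 3-64 chars, starting with a letter.
KEY_PATTERN = re.compile(r"[a-z][a-z0-9_]{2,63}")


def validate_new_flag(key: str, existing_keys: set[str]) -> list[dict[str, str]]:
    """Return field-level errors; an empty list means the key is acceptable."""
    errors = []
    if not KEY_PATTERN.fullmatch(key):
        errors.append({"field": "key",
                       "message": "use 3-64 lowercase letters, digits or underscores, "
                                  "starting with a letter"})
    if key in existing_keys:
        errors.append({"field": "key", "message": f"flag key '{key}' already exists"})
    return errors
```

This check gives fast feedback only. Two concurrent creates can still race, so uniqueness should also be enforced with a unique constraint in the database.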

Your SDK should handle caching with TTL, retries/backoff, timeouts, and an offline fallback (serve last cached values). It should also expose a single “evaluate” call so teams don’t need to understand your data model.
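The SDK responsibilities listed above fit in one small class. This is a sketch under stated assumptions: fetch and evaluate_flag are injected, hypothetical callables (the HTTP call and the rule engine), and the refresh is synchronous, whereas production SDKs usually refresh in the background. It also falls back to a documented safe default, "off" unless a flag is listed, when no snapshot has ever loaded.

```python
import time
from typing import Any, Callable, Optional


class FlagClient:
    """SDK core: TTL cache, retries with exponential backoff, offline fallback."""

    def __init__(self, fetch: Callable[[float], dict[str, Any]],
                 evaluate_flag: Callable[[Any, dict[str, Any]], Any],
                 safe_defaults: dict[str, Any], ttl: float = 30.0, retries: int = 3,
                 timeout: float = 2.0, backoff: float = 0.2,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self._fetch, self._evaluate_flag = fetch, evaluate_flag
        self._safe_defaults = safe_defaults
        self._ttl, self._retries, self._timeout, self._backoff = ttl, retries, timeout, backoff
        self._clock, self._sleep = clock, sleep
        self._snapshot: Optional[dict[str, Any]] = None  # last known good config
        self._checked_at = float("-inf")

    def _refresh(self) -> None:
        for attempt in range(self._retries):
            try:
                self._snapshot = self._fetch(self._timeout)
                break
            except Exception:  # network, timeout or parse errors
                if attempt < self._retries - 1:
                    self._sleep(self._backoff * 2 ** attempt)
        # Success or not, wait a full TTL before trying again, so an outage of the
        # flag service does not add retry latency to every evaluation.
        self._checked_at = self._clock()

    def evaluate(self, flag_key: str, context: dict[str, Any]) -> Any:
        """The single call application code uses."""
        if self._clock() - self._checked_at >= self._ttl:
            self._refresh()
        if self._snapshot is not None and flag_key in self._snapshot:
            return self._evaluate_flag(self._snapshot[flag_key], context)
        return self._safe_defaults.get(flag_key, False)
```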

If flags affect pricing, entitlements, or security-sensitive behavior, avoid trusting the browser/mobile client. Prefer server-side evaluation, or use signed tokens (server issues a signed “flag snapshot” the client can read but not forge).

A feature flag system only works if people trust it enough to use it during real releases. The admin dashboard is where that trust is built: clear labels, safe defaults, and changes that are easy to review.

Start with a simple flag list view that supports:

Make the “current state” readable at a glance. For example, show On for 10%, Targeting: Beta segment, or Off (kill switch active) rather than just a green dot.

The editor should feel like a guided form, not a technical configuration screen.

Include:

If you support variants, display them as human-friendly options (“New checkout”, “Old checkout”) and validate that traffic adds up correctly.

Teams will need bulk enable/disable and “copy rules to another environment.” Add guardrails:

Use warnings and required notes for risky actions (Production edits, large percentage jumps, kill switch toggles). Show a change summary before saving—what changed, where, and who will be affected—so non-technical reviewers can approve confidently.

Security is where feature flag tools either earn trust quickly—or get blocked by your security team. Because flags can change user experiences instantly (and sometimes break production), treat access control as a first-class part of your product.

Start with email + password for simplicity, but plan for enterprise expectations.

A clean model is role-based access control (RBAC) plus environment-level permissions.

Then scope that role per environment (Dev/Staging/Prod). For example, someone can be Editor in Staging but only Viewer in Prod. This prevents accidental production flips while keeping teams fast elsewhere.
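Environment-scoped roles reduce to a lookup from environment to role, plus a minimum role per action. This is a minimal sketch. Viewer and Editor come from the text, while the ADMIN role and the action-to-role mapping are hypothetical.

```python
from enum import IntEnum


class Role(IntEnum):
    VIEWER = 1
    EDITOR = 2
    ADMIN = 3  # assumption: manages members and settings; not defined in the text


# Hypothetical minimum role per action.
REQUIRED_ROLE = {"view": Role.VIEWER, "edit": Role.EDITOR, "publish": Role.EDITOR,
                 "manage_members": Role.ADMIN}


def can(grants: dict[str, Role], environment: str, action: str) -> bool:
    """`grants` maps environment -> role for one user; no grant means no access."""
    role = grants.get(environment)
    required = REQUIRED_ROLE.get(action)
    return role is not None and required is not None and role >= required
```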

Add an optional approval workflow for production edits:

Your SDKs will need credentials to fetch flag values. Treat these like API keys:

For more on traceability, connect this section to your audit trail design in /blog/auditing-monitoring-alerts.

When feature flags control real user experiences, “what changed?” becomes a production question, not a paperwork question. Auditing and monitoring turn your rollout tool from a toggle board into an operational system your team can trust.

Every write action in the admin app should emit an audit event. Treat it as append-only: never edit history—add a new event.

Capture the essentials:

Make this log easy to browse: filter by flag, environment, actor, and time range. A “copy link to this change” deep link is invaluable for incident threads.
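Below is a minimal in-memory sketch of an append-only audit log with the filters described above. Field names are assumptions. The reason field covers the "why" from the safety requirements, and the numeric id is what a "copy link to this change" URL could point at. A real store would also block updates and deletes at the database level.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Optional


@dataclass(frozen=True)
class AuditEvent:
    id: int          # stable id, usable in a deep link to this change
    actor: str
    flag_key: str
    environment: str
    action: str      # e.g. "update_rules", "publish", "kill_switch_on"
    before: Any
    after: Any
    reason: str
    at: datetime


class AuditLog:
    """Append-only: events are added, never edited or deleted."""

    def __init__(self) -> None:
        self._events: list[AuditEvent] = []

    def append(self, actor: str, flag_key: str, environment: str, action: str,
               before: Any, after: Any, reason: str,
               at: Optional[datetime] = None) -> AuditEvent:
        event = AuditEvent(len(self._events) + 1, actor, flag_key, environment, action,
                           before, after, reason, at or datetime.now(timezone.utc))
        self._events.append(event)
        return event

    def query(self, flag_key: Optional[str] = None, environment: Optional[str] = None,
              actor: Optional[str] = None, since: Optional[datetime] = None,
              until: Optional[datetime] = None) -> list[AuditEvent]:
        """Filter by flag, environment, actor and time range [since, until)."""
        return [e for e in self._events
                if (flag_key is None or e.flag_key == flag_key)
                and (environment is None or e.environment == environment)
                and (actor is None or e.actor == actor)
                and (since is None or e.at >= since)
                and (until is None or e.at < until)]
```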

Add lightweight telemetry around flag evaluations (SDK reads) and decision outcomes (which variant was served). At minimum, track:

This supports both debugging (“are users actually receiving variant B?”) and governance (“which flags are dead and can be removed?”).
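Both questions can be answered from two pieces of data: per-variant counters and a last-evaluated timestamp per flag. This is a minimal sketch. It assumes the SDK keeps these in process and flushes them periodically to the flag service for aggregation across instances. The staleness threshold is up to you.

```python
import time
from collections import Counter
from typing import Callable


class EvaluationStats:
    """In-process counters an SDK could flush periodically to the flag service."""

    def __init__(self, clock: Callable[[], float] = time.time) -> None:
        self.served: Counter[tuple[str, str]] = Counter()  # (flag_key, variant) -> count
        self.last_seen: dict[str, float] = {}
        self._clock = clock

    def record(self, flag_key: str, variant: str) -> None:
        self.served[(flag_key, variant)] += 1
        self.last_seen[flag_key] = self._clock()

    def stale_flags(self, all_flags: set[str], max_idle_seconds: float) -> set[str]:
        """Flags never evaluated, or idle too long: candidates for cleanup."""
        now = self._clock()
        return {f for f in all_flags
                if f not in self.last_seen or now - self.last_seen[f] > max_idle_seconds}
```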

Alerts should connect a change event to an impact signal. A practical rule: if a flag was enabled (or ramped up) and errors spike soon after, page someone.
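That rule can be expressed as a check that joins a flag change with error rates measured before and after it. The thresholds here are placeholder assumptions: a "spike" means the post-change error rate is at least twice the baseline and above an absolute floor, within 15 minutes of the change. Tune them to your own traffic.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class FlagChange:
    flag_key: str
    environment: str
    at: datetime  # when the flag was enabled or ramped up


def should_page(change: FlagChange, error_rate_before: float, error_rate_after: float,
                now: datetime, window: timedelta = timedelta(minutes=15),
                ratio: float = 2.0, min_rate: float = 0.01) -> bool:
    """Page only if errors spike within `window` after the change (placeholder thresholds)."""
    if not (change.at <= now <= change.at + window):
        return False
    return error_rate_after >= min_rate and error_rate_after >= ratio * error_rate_before
```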

Example alert conditions:

Create a simple “Ops” area in your dashboard:

These views reduce guesswork during incidents and make rollouts feel controlled rather than risky.

Feature flags sit on the critical path of every request, so reliability is a product feature, not an infrastructure detail. Your goal is simple: flag evaluation should be fast, predictable, and safe even when parts of the system are degraded.

Start with in-memory caching inside your SDK or edge service so most evaluations never hit the network. Keep the cache small and keyed by environment + flag set version.

Add Redis when you need shared, low-latency reads across many app instances (and to reduce load on your primary database). Redis is also useful for storing a “current flag snapshot” per environment.

A CDN can help only when you expose a read-only flags endpoint that’s safe to cache publicly or per-tenant (often it’s not). If you do use a CDN, prefer signed, short-lived responses and avoid caching anything user-specific.

Polling is simpler: SDKs fetch the latest flag snapshot every N seconds with ETags/version checks to avoid downloading unchanged data.

Streaming (SSE/WebSockets) gives faster propagation for rollouts and kill switches. It’s great for large teams, but requires more operational care (connection limits, reconnect logic, regional fanout). A practical compromise is polling by default with optional streaming for “instant” environments.

Protect your APIs from accidental SDK misconfiguration (e.g., polling every 100ms). Enforce server-side minimum intervals per SDK key, and return clear errors.
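A per-key minimum interval can be enforced with a small guard that returns HTTP 429 (Too Many Requests), a Retry-After header, and a readable message. This is a minimal sketch. The 10-second floor is a hypothetical default, and the in-memory dict only works for a single process. Behind several API instances, this state would live in a shared store such as Redis.

```python
import math
import time
from typing import Callable


class PollGuard:
    """Enforces a minimum interval between snapshot fetches per SDK key."""

    def __init__(self, min_interval: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._min_interval = min_interval  # hypothetical floor; tune per environment
        self._clock = clock
        self._last_ok: dict[str, float] = {}

    def check(self, sdk_key: str) -> tuple[int, dict[str, str], str]:
        """Returns (status, headers, message); rejected calls do not reset the timer."""
        now = self._clock()
        last = self._last_ok.get(sdk_key)
        if last is not None and now - last < self._min_interval:
            wait = math.ceil(self._min_interval - (now - last))
            message = (f"SDK key is polling faster than the minimum interval of "
                       f"{self._min_interval:g}s; retry in {wait}s")
            return 429, {"Retry-After": str(wait)}, message
        self._last_ok[sdk_key] = now
        return 200, {}, ""
```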

Also guard your database: ensure your read path is snapshot-based, not “evaluate rules by querying user tables.” Feature evaluation should never trigger expensive joins.

Back up your primary data store, and run scheduled restore drills rather than relying on backups alone. Store an immutable history of flag snapshots so you can roll back quickly.

Define safe defaults for outages: if the flag service can’t be reached, SDKs should fall back to the last known good snapshot; if none exists, default to “off” for risky features and document exceptions (like billing-critical flags).

Shipping a feature flag system isn’t “deploy and forget.” Because it controls production behavior, you want high confidence in rule evaluation, change workflows, and rollback paths—and a lightweight governance process so the tool stays safe as more teams adopt it.

Start with tests that protect the core promises of flagging:

A practical tip: add “golden” test cases for tricky rules (multiple segments, fallbacks, conflicting conditions) so regressions are obvious.

Make staging a safe rehearsal environment:

Before production releases, use a short checklist:

For governance, keep it simple: define who can publish to production, require approval for high-impact flags, review stale flags monthly, and set an “expiration date” field so temporary rollouts don’t live forever.
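The expiration-date field makes the monthly stale-flag review a simple query. This is a minimal sketch. FlagLifecycle and its permanent marker (for long-lived ops or entitlement flags) are hypothetical. Temporary flags with no expiration date set are surfaced first, so they get one.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class FlagLifecycle:
    key: str
    owner: str
    expires_on: Optional[date]  # hypothetical field for temporary rollouts
    permanent: bool = False     # e.g. long-lived kill switches or entitlements


def flags_due_for_review(flags: list[FlagLifecycle], today: date) -> list[FlagLifecycle]:
    """Temporary flags that are past expiry or have none set, missing dates first."""
    due = [f for f in flags
           if not f.permanent and (f.expires_on is None or f.expires_on <= today)]
    return sorted(due, key=lambda f: f.expires_on or date.min)
```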

If you’re building this as an internal platform, it can also help to standardize how teams request changes. Some organizations use Koder.ai to spin up an initial admin dashboard and iterate on workflows (approvals, audit summaries, rollback UX) with stakeholders in chat, then export the codebase for a full security review and long-term ownership.

A feature flag (feature toggle) is a runtime control that turns a capability on/off (or to a variant) without deploying new code. It separates shipping code from activating behavior, which enables safer staged rollouts, quick rollbacks, and controlled experiments.

A practical setup separates:

This split keeps the “change workflow” safe and auditable while keeping evaluations low-latency.

Use consistent bucketing: compute a deterministic hash from a stable identifier (e.g., user_id or account_id), map it to 0–99, then include/exclude based on the rollout percentage.

Avoid per-request randomness; otherwise users “flip” between experiences, metrics get noisy, and support can’t reproduce issues.

Start with:

A clear precedence order makes results explainable:

Keep the attribute set small and consistent (e.g., role, plan, region, app version) to prevent rule drift across services.

Store schedules as part of the environment-specific flag config:

Make scheduled changes auditable and previewable, so teams can confirm exactly what will happen before it goes live.

Optimize for read-heavy usage:

This prevents your database from being queried on every flag check.

If a flag affects pricing, entitlements, or security-sensitive behavior, prefer server-side evaluation so clients can’t tamper with rules or attributes.

If you must evaluate on the client:

Use RBAC plus environment scoping:

For production, add optional approvals for changes to targeting/rollouts/kill switch. Always record requester, approver, and the exact change.
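An approval step can be modeled as a change request that records the requester, the approver, and the exact change before anything is published. This is a minimal sketch. The state names are assumptions, as is the rule that requesters may not approve their own change (a common "four eyes" policy). The publish callback stands in for the normal publish path.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class ChangeRequest:
    id: int
    flag_key: str
    environment: str
    requester: str
    change: dict[str, Any]  # the exact change, e.g. a diff of the draft revision
    approver: Optional[str] = None
    status: str = "pending"  # pending -> approved -> applied


def approve(req: ChangeRequest, approver: str) -> None:
    if req.status != "pending":
        raise ValueError(f"request {req.id} is {req.status}, not pending")
    if approver == req.requester:
        raise PermissionError("requesters cannot approve their own production change")
    req.approver = approver
    req.status = "approved"


def apply_request(req: ChangeRequest, publish: Callable[[ChangeRequest], None]) -> None:
    if req.status != "approved":
        raise ValueError(f"request {req.id} has not been approved")
    publish(req)  # reuse the normal publish path, which also writes the audit event
    req.status = "applied"
```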

At minimum, capture:

For outages: SDKs should fall back to last known good config, then a documented safe default (often “off” for risky features). See also /blog/auditing-monitoring-alerts and /blog/testing-deployment-and-governance.

- Flags: key, type, name/description, archived/soft-delete.
- Environments: dev/staging/prod with separate configs.

Add revisions (draft vs published) so publishing is an atomic pointer change and rollback is “re-publish an older revision.”