Aug 16, 2025·8 min

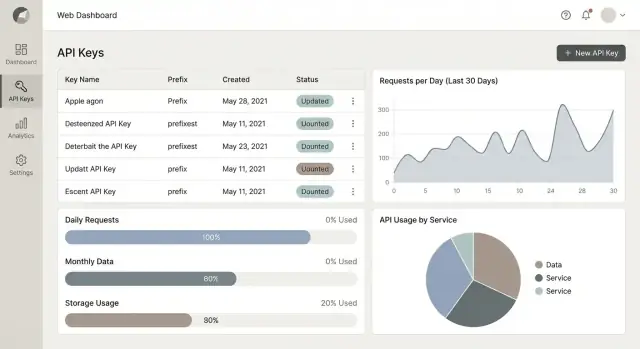

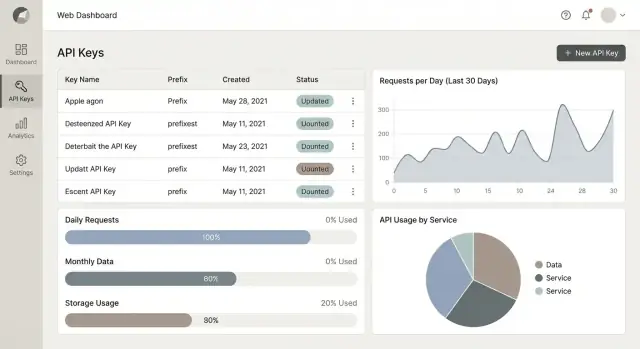

Build a Web App to Manage API Keys, Quotas & Usage Analytics

Learn how to design and build a web app that issues API keys, enforces quotas, tracks usage, and presents clear analytics dashboards with secure workflows.


You’re building a web app that sits between your API and the people who consume it. Its job is to issue API keys, control how those keys can be used, and explain what happened—in a way that’s clear enough for both developers and non-developers.

At a minimum, it answers three practical questions:

If you want to move fast on the portal and admin UI, tools like Koder.ai can help you prototype and ship a production-grade baseline quickly (React frontend + Go backend + PostgreSQL), while still keeping full control via source-code export, snapshots/rollback, and deployment/hosting.

A key-management app isn’t only for engineers. Different roles show up with different goals:

Most successful implementations converge on a few core modules:

A strong MVP focuses on key issuance + basic limits + clear usage reporting. Advanced features—like automated plan upgrades, invoicing workflows, proration, and complex contract terms—can come later once you trust your metering and enforcement.

A practical “north star” for the first release: make it easy for someone to create a key, understand their limits, and see their usage without filing a support ticket.

Before you write code, decide what “done” means for the first release. This kind of system grows quickly: billing, audits, and enterprise security show up sooner than you expect. A clear MVP keeps you shipping.

At a minimum, users should be able to:

If you can’t safely issue a key, limit it, and prove what it did, it’s not ready.

Pick one early:

Good candidates to defer past the MVP: rotation flows, webhook notifications, billing exports, SSO/SAML, per-endpoint quotas, anomaly detection, and richer audit logs.

Your architecture choice should start with one question: where do you enforce access and limits? That decision affects latency, reliability, and how quickly you can ship.

An API gateway (managed or self-hosted) can validate API keys, apply rate limits, and emit usage events before requests reach your services.

This is a strong fit when you have multiple backend services, need consistent policies, or want to keep enforcement out of application code. The trade-off: gateway configuration can become its own “product,” and debugging often requires good tracing.

A reverse proxy (e.g., NGINX/Envoy) can handle key checks and rate limiting with plugins or external auth hooks.

This works well when you want a lightweight edge layer, but it can be harder to model business rules (plans, per-tenant quotas, special cases) without building supporting services.

Putting checks in your API application (middleware) is usually fastest for an MVP: one codebase, one deploy, simpler local testing.

It can get tricky as you add more services—policy drift and duplicated logic are common—so plan an eventual extraction into a shared component or edge layer.

Even if you start small, keep boundaries clear:

For metering, decide what must happen on the request path:

Rate limit checks are the hot path (optimize for low-latency, in-memory/Redis). Reports and dashboards are the cold path (optimize for flexible queries and batch aggregation).

A good data model keeps three concerns separate: who owns access, what limits apply, and what actually happened. If you get that right, everything else—rotation, dashboards, billing—gets simpler.

At minimum, model these tables (or collections):

Never store raw API tokens. Store only:

This lets you show “Key: ab12cd…”, while keeping the actual secret unrecoverable.

Add audit tables early: KeyAudit and AdminAudit (or a single AuditLog) capturing:

When a customer asks “who revoked my key?”, you’ll have an answer.

Model quotas with explicit windows: per_minute, per_hour, per_day, per_month.

Store counters in a separate table like UsageCounter keyed by (project_id, window_start, window_type, metric). That makes resets predictable and keeps analytics queries fast.
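As a rough illustration of that counter key, the sketch below truncates a timestamp to the start of its window. It assumes timezone-aware timestamps and UTC-aligned windows; the function names window_start and counter_key are made up for this example.

```python
from datetime import datetime, timezone

WINDOW_TYPES = ("per_minute", "per_hour", "per_day", "per_month")


def window_start(ts: datetime, window_type: str) -> datetime:
    """Truncate an aware timestamp to the start of its quota window (UTC)."""
    ts = ts.astimezone(timezone.utc)
    if window_type == "per_minute":
        return ts.replace(second=0, microsecond=0)
    if window_type == "per_hour":
        return ts.replace(minute=0, second=0, microsecond=0)
    if window_type == "per_day":
        return ts.replace(hour=0, minute=0, second=0, microsecond=0)
    if window_type == "per_month":
        return ts.replace(day=1, hour=0, minute=0, second=0, microsecond=0)
    raise ValueError(f"unknown window type: {window_type}")


def counter_key(project_id: str, ts: datetime, window_type: str, metric: str) -> tuple:
    """Build the (project_id, window_start, window_type, metric) key for a counter row."""
    return (project_id, window_start(ts, window_type), window_type, metric)
```

Because the window start is derived from the timestamp, a "reset" is simply the first write to a new key; no scheduled job has to zero anything out.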

For portal views, you can aggregate Usage Events into daily rollups and link to /blog/usage-metering for deeper detail.

If your product manages API keys and usage, your app’s own access control needs to be stricter than a typical CRUD dashboard. A clear role model keeps teams productive while preventing “everyone is an admin” drift.

Start with a small set of roles per organization (tenant):

Keep permissions explicit (e.g., keys:rotate, quotas:update) so you can add features without reinventing roles.

Use standard username/password only if you must; otherwise support OAuth/OIDC. SSO is optional, but MFA should be required for owners/admins and strongly encouraged for everyone.

Add session protections: short-lived access tokens, refresh token rotation, and device/session management.

Offer a default API key in a header (e.g., Authorization: Bearer <key> or X-API-Key). For advanced customers, add optional HMAC signing (detects tampering, and limits replay when the signature covers a timestamp or nonce) or JWT (good for short-lived, scoped access). Document these clearly in your developer portal.
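One possible shape for the optional HMAC signing is sketched below. The canonical string (method, path, timestamp, body hash) and the 300-second tolerance are assumptions for illustration, not a standard. Replay is only bounded by the timestamp window here; a nonce cache would be needed to block replays inside that window.

```python
import hashlib
import hmac

MAX_SKEW_SECONDS = 300  # assumed tolerance between client and server clocks


def sign_request(secret: bytes, method: str, path: str, timestamp: int, body: bytes) -> str:
    """Return a hex HMAC-SHA-256 signature over a canonical request string."""
    body_hash = hashlib.sha256(body).hexdigest()
    message = f"{method.upper()}\n{path}\n{timestamp}\n{body_hash}".encode()
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_request(secret: bytes, method: str, path: str, timestamp: int,
                   body: bytes, signature: str, now: int) -> bool:
    """Reject stale timestamps first, then compare signatures in constant time."""
    if abs(now - timestamp) > MAX_SKEW_SECONDS:
        return False
    expected = sign_request(secret, method, path, timestamp, body)
    return hmac.compare_digest(expected, signature)
```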

Enforce isolation at every query: org_id everywhere. Avoid relying on UI filtering alone—apply org_id in database constraints, row-level policies (if available), and service-layer checks, and write tests that attempt cross-tenant access.
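A service-layer check along these lines can combine the tenant boundary with explicit permissions. This is a minimal sketch: the role names and the role-to-permission mapping are hypothetical, and only keys:rotate and quotas:update come from the text above.

```python
from dataclasses import dataclass

# Hypothetical mapping; adjust roles and permissions to your own model.
ROLE_PERMISSIONS = {
    "viewer": {"keys:read", "usage:read"},
    "developer": {"keys:read", "keys:create", "keys:rotate", "usage:read"},
    "admin": {"keys:read", "keys:create", "keys:rotate", "keys:revoke",
              "quotas:update", "usage:read"},
}


@dataclass(frozen=True)
class Actor:
    user_id: str
    org_id: str
    role: str


@dataclass(frozen=True)
class ApiKeyRecord:
    key_id: str
    org_id: str


def can(actor: Actor, permission: str, resource: ApiKeyRecord) -> bool:
    """Allow only if the resource is in the actor's org AND the role grants the permission."""
    if actor.org_id != resource.org_id:
        return False  # tenant boundary is checked before anything else
    return permission in ROLE_PERMISSIONS.get(actor.role, set())
```

Checking the org first means a misconfigured role can never leak across tenants, which is the property the cross-tenant tests should assert.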

A good key lifecycle keeps customers productive while giving you fast ways to reduce risk when something goes wrong. Design the UI and API so the “happy path” is obvious, and the safer options (rotation, expiry) are the default.

In the key creation flow, ask for a name (e.g., “Prod server”, “Local dev”), plus scopes/permissions so the key can be least-privilege from day one.

If it fits your product, add optional restrictions like allowed origins (for browser-based usage) or allowed IPs/CIDRs (for server-to-server). Keep these optional, with clear warnings about lockouts.

After creation, show the raw key only once. Provide a big “Copy” button, plus lightweight guidance: “Store in a secret manager. We can’t show this again.” Link directly to setup instructions like /docs/auth.

Rotation should follow a predictable pattern:

In the UI, provide a “Rotate” action that creates a replacement key and labels the previous one as “Pending revoke” to encourage cleanup.

Revocation should disable the key immediately and log who did it and why.

Also support scheduled expiry (e.g., 30/60/90 days) and manual “expires on” dates for temporary contractors or trials. Expired keys should fail predictably with a clear auth error so developers know what to fix.
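The revoke and expiry rules can be reduced to a small status function. The sketch below is illustrative: the field names revoked_at and expires_at, the error codes, and the choice of HTTP 401 are assumptions rather than a prescribed contract.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def default_expiry(created_at: datetime, days: int = 90) -> datetime:
    """Scheduled expiry, e.g. 30/60/90 days after creation."""
    return created_at + timedelta(days=days)


def key_status(revoked_at: Optional[datetime], expires_at: Optional[datetime],
               now: datetime) -> str:
    """Revocation wins over expiry; a key with neither is active."""
    if revoked_at is not None and revoked_at <= now:
        return "revoked"
    if expires_at is not None and expires_at <= now:
        return "expired"
    return "active"


def auth_error(status: str) -> Optional[dict]:
    """Return a predictable error payload for non-active keys, or None if the key is usable."""
    if status == "active":
        return None
    return {
        "http_status": 401,
        "code": f"key_{status}",
        "message": f"This API key is {status}. Create or rotate a key in the portal.",
    }
```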

Rate limits and quotas solve different problems, and mixing them up is a common source of confusing “why was I blocked?” support tickets.

Rate limits control bursts (e.g., “no more than 50 requests per second”). They protect your infrastructure and keep one noisy customer from degrading everyone else.

Quotas cap total consumption over a period (e.g., “100,000 requests per month”). They’re about plan enforcement and billing boundaries.

Many products use both: a monthly quota for fairness and pricing, plus a per-second/per-minute rate limit for stability.

For real-time rate limiting, choose an algorithm you can explain and implement reliably:

Token bucket is usually the better default for developer-facing APIs because it’s predictable and forgiving.
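For reference, a single-process token bucket can be sketched as follows. It keeps state in memory and takes the current time as an argument so it is easy to test; a production version would typically keep the same two fields (tokens and last update time) in a shared store such as Redis and update them atomically.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    capacity: float           # maximum burst size
    refill_per_second: float  # sustained rate
    tokens: float             # tokens currently available
    updated_at: float         # time of the last refill, in seconds

    @classmethod
    def full(cls, capacity: float, refill_per_second: float, now: float) -> "TokenBucket":
        """Start with a full bucket so new keys can burst immediately."""
        return cls(capacity, refill_per_second, capacity, now)

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Refill for the elapsed time, then spend `cost` tokens if available."""
        elapsed = max(0.0, now - self.updated_at)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated_at = max(self.updated_at, now)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

With capacity 2 and a refill of 1 token per second, two requests pass immediately, the third is rejected, and one more is allowed a second later.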

You typically need two stores:

Redis answers “can this request run right now?” The DB answers “how much did they use this month?”

Be explicit per product and per endpoint. Common meters include requests, tokens, bytes transferred, endpoint-specific weights, or compute time.

If you use weighted endpoints, publish the weights in your docs and portal.
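A weighted meter can be as simple as a lookup table. The endpoints and weights below are invented for illustration; the point is that the same table drives enforcement and the published docs.

```python
from typing import Iterable

# Hypothetical weights: one export call counts as ten units.
ENDPOINT_WEIGHTS = {"/v1/search": 1, "/v1/export": 10}
DEFAULT_WEIGHT = 1


def request_cost(endpoint: str) -> int:
    """Units charged for one call to the endpoint."""
    return ENDPOINT_WEIGHTS.get(endpoint, DEFAULT_WEIGHT)


def total_units(endpoints: Iterable[str]) -> int:
    """Total units consumed by a sequence of calls."""
    return sum(request_cost(endpoint) for endpoint in endpoints)
```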

When blocking a request, return clear, consistent errors:

Include Retry-After and, optionally, headers like X-RateLimit-Limit, X-RateLimit-Remaining, and X-RateLimit-Reset.

Good messages reduce churn: developers can back off, add retries, or upgrade without guessing.
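A helper like the one below can build those headers consistently. It is a sketch under two assumptions: X-RateLimit-Reset carries a Unix timestamp (conventions vary between APIs, so document yours), and Retry-After is sent in seconds only when the caller is actually blocked.

```python
import math


def rate_limit_headers(limit: int, remaining: int, reset_epoch: float, now: float) -> dict:
    """Build rate-limit response headers; add Retry-After when nothing remains."""
    headers = {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(max(0, remaining)),
        "X-RateLimit-Reset": str(int(reset_epoch)),  # assumed: Unix seconds
    }
    if remaining <= 0:
        # Round up so clients never retry slightly too early.
        headers["Retry-After"] = str(max(0, math.ceil(reset_epoch - now)))
    return headers
```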

Usage metering is the “source of truth” for quotas, invoices, and customer trust. The goal is simple: count what happened, consistently, without slowing down your API.

For each request, capture a small, predictable event payload:

Avoid logging request/response bodies. Redact sensitive headers by default (Authorization, cookies) and treat PII as “opt-in with strong need.” If you must log something for debugging, store it separately with a shorter retention window and strict access controls.
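Redaction by default can be applied before anything reaches a log or event payload. The header set below is an assumed starting list, not an exhaustive one.

```python
# Assumed default list; extend it for any custom credential headers you accept.
SENSITIVE_HEADERS = {"authorization", "cookie", "set-cookie", "x-api-key"}


def redact_headers(headers: dict) -> dict:
    """Return a copy of the headers with sensitive values replaced (names are case-insensitive)."""
    return {
        name: "[REDACTED]" if name.lower() in SENSITIVE_HEADERS else value
        for name, value in headers.items()
    }
```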

Don’t aggregate metrics inline during the request. Instead:

This keeps latency stable even when traffic spikes.

Queues can deliver messages more than once. Add a unique event_id and enforce deduplication (e.g., unique constraint or “seen” cache with TTL). Workers should be safe to retry so a crash doesn’t corrupt totals.
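The unique-constraint variant can be sketched with SQLite from the standard library. Table and column names are illustrative; the important property is that the dedup insert and the counter update commit in one transaction, so a redelivered event never changes the total.

```python
import sqlite3


def open_store() -> sqlite3.Connection:
    """Create an in-memory store with a dedup table and a totals table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE seen_events (event_id TEXT PRIMARY KEY)")
    conn.execute(
        "CREATE TABLE usage_totals (key_id TEXT PRIMARY KEY, requests INTEGER NOT NULL)"
    )
    return conn


def record_event(conn: sqlite3.Connection, event_id: str, key_id: str) -> bool:
    """Count the event once; return False if this event_id was already processed."""
    with conn:  # commits on success, rolls back on error
        cur = conn.execute(
            "INSERT OR IGNORE INTO seen_events (event_id) VALUES (?)", (event_id,)
        )
        if cur.rowcount == 0:
            return False  # duplicate delivery, totals untouched
        conn.execute(
            "INSERT OR IGNORE INTO usage_totals (key_id, requests) VALUES (?, 0)", (key_id,)
        )
        conn.execute(
            "UPDATE usage_totals SET requests = requests + 1 WHERE key_id = ?", (key_id,)
        )
        return True
```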

Store raw events briefly (days/weeks) for audits and investigations. Keep aggregated metrics much longer (months/years) for trends, quota enforcement, and billing readiness.

A usage dashboard shouldn’t be a “nice chart” page. It should answer two questions quickly: what changed? and what should I do next? Design around decisions—debugging spikes, preventing overages, and proving value to a customer.

Start with four panels that map to everyday needs:

Every chart should connect to a next step. Show:

When projected overage is likely, link directly to the upgrade path: /plans (or /pricing).
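One simple way to estimate a projected overage is a straight-line projection of usage so far. That is an assumption made here for illustration; it ignores weekly seasonality and recent spikes.

```python
def projected_usage(used: int, days_elapsed: float, days_in_period: int) -> float:
    """Extrapolate period-end usage from the average daily rate so far."""
    if days_elapsed <= 0:
        return float(used)
    return used / days_elapsed * days_in_period


def will_exceed(used: int, days_elapsed: float, days_in_period: int, quota: int) -> bool:
    """True when the straight-line projection lands above the quota."""
    return projected_usage(used, days_elapsed, days_in_period) > quota
```

For example, 40,000 requests after 10 days of a 30-day period projects to 120,000, which exceeds a 100,000 quota and would justify showing the upgrade link.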

Add filters that narrow investigations without forcing users into complex query builders:

Include CSV download for finance and support, and provide a lightweight metrics API (e.g., GET /api/metrics/usage?from=...&to=...&key_id=...) so customers can pull usage into their own BI tools.

Alerts are the difference between “we noticed a problem” and “customers noticed first.” Design them around the questions users ask under pressure: What happened? Who is affected? What should I do next?

Start with predictable thresholds tied to quotas. A simple pattern that works well is 50% / 80% / 100% of quota usage within a billing period.
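To avoid sending the same alert repeatedly, compare usage before and after each update and fire only for thresholds that were newly crossed. A minimal sketch, using integer arithmetic to sidestep floating-point edge cases (the function name is made up):

```python
THRESHOLDS_PERCENT = (50, 80, 100)


def newly_crossed(previous_used: int, current_used: int, quota: int) -> list:
    """Return thresholds (in percent) crossed between the previous and current usage."""
    if quota <= 0:
        return []
    return [
        percent
        for percent in THRESHOLDS_PERCENT
        if previous_used * 100 < percent * quota <= current_used * 100
    ]
```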

Add a few high-signal behavioral alerts:

Keep alerts actionable: include tenant, API key/app, endpoint group (if available), time window, and a link to the relevant view in your portal (e.g., /dashboard/usage).

Email is the baseline because everyone has it. Add webhooks for teams that want to route alerts into their own systems. If you support Slack, treat it as optional and keep setup lightweight.

A practical rule: provide a per-tenant notification policy—who gets which alerts, and at what severity.

Offer a daily/weekly summary that highlights total requests, top endpoints, errors, throttles, and “change vs last period.” Stakeholders want trends, not raw logs.

Even if billing is “later,” store:

This lets you backfill invoices or previews without rewriting your data model.

Every message should state: what happened, impact, and next step (rotate key, upgrade plan, investigate client, or contact support via /support).

Security for an API-key management app is less about fancy features and more about careful defaults. Treat every key as a credential, and assume it will eventually be copied into the wrong place.

Never store keys in plaintext. Store a verifier derived from the secret (commonly SHA-256 or HMAC-SHA-256 with a server-side pepper) and only show the user the full secret once at creation time.

In the UI and logs, display only a non-sensitive prefix (for example, ak_live_9F3K…) so people can identify a key without exposing it.
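The verifier-plus-prefix approach might look like the sketch below. It assumes HMAC-SHA-256 keyed with a server-side pepper loaded from your secrets store; the ak_live_ prefix follows the example above, and the function names and the four displayed characters are arbitrary choices.

```python
import hashlib
import hmac
import secrets

PREFIX = "ak_live_"
DISPLAY_CHARS = 4  # random characters shown after the prefix in the UI


def generate_key() -> str:
    """Create a new raw key; show it to the user once and never store it."""
    return PREFIX + secrets.token_urlsafe(32)


def verifier(raw_key: str, pepper: bytes) -> str:
    """Derive the value to store: HMAC-SHA-256 of the key under the pepper."""
    return hmac.new(pepper, raw_key.encode(), hashlib.sha256).hexdigest()


def display_prefix(raw_key: str) -> str:
    """Non-sensitive label for the UI and logs."""
    return raw_key[: len(PREFIX) + DISPLAY_CHARS] + "…"


def verify(raw_key: str, stored_verifier: str, pepper: bytes) -> bool:
    """Constant-time comparison of the presented key against the stored verifier."""
    return hmac.compare_digest(verifier(raw_key, pepper), stored_verifier)
```

A fast hash is reasonable here because the key is long and random; password-style slow hashing is mainly needed for low-entropy secrets.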

Provide practical “secret scanning” guidance: remind users not to commit keys to Git, and link to their tooling docs (for example, GitHub secret scanning) in your portal docs at /docs.

Attackers love admin endpoints because they can create keys, raise quotas, or disable limits. Apply rate limiting to admin APIs too, and consider an IP allowlist option for admin access (useful for internal teams).

Use least privilege: separate roles (viewer vs admin), and restrict who can change quotas or rotate keys.

Record audit events for key creation, rotation, revocation, login attempts, and quota changes. Keep logs tamper-resistant (append-only storage, restricted write access, and regular backups).

Adopt compliance basics early: data minimization (store only what you need), clear retention controls (auto-delete old logs), and documented access rules.

Plan for the common threats: key leakage, replay abuse, scraping of your portal, and “noisy neighbor” tenants consuming shared capacity. Design mitigations (hashing/verifiers, short-lived tokens where possible, rate limits, and per-tenant quotas) around these realities.

A great portal makes the “safe path” the easiest path: admins can quickly reduce risk, and developers can get a working key and a successful test call without emailing anyone.

Admins usually arrive with an urgent task (“revoke this key now”, “who created this?”, “why did usage spike?”). Design for fast scanning and decisive action.

Use quick search that works across key ID prefixes, app names, users, and workspace/tenant names. Pair it with clear status indicators (Active, Expired, Revoked, Compromised, Rotating) and timestamps like “last used” and “created by”. Those two fields alone prevent a lot of accidental revokes.

For high-volume operations, add bulk actions with safety rails: bulk revoke, bulk rotate, bulk change quota tier. Always show a confirmation step with a count, and summarize impact (“38 keys will be revoked; 12 have been used in the last 24h”).

Provide an audit-friendly details panel for each key: scopes, associated app, allowed IPs (if any), quota tier, and recent errors.

Developers want to copy, paste, and move on. Put clear docs next to the key creation flow, not buried elsewhere. Offer copyable curl examples and a language toggle (curl, JS, Python) if you can.

Show the key once with a “copy” button, plus a short reminder about storage. Then guide them through a “Test call” step that runs a real request against a sandbox or a low-risk endpoint. If it fails, provide error explanations in plain English, including common fixes:

A simple path works best: Create first key → make a test call → see usage. Even a tiny usage chart (“Last 15 minutes”) builds trust that metering works.

Link directly to relevant pages using relative routes like /docs, /keys, and /usage.

Use plain labels (“Requests per minute”, “Monthly requests”) and keep units consistent across pages. Add tooltips for terms like “scope” and “burst”. Ensure keyboard navigation, visible focus states, and sufficient contrast—especially on status badges and error banners.

Getting this kind of system into production is mostly about discipline: predictable deploys, clear visibility when something breaks, and tests focused on the “hot paths” (auth, rate checks, and metering).

Keep configuration explicit. Store non-sensitive settings in environment variables (e.g., rate-limit defaults, queue names, retention windows) and put secrets in a managed secrets store (AWS Secrets Manager, GCP Secret Manager, Vault). Avoid baking keys into images.

Run database migrations as a first-class step in your pipeline. Prefer a “migrate then deploy” strategy for backward-compatible changes, and plan for safe rollbacks (feature flags help). If you’re multi-tenant, add sanity checks to prevent migrations that accidentally scan every tenant table.

If you’re building the system on Koder.ai, snapshots and rollback can be a practical safety net for these early iterations (especially while you’re still refining enforcement logic and schema boundaries).

You need three signals: logs, metrics, and traces. Instrument rate limiting and quota enforcement with metrics such as:

Create a dashboard specifically for rate-limit rejects so support can answer “why is my traffic failing?” without guessing. Tracing helps spot slow dependencies on the critical path (DB lookups for key status, cache misses, etc.).

Treat config data (keys, quotas, roles) as high-priority and usage events as high-volume. Back up configuration frequently with point-in-time recovery.

For usage data, focus on durability and replay: a write-ahead log/queue plus re-aggregation is often more practical than frequent full backups.

Unit-test limit logic (edge cases: window boundaries, concurrent requests, key rotation). Load-test the hottest paths: key validation + counter updates.

Then roll out in phases: internal users → limited beta (select tenants) → GA, with a kill switch to disable enforcement if needed.

Focus on three outcomes:

If users can create a key, understand their limits, and verify usage without filing a ticket, your MVP is doing its job.

Pick based on where you want consistent enforcement:

A common path is middleware first, then extract to a shared edge layer when the system grows.

Store metadata separately from the secret:

They solve different problems:

Many APIs use both: a monthly quota plus a per-second/per-minute rate limit to keep traffic stable.

Use a pipeline that keeps the request path fast:

This avoids slow “counting” in-line while still producing billing-grade rollups.

Assume events can be delivered more than once and design for retries:

Add a unique event_id per request.

This is essential if you’ll later use usage for quotas, invoices, or credits.

Record who did what, when, and from where:

Include actor, target, timestamp, and IP/user-agent. When support asks “who revoked this key?”, you’ll have a definitive answer.

Use a small, explicit role model and fine-grained permissions:

A practical approach is raw short-term, aggregates long-term:

Decide this up front so storage costs, privacy posture, and reporting expectations stay predictable.

Make blocks easy to debug without guesswork:

Include Retry-After and (optionally) X-RateLimit-* headers.

For key metadata, keep fields such as created_at, last_used_at, expires_at, and status. In the UI, show the full key only once at creation and make it clear it can’t be recovered later.

Use explicit permissions such as keys:rotate and quotas:update.

Enforce tenant isolation everywhere (e.g., org_id on every query), not just via UI filters.

Link blocked users to an upgrade path (/plans or /billing).

Pair this with portal pages that answer “why was I blocked?” and let users verify usage in /usage (and deeper details in /blog/usage-metering if you have it).