Apr 09, 2025·8 min

How to Build a Web App to Track Feature Adoption & Behavior

A practical guide to building a web app that tracks feature adoption and user behavior, from event design to dashboards, privacy, and rollout.

Before you track anything, decide what “feature adoption” actually means for your product. If you skip this step, you’ll collect plenty of data—and still argue in meetings about what it “means.”

Adoption usually isn’t a single moment. Pick one or more definitions that match how value is delivered:

Example: for “Saved Searches,” adoption might mean the user created a saved search (use), ran it 3+ times in 14 days (repeat), and received an alert and clicked through (value achieved).
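To make the Saved Searches example concrete, here is a minimal sketch that classifies one user's events into the highest stage reached. The event names (saved_search_created, saved_search_run, saved_search_alert_clicked) and the "highest stage wins" rule are assumptions for illustration, not part of any particular product.

```python
from datetime import datetime, timedelta

def adoption_stage(events: list[dict]) -> str:
    """Classify one user's Saved Searches adoption from their events.

    Each event is a dict with "name" and "timestamp" (a datetime).
    All event names here are hypothetical.
    """
    created = [e for e in events if e["name"] == "saved_search_created"]
    if not created:
        return "none"
    first = min(e["timestamp"] for e in created)
    window_end = first + timedelta(days=14)
    # Count runs that fall inside the 14-day window after first creation.
    runs = [
        e for e in events
        if e["name"] == "saved_search_run" and first <= e["timestamp"] <= window_end
    ]
    clicked = any(e["name"] == "saved_search_alert_clicked" for e in events)
    if clicked:
        return "value_achieved"
    if len(runs) >= 3:
        return "repeat"
    return "use"

t0 = datetime(2025, 1, 1)
sample = [{"name": "saved_search_created", "timestamp": t0}]
sample += [{"name": "saved_search_run", "timestamp": t0 + timedelta(days=d)} for d in (1, 2, 3)]
stage = adoption_stage(sample)
```

In practice you would probably run this per user in a batch job and count how many users sit at each stage.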

Your tracking should answer questions that lead to action, such as:

Write these as decision statements (e.g., “If activation drops after release X, we roll back onboarding changes.”).

Different teams need different views:

Choose a small set of metrics to review weekly, plus a lightweight release check after every deployment. Define thresholds (e.g., “Adoption rate ≥ 25% among active users in 30 days”) so reporting drives decisions, not debate.
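A threshold like the one above can be checked with very little code. The sketch below assumes you already have two sets of user IDs for the same 30-day window (active users and users who adopted the feature); the function name and the sample data are illustrative.

```python
def adoption_rate(active_users: set[str], adopters: set[str]) -> float:
    """Share of active users who adopted the feature in the same window."""
    if not active_users:
        return 0.0
    # Only count adopters who were also active in the window.
    return len(adopters & active_users) / len(active_users)

THRESHOLD = 0.25  # hypothetical target: >= 25% among active users in 30 days

active = {"u1", "u2", "u3", "u4"}
adopted = {"u2", "u4", "u9"}  # u9 was not active, so it is ignored

rate = adoption_rate(active, adopted)
meets_target = rate >= THRESHOLD
```

Restricting the numerator to active users keeps the rate between 0 and 1 and matches the "among active users" wording of the threshold.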

Before you instrument anything, decide what “things” your analytics system will describe. If you get these entities right, your reports stay understandable even as the product evolves.

Define each entity in plain language, then translate it into IDs you can store:

- … project_created, invite_sent).

Write down the minimum properties you need for each event: user_id (or anonymous ID), account_id, timestamp, and a few relevant attributes (plan, role, device, feature flag, etc.). Avoid dumping everything “just in case.”

Pick the reporting angles that match your product goals:

Your event design should make these computations straightforward.

Be explicit about scope: web only first, or web + mobile from day one. Cross-platform tracking is easiest if you standardize event names and properties early.

Finally, set non-negotiable targets: acceptable page performance impact, ingestion latency (how fresh dashboards must be), and dashboard load time. These constraints guide later choices in tracking, storage, and querying.

A good tracking schema is less about “tracking everything” and more about making events predictable. If event names and properties drift, dashboards break, analysts stop trusting data, and engineers hesitate to instrument new features.

Pick a simple, repeatable pattern and stick to it. A common choice is verb_noun:

- viewed_pricing_page
- started_trial
- enabled_feature
- exported_report

Use past tense consistently (or present tense consistently), and avoid synonyms (clicked, pressed, tapped) unless they truly mean different things.

Every event should carry a small set of required properties so you can segment, filter, and join reliably later. At minimum, define:

- user_id (nullable for anonymous users, but present when known)
- account_id (if your product is B2B/multi-seat)
- timestamp (server-generated when possible)
- feature_key (stable identifier like "bulk_upload")
- plan (e.g., free, pro, enterprise)

These properties make feature adoption tracking and user behavior analytics far easier because you don’t have to guess what’s missing in each event.

Optional fields add context, but they’re easy to overdo. Typical optional properties include:

- device, os, browser
- page, referrer
- experiment_variant (or ab_variant)

Keep optional properties consistent across events (same key names, same value formats), and document any “allowed values” where possible.

Assume your schema will evolve. Add an event_version (e.g., 1, 2) and update it when you change meaning or required fields.

Finally, write an instrumentation spec that lists each event, when it fires, required/optional properties, and examples. Keep that doc in source control alongside your app so schema changes are reviewed like code.
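One way to keep the schema honest is to validate events against it in code. The following is a minimal sketch, assuming events arrive as dicts with a "name" and a "properties" map; the exact required set (including event_version) and the snake_case name pattern are assumptions you would adapt to your own spec.

```python
import re

# Assumed required properties, based on the baseline list plus event_version.
REQUIRED = {"user_id", "account_id", "timestamp", "feature_key", "plan", "event_version"}
# snake_case with at least two parts, e.g. "enabled_feature".
NAME_PATTERN = re.compile(r"^[a-z]+(_[a-z0-9]+)+$")

def validate_event(event: dict) -> list[str]:
    """Return a list of problems; an empty list means the event passes."""
    problems = []
    name = event.get("name", "")
    if not NAME_PATTERN.match(name):
        problems.append(f"bad event name: {name!r}")
    props = event.get("properties", {})
    # user_id may be None for anonymous users, but the key must be present.
    for key in sorted(REQUIRED - set(props)):
        problems.append(f"missing property: {key}")
    version = props.get("event_version")
    if "event_version" in props and not (isinstance(version, int) and version >= 1):
        problems.append("event_version must be a positive integer")
    return problems

good = {
    "name": "enabled_feature",
    "properties": {
        "user_id": None,
        "account_id": "a1",
        "timestamp": "2025-04-09T12:00:00Z",
        "feature_key": "bulk_upload",
        "plan": "pro",
        "event_version": 1,
    },
}
bad = {"name": "Enabled Feature", "properties": {"user_id": "u1"}}
good_problems = validate_event(good)
bad_problems = validate_event(bad)
```

A check like this can run in unit tests for your tracking calls and again at ingestion, so schema drift is caught before it reaches dashboards.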

If your identity model is shaky, your adoption metrics will be noisy: funnels won’t line up, retention will look worse than it is, and “active users” will be inflated by duplicates. The goal is to support three views at once: anonymous visitors, logged-in users, and account/workspace activity.

Start every device/session with an anonymous_id (cookie/localStorage). The moment a user authenticates, link that anonymous history to an identified user_id.

Link identities when the user has proven ownership of the account (successful login, magic link verification, SSO). Avoid linking on weak signals (email typed into a form) unless you clearly separate it as “pre-auth.”

Treat auth transitions as events:

- login_success (includes user_id, account_id, and the current anonymous_id)
- logout
- account_switched (from account_id → account_id)

Important: don’t change the anonymous cookie on logout. If you rotate it, you’ll fragment sessions and inflate unique users. Instead, keep the stable anonymous_id, but stop attaching user_id after logout.

Define merge rules explicitly:

- … user_id. If you must merge by email, do it server-side and only for verified emails. Keep an audit trail.
- … account_id/workspace_id generated by your system, not a mutable name.

When merging, write a mapping table (old → new) and apply it consistently at query time or via a backfill job. This prevents “two users” showing up in cohorts.

Store and send:

- anonymous_id (stable per browser/device)
- user_id (stable per person)
- account_id (stable per workspace)

With those three keys, you can measure behavior pre-login, per-user adoption, and account-level adoption without double counting.
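The linking and query-time resolution described in this section can be sketched as follows. This is an illustration under simple assumptions: an in-memory dict stands in for the mapping table, login_success is the only linking signal, and the helper names are hypothetical.

```python
def link_identity(mapping: dict[str, str], anonymous_id: str, user_id: str) -> None:
    """Record anonymous_id -> user_id after a verified login."""
    mapping[anonymous_id] = user_id

def resolve_actor(event: dict, mapping: dict[str, str]) -> str:
    """Pick the canonical actor for an event at query time."""
    if event.get("user_id"):
        return event["user_id"]
    # Fall back to the linked user, or stay anonymous if never linked.
    return mapping.get(event["anonymous_id"], event["anonymous_id"])

events = [
    {"name": "viewed_pricing_page", "anonymous_id": "anon-1", "user_id": None},
    {"name": "login_success", "anonymous_id": "anon-1", "user_id": "user-42"},
    {"name": "enabled_feature", "anonymous_id": "anon-1", "user_id": "user-42"},
    {"name": "viewed_pricing_page", "anonymous_id": "anon-2", "user_id": None},
]

mapping: dict[str, str] = {}
for e in events:
    if e["name"] == "login_success":
        link_identity(mapping, e["anonymous_id"], e["user_id"])

# Pre-login activity on anon-1 is now attributed to user-42.
unique_actors = {resolve_actor(e, mapping) for e in events}
```

Note that this simple fallback also attributes post-logout anonymous events on the same browser to the linked user. On shared devices that may be wrong, so you may want to limit the fallback to events that occurred before the first login.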

Where you track events changes what you can trust. Browser events tell you what people attempted to do; server events tell you what actually happened.

Use client-side tracking for UI interactions and context you only have in the browser. Typical examples:

Batch events to reduce network chatter: queue in memory, flush every N seconds or at N events, and also flush on visibilitychange/page hide.
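The batching logic itself is language-agnostic. Here is a minimal sketch in Python (a browser client would implement the same idea in JavaScript or TypeScript); the class name, defaults, and injectable clock are assumptions made so the example is easy to test.

```python
import time
from typing import Callable, Optional

class EventBatcher:
    """Queue events in memory; flush at max_events or after max_age seconds."""

    def __init__(self, send: Callable[[list], None], max_events: int = 20,
                 max_age: float = 5.0, clock: Callable[[], float] = time.monotonic):
        self._send = send
        self._max_events = max_events
        self._max_age = max_age
        self._clock = clock
        self._queue: list = []
        self._oldest: Optional[float] = None  # time the oldest queued event arrived

    def _is_stale(self) -> bool:
        return self._oldest is not None and self._clock() - self._oldest >= self._max_age

    def track(self, event: dict) -> None:
        if not self._queue:
            self._oldest = self._clock()
        self._queue.append(event)
        if len(self._queue) >= self._max_events or self._is_stale():
            self.flush()

    def flush(self) -> None:
        """Send everything queued; also call this on page hide or shutdown."""
        if self._queue:
            batch, self._queue = self._queue, []
            self._oldest = None
            self._send(batch)

sent: list = []
now = [0.0]  # fake clock so the example is deterministic
batcher = EventBatcher(sent.append, max_events=3, max_age=5.0, clock=lambda: now[0])
for n in (1, 2, 3):
    batcher.track({"n": n})  # the third event triggers a size-based flush
```

In this sketch the age check only runs when a new event arrives. A real client would also schedule a timer so a quiet queue still flushes.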

Use server-side tracking for any event that represents a completed outcome or a billing/security-sensitive action:

Server-side tracking is usually more accurate because it isn’t affected by ad blockers, page reloads, or flaky client connectivity.

A practical pattern is: track intent in the client and success on the server.

For example, emit feature_x_clicked_enable (client) and feature_x_enabled (server). Then enrich server events with client context by passing a lightweight context_id (or request ID) from the browser to the API.
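A rough sketch of how the two event streams might be joined on context_id is shown below. The event dict shape and the join_intent_with_outcome helper are hypothetical; only the two event names and the context_id idea come from the pattern above.

```python
def join_intent_with_outcome(client_events: list[dict], server_events: list[dict]) -> list[dict]:
    """Pair each client-side intent with whether the server confirmed success."""
    succeeded = {
        e["context_id"] for e in server_events if e["name"] == "feature_x_enabled"
    }
    joined = []
    for e in client_events:
        if e["name"] == "feature_x_clicked_enable":
            joined.append({
                "context_id": e["context_id"],
                "page": e.get("page"),  # browser-only context carried over
                "completed": e["context_id"] in succeeded,
            })
    return joined

client = [
    {"name": "feature_x_clicked_enable", "context_id": "c1", "page": "/settings"},
    {"name": "feature_x_clicked_enable", "context_id": "c2", "page": "/onboarding"},
]
server = [{"name": "feature_x_enabled", "context_id": "c1"}]

joined = join_intent_with_outcome(client, server)
completion_rate = sum(r["completed"] for r in joined) / len(joined)
```

The gap between intent and success (here, c2) is often where validation errors, permission problems, or timeouts show up.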

Add resiliency where events are most likely to drop:

- … localStorage/IndexedDB, retry with exponential backoff, cap retries, and dedupe by event_id.

This mix gives you rich behavioral detail without sacrificing trustworthy adoption metrics.
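The retry and dedupe ideas can be sketched in a few lines. The defaults below (base delay, factor, cap, retry count) are illustrative assumptions, and the in-memory set stands in for whatever store your ingestion layer actually uses.

```python
def backoff_delays(base: float = 1.0, factor: float = 2.0,
                   max_retries: int = 5, cap: float = 30.0) -> list[float]:
    """Delays (in seconds) before each retry: exponential growth, capped."""
    return [min(cap, base * factor ** attempt) for attempt in range(max_retries)]

class Deduper:
    """Accept each event_id once, so retried sends do not double count."""

    def __init__(self) -> None:
        self._seen: set[str] = set()

    def accept(self, event: dict) -> bool:
        event_id = event["event_id"]
        if event_id in self._seen:
            return False
        self._seen.add(event_id)
        return True

delays = backoff_delays(max_retries=6)

deduper = Deduper()
incoming = [
    {"event_id": "e1", "name": "enabled_feature"},
    {"event_id": "e1", "name": "enabled_feature"},  # retry of the same event
    {"event_id": "e2", "name": "exported_report"},
]
stored = [e for e in incoming if deduper.accept(e)]
```

A production deduper would bound its memory, for example by only remembering IDs for a limited time window, and many clients add random jitter to the delays.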

A feature-adoption analytics app is mainly a pipeline: capture events reliably, store them cheaply, and query them fast enough that people trust and use the results.

Start with a simple, separable set of services:

If you want to prototype an internal analytics web app quickly, a vibe-coding platform like Koder.ai can help you stand up the dashboard UI (React) and a backend (Go + PostgreSQL) from a chat-driven spec—useful for getting an initial “working slice” before you harden the pipeline.

Use two layers:

Choose the freshness your team actually needs:

Many teams do both: real-time counters for “what’s happening now,” plus nightly jobs that recompute canonical metrics.

Design for growth early by partitioning:

Also plan retention (e.g., 13 months raw, longer aggregates) and a replay path so you can fix bugs by reprocessing events rather than patching dashboards.

Good analytics starts with a model that can answer common questions quickly (funnels, retention, feature usage) without turning every query into a custom engineering project.

Most teams do best with two stores:

This split keeps your product database lean while making analytics queries cheaper and faster.

A practical baseline looks like this:

In the warehouse, denormalize what you query often (e.g., copy account_id onto events) to avoid expensive joins.

Partition raw_events by time (daily is common) and optionally by workspace/app. Apply retention by event type:

This prevents “infinite growth” from quietly becoming your biggest analytics problem.

Treat quality checks as part of modeling, not a later clean-up:

Store validation results (or a rejected-events table) so you can monitor instrumentation health and fix issues before dashboards drift.

Once your events are flowing, the next step is turning raw clicks into metrics that answer: “Is this feature actually getting adopted, and by whom?” Focus on four views that work together: funnels, cohorts, retention, and paths.

Define a funnel per feature so you can see where users drop off. A practical pattern is:

- … feature_used)

Keep funnel steps tied to events you trust and name them consistently. If “first use” can happen in multiple ways, treat it as a step with OR conditions (e.g., import_started OR integration_connected).
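Here is a minimal sketch of an ordered funnel where each step is a set of event names, so a step with several names behaves as an OR condition. The feature_viewed step and the integer timestamps are assumptions for illustration.

```python
from collections import defaultdict

def funnel_counts(events: list[dict], steps: list[set]) -> list[int]:
    """Count users reaching each step, in order.

    Each step is a set of event names; any name in the set satisfies it.
    """
    by_user = defaultdict(list)
    for e in sorted(events, key=lambda e: e["timestamp"]):
        by_user[e["user_id"]].append(e["name"])
    counts = [0] * len(steps)
    for names in by_user.values():
        step = 0
        for name in names:
            # Only advance when the event matches the next expected step.
            if step < len(steps) and name in steps[step]:
                counts[step] += 1
                step += 1
    return counts

steps = [
    {"feature_viewed"},                          # hypothetical exposure event
    {"import_started", "integration_connected"},  # "first use" as an OR step
    {"feature_used"},
]
events = [
    {"user_id": "u1", "name": "feature_viewed", "timestamp": 1},
    {"user_id": "u1", "name": "import_started", "timestamp": 2},
    {"user_id": "u1", "name": "feature_used", "timestamp": 3},
    {"user_id": "u2", "name": "feature_viewed", "timestamp": 1},
    {"user_id": "u2", "name": "integration_connected", "timestamp": 2},
    {"user_id": "u3", "name": "feature_used", "timestamp": 1},  # out of order
    {"user_id": "u3", "name": "feature_viewed", "timestamp": 2},
]
counts = funnel_counts(events, steps)
```

Because steps must occur in order, u3's early feature_used does not count toward the last step. Whether to enforce ordering or a time window between steps is a design choice worth writing into your metric definitions.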

Cohorts help you measure improvement over time without mixing old and new users. Common cohorts include:

Track adoption rates within each cohort to see if recent onboarding or UI changes are helping.

Retention is most useful when tied to a feature, not just “app opens.” Define it as repeating the feature’s core event (or value action) on Day 7/30. Also track “time to second use”—it’s often more sensitive than raw retention.
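Both measures are simple to compute once you have the dates on which a user fired the feature's core event. The sketch below assumes the classic "active exactly on day N after first use" definition of Day-N retention; windowed variants (any use within N days) are also common, so treat the definition as an assumption to confirm with your team.

```python
from datetime import date
from typing import Optional

def day_n_retained(usage_dates: list[date], n: int) -> bool:
    """True if the feature was used exactly n days after its first use."""
    if not usage_dates:
        return False
    first = min(usage_dates)
    return any((d - first).days == n for d in usage_dates)

def days_to_second_use(usage_dates: list[date]) -> Optional[int]:
    """Days between the first and second use, or None if used only once."""
    ordered = sorted(usage_dates)
    if len(ordered) < 2:
        return None
    return (ordered[1] - ordered[0]).days

uses = [date(2025, 1, 1), date(2025, 1, 4), date(2025, 1, 8)]
retained_d7 = day_n_retained(uses, 7)
retained_d30 = day_n_retained(uses, 30)
second_use_gap = days_to_second_use(uses)
```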

Break metrics down by dimensions that explain behavior: plan, role, industry, device, and acquisition channel. Segments often reveal that adoption is strong for one group and near-zero for another.

Add path analysis to find common sequences before and after adoption (e.g., users who adopt often visit pricing, then docs, then connect an integration). Use this to refine onboarding prompts and remove dead ends.

Dashboards fail when they try to serve everyone with one “master view.” Instead, design a small set of focused pages that match how different people make decisions, and make each page answer a clear question.

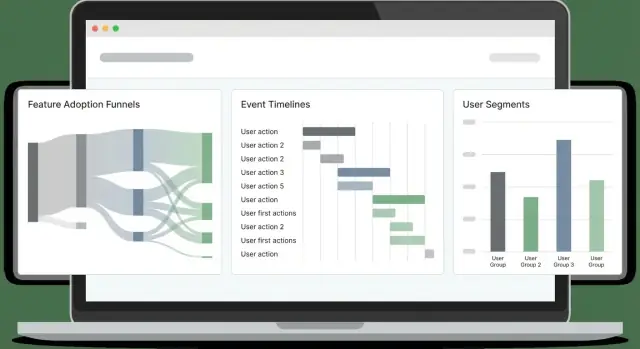

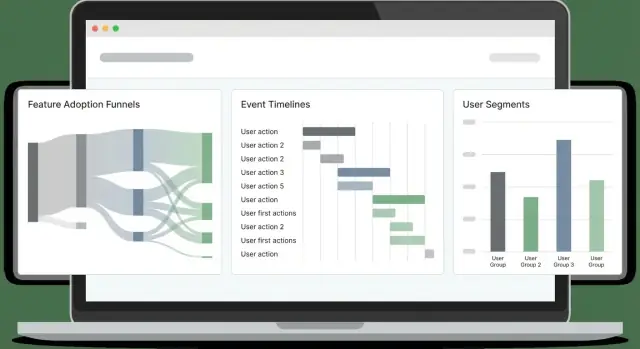

An executive overview should be a quick health check: adoption trend, active users, top features, and notable changes since the last release. A feature deep dive should be built for PMs and engineers: where users start, where they drop off, and what segments behave differently.

A simple structure that works well:

Include trend charts for the “what,” segmented breakdowns for the “who,” and drill-down for the “why.” The drill-down should let someone click a bar/point and see example users or workspaces (with appropriate permissions), so teams can validate patterns and investigate real sessions.

Keep filters consistent across pages so users don’t have to relearn controls. The most useful filters for feature adoption tracking are:

Dashboards become part of workflows when people can share exactly what they’re seeing. Add:

If you’re building this into a product analytics web app, consider a /dashboards page with “Pinned” saved views so stakeholders always land on the few reports that matter.

Dashboards are great for exploration, but teams usually notice problems when a customer complains. Alerts flip that: you learn about a breakage minutes after it happens, and you can tie it back to what changed.

Start with a few high-signal alerts that protect your core adoption flow:

Keep alert definitions readable and version-controlled (even a simple YAML file in your repo) so they don’t become tribal knowledge.

Basic anomaly detection can be very effective without fancy ML:
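As one example of a non-ML check (an assumption for illustration, not necessarily the only approach worth using), you can compare today's value with a trailing baseline and flag large deviations. The function name and the z-score threshold of 3 are hypothetical defaults.

```python
from statistics import mean, stdev

def is_anomalous(history: list[float], today: float, z_threshold: float = 3.0) -> bool:
    """Flag today's value if it is far from the trailing mean, in standard deviations."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > z_threshold

# Daily counts of a core event over the previous week (made-up numbers).
last_week = [100, 104, 98, 101, 97, 102, 99]
drop_detected = is_anomalous(last_week, 40)
normal_day = is_anomalous(last_week, 101)
```

For metrics with strong weekly patterns, comparing against the same weekday in previous weeks usually produces fewer false alarms than a flat trailing window.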

Add a release marker stream directly into charts: deploys, feature flag rollouts, pricing changes, onboarding tweaks. Each marker should include a timestamp, owner, and a short note. When metrics shift, you’ll immediately see likely causes.

Send alerts to email and Slack-like channels, but support quiet hours and escalation (warn → page) for severe issues. Every alert needs an owner and a runbook link (even a short /docs/alerts page) describing what to check first.

Analytics data quickly becomes personal data if you’re not careful. Treat privacy as part of your tracking design, not a legal afterthought: it reduces risk, builds trust, and prevents painful rework.

Respect consent requirements and let users opt out where needed. Practically, that means your tracking layer should check a consent flag before sending events, and it should be able to stop tracking mid-session if a user changes their mind.
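A consent check in the tracking layer can be as small as the following sketch. The class and method names are hypothetical, and the default of "no consent until granted" is an assumption that suits opt-in regimes.

```python
from typing import Callable

class ConsentGatedTracker:
    """Only forward events while the user has granted consent."""

    def __init__(self, send: Callable[[dict], None]) -> None:
        self._send = send
        self._consented = False  # assume no consent until explicitly granted

    def set_consent(self, granted: bool) -> None:
        """Can be called mid-session when the user changes their mind."""
        self._consented = granted

    def track(self, event: dict) -> bool:
        """Send the event if allowed; return whether it was sent."""
        if not self._consented:
            return False
        self._send(event)
        return True

delivered: list[dict] = []
tracker = ConsentGatedTracker(delivered.append)

tracker.track({"name": "viewed_pricing_page"})   # dropped: no consent yet
tracker.set_consent(True)
tracker.track({"name": "started_trial"})         # sent
tracker.set_consent(False)
tracker.track({"name": "enabled_feature"})       # dropped: consent withdrawn
```

If you also batch events, remember to clear any queued events when consent is withdrawn.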

For regions with stricter rules, consider “consent-gated” features:

Minimize sensitive data: avoid raw emails in events; use hashed/opaque IDs. Event payloads should describe behavior (what happened), not identity (who the person is). If you need to connect events to an account, send an internal user_id/account_id and keep the mapping in your database with proper security controls.
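If you have no internal ID and must derive an opaque identifier from something like an email, a keyed hash is safer than a plain hash, because unkeyed hashes of emails can be reversed by guessing common addresses. The sketch below uses Python's standard hmac module; the function name, the normalization step, and the secret handling are assumptions.

```python
import hashlib
import hmac

def opaque_id(value: str, secret: bytes) -> str:
    """Derive a stable, non-reversible identifier from a sensitive value."""
    normalized = value.strip().lower()  # so "A@x.com " and "a@x.com" match
    return hmac.new(secret, normalized.encode("utf-8"), hashlib.sha256).hexdigest()

SECRET = b"replace-with-a-secret-from-your-config"  # placeholder, never hard-code

id_a = opaque_id("Ada@example.com ", SECRET)
id_b = opaque_id("ada@example.com", SECRET)
id_other_key = opaque_id("ada@example.com", b"a-different-secret")
```

Keep the secret on the server only, and remember that a stable pseudonymous ID may still count as personal data under some regulations.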

Also avoid collecting:

Document what you collect and why; link to a clear privacy page. Create a lightweight “tracking dictionary” that explains each event, its purpose, and retention period. In your product UI, link to /privacy and keep it readable: what you track, what you don’t, and how to opt out.

Implement role-based access so only authorized teams can view user-level data. Most people only need aggregated dashboards; reserve raw event views for a small group (e.g., data/product ops). Add audit logs for exports and user lookups, and set retention limits so old data expires automatically.

Done well, privacy controls won’t slow analysis—they’ll make your analytics system safer, clearer, and easier to maintain.

Shipping analytics is like shipping a feature: you want a small, verifiable first release, then steady iteration. Treat tracking work as production code with owners, reviews, and tests.

Begin with a tight set of golden events for one feature area (for example: Feature Viewed, Feature Started, Feature Completed, Feature Error). These should map directly to questions the team will ask weekly.

Keep scope narrow on purpose: fewer events means you can validate quality quickly, and you’ll learn which properties you actually need (plan, role, source, feature variant) before scaling out.

Use a checklist before you call tracking “done”:

Add sample queries you can run in both staging and production. Examples:

- … feature_name” (catch typos like Search vs. search)

Make instrumentation part of your release process:

Plan for change: deprecate events instead of deleting them, version properties when meaning changes, and schedule periodic audits.

When you add a new required property or fix a bug, decide whether you need a backfill (and document the time window where data is partial).

Finally, keep a lightweight tracking guide in your docs and link it from dashboards and PR templates. A good starting point is a short checklist like /blog/event-tracking-checklist.

Start by writing down what “adoption” means for your product:

Then choose the definition(s) that best match how your feature delivers value and turn them into measurable events.

Pick a small set you can review weekly plus a quick post-release check. Common adoption metrics include:

Add explicit thresholds (e.g., “≥ 25% adoption in 30 days”) so results lead to decisions, not debate.

Define core entities up front so reports stay understandable:

Use a consistent convention like verb_noun and stick to one tense (past or present) across the product.

Practical rules:

Create a minimal “event contract” so every event can be segmented and joined later. A common baseline:

- user_id (nullable if anonymous)

Track intent in the browser and success on the server.

This hybrid approach reduces data loss from ad blockers/reloads while keeping adoption metrics trustworthy. If you need to connect context, pass a context_id (request ID) from client → API and attach it to server events.

Use three stable keys:

- anonymous_id (per browser/device)
- user_id (per person)
- account_id (per workspace)

Link anonymous → identified only after strong proof (successful login, verified magic link, SSO). Track auth transitions as events (login_success, logout, account_switched) and avoid rotating the anonymous cookie on logout to prevent fragmented sessions and inflated uniques.

Adoption is rarely a single click, so model it as a funnel:

If “first use” can happen multiple ways, define that step with OR conditions (e.g., import_started OR integration_connected) and keep steps tied to events you trust (often server-side for outcomes).

Start with a few focused pages mapped to decisions:

Keep filters consistent across pages (date range, plan, account attributes, region, app version). Add saved views and CSV export so stakeholders can share exactly what they’re seeing.

Build safeguards into your pipeline and process:

- … event_version and deprecate rather than delete

For each event, capture at minimum user_id (or anonymous_id), account_id (if applicable), timestamp, and a small set of relevant properties (plan/role/device/flag).

- … clicked vs pressed)
- … report_exported vs every hover)
- … feature_key (e.g., bulk_upload) rather than relying on display names

Document names and when they fire in an instrumentation spec stored with your code.

- anonymous_id
- account_id (for B2B/multi-seat)
- timestamp (server-generated when possible)
- feature_key
- plan (or tier)

Keep optional properties limited and consistent (same keys and value formats across events).

- login_success
- logout
- account_switched
- import_started
- integration_connected

Also treat privacy as design: consent gating, avoid raw emails/free-text in events, and restrict access to user-level data with roles + audit logs.