Sep 07, 2025·8 min

How to Build a Web App for Feature Rollback Decisions

Learn how to design and build a web app that centralizes rollback signals, approvals, and audit trails—so teams can decide faster and reduce risk.


A “rollback decision” is the moment a team decides whether to undo a change that’s already in production—disabling a feature flag, reverting a deployment, rolling back a config, or pulling a release. It sounds simple until you’re in the middle of an incident: signals conflict, ownership is unclear, and every minute without a decision has a cost.

Teams struggle because the inputs are scattered. Monitoring graphs live in one tool, support tickets in another, deploy history in CI/CD, feature flags somewhere else, and the “decision” is often just a rushed chat thread. Later, when someone asks “why did we roll back?” the evidence is gone—or painful to reconstruct.

The goal of this web app is to create one place where:

That doesn’t mean it should be a big red button that automatically rolls things back. By default, it’s decision support: it helps people move from “we’re worried” to “we’re confident” with shared context and a clear workflow. You can add automation later, but the first win is reducing confusion and speeding up alignment.

A rollback decision touches multiple roles, so the app should serve different needs without forcing everyone into the same view:

When this works well, you don’t just “roll back faster.” You make fewer panic moves, keep a cleaner audit trail, and turn each production scare into a repeatable, calmer decision process.

A rollback decision app works best when it mirrors how people actually respond to risk: someone spots a signal, someone coordinates, someone decides, and someone executes. Start by defining the core roles, then design journeys around what each person needs in the moment.

On-call engineer needs speed and clarity: “What changed, what’s breaking, and what’s the safest action right now?” They should be able to propose a rollback, attach evidence, and see whether approvals are required.

Product owner needs customer impact and trade-offs: “Who is affected, how severe is it, and what do we lose if we roll back?” They often contribute context (feature intent, rollout plan, comms) and may be an approver.

Incident commander needs coordination: “Are we aligned on the current hypothesis, decision status, and next steps?” They should be able to assign owners, set a decision deadline, and keep stakeholders synchronized.

Approver (engineering manager, release captain, compliance) needs confidence: “Is this decision justified and reversible, and does it follow policy?” They require a concise decision summary plus supporting signals.

Define four clear capabilities: propose, approve, execute, and view. Many teams allow anyone on-call to propose, a small group to approve, and only a limited set to execute in production.

Most rollback decisions go sideways due to scattered context, unclear ownership, and missing logs/evidence. Your app should make ownership explicit, keep all inputs in one place, and capture a durable record of what was known at decision time.

A rollback app succeeds or fails on whether its data model matches how your team actually ships software and handles risk. Start with a small set of clear entities, then add structure (taxonomy and snapshots) that makes decisions explainable later.

At minimum, model these:

Keep relationships explicit so dashboards can answer “what’s affected?” quickly:

Decide early what must never change:

Add lightweight enums that make filtering consistent:

This structure supports fast incident triage dashboards and creates an audit trail that holds up during post-incident reviews.
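As a rough illustration of the modeling advice above, the sketch below uses frozen Python dataclasses so that a recorded decision cannot be mutated after the fact. The class and field names (`Release`, `Action`, `Decision`, `Severity`, `features_affected`) are assumptions made for this example, not a prescribed schema.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum


class Severity(Enum):
    # A lightweight enum keeps filtering consistent across dashboards.
    LOW = "low"
    HIGH = "high"
    CRITICAL = "critical"


@dataclass(frozen=True)
class Release:
    release_id: str
    # Many-to-many: one release can ship several features,
    # and a feature can appear in several releases.
    feature_ids: tuple[str, ...]


@dataclass(frozen=True)
class Action:
    action_id: str
    kind: str  # hypothetical values: "flag_disable", "deploy_revert", "config_rollback"


@dataclass(frozen=True)
class Decision:
    decision_id: str
    release_id: str
    severity: Severity
    decided_at: datetime
    # One-to-many: a single decision can trigger several actions.
    actions: tuple[Action, ...] = ()


def features_affected(decision, releases):
    """Return the feature ids shipped in the release a decision refers to."""
    for release in releases:
        if release.release_id == decision.release_id:
            return set(release.feature_ids)
    return set()
```

Because the dataclasses are frozen, assigning to a field of an existing `Decision` raises an error, which is one simple way to express "this must never change" in application code. A database would still need its own constraints.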

Before you build workflows and dashboards, define what your team means by “rollback.” Different teams use the same word to describe very different actions—with very different risk profiles. Your app should make the type of rollback explicit, not implied.

Most teams need three core mechanisms:

In the UI, treat these as distinct “action types” with their own prerequisites, expected impact, and verification steps.

A rollback decision often depends on where the issue is happening. Model scope explicitly:

Scope can be a region such as us-east or eu-west, a specific cluster, or a percentage rollout. Your app should let a reviewer see “disable flag in prod, EU only” vs “global prod rollback,” because those are not equivalent decisions.
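One possible way to make scope explicit is a small value object that every proposal must carry. The sketch below is illustrative only; `RollbackScope` and its fields are hypothetical names, and your own scope dimensions may differ.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RollbackScope:
    environment: str                       # e.g. "prod" or "staging"
    region: Optional[str] = None           # None means every region
    rollout_percent: Optional[int] = None  # None means all traffic

    def is_global(self) -> bool:
        """True when the action applies to the whole environment."""
        return self.region is None and self.rollout_percent is None

    def describe(self) -> str:
        """Short human-readable label for reviewers."""
        parts = [self.environment, self.region or "all regions"]
        if self.rollout_percent is not None:
            parts.append(f"{self.rollout_percent}% of traffic")
        return ", ".join(parts)
```

For example, `RollbackScope("prod", "eu-west").describe()` yields `"prod, eu-west"`, while `RollbackScope("prod").is_global()` is true, so the UI can flag the broader action clearly.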

Decide what the app is allowed to trigger:

Make actions idempotent to avoid conflicting clicks during an incident:

Clear definitions here keep your approval workflow calm and your incident timeline clean.
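A minimal sketch of idempotent execution, assuming each action can be identified by its type, target, and scope: repeated requests with the same key return the first result instead of running again. `ActionExecutor` and `idempotency_key` are hypothetical names, and the in-memory dictionary stands in for what would normally be a unique constraint in a shared database.

```python
import hashlib


def idempotency_key(action_type: str, target: str, scope: str) -> str:
    """Derive a stable key so identical requests map to the same record."""
    raw = f"{action_type}|{target}|{scope}"
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


class ActionExecutor:
    def __init__(self):
        # key -> result of the first execution (in-memory for illustration only)
        self._results = {}

    def execute(self, action_type, target, scope, run):
        """Call run() at most once per (action_type, target, scope)."""
        key = idempotency_key(action_type, target, scope)
        if key in self._results:
            # A second click during the incident gets the original outcome.
            return self._results[key]
        result = run()
        self._results[key] = result
        return result
```

This version is not safe across multiple processes or concurrent threads; it only shows the shape of the check.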

Rollback decisions get easier when the team agrees on what “good evidence” looks like. Your app should turn scattered telemetry into a consistent decision packet: signals, thresholds, and the context that explains why those numbers changed.

Build a checklist that always appears for a release or feature under review. Keep it short, but complete:

The goal isn’t to show every chart—it’s to confirm the same core signals were checked every time.

Single spikes happen. Decisions should be driven by sustained deviation and rate of change.

Support both:

In the UI, show a small “trend strip” next to each metric (last 60–120 minutes) so reviewers can tell whether the problem is growing, stable, or recovering.
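To make "sustained deviation" concrete, here is a small sketch that treats a metric as breaching only when the most recent samples all exceed a threshold, plus a helper for comparing against a baseline. The function names and the one-sample-per-minute assumption are illustrative, not part of any monitoring tool's API.

```python
def sustained_breach(samples, threshold, min_consecutive):
    """Return True if the latest `min_consecutive` samples all exceed `threshold`.

    `samples` is ordered oldest first, assumed here to be one value per minute.
    """
    if min_consecutive <= 0 or len(samples) < min_consecutive:
        return False
    return all(value > threshold for value in samples[-min_consecutive:])


def relative_change(current, baseline):
    """Fractional change versus a baseline, or None if the baseline is zero."""
    if baseline == 0:
        return None
    return (current - baseline) / baseline
```

With this rule, a series like `[0.5, 3.0, 0.4]` does not count as a breach of a 2.0 threshold over two minutes, because the spike did not persist.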

Numbers without context waste time. Add a “Known changes” panel that answers:

This panel should pull from release notes, feature flags, and deployments, and it should make “nothing changed” an explicit statement—not an assumption.

When someone needs details, provide quick links that open the right place immediately (dashboards, traces, tickets) via /integrations, without turning your app into yet another monitoring tool.

A rollback decision app earns its keep when it turns “everyone in a chat thread” into a clear, time-boxed workflow. The goal is simple: one accountable proposer, a defined set of reviewers, and a single final approver—without slowing down urgent action.

The proposer starts a Rollback Proposal tied to a specific release/feature. Keep the form quick, but structured:

The proposal should immediately generate a shareable link and notify the assigned reviewers.

Reviewers should be prompted to add evidence and a stance:

To keep discussions productive, store notes next to the proposal (not scattered across tools), and encourage linking to tickets or monitors using relative links like /incidents/123 or /releases/45.

Define a final approver (often the on-call lead or product owner). Their approval should:

Rollbacks are time-sensitive, so bake in deadlines:

If the SLA is missed, the app should escalate—first to a backup reviewer, then to an on-call manager—while keeping the decision record unchanged and auditable.
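The escalation rule can be sketched as a pure function that picks who should currently be notified, which makes it easy to test separately from the notification code. The policy here, moving one step along the chain for every full SLA window that passes, is an assumption for illustration, as are the role names.

```python
from datetime import datetime, timedelta, timezone


def escalation_target(requested_at, now, sla, chain):
    """Return the entry in `chain` that should currently own the review.

    `chain` is ordered from first responder to last resort, for example
    ["reviewer", "backup_reviewer", "oncall_manager"].
    """
    if sla <= timedelta(0):
        raise ValueError("sla must be positive")
    if not chain:
        raise ValueError("chain must not be empty")
    elapsed = now - requested_at
    missed_windows = max(elapsed // sla, 0)  # timedelta // timedelta gives an int
    index = min(missed_windows, len(chain) - 1)
    return chain[index]
```

The function only reads timestamps and never edits the decision record, which matches the goal of escalating while keeping the record unchanged.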

Sometimes you can’t wait. Add a Break-glass Execute path that allows immediate action while requiring:

Execution shouldn’t end at “button clicked.” Capture confirmation steps (rollback completed, flags updated, monitoring checked) and close the record only when verification is signed off.
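The workflow above can be read as a small state machine. The sketch below assumes five statuses and treats break-glass as the only path that skips approval, requiring a written reason; the status names and that requirement are assumptions for this example rather than a fixed specification.

```python
# Allowed transitions for the normal (non-emergency) path.
ALLOWED = {
    "proposed": {"approved", "rejected"},
    "approved": {"executed"},
    "executed": {"verified"},
    "rejected": set(),
    "verified": set(),
}


class Proposal:
    def __init__(self):
        self.status = "proposed"
        self.history = ["proposed"]
        self.break_glass_reason = None

    def transition(self, new_status):
        """Move along the normal path, rejecting any skipped step."""
        if new_status not in ALLOWED[self.status]:
            raise ValueError(f"cannot go from {self.status} to {new_status}")
        self.status = new_status
        self.history.append(new_status)

    def break_glass_execute(self, reason):
        """Emergency path: execute without approval, but only with a reason."""
        if self.status != "proposed":
            raise ValueError("break-glass only applies to open proposals")
        if not reason or not reason.strip():
            raise ValueError("a reason is required for break-glass execution")
        self.break_glass_reason = reason.strip()
        self.status = "executed"
        self.history.append("executed")
```

Either path ends in `executed`, and the record still has to reach `verified` before it is considered closed.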

When a release is misbehaving, people don’t have time to “figure out the tool.” Your UI should reduce cognitive load: show what’s happening, what’s been decided, and what the safe next actions are—without burying anyone in charts.

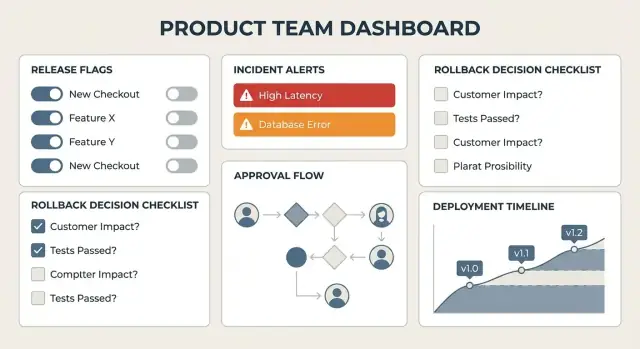

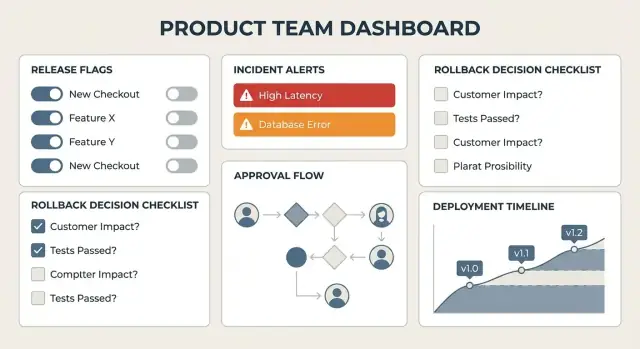

Overview (home dashboard). This is the triage entry point. It should answer three questions in seconds: What’s currently at risk? What decisions are pending? What changed recently? A good layout is a left-to-right scan: active incidents, pending approvals, and a short “latest releases / flag changes” stream.

Incident/Decision page. This is where the team converges. Pair a narrative summary (“What we’re seeing”) with live signals and a clear decision panel. Keep the decision controls in a consistent location (right rail or sticky footer) so people don’t hunt for “Propose rollback.”

Feature page. Treat this as the “owner view”: current rollout state, recent incidents linked to the feature, associated flags, known risky segments, and a history of decisions.

Release timeline. A chronological view of deployments, flag ramps, config changes, and incidents. This helps teams connect cause and effect without jumping between tools.

Use prominent, consistent status badges:

Avoid subtle color-only cues. Pair color with labels and icons, and keep wording consistent across every screen.

A decision pack is a single, shareable snapshot that answers: Why are we considering a rollback, and what are the options?

Include:

This view should be easy to paste into chat and easy to export later for reporting.

Design for speed and clarity:

The goal isn’t flashy dashboards—it’s a calm interface that makes the right action feel obvious.

Integrations are what turn a rollback app from “a form with opinions” into a decision cockpit. The goal isn’t to ingest everything—it’s to reliably pull in the few signals and controls that let a team decide and act quickly.

Start with five sources most teams already use:

Use the least fragile method that still meets your speed requirements:

Different systems describe the same thing differently. Normalize incoming data into a small, stable schema such as:

- source (deploy/flags/monitoring/ticketing/chat)
- entity (release, feature, service, incident)
- timestamp (UTC)
- environment (prod/staging)
- severity and metric_values
- links (relative links to internal pages like /incidents/123)

This lets the UI show a single timeline and compare signals without bespoke logic per tool.
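As a sketch of what normalization might look like, the example below maps one tool-specific payload into the shared schema. The incoming payload shape (`release`, `finished_at`, `env`) is entirely hypothetical, as are the `NormalizedEvent` class and the `normalize_deploy_event` adapter; each real integration would need its own mapping.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical mapping from tool-specific names to the app's environment labels.
ENVIRONMENT_ALIASES = {"production": "prod", "prod": "prod", "staging": "staging"}


@dataclass(frozen=True)
class NormalizedEvent:
    source: str          # deploy / flags / monitoring / ticketing / chat
    entity: str          # e.g. "release:45"
    timestamp: datetime  # always stored in UTC
    environment: str     # prod / staging
    severity: Optional[str] = None
    metric_values: dict = field(default_factory=dict)
    links: tuple = ()


def normalize_deploy_event(payload: dict) -> NormalizedEvent:
    """Convert an assumed deploy-tool payload into the shared schema."""
    release_id = str(payload["release"])
    return NormalizedEvent(
        source="deploy",
        entity=f"release:{release_id}",
        # Assumes the tool reports completion time as Unix seconds.
        timestamp=datetime.fromtimestamp(payload["finished_at"], tz=timezone.utc),
        environment=ENVIRONMENT_ALIASES.get(payload["env"], payload["env"]),
        links=(f"/releases/{release_id}",),
    )
```

Keeping one small adapter per source means the timeline code only ever deals with `NormalizedEvent` values.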

Integrations fail; the app shouldn’t become silent or misleading.

When the system can’t verify a signal, say so plainly—uncertainty is still useful information.
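One simple way to surface that uncertainty is to label every signal with a freshness status based on the last successful sync. The status names and the `signal_status` helper below are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone


def signal_status(last_success, now, max_age):
    """Classify a signal as "fresh", "stale", or "unknown".

    `last_success` is the time of the last successful fetch, or None if the
    integration has never returned data.
    """
    if last_success is None:
        return "unknown"
    if now - last_success > max_age:
        return "stale"
    return "fresh"
```

The UI can then show "stale" or "unknown" next to a metric instead of silently displaying an old value as if it were current.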

When a rollback is on the table, the decision itself is only half the story. The other half is making sure you can later answer: why did we do this, and what did we know at the time? A clear audit trail reduces second-guessing, speeds up reviews, and makes handoffs between teams calmer.

Treat your audit trail as an append-only record of notable actions. For each event, capture:

This makes the audit log useful without forcing you into a complex “compliance” narrative.
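A minimal sketch of an append-only log follows. It adds one technique the text above does not require: each entry stores a hash of the previous entry, so later edits become detectable. The event fields (`actor`, `action`, `at`, `details`) and the `AuditLog` class are assumptions for this example; a production system would also rely on database permissions to block updates and deletes.

```python
import hashlib
import json


def _digest(body: dict) -> str:
    """Hash the entry body deterministically (keys sorted)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    def __init__(self):
        self._entries = []

    def append(self, actor, action, at, details):
        """Add an event; `at` is an ISO-8601 string, `details` is JSON-serializable."""
        prev_hash = self._entries[-1]["hash"] if self._entries else ""
        body = {"actor": actor, "action": action, "at": at,
                "details": details, "prev_hash": prev_hash}
        entry = dict(body, hash=_digest(body))
        self._entries.append(entry)
        return dict(entry)

    def entries(self):
        """Return copies so callers cannot modify stored events."""
        return tuple(dict(entry) for entry in self._entries)

    def verify(self):
        """Check that no stored entry was altered or reordered."""
        prev_hash = ""
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

The class deliberately exposes no update or delete method; the only way to change history is to append a new event.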

Metrics and dashboards change minute by minute. To avoid “moving target” confusion, store evidence snapshots whenever a proposal is created, updated, approved, or executed.

A snapshot can include: the query used (e.g., error rate for feature cohort), the values returned, charts/percentiles, and links to the original source. The goal is not to mirror your monitoring tool—it’s to preserve the specific signals the team relied on.

Decide retention by practicality: how long you want incident/decision history to stay searchable, and what gets archived. Offer exports that teams actually use:

Add fast search and filters across incidents and decisions (service, feature, date range, approver, outcome, severity). Basic reporting can summarize counts of rollbacks, median time to approval, and recurring triggers—useful for product operations and post-incident reviews.
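As an example of the basic reporting mentioned above, median time to approval can be computed directly from stored timestamps. The record shape (dictionaries with `proposed_at` and `approved_at`) is an assumption for this sketch.

```python
from datetime import datetime, timezone
from statistics import median


def median_minutes_to_approval(decisions):
    """Median minutes from proposal to approval, ignoring unapproved records.

    Returns None when no decision has been approved yet.
    """
    durations = [
        (d["approved_at"] - d["proposed_at"]).total_seconds() / 60
        for d in decisions
        if d.get("approved_at") is not None
    ]
    return median(durations) if durations else None
```

The median is used rather than the mean so that one unusually slow approval does not dominate the summary.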

A rollback decision app is only useful if people trust it—especially when it can change production behavior. Security here isn’t just “who can log in”; it’s how you prevent rushed, accidental, or unauthorized actions while still moving quickly during an incident.

Offer a small set of clear sign-in paths and make the safest one the default.

Use role-based access control (RBAC) with environment scoping so permissions are different for dev/staging/production.

A practical model:

Environment scoping matters: someone might be an Operator in staging but only a Viewer in production.
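Environment-scoped RBAC can be sketched as a lookup from a user's role in a given environment to a set of capabilities. The capability names come from the propose/approve/execute/view split described earlier; the role names beyond Viewer and Operator and the exact mapping are assumptions for illustration.

```python
# Hypothetical role-to-capability mapping; adjust to your own policy.
ROLE_CAPABILITIES = {
    "viewer": {"view"},
    "proposer": {"view", "propose"},
    "approver": {"view", "propose", "approve"},
    "operator": {"view", "propose", "execute"},
}


def can(user_roles, environment, capability):
    """Check a capability for one environment.

    `user_roles` maps environment name to role, for example
    {"staging": "operator", "production": "viewer"}.
    Users with no role in an environment get no access there.
    """
    role = user_roles.get(environment)
    if role is None:
        return False
    return capability in ROLE_CAPABILITIES.get(role, set())
```

With `{"staging": "operator", "production": "viewer"}`, the same person can execute in staging but only view in production, and has no access to an environment where they hold no role.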

Rollbacks can be high-impact, so add friction where it prevents mistakes:

Log sensitive access (who viewed incident evidence, who changed thresholds, who executed rollback) with timestamps and request metadata. Make logs append-only and easy to export for reviews.

Store secrets—API tokens, webhook signing keys—in a vault (not in code, not in plain database fields). Rotate them, and revoke immediately when an integration is removed.

A rollback decision app should feel lightweight to use, but it’s still coordinating high-stakes actions. A clean build plan helps you ship an MVP quickly without creating a “mystery box” no one trusts later.

For an MVP, keep the core architecture boring:

This shape supports the most important goal: a single source of truth for what was decided and why, while letting integrations happen asynchronously (so a slow third-party API doesn’t block the UI).

Choose what your team can operate confidently. Typical combinations include:

If you’re a small team, favor fewer moving parts. One repo and one deployable service is often enough until usage proves otherwise.

If you want to accelerate the first working version without sacrificing maintainability, a vibe-coding platform like Koder.ai can be a practical starting point: you can describe the roles, entities, and workflow in chat, generate a React web UI with a Go + PostgreSQL backend, and iterate quickly on forms, timelines, and RBAC. It’s especially useful for this kind of internal tool because you can build an MVP, export the source code, and then harden integrations, audit logging, and deployment over time.

Focus tests on the parts that prevent mistakes:

Treat the app like production software from day one:

Plan the MVP around decision capture + auditability, then expand into richer integrations and reporting once teams rely on it daily.

A rollback decision is the point where the team chooses whether to undo a production change—by reverting a deploy, disabling a feature flag, rolling back a config, or pulling a release. The hard part isn’t the mechanism; it’s aligning quickly on evidence, ownership, and next steps while the incident is unfolding.

It’s for decision support first: consolidate signals, structure the proposal/review/approval flow, and preserve an audit trail. Automation can come later, but the initial value is reducing confusion and speeding up alignment with shared context.

It should serve multiple roles with tailored views:

Start with a small set of core entities:

Then make relationships explicit (e.g., Feature ↔ Release as many-to-many, Decision ↔ Action as one-to-many) so you can answer “what’s affected?” fast during an incident.

Treat “rollback” as distinct action types with different risk profiles:

The UI should force the team to pick the mechanism explicitly and capture scope (env/region/% rollout).

A practical checklist includes:

Support both static thresholds (e.g., “>2% for 10 minutes”) and baseline comparisons (e.g., “down 5% vs same day last week”), and show small trend strips so reviewers can see direction, not just a point value.

Use a simple, time-boxed flow:

Add SLAs (review/approval deadlines) and escalation to backups so the record stays clear even under time pressure.

Break-glass should allow immediate execution but increase accountability:

This keeps the team fast in true emergencies while still producing a defensible record afterward.

Make actions idempotent so repeated clicks don’t create conflicting changes:

This prevents double rollbacks and reduces chaos when multiple responders are active.

Prioritize five integration points:

Use push-based updates (such as webhooks) where immediacy matters, polling where necessary, and keep a manual fallback that’s clearly labeled and requires a reason so degraded operation remains trustworthy.

The same decision record should be understandable to all of them, without forcing identical workflows.