Apr 24, 2025·8 min

How to Build a Web App That Tracks Manual Work to Automate

Learn how to plan and build a web app that tracks manual work, captures proof and time, and turns repeated tasks into an automation-ready backlog.


Before you sketch screens or pick a database, get crisp about what you’re trying to measure. The goal isn’t “track everything employees do.” It’s to capture manual work reliably enough to decide what to automate first—based on evidence, not opinions.

Write down the recurring activities that are currently done by hand (copy/paste between systems, re-keying data, checking documents, chasing approvals, reconciling spreadsheets). For each activity, describe:

If you can’t describe it in two sentences, you’re probably mixing multiple workflows.

A tracking app succeeds when it serves everyone who touches the work—not just the person who wants the report.

Expect different motivations: operators want less admin work; managers want predictability; IT wants stable requirements.

Tracking is only useful if it connects to outcomes. Pick a small set you can compute consistently:

Define boundaries early to avoid building an accidental monster.

This app is typically not:

It can complement those systems—and sometimes replace a narrow slice—if that’s explicitly your intention. If you already use tickets, your tracking app might simply attach structured “manual effort” data to existing items (see /blog/integrations).

A tracking app succeeds or fails based on focus. If you try to capture every “busy thing” people do, you’ll collect noisy data, frustrate users, and still won’t know what to automate first. Start with a small, explicit scope that can be measured consistently.

Choose workflows that are common, repeatable, and already painful. A good starting set usually spans different kinds of manual effort, for example:

Write a simple definition everyone can apply the same way. For instance: “Any step where a person moves, checks, or transforms information without a system doing it automatically.” Include examples and a few exclusions (e.g., customer calls, creative writing, relationship management) so people don’t log everything.

Be explicit about where the workflow starts and ends:

Decide how time will be recorded: per task, per shift, or per week. “Per task” gives the best automation signal, but “per shift/week” can be a practical MVP if tasks are too fragmented. The key is consistency, not precision.

Before you pick fields, screens, or dashboards, get a clear picture of how the work actually happens today. A lightweight map will uncover what you should track and what you can ignore.

Start with a single workflow and write it in a straight line:

Trigger → steps → handoffs → outcome

Keep it concrete. “Request arrives in a shared inbox” is better than “Intake happens.” For each step, note who does it, what tool they use, and what “done” means. If there are handoffs (from Sales to Ops, from Ops to Finance), call those out explicitly—handoffs are where work disappears.

Your tracking app should highlight friction, not just activity. As you map the flow, mark:

These delay points later become high-value fields (e.g., “blocked reason”) and high-priority automation candidates.

List the systems people rely on to complete the work: email threads, spreadsheets, ticketing tools, shared drives, legacy apps, chat messages. When multiple sources disagree, note which one “wins.” This is essential for later integrations and for avoiding duplicate data entry.

Most manual work is messy. Note the common reasons tasks deviate: special customer terms, missing documents, regional rules, one-off approvals. You’re not trying to model every edge case—just record the categories that explain why a task took longer or required extra steps.

A manual-work tracker succeeds or fails on one thing: whether people can log work quickly while still generating data you can act on. The goal isn’t “collect everything.” It’s to capture just enough structure to spot patterns, quantify impact, and turn repeated pain into automation candidates.

Keep your core data model simple and consistent across teams:

This structure supports both day-to-day logging and later analysis without forcing users to answer a long questionnaire.
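As a minimal sketch of what such a model could look like, the Python dataclasses below define a work item with time logs and evidence. The class and field names (`WorkItem`, `TimeLog`, `manual_reason`, and so on) are illustrative assumptions, not a prescribed schema.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TimeLog:
    minutes: int             # time spent on the item, in minutes
    estimated: bool = True   # True if entered after the fact, False if timer-based


@dataclass
class WorkItem:
    workflow: str                          # which tracked workflow this belongs to
    title: str
    assignee: str
    status: str = "in_progress"
    manual_reason: Optional[str] = None    # why the work was not automated
    time_logs: List[TimeLog] = field(default_factory=list)
    evidence: List[str] = field(default_factory=list)  # links or file references

    def total_minutes(self) -> int:
        """Sum of all logged time for this item."""
        return sum(log.minutes for log in self.time_logs)
```

Keeping time logs as separate entries, rather than one running total, leaves room to tell estimated time apart from measured time in later reports.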

Time is essential for prioritizing automation, but it must be easy:

If time feels “policed,” adoption drops. Position it as a way to remove busywork, not monitor individuals.

Add one required field that explains why the work wasn’t automated:

Use a short dropdown plus an optional note. The dropdown makes reporting possible; the note provides context for exceptions.
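One way to enforce "dropdown plus optional note" on the server side is sketched below. The reason codes are hypothetical placeholders; substitute whatever categories your teams agree on.

```python
from enum import Enum
from typing import Optional, Tuple


class ManualReason(Enum):
    # Hypothetical dropdown options; replace with your own categories.
    NO_INTEGRATION = "no_integration"
    NEEDS_JUDGMENT = "needs_judgment"
    BAD_INPUT_DATA = "bad_input_data"
    OTHER = "other"


def validate_reason(code: str, note: Optional[str] = None) -> Tuple[ManualReason, str]:
    """Accept only known reason codes; the note stays optional free text."""
    try:
        reason = ManualReason(code)
    except ValueError:
        raise ValueError(f"unknown reason code: {code!r}") from None
    return reason, (note or "").strip()
```

Rejecting unknown codes keeps the dropdown values clean enough to group by in reports, while the note carries the exceptions.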

Every Task should end with a few consistent outcomes:

With these fields, you can quantify waste (rework), identify failure modes (error types), and build a credible automation backlog from real work—not opinions.

If logging a work item feels slower than just doing the work, people will skip it—or they’ll enter vague data you can’t use later. Your UX goal is simple: capture the minimum useful detail with the least friction.

Start with a small set of screens that cover the full loop:

Design for speed over completeness. Use keyboard shortcuts for common actions (create item, change status, save). Provide templates for repeated work so users aren’t retyping the same descriptions and steps.

Where possible, use in-place editing and sensible defaults (e.g., auto-assign to the current user, set “started at” when they open an item).

Free-text is useful, but it doesn’t aggregate well. Add guided fields that make reporting reliable:

Make the app readable and usable for everyone: strong contrast, clear labels (not placeholder-only), visible focus states for keyboard navigation, and mobile-friendly layouts for quick logging on the go.

If your app is meant to guide automation decisions, people need to trust the data. That trust breaks when anyone can edit anything, approvals are unclear, or there’s no record of what changed. A simple permission model plus a lightweight audit trail solves most of this.

Start with four roles that map to how work actually gets logged:

Avoid per-user custom rules early on; role-based access is easier to explain and maintain.

Decide which fields are “facts” versus “notes,” and lock down the facts once reviewed.

A practical approach:

This keeps reporting stable while still allowing legitimate corrections.
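A small sketch of the "lock facts after review" idea follows. It assumes the fact fields are time, status, and evidence, and that only a reviewer may correct them once an entry is approved. Both the field names and the reviewer rule are assumptions made for illustration.

```python
# Fields treated as "facts"; everything else is a freely editable note.
FACT_FIELDS = {"time_minutes", "status", "evidence"}


def can_edit(field_name: str, approved: bool, is_reviewer: bool) -> bool:
    """Notes are always editable; facts lock once the entry is approved."""
    if field_name not in FACT_FIELDS:
        return True
    if not approved:
        return True
    # Assumption: corrections to approved facts go through a reviewer.
    return is_reviewer
```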

Add an audit log for key events: status changes, time adjustments, approvals/rejections, evidence added/removed, and permission changes. Store at least: actor, timestamp, old value, new value, and (optionally) a short comment.

Make it visible on each record (e.g., an “Activity” tab) so disputes don’t turn into Slack archaeology.
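The audit entry described above can be sketched as a small immutable record. The helper name `record_change` and the dict-based record are assumptions chosen to keep the example short.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, List, Optional


@dataclass(frozen=True)
class AuditEntry:
    actor: str
    timestamp: datetime
    field_name: str
    old_value: Any
    new_value: Any
    comment: Optional[str] = None


def record_change(log: List[AuditEntry], record: dict, actor: str,
                  field_name: str, new_value: Any,
                  comment: Optional[str] = None) -> AuditEntry:
    """Apply a change to the record and append a matching audit entry."""
    entry = AuditEntry(
        actor=actor,
        timestamp=datetime.now(timezone.utc),
        field_name=field_name,
        old_value=record.get(field_name),
        new_value=new_value,
        comment=comment,
    )
    record[field_name] = new_value
    log.append(entry)
    return entry
```

Because the entry is frozen, code that renders the activity view cannot accidentally rewrite history.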

Set retention rules early: decide how long to keep logs and related evidence (images, files, links). Many teams keep logs for 12–24 months and bulky attachments for a shorter period.

If you allow uploads, treat them as part of the audit story: version files, record deletions, and restrict access by role. This matters when an entry becomes the basis for an automation project.

A practical MVP should be easy to build, easy to change, and boring to operate. The goal isn’t to predict your future automation platform—it’s to reliably capture manual-work evidence with minimal friction.

Start with a straightforward layout:

This separation keeps the UI fast to iterate while the API remains the source of truth.

Pick a stack your team can ship quickly with strong community support. Common combinations:

Avoid exotic tech early—your biggest risk is product uncertainty, not performance.

If you want to accelerate the MVP without locking yourself into a dead-end tool, a vibe-coding platform like Koder.ai can help you go from a written spec to a working React web app with a Go API and PostgreSQL—via chat—while still keeping the option to export the source code, deploy/host, and roll back safely using snapshots. That’s especially useful for internal tools like manual-work trackers where requirements evolve quickly after the first pilot.

Design endpoints that mirror what users actually do, not what your database tables look like. Typical “verb-shaped” capabilities:

This makes it easier to support future clients (mobile, integrations) without rewriting your core.

POST /work-items

POST /work-items/{id}/time-logs

POST /work-items/{id}/attachments

POST /work-items/{id}/status

GET /work-items?assignee=me&status=in_progress
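To make the shape of these endpoints concrete, here is an in-memory sketch in which each method mirrors one route. It is not a web server, and the class name, method names, and payload fields are assumptions; a real API would add authentication, validation, and persistence.

```python
from itertools import count
from typing import Dict, List


class WorkItemService:
    """In-memory stand-in for the API; one method per endpoint."""

    def __init__(self) -> None:
        self._next_id = count(1)
        self._items: Dict[int, dict] = {}

    def create_work_item(self, title: str, assignee: str) -> dict:
        # POST /work-items
        item = {"id": next(self._next_id), "title": title, "assignee": assignee,
                "status": "in_progress", "time_logs": [], "attachments": []}
        self._items[item["id"]] = item
        return item

    def log_time(self, item_id: int, minutes: int) -> None:
        # POST /work-items/{id}/time-logs
        if minutes <= 0:
            raise ValueError("minutes must be positive")
        self._items[item_id]["time_logs"].append(minutes)

    def add_attachment(self, item_id: int, url: str) -> None:
        # POST /work-items/{id}/attachments
        self._items[item_id]["attachments"].append(url)

    def set_status(self, item_id: int, status: str) -> None:
        # POST /work-items/{id}/status
        self._items[item_id]["status"] = status

    def list_work_items(self, assignee: str, status: str) -> List[dict]:
        # GET /work-items?assignee=...&status=...
        return [item for item in self._items.values()
                if item["assignee"] == assignee and item["status"] == status]
```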

Even early adopters will ask, “Can I upload what I already have?” and “Can I get my data out?” Add:

It reduces re-entry, speeds onboarding, and prevents your MVP from feeling like a dead-end tool.

If your app depends on people remembering to log everything, adoption will drift. A practical approach is to start with manual entry (so the workflow is clear), then add connectors only where they genuinely remove effort—especially for high-volume, repetitive work.

Look for steps where people already leave a trail elsewhere. Common “low-friction” integrations include:

Integrations get messy fast if you can’t reliably match items across systems. Create a unique identifier (e.g., MW-10482) and store external IDs alongside it (email message ID, spreadsheet row key, ticket ID). Show that identifier in notifications and exports so people can reference the same item everywhere.
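A sketch of that identifier scheme is shown below, assuming a simple numeric sequence behind the `MW-` prefix. The `ExternalIdIndex` class and the system names are hypothetical.

```python
from typing import Dict, Optional, Tuple


def format_item_id(sequence: int) -> str:
    """Turn an internal sequence number into the user-facing identifier."""
    return f"MW-{sequence}"


class ExternalIdIndex:
    """Maps (system, external ID) pairs back to one work item."""

    def __init__(self) -> None:
        self._by_external: Dict[Tuple[str, str], str] = {}

    def link(self, item_id: str, system: str, external_id: str) -> None:
        self._by_external[(system, external_id)] = item_id

    def find(self, system: str, external_id: str) -> Optional[str]:
        return self._by_external.get((system, external_id))
```

Keying on the pair of system and external ID avoids collisions when two tools happen to reuse the same number.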

The goal isn’t to eliminate humans immediately—it’s to reduce typing and avoid rework.

Pre-fill fields from integrations (requester, subject, timestamps, attachments), but keep human override so the log reflects reality. For example, an email can suggest a category and estimated effort, while the person confirms the actual time spent and outcome.

A good rule: integrations should create drafts by default, and humans should “confirm and submit” until you trust the mapping.
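The draft-then-confirm rule might look like the sketch below. The email dictionary keys and the function names are assumptions for illustration; the suggested category would come from whatever mapping your integration uses.

```python
from typing import Optional


def draft_from_email(email: dict, suggested_category: Optional[str] = None) -> dict:
    """Pre-fill a work item from an email, but leave it as a draft."""
    return {
        "status": "draft",
        "requester": email["from"],
        "subject": email["subject"],
        "received_at": email["received_at"],
        "external_id": email["message_id"],
        "category": suggested_category,
        "actual_minutes": None,  # only a person can supply this
    }


def confirm_draft(draft: dict, actual_minutes: int,
                  category: Optional[str] = None) -> dict:
    """A person confirms the draft and may override the suggested category."""
    if draft["status"] != "draft":
        raise ValueError("only drafts can be confirmed")
    confirmed = dict(draft)
    confirmed["status"] = "submitted"
    confirmed["actual_minutes"] = actual_minutes
    if category is not None:
        confirmed["category"] = category
    return confirmed
```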

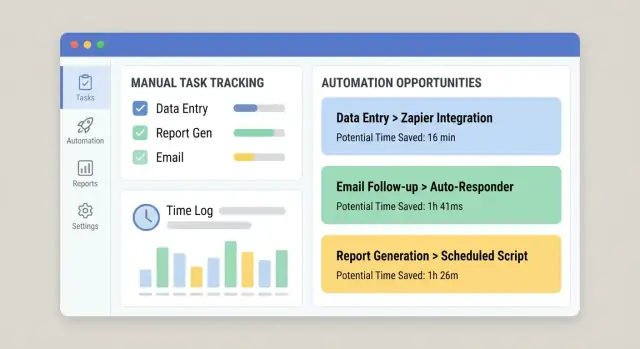

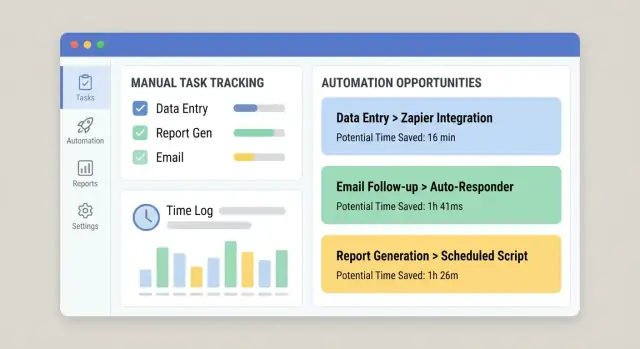

Tracking manual work is only valuable if it turns into decisions. The goal of your app should be to convert raw logs into a prioritized list of automation opportunities—your “automation backlog”—that’s easy to review in a weekly ops or improvement meeting.

Start with a simple, explainable score so stakeholders can see why something rises to the top. A practical set of criteria:

Keep the score visible next to the underlying numbers so it doesn’t feel like a black box.

Add a dedicated view that groups logs into repeatable “work items” (for example: “Update customer address in System A then confirm in System B”). Automatically rank items by score and show:

Make tagging lightweight: one-click tags like system, input type, and exception type. Over time, these reveal stable patterns (good for automation) versus messy edge cases (better for training or process fixes).

A simple estimate is enough:

ROI (time) = (time saved × frequency) − maintenance assumption

For maintenance, use a fixed monthly hours estimate (e.g., 2–6 hrs/month) so teams compare opportunities consistently. This keeps your backlog focused on impact, not opinions.
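The estimate above can be worked through in a few lines. This sketch assumes time saved is recorded in minutes per task, frequency in tasks per month, and maintenance in hours per month; the candidate names and numbers are made up.

```python
def roi_hours_per_month(minutes_saved_per_task: float,
                        tasks_per_month: int,
                        maintenance_hours_per_month: float) -> float:
    """ROI (time) = (time saved x frequency) - maintenance, in hours per month."""
    hours_saved = minutes_saved_per_task * tasks_per_month / 60
    return hours_saved - maintenance_hours_per_month


# Hypothetical candidates, both with a fixed 4 hrs/month maintenance assumption.
candidates = {
    "address update": roi_hours_per_month(6, 300, 4),  # 30 h saved, 4 h upkeep
    "weekly report": roi_hours_per_month(45, 4, 4),    # 3 h saved, 4 h upkeep
}
ranked = sorted(candidates, key=candidates.get, reverse=True)
```

In this example the frequent six-minute task nets 26 hours a month, while the slow but rare report comes out slightly negative once maintenance is counted.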

Dashboards are only useful if they answer real questions quickly: “Where are we spending time?” “What’s slowing us down?” and “Did our last change actually help?” Design reporting around decisions, not vanity charts.

Most leaders don’t want every detail—they want clear signals. A practical baseline dashboard includes:

Keep each card clickable so a leader can move from a headline number to “what’s driving this.”

A single week can mislead. Add trend lines and simple date filters (last 7/30/90 days). When you change a workflow—like adding an integration or simplifying a form—make it easy to compare before vs. after.

A lightweight approach: store a “change marker” (date and description) and show a vertical line on charts. That helps people connect improvements to real interventions instead of guessing.
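A before-and-after comparison around a change marker can be as simple as the sketch below, which assumes each entry is a `(date, minutes)` pair. The function name is hypothetical.

```python
from datetime import date
from statistics import mean
from typing import List, Optional, Tuple


def before_after(entries: List[Tuple[date, float]],
                 marker: date) -> Tuple[Optional[float], Optional[float]]:
    """Average minutes per entry before the marker date and from it onward."""
    before = [minutes for day, minutes in entries if day < marker]
    after = [minutes for day, minutes in entries if day >= marker]
    return (mean(before) if before else None,
            mean(after) if after else None)
```

Returning `None` for an empty side avoids implying a comparison when there is no data on one side of the marker.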

Tracking manual work often mixes hard data (timestamps, counts) and softer inputs (estimated time). Label metrics clearly:

If time is estimated, say so in the UI. An honest estimate is better than a precise-looking number that is wrong.

Every chart should support “show me the records.” Drill-down builds trust and speeds action: users can filter by workflow, team, and date range, then open the underlying work items to see notes, handoffs, and common blockers.

Link dashboards to your “automation backlog” view so the biggest time sinks can be converted into candidate improvements while the context is fresh.

If your app captures how work gets done, it will quickly collect sensitive details: customer names, internal notes, attachments, and “who did what when.” Security and reliability aren’t add-ons—you’ll lose trust (and adoption) without them.

Start with role-based access that matches real responsibilities. Most users should only see their own logs or their team’s. Limit admin powers to a small group, and separate “can edit entries” from “can approve/export data.”
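A role-to-permission table is often enough to express this. The role and action names below are hypothetical; the point is that editing, approving, and exporting are separate permissions.

```python
# Hypothetical roles and actions; adjust to your own organization.
PERMISSIONS = {
    "operator": {"log_work", "edit_own_entries"},
    "reviewer": {"log_work", "edit_own_entries", "view_team_entries",
                 "approve_entries"},
    "analyst": {"view_team_entries", "export_data"},
    "admin": {"log_work", "edit_own_entries", "view_team_entries",
              "approve_entries", "export_data", "manage_roles"},
}


def is_allowed(role: str, action: str) -> bool:
    """Unknown roles get no permissions at all."""
    return action in PERMISSIONS.get(role, set())
```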

For file uploads, assume every attachment is untrusted:

You don’t need enterprise security to ship an MVP, but you do need the basics:

Capture system events for troubleshooting and auditability: sign-ins, permission changes, approvals, import jobs, and failed integrations. Keep logs structured and searchable, but don’t store secrets—never write API tokens, passwords, or full attachment contents to logs. Redact sensitive fields by default.
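Redaction by default can be sketched as a filter applied to every event before it is written. The key names in `SENSITIVE_KEYS` are assumptions; extend the set to match your own payloads.

```python
# Assumed names of fields that must never reach the logs.
SENSITIVE_KEYS = {"password", "api_token", "authorization", "attachment_body"}


def redact(event: dict) -> dict:
    """Return a copy of the event with sensitive fields masked, recursively."""
    clean = {}
    for key, value in event.items():
        if key.lower() in SENSITIVE_KEYS:
            clean[key] = "[REDACTED]"
        elif isinstance(value, dict):
            clean[key] = redact(value)
        else:
            clean[key] = value
    return clean
```

The function returns a new dictionary, so the original event stays usable for the request that produced it.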

If you handle PII, decide early on:

These choices affect your schema, permissions, and backups—much easier to plan now than retrofit later.

A tracking app succeeds or fails on adoption. Treat rollout like a product launch: start small, measure behavior, and iterate quickly.

Pilot with one team first—ideally a group that already feels the pain of manual work and has a clear workflow. Keep scope narrow (one or two work types) so you can support users closely and adjust the app without disrupting the whole organization.

During the pilot, collect feedback in the moment: a one-click “Something was hard” prompt after logging, plus a weekly 15-minute check-in. When adoption stabilizes, expand to the next team with similar work patterns.

Set simple, visible targets so everyone knows what “good” looks like:

Track these in an internal dashboard and review them with team leads.

Add in-app guidance where people hesitate:

Set a review cadence (monthly works well) to decide what gets automated next and why. Use the log data to prioritize: high-frequency + high-time tasks first, with clear owners and expected impact.

Close the loop by showing outcomes: “Because you logged X, we automated Y.” That’s the fastest way to keep people logging.

If you’re iterating quickly across teams, consider tooling that supports rapid changes without destabilizing the app. For example, Koder.ai’s planning mode helps you outline scope and flows before generating changes, and snapshots/rollback make it safer to adjust workflows, fields, and permissions as you learn from the pilot.

Start by listing recurring hand-done activities and writing each one in plain terms:

If you can’t describe it in two sentences, split it into multiple workflows so you can measure it consistently.

Start with 3–5 workflows that are common, repeatable, and already painful (copy/paste, data entry, approvals, reconciliations, manual reporting). A narrow scope improves adoption and produces cleaner data for automation decisions.

Use a definition everyone can apply the same way, such as: “Any step where a person moves, checks, or transforms information without a system doing it automatically.”

Also document exclusions (e.g., relationship management, creative writing, customer calls) to prevent people from logging “everything” and diluting your dataset.

Map each workflow as:

Trigger → steps → handoffs → outcome

For each step, capture who does it, what tool they use, and what “done” means. Explicitly note handoffs and rework loops—those become high-value tracking fields later (like blocker reasons and rework counts).

A practical, reusable core model is:

Offer multiple ways to log time so people don’t avoid the app:

The priority is consistency and low friction, not perfect precision—position it as busywork removal, not surveillance.

Make one required category for why the work stayed manual, using a short dropdown:

Add an optional note for context. This creates reporting-friendly data while still capturing nuance for automation design.

Use simple role-based access:

Lock “facts” (time, status, evidence) after approval and keep an audit log of key changes (who, when, old/new values). This stabilizes reporting and builds trust.

A “boring” MVP architecture is usually enough:

This keeps iteration fast while preserving a reliable source of truth.

Create a repeatable way to turn logs into ranked opportunities using transparent criteria:

Then generate an “automation backlog” view that shows total time spent, trends, top teams, and common blockers so weekly decisions are based on evidence—not opinions.

Keep it consistent across teams so reporting and automation scoring work later.