Jun 27, 2025·8 min

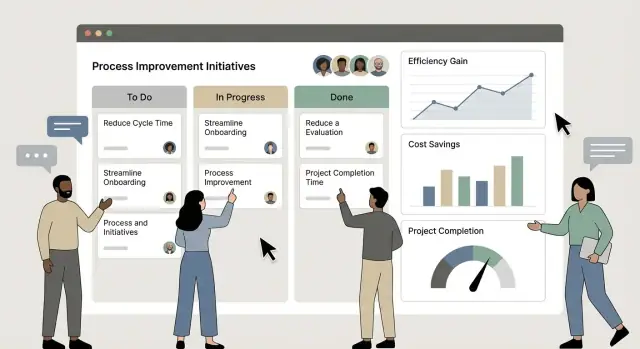

Build a Web App to Track Process Improvement Initiatives

Step-by-step guide to design, build, and launch a web app that captures improvement ideas, tracks initiatives, owners, KPIs, approvals, and results.

Before you plan screens or databases, define what a “process improvement initiative” means inside your app. In most organizations, it’s any structured effort to make work better—reducing time, cost, defects, risk, or frustration—tracked from idea to implementation to results. The key is that it’s more than a note or suggestion: it has an owner, a status, and an expected outcome you can measure.

Operators and frontline staff need a quick way to submit ideas and check what happened to them. They care about simplicity and feedback loops (e.g., “approved,” “needs more info,” “implemented”).

Managers need visibility across their area: what’s in progress, who’s responsible, where things are stuck, and what support is needed.

Improvement leads (Lean/CI teams, PMO, ops excellence) need consistency: standard fields, stage gates, lightweight governance, and a way to spot patterns across initiatives.

Executives need the summary view: progress, impact, and confidence that work is controlled—not a spreadsheet guessing game.

A tracking app should deliver three outcomes:

For v1, choose a narrow definition of done. A strong first release might mean: people can submit an idea, it can be reviewed and assigned, it moves through a few clear statuses, and a basic dashboard shows counts and key impact metrics.

If you can replace one spreadsheet and one recurring status meeting, you’ve shipped something valuable.

Before writing requirements, capture how improvement work actually moves today—especially the messy parts. A lightweight “current state” map prevents you from building a tool that only works in theory.

List what’s slowing people down and where information gets lost:

Turn each pain point into a requirement like “single status per initiative” or “visible owner and next step.”

Decide what systems already contain authoritative data so your web app doesn’t become a second, competing record:

Write down which system “wins” for each data type. Your app can store links/IDs and sync later, but it should be clear where people should look first.
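A minimal sketch of that "who wins" decision written down as data. The field names and the system labels (`hris`, `erp`, `tracker`) are hypothetical. The idea is that an incoming sync only overwrites the fields its source system owns:

```python
from typing import Any, Dict

# Hypothetical mapping of field -> system of record. Adjust to your landscape.
SOURCE_OF_TRUTH: Dict[str, str] = {
    "owner_email": "hris",
    "department": "hris",
    "work_order_status": "erp",
    "stage": "tracker",
    "expected_impact": "tracker",
}


def merge(local: Dict[str, Any], incoming: Dict[str, Any], source: str) -> Dict[str, Any]:
    """Apply an incoming sync, but only to fields owned by `source`."""
    merged = dict(local)  # copy so the caller's record is not mutated
    for name, value in incoming.items():
        if SOURCE_OF_TRUTH.get(name) == source:
            merged[name] = value
    return merged
```

With this rule, an HRIS sync can update `department` but can never overwrite the tracker's own `stage`.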

Draft a short list of required fields (e.g., title, site/team, owner, stage, due date, expected impact) and must-have reports (e.g., pipeline by stage, overdue items, realized impact by month).

Keep it tight: if a field isn’t used in reporting, automation, or decisions, it’s optional.

Explicitly exclude nice-to-haves: complex scoring models, full resource planning, custom dashboards per department, or deep integrations. Put these in a “later” list so version 1 ships quickly and earns trust.

A tracking app works best when every initiative follows the same “path” from idea to results. Your lifecycle should be simple enough that people can understand it at a glance, but strict enough that work doesn’t drift or get stuck.

A practical default is:

Idea submission → Triage → Approval → Implementation → Verification → Closure
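One way to keep this path strict is to define the stages once, in order, and only allow forward moves of one step. This is a minimal sketch. The `Stage` enum and the `next_stage`/`can_advance` helpers are hypothetical names, not part of any library:

```python
from enum import Enum
from typing import Optional


class Stage(Enum):
    """Lifecycle stages in their fixed order (hypothetical model)."""
    IDEA = 1
    TRIAGE = 2
    APPROVAL = 3
    IMPLEMENTATION = 4
    VERIFICATION = 5
    CLOSURE = 6


def next_stage(current: Stage) -> Optional[Stage]:
    """Return the stage that follows `current`, or None once closed."""
    if current is Stage.CLOSURE:
        return None
    return Stage(current.value + 1)


def can_advance(current: Stage, target: Stage) -> bool:
    """Forward moves go one step at a time, so stages cannot be skipped."""
    return next_stage(current) is target
```

Because the order lives in one place, validation and reporting share the same definition of "next stage."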

Each stage should answer one question:

Avoid vague labels like “In progress.” Use statuses that describe exactly what’s happening, for example:

For each stage, define what must be filled in before moving forward. Example:

Build these into the app as required fields and simple validation messages.
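A sketch of stage-gate rules kept as data, plus one function that produces the validation messages. The per-stage field lists are assumptions loosely based on the required fields suggested earlier (title, site, owner, due date, expected impact). `actual_result` is a hypothetical field for verification:

```python
from typing import Any, Dict, List

# Hypothetical gate rules: fields that must be filled before entering each stage.
REQUIRED_BEFORE: Dict[str, List[str]] = {
    "triage": ["title", "site"],
    "approval": ["owner", "expected_impact"],
    "implementation": ["due_date"],
    "verification": ["actual_result"],
}


def missing_fields(initiative: Dict[str, Any], target_stage: str) -> List[str]:
    """Return a user-facing message for each required field that is empty."""
    messages = []
    for field in REQUIRED_BEFORE.get(target_stage, []):
        value = initiative.get(field)
        # Treat None, empty strings, and whitespace-only strings as missing.
        if value is None or (isinstance(value, str) and not value.strip()):
            messages.append(f"'{field}' is required before moving to {target_stage}.")
    return messages
```

An empty list means the move is allowed. Otherwise, show the messages next to the status button.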

Real work rarely moves in a straight line; initiatives get sent back for more information or rework. Make these loops normal and visible:

Done well, the lifecycle becomes shared language—people know what “Approved” or “Verified” means, and your reporting stays accurate.
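To keep loops visible instead of hiding them in silent edits, you can make "send back" an explicit action that requires a reason and leaves a history entry. This is a minimal sketch. The `Initiative` class, the `send_back` function, and the lowercase stage names are hypothetical:

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List

# Hypothetical stage order; list position defines "earlier" vs "later".
STAGES = ["idea", "triage", "approval", "implementation", "verification", "closure"]


@dataclass
class Initiative:
    title: str
    stage: str = "idea"
    history: List[dict] = field(default_factory=list)


def send_back(item: Initiative, to_stage: str, reason: str, actor: str) -> None:
    """Move an initiative to an earlier stage, recording who did it and why."""
    if STAGES.index(to_stage) >= STAGES.index(item.stage):
        raise ValueError("send_back only moves to an earlier stage")
    if not reason.strip():
        raise ValueError("a reason is required when sending work back")
    item.history.append({
        "from": item.stage,
        "to": to_stage,
        "reason": reason,
        "actor": actor,
        "at": datetime.now(timezone.utc),
    })
    item.stage = to_stage
```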

Clear roles and permissions keep initiatives moving—and prevent the “everyone can edit everything” problem that quietly breaks accountability. Start with a small set of standard roles, then add flexibility for departments, sites, and cross‑functional work.

Define one primary owner per initiative. If work spans multiple functions, add contributors (or co-owners if you truly need them), but keep a single person responsible for deadlines and final updates.

Also support grouping by team/department/site so people can filter work they care about and leaders can see rollups.

Decide permissions by role and by relationship to the initiative (creator, owner, same department, same site, executive).

| Action | Submitter | Owner | Approver | Reviewer | Admin |
|---|---|---|---|---|---|
| View | Yes (own) | Yes | Yes | Yes | Yes |
| Edit fields | Limited | Yes | Limited | Limited | Yes |
| Approve stage changes | No | No | Yes | No | Yes |
| Close initiative | No | Yes (with approval, if required) | Yes | No | Yes |
| Delete | No | No | No | No | Yes |
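The matrix translates directly into data, which keeps permission checks in one place instead of scattering them across screens. This is a minimal sketch. The `MATRIX` keys and the `check` function are hypothetical, and which fields count as "Limited" is left to you:

```python
from typing import Dict

# The permissions table as data. "own" = only initiatives the user submitted;
# "limited" = a restricted subset of fields; "with_approval" = needs sign-off.
MATRIX: Dict[str, Dict[str, str]] = {
    "view": {"submitter": "own", "owner": "yes", "approver": "yes", "reviewer": "yes", "admin": "yes"},
    "edit_fields": {"submitter": "limited", "owner": "yes", "approver": "limited", "reviewer": "limited", "admin": "yes"},
    "approve_stage": {"submitter": "no", "owner": "no", "approver": "yes", "reviewer": "no", "admin": "yes"},
    "close": {"submitter": "no", "owner": "with_approval", "approver": "yes", "reviewer": "no", "admin": "yes"},
    "delete": {"submitter": "no", "owner": "no", "approver": "no", "reviewer": "no", "admin": "yes"},
}


def check(action: str, role: str, *, is_creator: bool = False,
          approval_satisfied: bool = False) -> str:
    """Return 'allow', 'limited', or 'deny'.

    approval_satisfied should be True when approval was granted or is not required.
    Roles missing from the matrix fall through to 'deny' (least privilege).
    """
    rule = MATRIX[action].get(role, "no")
    if rule == "yes":
        return "allow"
    if rule == "own":
        return "allow" if is_creator else "deny"
    if rule == "with_approval":
        return "allow" if approval_satisfied else "deny"
    if rule == "limited":
        return "limited"
    return "deny"
```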

Plan for read-only executive access from day one: a dashboard that shows progress, throughput, and impact without exposing sensitive notes or draft cost estimates. This avoids “shadow spreadsheets” while keeping governance tight.

The fastest way to slow down a tracking app is to over-design the data model up front. Aim for a “minimum complete record”: enough structure to compare initiatives, report progress, and explain decisions later—without turning every form into a questionnaire.

Start with a single, consistent initiative record that makes it obvious what the work is and where it belongs:

These fields help teams sort, filter, and avoid duplicate efforts.

Every initiative should answer two questions: “Who is responsible?” and “When did things happen?”

Store:

Timestamps sound boring, but they power cycle-time reporting and prevent “we think it was approved last month” debates.

Keep KPI tracking lightweight but consistent:

To make audits and handoffs painless, include:

If you capture these four areas well, most reporting and workflow features become much easier later.
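As a rough sketch, a "minimum complete record" might look like the dataclasses below. The field names and their grouping are assumptions for illustration: what the work is and where it belongs, who owns it and when things happened, KPIs, and supporting context for audits and handoffs:

```python
from dataclasses import dataclass, field
from datetime import date, datetime
from typing import List, Optional


@dataclass
class Kpi:
    """One measurable outcome: baseline, target, and (later) actual."""
    name: str
    unit: str
    baseline: float
    target: float
    actual: Optional[float] = None


@dataclass
class Initiative:
    # What it is and where it belongs
    title: str
    site: str
    category: str
    # Who is responsible
    owner: str
    stage: str = "idea"
    # When things happened (these power cycle-time reporting)
    created_at: datetime = field(default_factory=datetime.now)
    approved_at: Optional[datetime] = None
    closed_at: Optional[datetime] = None
    due_date: Optional[date] = None
    # Results and supporting context for audits and handoffs
    kpis: List[Kpi] = field(default_factory=list)
    decision_notes: List[str] = field(default_factory=list)
    attachments: List[str] = field(default_factory=list)  # links or file IDs
```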

A tracking app only works if people can update it in seconds—especially supervisors and operators who are juggling real work. Aim for a simple navigation model with a few “home base” pages and consistent actions everywhere.

Keep the information architecture predictable:

If users can’t tell where to go next, the app will become a read-only archive.

Make it easy to find “my stuff” and “today’s priorities.” Add a prominent search bar and filters people actually use: status, owner, site/area, and optionally date ranges.

Saved views turn complex filtering into one click. Examples: “Open initiatives – Site A,” “Waiting on approval,” or “Overdue follow-ups.” If you support sharing saved views, team leads can standardize how their area tracks work.
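A saved view can be just a named set of filters that gets applied to the list. This is a minimal sketch. `SavedView` and `apply_view` are hypothetical names, and initiatives are plain dictionaries here for brevity:

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class SavedView:
    """A named filter a user (or team lead) can reuse in one click."""
    name: str
    status: Optional[str] = None
    owner: Optional[str] = None
    site: Optional[str] = None


def apply_view(view: SavedView, items: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep items matching every filter that is set; unset filters match everything."""
    criteria = {"status": view.status, "owner": view.owner, "site": view.site}
    return [
        item for item in items
        if all(value is None or item.get(key) == value for key, value in criteria.items())
    ]
```

Sharing a view then means storing the `SavedView` record and letting others select it.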

On both the list and detail pages, enable quick actions:

Use readable fonts, strong contrast, and clear button labels. Support keyboard navigation for office users.

For mobile, prioritize key actions: view status, add a comment, complete a checklist item, and upload a photo. Keep tap targets large and avoid dense tables so the app works on the shop floor as well as at a desk.

A good tech stack is the one your team can support six months after launch—not the trendiest option. Start with what skills you already have (or can hire reliably), then choose tools that make it easy to ship updates and keep data safe.

For many teams, the simplest path is a familiar “standard web app” setup:

If your main challenge is speed—getting from requirements to a working, usable internal tool—Koder.ai can help you prototype and deliver a process-improvement tracker from a chat interface.

In practice, that means you can describe your lifecycle (Idea → Triage → Approval → Implementation → Verification → Closure), your roles/permissions, and your must-have pages (Inbox, Initiative List, Detail, Reports), and generate a working web app quickly. Koder.ai is designed for building web, server, and mobile applications (React for the web UI, Go + PostgreSQL on the backend, and Flutter for mobile), with support for deployment/hosting, custom domains, source code export, and snapshots/rollback—useful when you’re iterating during a pilot.

If you mainly need idea intake, status tracking, approvals, and dashboards, buying continuous improvement software or using low-code (Power Apps, Retool, Airtable/Stacker) can be faster and cheaper.

Build custom when you have specific workflow rules, complex permissions, or integration needs (ERP, HRIS, ticketing) that off‑the‑shelf tools can’t match.

Cloud hosting (AWS/Azure/GCP, or simpler platforms like Heroku/Fly.io/Render) usually wins for speed, scaling, and managed databases. On‑prem can be required for strict data residency, internal network access, or regulated environments—just plan for more operations work.

Define a baseline for:

Security work is easiest when you treat it as part of the product, not a final checklist. For a process-improvement tracker, the goals are simple: make sign-in painless, keep data appropriately restricted, and always be able to explain “what changed, and why.”

If your organization already uses Google Workspace, Microsoft Entra ID (Azure AD), Okta, or similar, single sign-on (SSO) is usually the best default. It reduces password resets, makes offboarding safer (disable the employee account once), and improves adoption because users don’t need a new credential.

Email/password can still work—especially for smaller teams or external collaborators—but you’ll take on more responsibility (password policies, resets, breach monitoring). If you go this route, store passwords using proven libraries and strong hashing (never “roll your own”).
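If you do store passwords, here is a minimal sketch using Python's standard-library `hashlib.scrypt` (a memory-hard key-derivation function). It uses a random per-user salt and a constant-time comparison. The cost parameters are common example values, not a tuned recommendation. In production, you might prefer a maintained library such as argon2-cffi or your web framework's built-in password hasher:

```python
import hashlib
import hmac
import secrets

# Example scrypt cost parameters (assumption: tune for your hardware).
N, R, P = 2**14, 8, 1


def hash_password(password: str) -> str:
    """Return 'salt_hex$hash_hex' using a fresh 16-byte random salt."""
    salt = secrets.token_bytes(16)
    digest = hashlib.scrypt(password.encode(), salt=salt, n=N, r=R, p=P, dklen=32)
    return f"{salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    salt_hex, digest_hex = stored.split("$")
    candidate = hashlib.scrypt(
        password.encode(), salt=bytes.fromhex(salt_hex), n=N, r=R, p=P, dklen=32
    )
    return hmac.compare_digest(candidate, bytes.fromhex(digest_hex))
```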

For multi-factor authentication (MFA), consider a “step-up” approach: require MFA for admins, approvers, and anyone viewing sensitive initiatives. If you use SSO, MFA can often be enforced centrally by IT.
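The step-up rule can be a small, testable function. This sketch assumes a session object that already knows whether MFA was completed. With SSO, that flag might come from an authentication-method claim your identity provider includes in the token, depending on the provider. `Session`, `MFA_ROLES`, and `needs_step_up` are hypothetical:

```python
from dataclasses import dataclass

# Roles that always need a second factor (assumption based on the policy above).
MFA_ROLES = {"admin", "approver"}


@dataclass
class Session:
    role: str
    mfa_verified: bool


def needs_step_up(session: Session, initiative_is_sensitive: bool) -> bool:
    """True if the user must complete MFA before continuing."""
    if session.mfa_verified:
        return False
    return session.role in MFA_ROLES or initiative_is_sensitive
```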

Not everyone needs access to everything. Start with a least-privilege model:

This prevents accidental sharing and makes reporting safer—especially when dashboards are shown in meetings.
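For dashboards shown in meetings, one simple approach is field-level redaction applied before data leaves the server. This is a minimal sketch. The set of sensitive field names is hypothetical (cost savings is the example this article uses), and restricted values are replaced with a placeholder so viewers can see that the field exists:

```python
from typing import Any, Dict, Set

# Hypothetical list of fields treated as sensitive.
SENSITIVE_FIELDS = {"cost_savings", "draft_cost_estimate", "internal_notes"}


def redact(record: Dict[str, Any], allowed: Set[str]) -> Dict[str, Any]:
    """Return a copy with sensitive fields hidden unless explicitly allowed."""
    return {
        key: ("[restricted]" if key in SENSITIVE_FIELDS and key not in allowed else value)
        for key, value in record.items()
    }
```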

An audit trail is your safety net when status or KPIs are questioned. Track key events automatically:

Make the audit log easy to find (e.g., an “Activity” tab on each initiative), and keep it append-only. Even admins should not be able to erase history.
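Here is a minimal in-memory sketch of an append-only log. It has no update or delete methods, and each event stores a hash of the previous one, so later tampering can be detected. The hash chaining is an added technique for tamper-evidence and is not required by the approach above. In a real system, you would also enforce append-only storage at the database level (for example, by not granting update or delete rights on the audit table). All names are hypothetical:

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Tuple


@dataclass(frozen=True)
class AuditEvent:
    initiative_id: str
    actor: str
    action: str      # e.g. "status_change", "kpi_edit", "approval"
    detail: str
    at: str          # ISO-8601 UTC timestamp
    prev_hash: str   # digest of the previous event ("" for the first one)
    digest: str


def _digest(initiative_id: str, actor: str, action: str, detail: str, at: str, prev: str) -> str:
    payload = json.dumps([initiative_id, actor, action, detail, at, prev])
    return hashlib.sha256(payload.encode()).hexdigest()


class AuditLog:
    """Append-only log: there is deliberately no update or delete method."""

    def __init__(self) -> None:
        self._events: List[AuditEvent] = []

    def append(self, initiative_id: str, actor: str, action: str, detail: str) -> AuditEvent:
        prev = self._events[-1].digest if self._events else ""
        at = datetime.now(timezone.utc).isoformat()
        event = AuditEvent(initiative_id, actor, action, detail, at, prev,
                           _digest(initiative_id, actor, action, detail, at, prev))
        self._events.append(event)
        return event

    def events(self) -> Tuple[AuditEvent, ...]:
        """Read-only view of the history, e.g. for an 'Activity' tab."""
        return tuple(self._events)

    def verify(self) -> bool:
        """Recompute the chain; False means a stored event was altered."""
        prev = ""
        for e in self._events:
            expected = _digest(e.initiative_id, e.actor, e.action, e.detail, e.at, prev)
            if e.prev_hash != prev or e.digest != expected:
                return False
            prev = e.digest
        return True
```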

Use separate environments—dev, test, and production—so you can safely try new features without risking live initiatives. Keep test data clearly labeled, restrict production access, and ensure configuration changes (like workflow rules) follow a simple promotion process.

Once people start submitting ideas and updating status, the next bottleneck is follow-through. Lightweight automation keeps initiatives moving without turning your app into a complex BPM system.

Define approval steps that match how decisions are made today, then standardize them.

A practical approach is a short, rules-based chain:

Keep the approval UI simple: approve/reject, a required comment on reject, and a way to request clarification without starting over.
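A rules-based chain can start as a plain function. This sketch routes approvals by estimated cost and scope and enforces the "comment required on reject" rule. The thresholds, role names, and the three decision values are hypothetical placeholders for your own rules:

```python
from typing import List


def approval_chain(estimated_cost: float, cross_site: bool) -> List[str]:
    """Return the ordered approver roles (hypothetical thresholds)."""
    chain = ["area_manager"]
    if estimated_cost >= 5_000:
        chain.append("ci_lead")
    if estimated_cost >= 50_000 or cross_site:
        chain.append("site_director")
    return chain


def record_decision(decision: str, comment: str = "") -> dict:
    """Approve, reject, or ask for clarification; rejecting requires a comment.

    'clarify' leaves the initiative where it is instead of restarting the chain.
    """
    if decision not in {"approve", "reject", "clarify"}:
        raise ValueError(f"unknown decision: {decision}")
    if decision == "reject" and not comment.strip():
        raise ValueError("a comment is required when rejecting")
    return {"decision": decision, "comment": comment}
```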

Use email and in-app notifications for events people actually act on:

Let users control notification frequency (immediate vs daily digest) to prevent inbox fatigue.
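Honoring that preference can be a simple split between messages sent now and messages batched into a digest. This is a minimal sketch. It assumes users without a saved preference get immediate delivery, and the `route_notifications` function is hypothetical:

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def route_notifications(
    events: List[Tuple[str, str]], prefs: Dict[str, str]
) -> Tuple[List[Tuple[str, str]], Dict[str, List[str]]]:
    """Split (user, message) events into immediate sends and per-user digests."""
    immediate: List[Tuple[str, str]] = []
    digests: Dict[str, List[str]] = defaultdict(list)
    for user, message in events:
        if prefs.get(user, "immediate") == "digest":
            digests[user].append(message)
        else:
            immediate.append((user, message))
    return immediate, dict(digests)
```

A scheduled job can then send each user's digest once a day.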

Add automated reminders when an initiative is in Implementation but hasn't been updated recently. A simple rule like “no activity for 14 days” can trigger a check-in to the owner and their manager.
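Here is a sketch of the 14-day rule as a function a daily scheduled job could call. It assumes each initiative stores a last-activity timestamp and that reminders apply to items in the Implementation stage. The `Item` fields and message format are hypothetical:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

STALE_AFTER = timedelta(days=14)  # the "no activity for 14 days" rule


@dataclass
class Item:
    id: str
    stage: str
    owner: str
    manager: str
    last_activity: datetime


def stale_reminders(items: List[Item], now: datetime) -> List[str]:
    """Build one check-in message per stale initiative in implementation."""
    reminders = []
    for item in items:
        idle = now - item.last_activity
        if item.stage == "implementation" and idle >= STALE_AFTER:
            reminders.append(
                f"{item.id}: no update for {idle.days} days (to {item.owner}, cc {item.manager})"
            )
    return reminders
```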

Create templates for common initiative types (e.g., 5S, SOP update, defect reduction). Pre-fill fields such as expected KPIs, typical tasks, default stage timeline, and required attachments.

Templates should speed entry while still allowing edits so teams don’t feel boxed in.
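A template can be a dictionary of defaults that is copied into a new draft, with user-supplied values taking precedence. This is a minimal sketch. The template contents and `new_from_template` are illustrative assumptions, and the deep copy keeps edits to one draft from changing the shared template:

```python
import copy
from typing import Any, Dict

# Hypothetical templates; contents are examples, not a standard.
TEMPLATES: Dict[str, Dict[str, Any]] = {
    "5s": {
        "category": "5S",
        "kpis": ["Audit score"],
        "tasks": ["Sort", "Set in order", "Shine", "Standardize", "Sustain"],
        "target_days": 30,
    },
    "sop_update": {
        "category": "SOP update",
        "kpis": ["Error rate"],
        "tasks": ["Draft revision", "Review with operators", "Publish"],
        "required_attachments": ["Revised SOP"],
        "target_days": 21,
    },
}


def new_from_template(template_key: str, **overrides: Any) -> Dict[str, Any]:
    """Start a draft from a template; any field can still be overridden."""
    draft = copy.deepcopy(TEMPLATES[template_key])  # never mutate the template
    draft.update(overrides)
    return draft
```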

Reporting is what turns a list of initiatives into a management tool. Aim for a small set of views that answer: What’s moving, what’s stuck, and what value are we getting?

A useful dashboard focuses on movement through the lifecycle:

Keep filters simple: team, department, date range, stage, and owner.
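The core dashboard numbers come straight from stage and timestamp fields. This sketch computes counts by stage, overdue open items, and median cycle time from submission to closure. The dictionary keys (`stage`, `due`, `submitted`, `closed`) are assumptions about how records are stored:

```python
from collections import Counter
from datetime import date
from statistics import median
from typing import Dict, List, Optional


def stage_counts(items: List[Dict]) -> Counter:
    """How many initiatives sit in each stage right now."""
    return Counter(item["stage"] for item in items)


def overdue(items: List[Dict], today: date) -> List[str]:
    """IDs of open initiatives past their due date."""
    return [
        item["id"] for item in items
        if item["stage"] != "closure" and item.get("due") and item["due"] < today
    ]


def median_cycle_days(items: List[Dict]) -> Optional[float]:
    """Median days from submission to closure, using stored timestamps."""
    durations = [
        (item["closed"] - item["submitted"]).days
        for item in items if item.get("closed")
    ]
    return median(durations) if durations else None
```

Filters such as team or date range can be applied to `items` before calling these functions.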

Impact metrics build trust when they’re believable. Store impact as ranges or confidence levels instead of overly exact numbers.

Track a few categories:

Pair each impact entry with a short “how we measured it” note so readers understand the basis.
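Storing impact as a low/high range with a confidence level and a method note could look like the sketch below. When rolling up, the low and high bounds are summed separately so the total stays a range. This is a simple best-case/worst-case sum, not a statistical estimate. Names and category labels are hypothetical:

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ImpactEstimate:
    category: str      # e.g. "hours_saved_month"
    low: float
    high: float
    confidence: str    # "low" | "medium" | "high"
    method_note: str   # how we measured it


def total_range(estimates: List[ImpactEstimate], category: str) -> Tuple[float, float]:
    """Sum low and high bounds separately so the rollup stays a range."""
    matching = [e for e in estimates if e.category == category]
    return (sum(e.low for e in matching), sum(e.high for e in matching))
```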

Not everyone will log in daily. Provide:

A team lead view should prioritize operations: “What’s stuck in Review?”, “Which owner is overloaded?”, “What should we unblock this week?”

An executive view should prioritize outcomes: total initiatives completed, impact trends over time, and a small set of strategic highlights (top 5 initiatives by impact, plus key risks).

Integrations can make your tracking app feel “connected,” but they can also turn a simple build into a long, expensive project. The goal is to support the workflow you already have—without trying to replace every system on day one.

Begin by supporting manual and semi-automated options:

These options cover many real needs while keeping complexity low. You can add deeper, two-way syncing after you’ve seen what people actually use.

Most teams get quick value from a small set of connections:

Even lightweight syncing needs rules, or data will drift:

Your best improvement ideas often start elsewhere. Add simple linking fields so one initiative can reference:

A link (plus a short note on the relationship) is usually enough to start—full synchronization can wait until it’s clearly needed.

A process-improvement tracker succeeds when people trust it and actually use it. Treat testing and rollout as part of the build—not an afterthought.

Before you code every feature, run your draft workflow end-to-end using 5–10 real initiatives (a mix of small fixes and larger projects). Walk through:

This quickly reveals gaps in statuses, required fields, and handoffs—without spending weeks building the wrong thing.

Include three groups in user acceptance testing (UAT):

Give testers scripted tasks (e.g., “submit an idea with attachments,” “send back for clarification,” “close with KPI results”) and capture issues in a simple tracker.

Focus on friction points: confusing labels, too many required fields, and unclear notifications.

Launch to one site or team first. Keep the pilot short (2–4 weeks) with a clear success metric (e.g., % of initiatives updated weekly, approval turnaround time).
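Both example pilot metrics are simple arithmetic over data the app already stores. This sketch assumes you can list each initiative's last-update time and the (submitted, decided) timestamps for each approval. The function names are hypothetical:

```python
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional, Tuple


def pct_updated_within(last_updates: List[datetime], now: datetime, days: int = 7) -> float:
    """Share of initiatives (0-100) with any update in the last `days` days."""
    if not last_updates:
        return 0.0
    recent = sum(1 for ts in last_updates if now - ts <= timedelta(days=days))
    return 100 * recent / len(last_updates)


def approval_turnaround_hours(pairs: List[Tuple[datetime, datetime]]) -> Optional[float]:
    """Median hours between submission for approval and the decision."""
    hours = [(decided - submitted).total_seconds() / 3600 for submitted, decided in pairs]
    return median(hours) if hours else None
```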

Hold a weekly feedback session, then ship small fixes fast—navigation tweaks and better defaults often boost adoption more than big features.

Offer a 20–30 minute training, plus lightweight help content: “How to submit,” “How approvals work,” and “Definition of each stage.”

Set governance rules (who approves what, update frequency, what requires evidence) so the app reflects how decisions are made.

If you’re deciding what to build next, compare options on /pricing, or browse practical rollout and reporting tips on /blog.

If you want to validate your workflow and ship a usable v1 quickly, you can also prototype this tracker on Koder.ai—then iterate during the pilot with snapshots/rollback and export the source code when you’re ready to take it further.

Start by defining what counts as an initiative in your organization: a structured effort with an owner, a status, and an outcome you can measure.

For a solid v1, focus on replacing one spreadsheet and one status meeting: idea submission → review/assignment → a few clear statuses → a basic dashboard with counts and impact.

A practical default lifecycle is Idea submission → Triage → Approval → Implementation → Verification → Closure.

Keep stages simple but enforceable. Each stage should answer one question (e.g., “Are we committing resources?” at Approval) so people interpret reporting the same way.

Avoid vague labels like “In progress.” Use statuses that tell users what to do next, such as:

This reduces back-and-forth and makes dashboards more reliable.

Define entry/exit criteria per stage and enforce them with required fields. Examples:

Keep rules lightweight: enough to prevent “floating” initiatives, not so strict that people stop updating.

Start with a small set of roles: Submitter, Owner, Approver, Reviewer, and Admin.

Use a permissions matrix based on both role and relationship (e.g., same site/department) and plan read-only executive dashboards from day one.

Aim for a “minimum complete record” across four areas:

If a field doesn’t drive reporting, automation, or decisions, make it optional.

A simple navigation model that works well:

Optimize for “update in seconds”: quick status change, quick comment, and a lightweight checklist—especially for frontline users.

Choose what your team can support long-term. A common, maintainable setup is:

Consider low-code or buying if you mostly need intake + approvals + dashboards; build custom when workflow rules, permissions, or integrations are truly specific.

If you have an identity provider (Microsoft Entra ID, Okta, Google Workspace), use SSO to reduce password resets and improve offboarding.

Implement least-privilege access and restrict sensitive fields (e.g., cost savings). Add an append-only audit trail that records status changes, KPI edits, approvals, and ownership handoffs so you can always answer “who changed what, and when.”

Start with reporting that answers three questions: what’s moving, what’s stuck, and what value are we getting.

Useful core views include:

Add CSV exports and scheduled weekly/monthly summaries so stakeholders don’t have to log in daily.
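A CSV export can be a few lines with Python's standard `csv` module. This sketch uses a fixed, stakeholder-friendly column list (the column names are assumptions), ignores extra fields such as internal notes, and leaves missing values blank:

```python
import csv
import io
from typing import Dict, List

# Columns for a stakeholder-friendly export (assumed field names).
COLUMNS = ["id", "title", "site", "owner", "stage", "due_date"]


def to_csv(items: List[Dict[str, str]]) -> str:
    """Render initiatives as CSV text; extra keys are ignored, missing ones left blank."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(items)
    return buffer.getvalue()
```

The same function can feed a scheduled weekly or monthly email attachment.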