Nov 23, 2025·8 min

How to Build a Web App for Feedback Collection and Surveys

Learn how to plan, build, and launch a web app for collecting feedback and running user surveys, from UX and data models to analytics and privacy.


Before you write code, decide what you’re actually building. “Feedback” can mean a lightweight inbox for comments, a structured survey tool, or a mix of both. If you try to cover every use case on day one, you’ll end up with a complicated product that’s hard to ship—and even harder for people to adopt.

Pick the core job your app should do in its first version:

A practical MVP for “both” is: one always-available feedback form + one basic survey template (NPS or CSAT), feeding into the same response list.

Success should be observable within weeks, not quarters. Choose a small set of metrics and set baseline targets:

If you can’t explain how you’ll calculate each metric, it’s not a useful metric yet.

Be specific about who uses the app and why:

Different audiences require different tone, anonymity expectations, and follow-up workflows.

Write down what can’t change:

This problem/MVP definition becomes your “scope contract” for the first build—and it will save you from rebuilding later.

Before you design screens or pick features, decide who the app is for and what “success” looks like for each person. Feedback products fail less from missing tech and more from unclear ownership: everyone can create surveys, nobody maintains them, and results never turn into action.

Admin owns the workspace: billing, security, branding, user access, and default settings (data retention, domains allowed, consent text). They care about control and consistency.

Analyst (or Product Manager) runs the feedback program: creates surveys, targets audiences, watches response rates, and turns results into decisions. They care about speed and clarity.

End user / respondent answers questions. They care about trust (why am I being asked?), effort (how long is this?), and privacy.

Map the “happy path” end-to-end:

Even if you postpone “act” features, document how teams will do it (e.g., export to CSV or push to another tool later). The key is to avoid shipping a system that collects data but can’t drive follow-through.

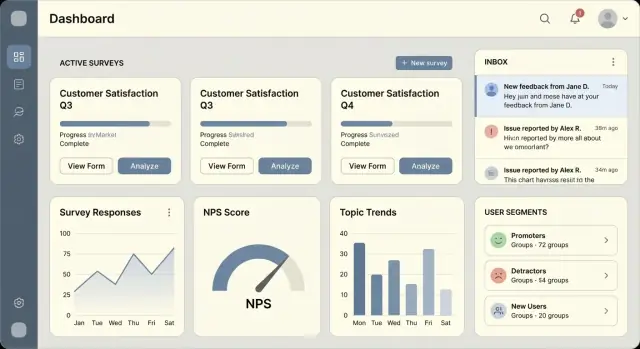

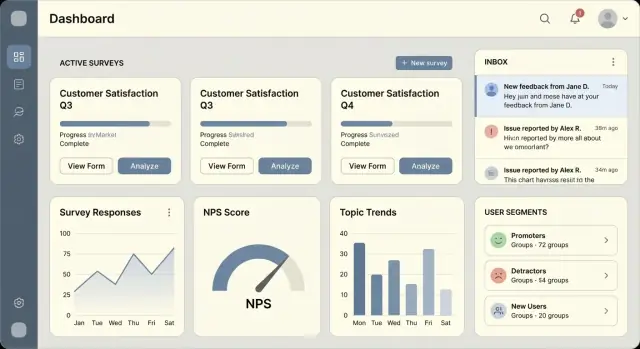

You don’t need many pages, but each must answer a clear question:

Once these journeys are clear, feature decisions get easier—and you can keep the product focused.

A feedback and survey web app doesn’t need an elaborate architecture to succeed. Your first goal is to ship a reliable survey builder, capture responses, and make results easy to review, all without creating a maintenance burden.

For most teams, a modular monolith is the simplest place to start: one backend app, one database, and clear internal modules (auth, surveys, responses, reporting). You can still keep boundaries clean so parts can be extracted later.

Split out separate services only if you have a strong reason, like sending high-volume email survey invites, heavy analytics workloads, or strict isolation requirements. Otherwise, microservices can slow you down with duplicated code, complex deployments, and harder debugging.

A practical compromise is: monolith + a couple of managed add-ons, such as a queue for background jobs and an object store for exports.

On the frontend, React and Vue are both great fits for a survey builder because they handle dynamic forms well.

On the backend, pick something your team can move quickly in:

Whatever you choose, keep APIs predictable. Your survey builder and response UI will evolve faster if your endpoints are consistent and well-versioned.

If you want to accelerate the “first working version” without committing to months of scaffolding, a vibe-coding platform like Koder.ai can be a practical starting point: you can chat your way to a React frontend plus a Go backend with PostgreSQL, then export the source code when you’re ready to take full control.

Surveys look “document-like,” but the data behind a feedback workflow is mostly relational:

A relational database like PostgreSQL is usually the easiest choice for a feedback database because it supports constraints, joins, reporting queries, and future analytics without workarounds.

Start with a managed platform when possible (e.g., a PaaS for the app and managed Postgres). It reduces ops overhead and keeps your team focused on features.

Typical cost drivers for a survey analytics product:

As you grow, you can move pieces to a cloud provider without rewriting everything—if you’ve kept the architecture simple and modular from the start.

A good data model makes everything else easier: building the survey builder, keeping results consistent over time, and producing trustworthy analytics. Aim for a structure that’s simple to query and hard to “accidentally” corrupt.

Most feedback collection web apps can start with six key entities:

This structure maps cleanly to a product feedback workflow: teams create surveys, collect responses, then analyze answers.

Surveys evolve. Someone will fix wording, add a question, or change options. If you overwrite questions in place, older responses become confusing or impossible to interpret.

Use versioning:

That way, editing a survey creates a new version while past results remain intact.
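A minimal sketch of the versioning idea, assuming an in-memory store and hypothetical `Survey`, `SurveyVersion`, and `Response` classes (not any specific framework's models): editing appends a new immutable version, and each response keeps a reference to the version it actually answered.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SurveyVersion:
    """An immutable snapshot of a survey's questions."""
    number: int
    questions: tuple[str, ...]


@dataclass
class Survey:
    title: str
    versions: list[SurveyVersion] = field(default_factory=list)

    def publish(self, questions: list[str]) -> SurveyVersion:
        """Editing never mutates an old version; it appends a new one."""
        version = SurveyVersion(len(self.versions) + 1, tuple(questions))
        self.versions.append(version)
        return version

    @property
    def current(self) -> SurveyVersion:
        return self.versions[-1]


@dataclass(frozen=True)
class Response:
    version: SurveyVersion  # pinned at submission time
    answers: tuple[str, ...]


survey = Survey("Onboarding feedback")
v1 = survey.publish(["How easy was setup?"])
old = Response(v1, ("Very easy",))
survey.publish(["How easy was setup?", "What almost stopped you?"])

# The old response is still read against the questions it was shown.
print(old.version.number, len(old.version.questions))        # 1 1
print(survey.current.number, len(survey.current.questions))  # 2 2
```

In a relational schema the same idea becomes a `survey_version_id` foreign key on each response row.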

Question types usually include text, scale/rating, and multiple choice.

A practical approach is:

- Questions: type, title, required, position
- Answers: question_id and a flexible value (e.g., text_value, number_value, plus an option_id for choices)

This keeps reporting straightforward (e.g., averages for scales, counts per option).
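To show why typed value columns keep reporting simple, here is a small sketch over illustrative answer rows (the field names follow the ones above; the `Answer` class and the helper names are hypothetical): scale answers are averaged via `number_value`, and choice answers are counted via `option_id`.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Answer:
    question_id: str
    text_value: Optional[str] = None
    number_value: Optional[float] = None
    option_id: Optional[str] = None


answers = [
    Answer("q_rating", number_value=4),
    Answer("q_rating", number_value=5),
    Answer("q_plan", option_id="opt_pro"),
    Answer("q_plan", option_id="opt_free"),
    Answer("q_plan", option_id="opt_pro"),
    Answer("q_comment", text_value="Setup was quick"),
]


def scale_average(rows, question_id):
    """Average number_value for a scale/rating question (None if no answers)."""
    values = [a.number_value for a in rows
              if a.question_id == question_id and a.number_value is not None]
    return mean(values) if values else None


def option_counts(rows, question_id):
    """Number of answers per option_id for a multiple-choice question."""
    return Counter(a.option_id for a in rows
                   if a.question_id == question_id and a.option_id is not None)


print(scale_average(answers, "q_rating"))  # 4.5
print(option_counts(answers, "q_plan"))
```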

Plan identifiers early:

- Timestamps: created_at, published_at, submitted_at, and archived_at.
- Metadata: channel (in-app/email/link), locale, and optional external_user_id (if you need to tie responses to your product users).

These basics make your survey analytics more reliable and your audits less painful later.

A feedback collection web app lives or dies by its UI: admins need to build surveys quickly, and respondents need a smooth, distraction-free flow. This is where your user survey application starts to feel “real.”

Start with a simple survey builder that supports a question list with:

If you add branching, keep it optional and minimal: allow “If answer is X → go to question Y.” Store this in your feedback database as a rule attached to a question option. If branching feels risky for v1, ship without it and keep the data model ready.
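A minimal sketch of that rule shape, assuming rules are stored as a mapping from (question, option) to a target question; the question IDs and the `next_question` helper are hypothetical. When no rule matches, the flow falls through to the next question by position.

```python
from typing import Optional

# Questions in display order.
QUESTIONS = ["q1_uses_feature", "q2_why_not", "q3_rating", "q4_comment"]

# Rules attached to options: (question_id, option_id) -> question to jump to.
RULES = {
    ("q1_uses_feature", "yes"): "q3_rating",  # feature users skip "why not"
}


def next_question(current: str, option_id: Optional[str]) -> Optional[str]:
    """Follow a matching rule if there is one, otherwise go to the next question."""
    target = RULES.get((current, option_id))
    if target is not None:
        return target
    index = QUESTIONS.index(current)
    return QUESTIONS[index + 1] if index + 1 < len(QUESTIONS) else None


print(next_question("q1_uses_feature", "yes"))  # q3_rating
print(next_question("q1_uses_feature", "no"))   # q2_why_not
```

Keeping rules as data rather than code means the renderer and any analytics that need to know which questions a respondent could have seen can share one lookup.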

The response UI should load quickly and feel good on a phone:

Avoid heavy client-side logic. Render simple forms, validate required answers, and submit responses in small payloads.

Make your in-app feedback widget and survey pages usable for everyone:

Public links and email survey invites attract spam. Add lightweight protections:

This combination keeps the survey analytics clean without harming legitimate respondents.

Collection channels are how your survey reaches people. The best apps support at least three: an in-app widget for active users, email invitations for targeted outreach, and shareable links for broad distribution. Each channel has different tradeoffs in response rate, data quality, and risk of misuse.

Keep the widget easy to find but not annoying. Common placements are a small button in the bottom corner, a tab on the side, or a modal that appears after specific actions.

Triggers should be rule-based so you only interrupt when it makes sense:

Add frequency limits (e.g., “no more than once per week per user”) and a clear “don’t show again” option.
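Here is a minimal sketch of the frequency check, assuming you store each user's last prompt time and a "don't show again" flag (the function and parameter names are hypothetical); the seven-day window mirrors the once-per-week example.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

MIN_INTERVAL = timedelta(days=7)  # "no more than once per week per user"


def should_show_widget(last_shown: Optional[datetime], dont_show_again: bool,
                       now: datetime) -> bool:
    """Decide whether a triggered prompt may actually be displayed."""
    if dont_show_again:
        return False  # the opt-out always wins
    if last_shown is None:
        return True   # never prompted before
    return now - last_shown >= MIN_INTERVAL


now = datetime(2025, 11, 23, tzinfo=timezone.utc)
print(should_show_widget(now - timedelta(days=3), False, now))  # False
print(should_show_widget(now - timedelta(days=8), False, now))  # True
```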

Email works best for transactional moments (e.g., after a trial ends) or for sampling (N users per week). To keep invite links from being forwarded or reused, generate single-use tokens tied to a recipient and survey.
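A minimal sketch of single-use invite tokens using Python's standard `secrets` and `hashlib` modules, assuming an in-memory dict stands in for a database table (the helper names are hypothetical). Only a hash of the token is stored, and redeeming it binds the response to one recipient and one survey.

```python
import hashlib
import secrets

# token hash -> invite record; a stand-in for a database table.
invites: dict[str, dict] = {}


def _hash(token: str) -> str:
    return hashlib.sha256(token.encode()).hexdigest()


def create_invite(recipient: str, survey_id: str) -> str:
    """Issue a random token for one recipient and one survey."""
    token = secrets.token_urlsafe(32)
    invites[_hash(token)] = {"recipient": recipient, "survey_id": survey_id, "used": False}
    return token  # goes into the emailed link; only its hash is stored


def redeem_invite(token: str, survey_id: str):
    """Return the recipient if the token is valid for this survey, else None."""
    invite = invites.get(_hash(token))
    if invite is None or invite["used"] or invite["survey_id"] != survey_id:
        return None
    invite["used"] = True  # single use: a forwarded or replayed link now fails
    return invite["recipient"]


token = create_invite("ana@example.com", "s1")
print(redeem_invite(token, "s1"))  # ana@example.com
print(redeem_invite(token, "s1"))  # None
```

Storing the hash rather than the raw token means a leaked database dump can't be turned into working survey links.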

Recommended token rules:

Use public links when you want reach: marketing NPS, event feedback, or community surveys. Plan for spam controls (rate limiting, CAPTCHA, optional email verification).
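For the rate-limiting part, a minimal sliding-window sketch keyed by client (for example, an IP address). The default of 5 submissions per 60 seconds is an arbitrary assumption, and a production setup would usually keep these counters in a shared store rather than in process memory.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class SlidingWindowLimiter:
    """Allow at most `limit` events per `window` seconds for each key."""

    def __init__(self, limit: int = 5, window: float = 60.0):
        self.limit = limit
        self.window = window
        self.events = defaultdict(deque)  # key -> timestamps of accepted events

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        recent = self.events[key]
        # Drop timestamps that have fallen out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.limit:
            return False
        recent.append(now)
        return True


limiter = SlidingWindowLimiter(limit=2, window=60)
print([limiter.allow("203.0.113.7", now=t) for t in (0, 1, 2, 61)])
# [True, True, False, True]
```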

Use authenticated surveys when answers must map to an account or role: customer support CSAT, internal employee feedback, or workspace-level product feedback.

Reminders can lift responses, but only with guardrails:

These basics make your feedback collection web app feel considerate while keeping your data trustworthy.

Authentication and authorization are where a feedback collection web app can quietly go wrong: the product works, but the wrong person can see the wrong survey results. Treat identity and tenant boundaries as core features, not add-ons.

For an MVP, email/password sign-in is usually enough: it’s fast to implement and easy to support.

If you want a smoother sign-in without committing to enterprise complexity, consider magic links (passwordless). They reduce forgotten-password tickets, but require good email deliverability and link-expiry handling.

Plan SSO (SAML/OIDC) as a later upgrade. The key is designing your user model so adding SSO doesn’t force a rewrite (e.g., support multiple “identities” per user).

A survey builder needs clear, predictable access:

Keep permissions explicit in code (policy checks around every read/write), not just in the UI.

Workspaces let agencies, teams, or products share the same platform while isolating data. Every survey, response, and integration record should carry a workspace_id, and every query should scope by it.
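A minimal sketch of a policy check that combines tenant scoping with roles. The admin/analyst/viewer role names and the action names are illustrative assumptions, not a fixed permission model; the point is that the workspace must match before the role is even considered.

```python
from dataclasses import dataclass

# Illustrative role -> allowed actions (adjust to your own roles).
PERMISSIONS = {
    "admin": {"view_results", "edit_survey", "publish_survey", "manage_users"},
    "analyst": {"view_results", "edit_survey", "publish_survey"},
    "viewer": {"view_results"},
}


@dataclass(frozen=True)
class Membership:
    user_id: str
    workspace_id: str
    role: str


@dataclass(frozen=True)
class Survey:
    id: str
    workspace_id: str


def can(membership: Membership, action: str, survey: Survey) -> bool:
    """Deny by default: wrong workspace means no access, whatever the role."""
    if membership.workspace_id != survey.workspace_id:
        return False
    return action in PERMISSIONS.get(membership.role, set())


def surveys_for(membership: Membership, all_surveys: list) -> list:
    """Listing queries always filter by the caller's workspace_id."""
    return [s for s in all_surveys if s.workspace_id == membership.workspace_id]


viewer = Membership("u1", "ws_a", "viewer")
print(can(viewer, "view_results", Survey("s1", "ws_a")))  # True
print(can(viewer, "edit_survey", Survey("s1", "ws_a")))   # False
```

In SQL terms, `surveys_for` corresponds to a mandatory `WHERE workspace_id = ?` on every query.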

Decide early whether you’ll support users in multiple workspaces, and how switching works.

If you expose API keys (for embedding an in-app feedback widget, syncing to a feedback database, etc.), define:

For webhooks, sign requests, retry safely, and let users disable or regenerate secrets from a simple settings screen.
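A minimal sketch of HMAC-SHA256 webhook signing with Python's `hmac` module. Signing "timestamp.body" and the 300-second tolerance are assumptions, not a standard, and the secret is a hypothetical placeholder; the important parts are the shared per-endpoint secret and the constant-time comparison via `hmac.compare_digest`. The timestamp lets receivers reject old, replayed deliveries.

```python
import hashlib
import hmac
import json
import time
from typing import Optional


def sign(secret: bytes, body: bytes, timestamp: int) -> str:
    """Signature over 'timestamp.body' so a captured request can't be replayed later."""
    message = str(timestamp).encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(secret: bytes, body: bytes, timestamp: int, signature: str,
           tolerance: int = 300, now: Optional[int] = None) -> bool:
    """Receiver side: check freshness, then compare signatures in constant time."""
    now = int(time.time()) if now is None else now
    if abs(now - timestamp) > tolerance:
        return False
    return hmac.compare_digest(sign(secret, body, timestamp), signature)


secret = b"whsec_example"  # hypothetical per-endpoint secret
body = json.dumps({"event": "response.submitted", "survey_id": "s1"}).encode()
ts = 1_700_000_000
sig = sign(secret, body, ts)
print(verify(secret, body, ts, sig, now=ts + 10))  # True
```

Regenerating the secret from the settings screen immediately invalidates signatures made with the old one.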

Analytics is where a feedback app becomes useful for decision-making, not just data storage. Start by defining a small set of metrics you can trust, then build views that answer everyday questions quickly.

Instrument key events for each survey:

From these, you can calculate start rate (starts/views) and completion rate (completions/starts). Also log drop-off points—for example, the last question seen or the step where users abandoned the flow. This helps you spot surveys that are too long, confusing, or poorly targeted.
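A minimal sketch of those calculations, assuming a hypothetical event log of (session, event, question) tuples in chronological order, with `view`, `start`, `progress`, and `complete` events (the `progress` name is an assumption). Drop-off is the last question seen in sessions that started but never completed.

```python
from collections import Counter

# (session_id, event, question_id) tuples; illustrative data only.
events = [
    ("s1", "view", None), ("s1", "start", "q1"), ("s1", "complete", "q3"),
    ("s2", "view", None), ("s2", "start", "q1"), ("s2", "progress", "q2"),
    ("s3", "view", None),
    ("s4", "view", None), ("s4", "start", "q1"),
]


def funnel(events):
    """Start rate, completion rate, and drop-off points from raw survey events."""
    sessions = {}
    for session_id, event, question_id in events:
        s = sessions.setdefault(session_id, {"events": set(), "last_question": None})
        s["events"].add(event)
        if question_id is not None:
            s["last_question"] = question_id  # last question this session reached
    views = sum("view" in s["events"] for s in sessions.values())
    starts = sum("start" in s["events"] for s in sessions.values())
    completions = sum("complete" in s["events"] for s in sessions.values())
    # Sessions that started but never completed, grouped by where they stopped.
    drop_off = Counter(
        s["last_question"] for s in sessions.values()
        if "start" in s["events"] and "complete" not in s["events"]
    )
    return {
        "start_rate": starts / views if views else 0.0,
        "completion_rate": completions / starts if starts else 0.0,
        "drop_off": dict(drop_off),
    }


result = funnel(events)
print(result["start_rate"])  # 0.75
```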

Before advanced BI integrations, ship a simple reporting area with a few high-signal widgets:

Keep charts simple and fast. Most users want to confirm, “Did this change improve sentiment?” or “Is this survey getting traction?”

Add filters early so results are credible and actionable:

Segmenting by channel is especially important: email invites often have different completion rates than in-product prompts.

Offer CSV export for survey summaries and raw responses. Include columns for timestamps, channel, user attributes (where permitted), and question IDs/text. This gives teams immediate flexibility in spreadsheets while you iterate toward richer reports.
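A minimal sketch of a raw-response export with Python's `csv` module, using one row per answer so question IDs and text sit side by side. The column names and rows are illustrative assumptions based on the list above; `csv.DictWriter` takes care of quoting commas and quotes in free-text answers.

```python
import csv
import io

COLUMNS = ["response_id", "submitted_at", "channel",
           "question_id", "question_text", "answer"]

# Illustrative rows: one per answer, already joined with response metadata.
rows = [
    {"response_id": "r1", "submitted_at": "2025-11-20T10:15:00Z", "channel": "email",
     "question_id": "q1", "question_text": "How likely are you to recommend us?",
     "answer": "9"},
    {"response_id": "r1", "submitted_at": "2025-11-20T10:15:00Z", "channel": "email",
     "question_id": "q2", "question_text": "What could we improve?",
     "answer": "Faster exports, please"},
]


def export_csv(rows) -> str:
    """Serialize answer rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)  # free text with commas gets quoted automatically
    return buffer.getvalue()


print(export_csv(rows))
```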

Feedback and survey apps often collect personal data without meaning to: emails in invites, free-text answers that mention names, IP addresses in logs, or device IDs in an in-app widget. The safest approach is to design for “minimum necessary data” from day one.

Create a simple data dictionary for your feedback collection web app that lists every field you store, why you store it, where it appears in the UI, and who can access it. This keeps the survey builder honest and helps you avoid “just in case” fields.

Examples of fields to question:

If you offer anonymous surveys, treat “anonymous” as a product promise: don’t store identifiers in hidden fields, and avoid mixing response data with authentication data.

Make consent explicit when you need it (e.g., marketing follow-ups). Add clear wording at the point of collection, not buried in settings. For GDPR-friendly surveys, also plan operational flows:

Use HTTPS everywhere (encryption in transit). Protect secrets with a managed secrets store (not environment variables copied into docs or tickets). Encrypt sensitive columns at rest when appropriate, and ensure backups are encrypted and tested with restore drills.

Use plain language: who is collecting the data, why, how long you keep it, and how to contact you. If you use subprocessors (email delivery, analytics), list them and provide a way to sign a data processing agreement. Keep your privacy page easy to find from the survey response UI and in-app feedback widget.

Traffic patterns for surveys are spiky: a new email campaign can turn “quiet” into thousands of submissions in minutes. Designing for reliability early prevents bad data, duplicate responses, and slow dashboards.

People abandon forms, lose connectivity, or switch devices mid-survey. Validate inputs server-side, but be deliberate about what must be required.

For long surveys, consider saving progress as a draft: store partial answers with a status like in_progress, and only mark a response submitted once all required questions pass validation. Return clear field-level errors so the UI can highlight exactly what to fix.
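A minimal sketch of that submit path, assuming a hypothetical `Question` shape with a `required` flag: the response stays `in_progress` until every required question has an answer, and errors come back keyed by question ID so the UI can highlight each field.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    id: str
    required: bool


def _is_blank(value) -> bool:
    """Missing or whitespace-only answers count as unanswered; 0 is a real answer."""
    return value is None or (isinstance(value, str) and not value.strip())


def submit(questions, answers: dict) -> dict:
    """Mark the response 'submitted' only if every required answer is present."""
    errors = {
        q.id: "This question is required."
        for q in questions
        if q.required and _is_blank(answers.get(q.id))
    }
    return {
        "status": "in_progress" if errors else "submitted",
        "answers": answers,
        "errors": errors,  # keyed by question id for field-level highlighting
    }


questions = [Question("q1", True), Question("q2", False), Question("q3", True)]
draft = submit(questions, {"q1": "Yes"})
print(draft["status"], draft["errors"])  # in_progress {'q3': 'This question is required.'}
```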

Double-clicks, back-button resubmits, and flaky mobile networks can easily create duplicate records.

Make your submission endpoint idempotent by accepting an idempotency key (a unique token generated by the client for that response). On the server, store the key with the response and enforce a uniqueness constraint. If the same key is sent again, return the original result instead of inserting a new row.
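A minimal sketch using SQLite from the Python standard library; the table and column names are assumptions, and any relational database with unique constraints works the same way. The `UNIQUE` constraint on `idempotency_key` does the deduplication, and a retried request gets the original response ID back.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE responses (
        id INTEGER PRIMARY KEY,
        survey_id TEXT NOT NULL,
        idempotency_key TEXT NOT NULL UNIQUE,
        payload TEXT NOT NULL
    )
""")


def submit_response(survey_id: str, idempotency_key: str, payload: str) -> int:
    """Insert once per key; a repeated key returns the id of the original row."""
    try:
        with conn:  # commits on success, rolls back on error
            cur = conn.execute(
                "INSERT INTO responses (survey_id, idempotency_key, payload) "
                "VALUES (?, ?, ?)",
                (survey_id, idempotency_key, payload),
            )
            return cur.lastrowid
    except sqlite3.IntegrityError:
        # Same key seen before: return the existing response instead of a new row.
        row = conn.execute(
            "SELECT id FROM responses WHERE idempotency_key = ?", (idempotency_key,)
        ).fetchone()
        return row[0]


first = submit_response("s1", "key-abc", '{"q1": 9}')
retry = submit_response("s1", "key-abc", '{"q1": 9}')  # double-click or network retry
print(first == retry)  # True
```

In PostgreSQL, `INSERT ... ON CONFLICT (idempotency_key) DO NOTHING` followed by a lookup is a common alternative to catching the error.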

This is especially important for:

Keep the “submit response” request fast. Use a queue/worker for anything that doesn’t need to block the user:

Implement retries with backoff, dead-letter queues for repeated failures, and job deduplication where applicable.
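A minimal sketch of a worker-side retry loop with exponential backoff, jitter, and a dead-letter list, assuming jobs are plain callables. Real queue libraries usually provide these knobs themselves, so treat the function name and defaults here as hypothetical.

```python
import random
import time


def run_with_retries(job, max_attempts=4, base_delay=0.5, dead_letter=None,
                     sleep=time.sleep):
    """Run job(); on failure, wait base_delay * 2**attempt plus jitter and retry.

    After the final failed attempt the job and its error go to `dead_letter`
    (if given) and None is returned. `sleep` is injectable for tests.
    """
    for attempt in range(max_attempts):
        try:
            return job()
        except Exception as exc:
            if attempt == max_attempts - 1:
                if dead_letter is not None:
                    dead_letter.append((job, exc))  # park it for manual inspection
                return None
            delay = base_delay * 2 ** attempt
            sleep(delay + random.uniform(0, delay / 2))  # jitter spreads retries out


calls = {"n": 0}


def flaky_send_email():
    """Hypothetical job that fails twice before succeeding."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("SMTP timeout")
    return "sent"


dead = []
print(run_with_retries(flaky_send_email, dead_letter=dead, sleep=lambda s: None))  # sent
```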

Analytics pages can become your slowest part as responses grow.

- Add indexes on survey_id, created_at, workspace_id, and any “status” field.

A practical rule: store raw events, but serve dashboards from pre-aggregated tables when queries start to hurt.
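A minimal sketch in SQLite; the schema and the daily rollup table are assumptions (in PostgreSQL the same idea is often a materialized view or a scheduled job). A composite index serves the usual dashboard filter, and the dashboard reads a small pre-aggregated table instead of scanning raw responses.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE responses (
        id INTEGER PRIMARY KEY,
        workspace_id TEXT NOT NULL,
        survey_id TEXT NOT NULL,
        status TEXT NOT NULL,
        created_at TEXT NOT NULL  -- ISO-8601 timestamp
    );
    -- Matches the usual dashboard filter: one workspace, one survey, a date range.
    CREATE INDEX idx_responses_ws_survey_created
        ON responses (workspace_id, survey_id, created_at);

    CREATE TABLE daily_response_counts (
        workspace_id TEXT NOT NULL,
        survey_id TEXT NOT NULL,
        day TEXT NOT NULL,
        submitted INTEGER NOT NULL,
        PRIMARY KEY (workspace_id, survey_id, day)
    );
""")

conn.executemany(
    "INSERT INTO responses (workspace_id, survey_id, status, created_at) VALUES (?, ?, ?, ?)",
    [("ws1", "s1", "submitted", "2025-11-20T09:00:00"),
     ("ws1", "s1", "submitted", "2025-11-20T17:30:00"),
     ("ws1", "s1", "in_progress", "2025-11-21T08:00:00"),
     ("ws1", "s1", "submitted", "2025-11-21T12:00:00")],
)


def refresh_rollup(conn):
    """Rebuild daily counts; run from a background job, not on every page view."""
    with conn:
        conn.execute("DELETE FROM daily_response_counts")
        conn.execute("""
            INSERT INTO daily_response_counts (workspace_id, survey_id, day, submitted)
            SELECT workspace_id, survey_id, substr(created_at, 1, 10), COUNT(*)
            FROM responses
            WHERE status = 'submitted'
            GROUP BY workspace_id, survey_id, substr(created_at, 1, 10)
        """)


refresh_rollup(conn)
print(conn.execute(
    "SELECT day, submitted FROM daily_response_counts WHERE survey_id = ? ORDER BY day",
    ("s1",),
).fetchall())  # [('2025-11-20', 2), ('2025-11-21', 1)]
```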

Shipping a survey app is less about “finishing” and more about preventing regressions as you add question types, channels, and permissions. A small, consistent test suite plus a repeatable QA routine will save you from broken links, missing responses, and incorrect analytics.

Focus automated tests on logic and end-to-end flows that are hard to spot manually:

Keep fixtures small and explicit. If you version survey schemas, add a test that loads “old” survey definitions to ensure you can still render and analyze historic responses.

Before every release, run a short checklist that mirrors real usage:

Maintain a staging environment that mirrors production settings (auth, email provider, storage). Add seed data: a few example workspaces, surveys (NPS, CSAT, multi-step), and sample responses. This makes regression testing and demos repeatable and prevents “it works on my account” surprises.

Surveys fail quietly unless you watch the right signals:

A simple rule: if a customer can’t collect responses for 15 minutes, you should know before they email you.
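A minimal sketch of that check, assuming you can fetch recent submission timestamps per survey and that a scheduler runs it every few minutes. The 15-minute window comes from the rule above; the `expected_per_window` guard is an assumption so that naturally quiet surveys don't trigger alerts.

```python
from datetime import datetime, timedelta, timezone

WINDOW = timedelta(minutes=15)


def collection_stalled(submitted_at: list, now: datetime,
                       expected_per_window: float) -> bool:
    """True if a survey that normally gets traffic has had no submissions in WINDOW."""
    if expected_per_window < 1:
        return False  # too quiet to tell silence from normal behavior
    recent = [t for t in submitted_at if now - t <= WINDOW]
    return len(recent) == 0


now = datetime(2025, 11, 23, 12, 0, tzinfo=timezone.utc)
history = [now - timedelta(minutes=m) for m in (20, 35, 50)]
print(collection_stalled(history, now, expected_per_window=3))  # True
```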

Shipping a feedback collection web app isn’t a single “go-live” moment. Treat launch as a controlled learning cycle so you can validate your user survey application with real teams while keeping support manageable.

Start with a private beta (5–20 trusted customers) where you can watch how people actually build surveys, share links, and interpret results. Move to a limited rollout by opening access to a waitlist or a specific segment (e.g., startups only), then proceed to a full release once core flows are stable and your support load feels predictable.

Define success metrics for each phase: activation rate (created first survey), response rate, and time-to-first-insight (viewed analytics or exported results). These are more useful than raw signups.

Make onboarding opinionated:

Keep onboarding inside the product, not in docs alone.

Feedback is only useful when it’s acted on. Add a simple workflow: assign owners, tag themes, set a status (new → in progress → resolved), and help teams close the loop by notifying respondents when an issue is addressed.
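A minimal sketch of that status workflow as an explicit transition table, so invalid jumps are rejected in one place. The allowed transitions (including reopening a resolved item) and the `notify_respondent` / `notification_queued` fields are illustrative assumptions.

```python
ALLOWED = {
    "new": {"in_progress"},
    "in_progress": {"resolved"},
    "resolved": {"in_progress"},  # assumption: resolved items can be reopened
}


def transition(item: dict, new_status: str) -> dict:
    """Return an updated copy of the item, or raise if the move isn't allowed."""
    current = item["status"]
    if new_status not in ALLOWED.get(current, set()):
        raise ValueError(f"Cannot move from {current!r} to {new_status!r}")
    updated = {**item, "status": new_status}
    if new_status == "resolved" and item.get("notify_respondent"):
        updated["notification_queued"] = True  # close the loop with the respondent
    return updated


item = {"id": "fb_1", "status": "new", "owner": "maria",
        "tags": ["billing"], "notify_respondent": True}
item = transition(item, "in_progress")
item = transition(item, "resolved")
print(item["status"], item.get("notification_queued"))  # resolved True
```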

Prioritize integrations (Slack, Jira, Zendesk, HubSpot), add more NPS/CSAT templates, and refine packaging. When you’re ready to monetize, point users to your plans at /pricing.

If you’re iterating quickly, consider how you’ll manage changes safely (rollbacks, staging, and quick redeploys). Platforms like Koder.ai lean into this with snapshots and rollback plus one-click hosting—useful when you’re experimenting with survey templates, workflows, and analytics without wanting to babysit infrastructure in the early phases.

Start by choosing one primary goal:

Keep the first release narrow enough to ship in 2–6 weeks and measure outcomes quickly.

Pick metrics you can calculate within weeks and define them precisely. Common choices:

If you can’t explain where the numerator/denominator come from in your data model, the metric isn’t ready.

Keep roles simple and aligned with real ownership:

Most early product failures come from unclear permissions and “everyone can publish, nobody maintains.”

A minimal, high-leverage set is:

If a screen doesn’t answer a clear question, cut it from v1.

For most teams, start with a modular monolith: one backend app + one database + clear internal modules (auth, surveys, responses, reporting). Add managed components only where needed, like:

Microservices usually slow early shipping due to deployment and debugging overhead.

Use a relational core (often PostgreSQL) with these entities:

Versioning is key: editing a survey should create a new SurveyVersion so historical responses remain interpretable.

Keep the builder small but flexible:

If you add branching, keep it minimal (e.g., “If option X → jump to question Y”) and model it as rules attached to options.

A practical minimum is three channels:

Design each channel to record channel metadata so you can segment results later.

Treat anonymity as a product promise and reflect it in your data collection:

Also keep a simple data dictionary so you can justify every stored field.

Focus on the failure modes that create bad data:

- Only mark a response submitted when it is complete.
- Use indexes (workspace_id, survey_id, created_at) and cached aggregates to keep dashboards fast.

Add alerts for “responses dropped to zero” and spikes in submit errors so collection doesn’t fail silently.