Oct 24, 2025·8 min

How to Build a Website That Validates a SaaS Before Coding

Learn how to build a validation website that tests demand, messaging, and pricing before coding a SaaS—using waitlists, smoke tests, and analytics.

“Pre-SaaS validation” means using a simple website to collect evidence that your idea is worth building—before you invest months in product development. Instead of shipping features, you’re testing whether a specific group of people cares enough to take a meaningful action.

A validation site should help you make clear go/no-go decisions in four areas:

Good validation data is tied to behavior: email signups, demo requests, “notify me” clicks, survey completions, or replies to a follow-up message. Page views and time-on-site can add context, but they rarely answer the hard questions.

Validation reduces risk; it doesn't guarantee a successful SaaS. A landing page can't prove retention, long-term willingness to pay, or whether your product will beat competitors once they respond. What it can do is lower the odds that you spend months building something nobody wants.

When you build software, you’re creating functionality. When you build evidence, you’re testing assumptions.

A pre-SaaS validation website is a structured experiment: one clear problem, one specific audience, one crisp value proposition, and one call-to-action. Weak results aren’t failure—they’re a fast, inexpensive signal to revise the idea, narrow the audience, adjust messaging, or rethink pricing before writing real code.

A validation website only works when it’s built around a specific bet. If you try to “appeal to everyone,” you won’t know who the page worked for—or why.

Choose a single primary persona you can describe in one sentence (role + context). Example: “Operations managers at 50–200 person logistics companies who coordinate deliveries with spreadsheets.”

Then define one job-to-be-done that is clearly painful and frequent. Not “be more productive,” but “reduce late deliveries caused by last-minute route changes.” This keeps your copy focused and your results interpretable.

Your hypothesis should read like a testable claim:

Example: “Ops managers at mid-size logistics firms will join a waitlist for a tool that automates route-change alerts because customer penalties for late deliveries have increased.”

List the riskiest assumptions behind your idea, such as:

Decide what outcomes would make you proceed or stop. For example: “At least 20 qualified signups in two weeks from one channel, and 30% of them agree to a 15-minute call.” Pre-defining this prevents you from “interpreting” weak signals as success.
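Writing the rule down as code before launch makes it harder to move the goalposts later. The following is a minimal sketch. It assumes you count qualified signups and call acceptances by hand or export them from your form tool. `DecisionRule` and `evaluate` are hypothetical names, and the defaults simply mirror the example thresholds above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecisionRule:
    """Go/no-go thresholds fixed before launch (defaults mirror the example rule)."""
    window_days: int = 14              # "in two weeks"
    min_qualified_signups: int = 20    # "at least 20 qualified signups"
    min_call_acceptance: float = 0.30  # "30% of them agree to a 15-minute call"


def evaluate(rule: DecisionRule, qualified_signups: int,
             calls_accepted: int, days_elapsed: int) -> str:
    """Return 'keep running', 'go', or 'no-go' for a single-channel test."""
    if days_elapsed < rule.window_days:
        return "keep running"  # don't judge before the window closes
    if qualified_signups == 0 or qualified_signups < rule.min_qualified_signups:
        return "no-go"
    acceptance = calls_accepted / qualified_signups
    return "go" if acceptance >= rule.min_call_acceptance else "no-go"
```

Checking only at the end of the window is deliberate. It stops one unusually good (or bad) day from deciding the test.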

A pre-SaaS validation page isn’t there to “look complete.” It’s there to answer a specific question: Will the right people take the next step when they see this offer? That means every element should support a clear experiment—not a feature tour.

Keep the page tight and predictable, so visitors don’t get lost and your results aren’t muddy.

If you add extra sections, make them answer objections (time, risk, switching, privacy) rather than expanding into a “full product page.”

Choose a single main call-to-action so your data stays clean:

Use secondary links sparingly (e.g., “See how it works”) and keep them from competing with the main CTA.

Feature lists often attract “nice idea” interest, not real commitment. Instead, describe the outcome with a specific scenario your user recognizes:

“Automatically categorize expenses” becomes: “Upload a card statement and get a client-ready expense report—tagged by project—before your next invoicing run.”

Write the way your target customer talks in emails, tickets, or job posts. Replace internal jargon with observable results, time saved, mistakes avoided, and moments of relief. The goal is not to sound impressive—it’s to be instantly understood and easy to say yes to.

If your validation website is a test, your messaging is the measurement tool. Rather than polishing copy for its own sake, aim to make visitors self-select quickly so you can compare conversion rates across different promises.

A practical headline structure is:

Outcome + audience + time/effort saver

Examples:

This format is measurable because it sets a clear expectation. If the promise resonates, you’ll see higher click-through to your call-to-action (CTA) and more signups.
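To tell whether one headline's higher signup rate is more than noise, one common option is a two-proportion z-test. This is a standard statistical technique, not something the article prescribes. The following is a minimal sketch using only the Python standard library. `compare_variants` is a hypothetical helper, and the normal approximation is only rough when signup counts are small, which is typical at this stage.

```python
from math import sqrt
from statistics import NormalDist


def compare_variants(visitors_a: int, signups_a: int,
                     visitors_b: int, signups_b: int) -> tuple[float, float, float]:
    """Two-sided two-proportion z-test. Returns (rate_a, rate_b, p_value)."""
    rate_a = signups_a / visitors_a
    rate_b = signups_b / visitors_b
    # Pooled rate: the single conversion rate we'd expect if the headlines made no difference.
    pooled = (signups_a + signups_b) / (visitors_a + visitors_b)
    se = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if se == 0:  # no conversions (or all conversions) in both variants: nothing to compare
        return rate_a, rate_b, 1.0
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_a, rate_b, p_value
```

A p-value below a threshold you chose in advance (0.05 is a common convention) suggests the difference is unlikely to be chance alone. With only a few dozen signups per variant, expect many comparisons to be inconclusive.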

Your subheadline should clarify two things:

What pain you’re addressing (in the user’s words)

How you solve it (at a high level, not features)

Example:

“Stop losing leads to slow replies. We route inbound requests to the right teammate and send follow-up messages automatically until the prospect books.”

Avoid vague claims like “all-in-one” or “best solution.” They’re hard to test and don’t help a visitor decide.

Benefit bullets work best when they’re specific enough to be checked later. Even if you’re not delivering yet, you’re testing what outcomes people want.

If you don’t have real numbers, use directional wording (“reduce,” “save time,” “fewer”) and test which version converts better.

A short, consistent flow removes friction and makes your offer feel real:

When you change messaging, keep the rest of the page stable so your conversion tracking reflects the copy—not a redesign.

Your call-to-action (CTA) is the measurement device on a validation site. If it asks for too little, you’ll collect vague interest. If it asks for too much, you’ll filter out people who would have been great customers. The right CTA depends on what you’re trying to learn right now.

Pick a single “offer” that matches your stage, then build the page around it:

Mixing these (“join the waitlist or book a call or pre-pay”) dilutes the signal and makes conversion rates harder to interpret.

A simple rule: the more confident you are in the audience and problem, the more friction you can add to improve lead quality.

If you use a form, include one question that helps you segment later (e.g., “What are you trying to accomplish?”). That makes follow-up interviews far more useful.

Incentives can help, but they should be specific and safe.

Offer early access or a limited-time discount without implying guaranteed features or dates. Set expectations clearly: what signups will receive (updates, an invitation to a pilot, a short interview request), and a realistic timeline range (e.g., “aiming to start pilots in 4–6 weeks”).

That clarity increases trust and reduces “junk signups” that inflate your numbers but don’t convert later.

Pricing isn’t something to “figure out later.” It’s part of the promise you’re making—and it strongly affects who signs up. A pre-SaaS validation site can test willingness to pay without collecting money or misleading anyone.

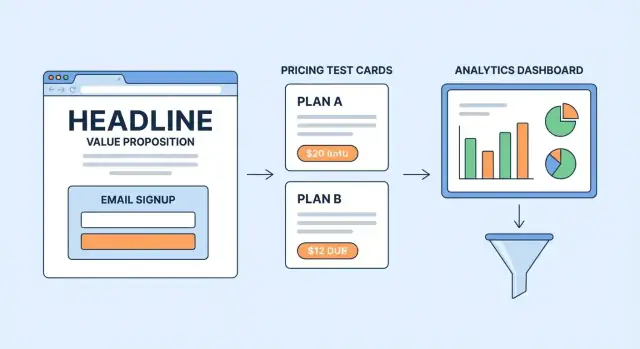

Create 2–3 plan anchors (for example: Starter / Pro / Team) even if the details aren’t final. The point is to learn which range and packaging feels acceptable.

Keep each plan simple: a short description, one main benefit, and a clear monthly price. Avoid fake discounts or “limited time” pressure.

Use a high-intent CTA like “Start trial”—but don’t pretend the product exists.

When someone clicks, send them to a page that says the truth:

This preserves the signal (they tried to buy) while staying transparent.
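One way to wire up the click-through is a handler that logs the attempt and then renders the truthful holding page. The following is a minimal sketch. It assumes an in-memory event list stands in for your analytics tool. `PLANS`, the prices, `handle_start_trial`, and the message wording are all hypothetical placeholders.

```python
from datetime import datetime, timezone

# Hypothetical plan anchors and monthly prices (placeholders, not recommendations).
PLANS = {"starter": 19, "pro": 49, "team": 99}


def handle_start_trial(plan: str, events: list[dict], utm_source: str = "(none)") -> str:
    """Log a 'start trial' attempt, then return honest copy for the holding page."""
    if plan not in PLANS:
        raise ValueError(f"unknown plan: {plan!r}")
    events.append({
        "event": "start_trial_click",  # the high-intent signal: they tried to buy
        "plan": plan,
        "monthly_price": PLANS[plan],
        "utm_source": utm_source,
        "at": datetime.now(timezone.utc).isoformat(),
    })
    # No payment is taken; the page says so plainly and offers the waitlist instead.
    return (f"Thanks for your interest in {plan.title()}. We haven't launched yet, "
            "and nothing has been charged. Join the waitlist for early access.")
```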

Don’t just test the number—test the structure. Try variants across different traffic runs:

Track engagement on the pricing section and the click-through rate per plan. Also track where people abandon:

If Pro gets most clicks but few waitlist submissions, your price or positioning may be too high—or the value isn’t clear yet.
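You can spot the Pro-versus-waitlist pattern above with two ratios per plan. The following is a minimal sketch. It assumes hypothetical event names (`pricing_view`, `plan_click`, `waitlist_submit`) and a waitlist form that records which plan the visitor clicked.

```python
from collections import Counter


def plan_funnel(events: list[dict]) -> dict[str, dict[str, float]]:
    """Per plan: click-through from the pricing section, and clicks that became waitlist submits."""
    pricing_views = sum(1 for e in events if e["event"] == "pricing_view")
    clicks = Counter(e["plan"] for e in events if e["event"] == "plan_click")
    submits = Counter(e["plan"] for e in events if e["event"] == "waitlist_submit")
    return {
        plan: {
            "click_through": n / pricing_views if pricing_views else 0.0,
            "click_to_submit": submits[plan] / n,  # n >= 1 because the plan was clicked
        }
        for plan, n in clicks.items()
    }
```

A plan with a high `click_through` but a low `click_to_submit` matches the case described above. People are interested in the tier but hesitate at the price or the promise.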

When you don’t have a product yet, trust is the currency you’re asking visitors to spend. The fastest way to lose it is to promise outcomes you can’t prove (“cut churn by 40%”) or imply customers you don’t have. Your validation site should feel honest, specific, and low-risk.

You can build credibility without logos or case studies by showing why you’re a believable person (or team) to solve this problem.

Briefly share:

Keep it concrete. “10 years in finance ops” is stronger than “passionate about productivity.”

Only include testimonials if they’re real and attributable. If you don’t have them yet, replace “testimonials” with previews of what people will get.

For instance:

Label these clearly as examples or previews.

Visitors hesitate because they fear spam, wasted time, or being trapped.

Add simple, truthful assurances:

A short FAQ section can do more for trust than another paragraph of hype. Address common concerns like:

The goal isn’t to look big—it’s to look dependable.

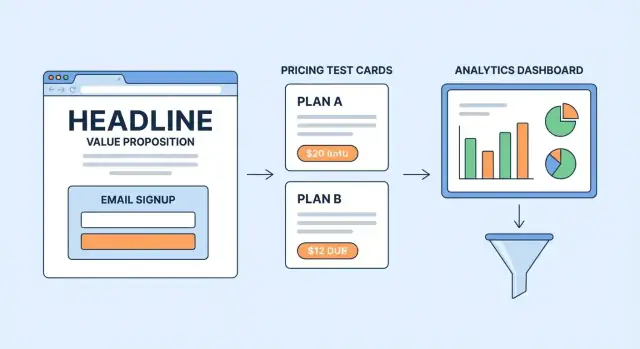

If your validation site can’t tell you who is interested and what they did, you’re guessing. Analytics for pre-SaaS validation should focus on behaviors that map to intent—not vanity numbers like total visits.

Start simple and make sure every important step is measurable. At a minimum, track:

If you have multiple CTAs (e.g., “Join waitlist” vs “Request demo”), track them separately so you can see which promise is pulling.

Raw counts don’t help you decide. Use a small set of ratios that describe where interest drops:

For signup quality, capture one lightweight qualifier in the form (e.g., role, company size, or “What are you trying to solve?”). Then review responses weekly.
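The drop-off ratios described above reduce to step-to-step conversion rates. The following is a minimal sketch. It assumes you can export event counts as a dictionary. The step names are hypothetical. Suffixing them per CTA (`:waitlist`, `:demo`) is one simple way to track separate CTAs separately.

```python
def step_conversion(counts: dict[str, int], steps: list[str]) -> list[tuple[str, float]]:
    """Conversion rate from each funnel step to the next one."""
    results = []
    for prev, nxt in zip(steps, steps[1:]):
        base = counts.get(prev, 0)
        rate = counts.get(nxt, 0) / base if base else 0.0
        results.append((f"{prev} -> {nxt}", rate))
    return results


def biggest_drop(counts: dict[str, int], steps: list[str]) -> tuple[str, float]:
    """The transition with the lowest conversion rate, i.e. where most interest is lost."""
    return min(step_conversion(counts, steps), key=lambda item: item[1])
```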

Add UTM parameters to every campaign link so you can compare outcomes across sources and angles (e.g., different ad copy or communities). A few parameters (utm_source, utm_campaign, utm_content) are enough, as long as you follow a simple naming convention for their values and stay consistent.
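Generating every link from one helper keeps the naming consistent. The following is a minimal sketch using Python's `urllib.parse`. `with_utm`, `read_utm`, the slug rule (lowercase, spaces to hyphens), and the example.com URL are assumptions for illustration.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def slug(value: str) -> str:
    """Normalize a label so 'Ops Pilot' and 'ops pilot' don't become two campaigns."""
    return "-".join(value.strip().lower().split())


def with_utm(url: str, source: str, campaign: str, content: str) -> str:
    """Append utm_source/utm_campaign/utm_content, keeping any existing query params."""
    parts = urlsplit(url)
    params = parse_qsl(parts.query)
    params += [("utm_source", slug(source)),
               ("utm_campaign", slug(campaign)),
               ("utm_content", slug(content))]
    return urlunsplit(parts._replace(query=urlencode(params)))


def read_utm(url: str) -> dict[str, str]:
    """Extract the UTM parameters from a landing URL."""
    return {k: v for k, v in parse_qsl(urlsplit(url).query) if k.startswith("utm_")}
```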

You don’t need a complex BI tool. A spreadsheet or basic dashboard should show weekly traffic by UTM, event counts, and the key conversion rates above. The goal is to spot meaningful shifts and decide what to test next—without drowning in data.
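If your analytics tool can export raw events, building a weekly table by UTM source takes only a few lines. The following is a minimal sketch. It assumes each event is a dict with a `date` (a `datetime.date` or an ISO string), an `event` name (hypothetically `page_view` or `form_submit`), and an optional `utm_source`.

```python
from collections import defaultdict
from datetime import date


def weekly_report(events: list[dict]) -> list[tuple[str, str, int, int, float]]:
    """Rows of (ISO week, utm_source, visits, signups, signup rate), sorted by week then source."""
    table = defaultdict(lambda: {"visits": 0, "signups": 0})
    for e in events:
        # Accept real dates or ISO strings, as CSV exports usually provide strings.
        d = e["date"] if isinstance(e["date"], date) else date.fromisoformat(e["date"])
        year, week, _ = d.isocalendar()
        key = (f"{year}-W{week:02d}", e.get("utm_source", "(none)"))
        if e["event"] == "page_view":
            table[key]["visits"] += 1
        elif e["event"] == "form_submit":
            table[key]["signups"] += 1
    return [
        (week, source, c["visits"], c["signups"],
         round(c["signups"] / c["visits"], 3) if c["visits"] else 0.0)
        for (week, source), c in sorted(table.items())
    ]
```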

Traffic is only useful for validation if it resembles your future customers. A thousand random visitors can create misleading conversion rates; fifty right-fit visitors can tell you what to build.

Pick channels where your target user already hangs out and where intent is visible:

Limit yourself to a few channels so you can isolate variables and compare results cleanly.

Write 2–4 variants of your ad or post, each anchored to a different value proposition. Keep everything else constant: same landing page, same CTA, same audience targeting (when possible). This makes the “why” behind performance easier to interpret.

Examples of message angles you can test:

Start with a budget you’re comfortable spending on insight. Your goal is directionally correct signals (which problem framing attracts qualified clicks), not a perfect customer acquisition cost (CAC) model.

Track quality, not just clicks: scroll depth, CTA completion, and follow-up actions like replying to a confirmation email.

Create a simple table or doc that records:

The best combo is the one that produces the strongest intent, not the cheapest click.
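Ranking runs by cost per qualified signup instead of cost per click makes this rule concrete. The following is a minimal sketch. `Run`, its fields, and the sample numbers are hypothetical, and "qualified" means whatever qualifier you defined for your form.

```python
from dataclasses import dataclass


@dataclass
class Run:
    channel: str
    angle: str              # message angle / value proposition tested
    spend: float
    clicks: int
    qualified_signups: int

    @property
    def cost_per_click(self) -> float:
        return self.spend / self.clicks if self.clicks else float("inf")

    @property
    def cost_per_qualified_signup(self) -> float:
        return self.spend / self.qualified_signups if self.qualified_signups else float("inf")


def rank_runs(runs: list[Run]) -> list[Run]:
    """Best first: lowest cost per qualified signup, regardless of click cost."""
    return sorted(runs, key=lambda r: r.cost_per_qualified_signup)
```

Take `Run("ads", "save time", 100.0, 200, 2)` and `Run("community", "fewer errors", 100.0, 50, 5)` as a made-up example. The community post costs four times more per click (2.0 vs 0.5), but it costs less than half as much per qualified signup (20 vs 50). That is the combination the rule above favors.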

A signup isn’t the end of validation—it’s permission to learn. Your goal is to turn “interested” into “specific”: who they are, what they’re trying to do, what they’ve already tried, and what would make them switch.

On your signup form, include one short question that turns anonymous demand into actionable context. Keep it multiple-choice or a short text field so completion doesn’t drop.

Examples that work well:

This single question makes your follow-up dramatically better—because you can ask about their reality instead of pitching your idea.

Add an optional checkbox like: “I’m open to a 15-minute call to share how I do this today.” The checkbox is a strong signal of motivation, and it keeps your outreach focused on qualified leads.
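A signup record needs only a few fields to support the qualifier question and the checkbox. The following is a minimal sketch. `Signup`, its field names, and `interview_shortlist` are hypothetical. Filtering on the checkbox plus a target role is one simple way to focus outreach.

```python
from dataclasses import dataclass


@dataclass
class Signup:
    email: str
    role: str                   # answer to the one segmenting question
    trying_to_solve: str = ""   # optional short free-text answer
    open_to_call: bool = False  # optional checkbox, unchecked by default


def interview_shortlist(signups: list[Signup], target_roles: set[str]) -> list[Signup]:
    """Signups who opted into a call and match a target persona, in signup order."""
    return [s for s in signups if s.open_to_call and s.role in target_roles]
```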

If you’re early, prioritize interviews with people who:

Send an automated email immediately after signup that asks one or two clarifying questions. Keep it reply-friendly (no long survey).

For example:

Then follow up manually with a short, specific invite: “If you have 15 minutes, I’d love to understand how you currently do X.”

Don’t lump every signup into one bucket. Segment by persona (role), problem, and workaround, and review conversion and replies per segment. Often, your best segment is smaller—but far more consistent.

If you want a simple next step, create 3–5 persona tags in your spreadsheet/CRM and keep interview notes grouped by tag. This makes patterns obvious and helps you avoid building for “everyone.”
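Once signups carry a persona tag, comparing segments takes a single grouping step. The following is a minimal sketch. It assumes each signup is a dict with a hypothetical `tag` and a boolean `replied` (whether they answered the follow-up email).

```python
from collections import defaultdict


def segment_summary(signups: list[dict]) -> dict[str, dict[str, float]]:
    """Signup count and follow-up reply rate per persona tag, best reply rate first."""
    totals: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # tag -> [signups, replies]
    for s in signups:
        totals[s["tag"]][0] += 1
        totals[s["tag"]][1] += int(s["replied"])
    summary = {tag: {"signups": n, "reply_rate": replies / n}
               for tag, (n, replies) in totals.items()}
    return dict(sorted(summary.items(), key=lambda kv: kv[1]["reply_rate"], reverse=True))
```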

Validation pages can feel “alive” forever—new ideas, new copy, new tweaks. The fastest way to learn is to treat iteration like a lab: controlled changes, clear timelines, and pre-set rules for what counts as a win.

Change one thing at a time so you know what caused the result. If you change the headline and the CTA, you’ll get noise instead of insight.

Good single-variable tests include:

Keep the rest of the page identical, and don’t “peek and tweak” mid-test.
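If your page copy lives in a config file or CMS entry, a quick check can confirm that a variant differs from the control in exactly one field. The following is a minimal sketch. The field names and `assert_single_variable` are hypothetical.

```python
def changed_fields(control: dict, variant: dict) -> set[str]:
    """Keys whose values differ between control and variant (including added/removed keys)."""
    return {k for k in control.keys() | variant.keys() if control.get(k) != variant.get(k)}


def assert_single_variable(control: dict, variant: dict) -> str:
    """Return the one field being tested, or raise if zero or several fields changed."""
    diff = changed_fields(control, variant)
    if len(diff) != 1:
        raise ValueError(f"expected exactly one change, found: {sorted(diff)}")
    return diff.pop()


control = {"headline": "Stop losing leads to slow replies",
           "cta_label": "Join the waitlist",
           "pro_price": 49}
```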

Decide in advance how long the test runs and how many visitors you need before calling it.

A practical rule for early validation:

If you can’t reach minimum traffic, that’s a signal too: your channel may not be viable yet, or targeting is off.
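To turn "how many visitors you need" into a number, one standard approach is the sample-size formula for comparing two conversion rates. This is a general statistical tool and an assumption here, not the article's own rule. The following is a minimal sketch using the Python standard library. `visitors_per_variant` is a hypothetical name, and the baseline and target rates are yours to choose.

```python
from math import ceil, sqrt
from statistics import NormalDist


def visitors_per_variant(p_baseline: float, p_target: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH variant to detect p_baseline -> p_target
    with a two-sided two-proportion test (normal approximation, no continuity correction)."""
    if not (0 < p_baseline < 1 and 0 < p_target < 1) or p_baseline == p_target:
        raise ValueError("rates must be in (0, 1) and differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_baseline + p_target) / 2
    spread = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
              + z_beta * sqrt(p_baseline * (1 - p_baseline) + p_target * (1 - p_target)))
    return ceil(spread ** 2 / (p_target - p_baseline) ** 2)
```

With a 5% baseline and a hoped-for 10%, this comes out to roughly 435 visitors per variant. Smaller expected lifts need far more traffic, which is one reason early tests should compare clearly different promises.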

Track: what changed, why you changed it, dates, traffic source, and results (conversion rate, email quality, interview acceptance). This prevents circular testing and helps you explain decisions to teammates or investors.
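A plain CSV file is enough for this log. The following is a minimal sketch using Python's `csv` module. The column names are assumptions based on the fields listed above. `format_log` derives the conversion rate from visitors and signups, so the rate can't drift from the raw counts.

```python
import csv
import io

# Columns follow the fields listed above; names are illustrative.
FIELDS = ["change", "reason", "start", "end", "traffic_source",
          "visitors", "signups", "conversion_rate", "interview_acceptance"]


def format_log(entries: list[dict]) -> str:
    """Render experiment entries as CSV text, computing conversion_rate from raw counts."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    for entry in entries:
        row = dict(entry)
        visitors = entry.get("visitors") or 0
        row["conversion_rate"] = f"{entry.get('signups', 0) / visitors:.3f}" if visitors else ""
        writer.writerow(row)
    return buffer.getvalue()
```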

Stop iterating the page and move to a pilot build when you see consistent signals, such as:

At that point, more button-color tests won’t beat building the smallest real workflow.

Your validation website did its job if it reduced uncertainty: you now know who wants this, what they expect, and how strongly they want it (measured by signups, replies, and willingness to pay). The build phase should be a direct continuation of those signals—not a fresh brainstorming session.

Pick the lightest path that can deliver the promised outcome:

Use your strongest demand segment as your scope filter. Build the first version around:

If pricing tests showed price sensitivity, keep the MVP's packaging flexible (tiers can come later). If higher-intent users clicked through to pricing, make your initial offering match what they expected to see on /pricing.

Early onboarding should confirm value fast and create a feedback loop:

Once your validation signals are strong, the bottleneck often becomes execution: turning a proven workflow into a real app quickly, while keeping iteration tight.

A vibe-coding platform like Koder.ai can help here. You can go from a spec (or even your landing-page promise plus interview notes) to a working web or mobile app through chat, then iterate fast using features like planning mode, snapshots and rollback, and source code export. That’s especially useful when you’re still translating discovery into product scope and want to ship a narrow MVP (commonly React on the front end, a Go backend with PostgreSQL, and Flutter for mobile) without rebuilding your entire process.

Document your decision rule (“We build X because Y users requested it and Z% attempted to pay”) and set a 2–4 week checkpoint. For a practical checklist of what to do next, see /blog/your-next-step.

A pre-SaaS validation website is a simple landing page designed to test whether a specific audience will take a meaningful action (e.g., waitlist signup, demo request, pre-order) before you build the product.

It’s less about “looking legit” and more about collecting evidence to make a go/no-go decision.

Prioritize behaviors that indicate intent:

Use page views and time-on-site only as supporting context, not as the decision metric.

A narrow focus matters because you can’t interpret results if you don’t know who the page worked for.

Pick one persona and one painful job-to-be-done so your messaging is specific, your traffic targeting is cleaner, and your conversion rate actually means something.

A useful hypothesis is testable and includes:

This makes your landing page a controlled experiment rather than a generic pitch.

Pre-define pass/fail criteria before you publish, such as:

Without decision rules, it’s easy to rationalize weak signals as success.

Use one clear page with:

Add extra sections only to address objections (switching risk, privacy, time-to-value), not to expand into a full feature tour.

Choose the CTA that matches what you need to learn:

Avoid offering multiple primary CTAs at once, or you’ll dilute the signal and muddle conversion data.

Run an ethical smoke test:

This tests intent without pretending the product exists.

Use verifiable “proof substitutes,” such as:

Avoid fake testimonials, invented logos, or outcome claims you can’t support yet.

Treat signups as the start of customer discovery:

The goal is to learn workflows, switching barriers, and what “must be true” for them to buy.