Nov 06, 2025·8 min

ByteDance’s Attention Engine: Algorithms + Creator Incentives

A practical look at how ByteDance scaled TikTok/Douyin with data-driven recommendations and creator incentives that boost retention, output, and growth.


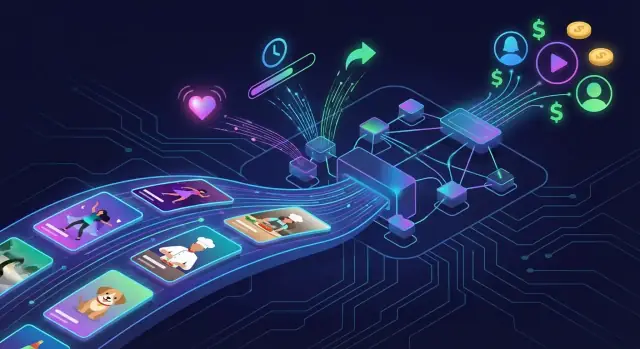

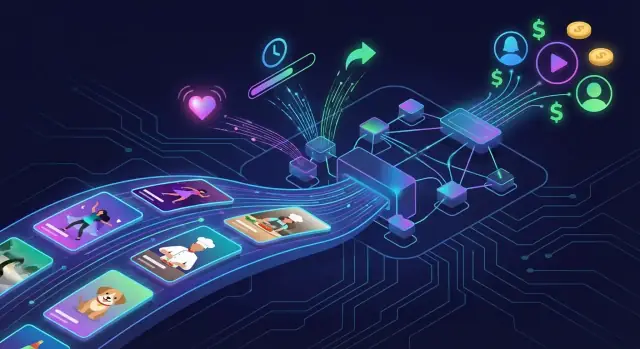

An attention engine is a system designed to do two things at once: keep viewers watching and keep creators posting. For ByteDance products like TikTok and Douyin, the “engine” isn’t just the algorithm that picks your next video—it’s the combination of recommendations, creator rewards, and product design that continuously supplies content people want to watch.

If a traditional social network is built around “who you follow,” ByteDance’s model is built around “what holds your attention.” The app learns what you’ll enjoy quickly, then serves more of it—while also giving creators reasons to publish frequently and improve their videos.

This is not a full history of ByteDance as a company. It focuses on the mechanics most people experience: how the feed decides what to show next, and how creator incentives keep new videos coming.

It’s also a high-level explanation. There are no proprietary details, internal metrics, or secret formulas here—just practical concepts that help you understand the loop.

Recommendations create fast feedback: when a creator posts, the system can test the video with small audiences and scale it if people watch, rewatch, or share.

Incentives (money, visibility, tools, status) make creators respond to that feedback. Creators learn what performs, adjust, and post again.

Together, those forces form a self-reinforcing cycle: better targeting keeps viewers engaged, and creator motivation keeps the content supply fresh, which gives the recommender even more data to learn from.

Most social networks began with a simple promise: see what your friends (or followed accounts) posted. That’s a social-graph feed—your connections determine your content.

ByteDance popularized a different default: an interest graph. Instead of asking “Who do you know?”, it asks “What do you seem to enjoy right now?” The feed is built around patterns in behavior, not relationships.

In a social-graph feed, discovery is often slow. New creators typically need followers before they can reach people, and users need time to curate who they follow.

In an interest-graph feed, the system can recommend content from anyone, immediately, if it predicts it will satisfy you. This makes the platform feel “alive” even when you’re brand new.

The key product choice is the default landing experience: you open the app and the feed starts.

A “For You”-style page doesn’t wait for you to build a network. It learns from quick signals—what you watch, skip, rewatch, or share—and uses those to assemble a personalized stream within minutes.

Short videos enable fast sampling. You can evaluate a piece of content in seconds, which produces more feedback per minute than long-form media.

More feedback means faster learning: the system can test many topics and styles, then double down on what holds your attention.

Small design choices, such as autoplay and swipe-to-advance navigation, accelerate the interest graph.

Together, these mechanics turn every session into rapid preference discovery—less about who you follow, more about what you can’t stop watching.

A ByteDance-style feed doesn’t “understand” videos the way people do. It learns from signals: small traces of what you did (or didn’t do) after seeing a piece of content. Over millions of sessions, those signals become a practical map of what keeps different viewers engaged.

The most useful signals are often implicit, meaning what you do naturally without pressing any buttons: watch time, completion rate, rewatches, skips, and pauses.

Explicit signals are the actions you choose deliberately: likes, comments, shares, and follows.

A key idea: watching is a “vote,” even if you never tap like. That’s why creators obsess over the first second and pacing—because the system can measure attention very precisely.

Not all feedback is positive. The feed also pays attention to signals that suggest a mismatch, such as quick swipes away or tapping “Not interested.”

Separate from preference are safety and policy filters. Content can be limited or excluded based on rules (for example, misinformation, harmful challenges, or age-sensitive material), even if some users would watch it.

Signals aren’t one-size-fits-all. Their importance can vary by region (local norms and regulations), content type (music clips vs. educational explainers), and user context (time of day, network conditions, whether you’re a new viewer, and what you’ve recently watched). The system is constantly adjusting which signals it trusts most for this person, right now.
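To make the signal categories concrete, here is a minimal Python sketch that turns one hypothetical view event into implicit, explicit, and negative features. The `ViewEvent` fields, the `derive_signals` helper, and the one-second fast-skip cutoff are assumptions for illustration, not ByteDance’s actual schema.

```python
from dataclasses import dataclass

# Assumed cutoff: leaving in under one second counts as a fast swipe-away.
FAST_SKIP_SECONDS = 1.0


@dataclass
class ViewEvent:
    """One hypothetical view of one clip by one viewer (illustrative fields)."""
    video_seconds: float      # length of the clip
    watched_seconds: float    # total time on screen, including loops
    liked: bool = False
    shared: bool = False
    commented: bool = False
    followed: bool = False
    not_interested: bool = False


def derive_signals(e: ViewEvent) -> dict[str, float]:
    """Turn a raw view into implicit, explicit, and negative features."""
    full_plays = int(e.watched_seconds // e.video_seconds)
    return {
        # Implicit: what the viewer did without pressing anything.
        "completion": min(e.watched_seconds / e.video_seconds, 1.0),
        "rewatches": float(max(full_plays - 1, 0)),
        # Explicit: deliberate taps.
        "explicit_actions": float(sum([e.liked, e.shared, e.commented, e.followed])),
        # Negative: signs of a mismatch.
        "fast_skip": float(e.watched_seconds < FAST_SKIP_SECONDS),
        "not_interested": float(e.not_interested),
    }
```

For example, `derive_signals(ViewEvent(video_seconds=10, watched_seconds=25, liked=True))` reports full completion, one rewatch, and one explicit action, even though the viewer tapped only once. Most of the information comes from watching, not tapping.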

A short-video feed feels like it’s improvising in real time, but it typically follows a simple loop: find a set of possible videos and then pick the best one for you right now.

First, the system builds a shortlist of videos you could like. This isn’t yet a precise choice—it’s a fast sweep to gather options.

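Candidates might come from several pools, for example topics you have recently engaged with, creators you follow, and trending sounds or formats.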

The goal is speed and variety: produce options quickly without overfitting too early.

Next, ranking scores those candidates and decides what to show next. Think of it as sorting the shortlist by “most likely to keep you engaged” based on signals like watch time, rewatches, skips, likes, comments, and shares.
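The two steps can be sketched as a retrieve-then-rank function. In this minimal Python version, the candidate pool names, the `predicted_score` callable, and the `is_eligible` policy check are hypothetical stand-ins; a real system would use learned models and far larger pools.

```python
from typing import Callable


def rank_feed(
    sources: dict[str, list[str]],
    predicted_score: Callable[[str], float],
    is_eligible: Callable[[str], bool],
) -> list[str]:
    """Merge candidate pools, drop ineligible videos, and sort by predicted engagement."""
    # Step 1: candidate generation. Merge every pool, keeping the first
    # occurrence of each video so duplicates across pools are removed.
    seen: set[str] = set()
    candidates: list[str] = []
    for pool in sources.values():
        for video_id in pool:
            if video_id not in seen:
                seen.add(video_id)
                candidates.append(video_id)

    # Policy and safety filters decide eligibility before any ranking happens.
    eligible = [v for v in candidates if is_eligible(v)]

    # Step 2: ranking. Highest predicted engagement first.
    return sorted(eligible, key=predicted_score, reverse=True)


# Usage with made-up scores; "d" stands in for a video that fails a policy check.
scores = {"a": 0.2, "b": 0.9, "c": 0.5, "d": 0.7}
feed = rank_feed(
    {"recent_topics": ["a", "b"], "following": ["b", "c"], "trending": ["d"]},
    predicted_score=lambda v: scores[v],
    is_eligible=lambda v: v != "d",
)
# feed == ["b", "c", "a"]
```

Filtering before ranking means an ineligible video never competes for a slot, no matter how engaging the model predicts it would be.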

To avoid getting stuck showing only “safe” content, feeds also explore. A new or unfamiliar video may be shown to a small group first. If that group watches longer than expected (or interacts), the system widens distribution; if not, it slows down. This is how unknown creators can break through quickly.
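The widen-or-slow-down pattern resembles a staged rollout. This Python sketch assumes hypothetical pool sizes and a fixed expected completion rate; the `observe_completion` callable stands in for measuring how a test batch actually watched. Real thresholds are not public and likely vary by topic and format.

```python
from typing import Callable

# Hypothetical audience sizes for each test stage.
POOL_SIZES = (200, 2_000, 20_000, 200_000)


def staged_rollout(
    observe_completion: Callable[[int], float],
    expected_completion: float = 0.4,
    pool_sizes: tuple[int, ...] = POOL_SIZES,
) -> int:
    """Return the largest test pool a new video reached before stalling."""
    reached = 0
    for size in pool_sizes:
        reached = size                      # the video is shown to this batch
        if observe_completion(size) <= expected_completion:
            break                           # not beating expectations: stop widening
    return reached
```

A clip that beats the bar in the first two stages but not the third stops at 20,000 viewers: `staged_rollout(lambda n: 0.6 if n < 20_000 else 0.35)` returns `20000`.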

Because you give feedback every swipe, your profile can shift in minutes. Watch three cooking clips to the end and you’ll likely see more; start skipping them and the feed pivots just as quickly.
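One simple way to get a profile that shifts within minutes is an exponential moving average per topic. This is a minimal sketch, not TikTok/Douyin’s method; the `alpha` of 0.3 and the neutral starting score of 0.5 are assumptions. A larger `alpha` makes the feed pivot faster after a few swipes.

```python
def update_affinity(
    affinity: dict[str, float],
    topic: str,
    completion: float,
    alpha: float = 0.3,
) -> None:
    """Nudge a topic's score toward the latest completion rate (exponential moving average)."""
    previous = affinity.get(topic, 0.5)  # assumed neutral starting point
    affinity[topic] = (1 - alpha) * previous + alpha * completion


profile: dict[str, float] = {}
for _ in range(3):                       # three cooking clips watched to the end
    update_affinity(profile, "cooking", 1.0)
after_watching = profile["cooking"]      # rises from 0.5 toward 1.0

for _ in range(3):                       # then three quick skips
    update_affinity(profile, "cooking", 0.0)
after_skipping = profile["cooking"]      # falls back below the neutral 0.5
```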

The best feeds mix “more of what worked” with “something new.” Too familiar gets boring; too novel feels irrelevant. The feed’s job is to keep that balance—one next video at a time.
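Balancing “more of what worked” with “something new” is a classic explore/exploit trade-off. Epsilon-greedy is one textbook way to express it: most slots go to the top-ranked video, and a small share go to something unfamiliar. The 10% rate and the `pick_next` helper below are illustrative assumptions, not ByteDance’s policy.

```python
import random
from typing import Optional


def pick_next(
    ranked: list[str],
    unexplored: list[str],
    epsilon: float = 0.1,
    rng: Optional[random.Random] = None,
) -> str:
    """Usually exploit the best-ranked video; occasionally explore a new one."""
    if rng is None:
        rng = random.Random()
    if unexplored and rng.random() < epsilon:
        return rng.choice(unexplored)   # explore: something new
    return ranked[0]                    # exploit: more of what worked
```

Raising `epsilon` makes the feed feel more novel but less reliably relevant; lowering it does the opposite, which is exactly the balance the paragraph above describes.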

Cold start is the “blank slate” problem: the system has to make good recommendations before it has enough history to know what a person likes—or whether a brand-new video is any good.

With a new user, the feed can’t rely on past watch time, skips, or replays. So it starts with a few strong guesses based on lightweight signals, such as region and broadly popular content.

The goal isn’t to be perfect on the first swipe—it’s to gather clean feedback quickly (what you watch through vs. skip) without overwhelming you.
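One common way to avoid overreacting to a handful of early swipes is to shrink a new user’s sparse personal estimate toward a prior, such as how well a topic does with viewers overall. This pseudo-count smoothing (a Bayesian average) is a swapped-in illustration, not a documented ByteDance technique; `k` and the `blended_completion` name are assumptions.

```python
def blended_completion(prior: float, completions: float, views: int, k: float = 5.0) -> float:
    """Blend a topic's overall completion rate with one user's sparse history.

    With zero views this returns the prior; as views grow, the user's own
    rate dominates. k acts like k "imaginary" views at the prior rate.
    """
    return (completions + k * prior) / (views + k)
```

A brand-new user who finished 2 of 2 cooking clips gets `blended_completion(0.3, 2, 2)`, which is (2 + 1.5) / 7 = 0.5 rather than a perfect 1.0. The estimate moves toward the user’s real taste as clean feedback accumulates.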

A new upload has no performance history, and a new creator may have no followers. Systems like TikTok/Douyin can still make them break out because distribution is not limited to the follower graph.

Instead, a video may be tested in a small batch of viewers who are likely to enjoy that topic or format. If those viewers watch longer, rewatch, share, or comment, the system expands the test to larger pools.

This is why “going viral without followers” is possible: the algorithm is evaluating the video’s early response, not just the creator’s existing audience.

Cold start has a risk: pushing unknown content too widely. Platforms counter this by detecting issues early—spammy behavior, reuploads, misleading captions, or policy violations—while also looking for positive quality cues (clear visuals, coherent audio, strong completion rates). The system tries to learn fast, but it also tries to fail safely.

Short video creates unusually tight feedback loops. In a single session, a viewer might see dozens of clips, each with an immediate outcome: watch, swipe, rewatch, like, share, follow, or stop the session. That means the system collects many more training examples per minute than formats where one decision (start a 30-minute episode) dominates the whole experience.
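A rough back-of-the-envelope comparison shows the scale of the difference. Assuming, purely for illustration, an average of about 20 seconds per clip, a 30-minute session yields

$$
\frac{30 \times 60\ \text{s}}{20\ \text{s per clip}} = 90 \text{ watch-or-swipe decisions,}
$$

compared with roughly one start-or-skip decision for a single 30-minute episode.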

Each swipe is a small vote. Even without knowing any secret formula, it’s reasonable to say that more frequent decisions give a recommender more chances to test hypotheses, such as whether you prefer one topic, style, or pacing over another.

Because these signals arrive quickly, the ranking model can update its expectations sooner—improving accuracy over time through repeated exposure and correction.

Performance usually isn’t judged by one viral spike. Teams tend to track cohorts (groups of users who started on the same day/week, or who share a characteristic) and study retention curves (how many return on day 1, day 7, etc.).

That matters because short-video feeds can inflate “wins” that don’t last. A clip that triggers a lot of quick taps might boost short-term watch time, but if it increases fatigue, the cohort’s retention curve can bend downward later. Measuring cohorts helps separate “this worked today” from “this keeps people coming back.”
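Cohort retention is straightforward to compute from first-seen dates and activity logs. This Python sketch assumes one common definition, where a user counts toward day-N retention if they were active exactly N days after their first day; the input shapes and the `retention_by_cohort` name are hypothetical.

```python
from collections import defaultdict
from datetime import date, timedelta


def retention_by_cohort(
    first_seen: dict[str, date],
    active_days: dict[str, set[date]],
    days: tuple[int, ...] = (1, 7),
) -> dict[date, dict[int, float]]:
    """Share of each start-date cohort that came back on day N."""
    cohorts: dict[date, list[str]] = defaultdict(list)
    for user, start in first_seen.items():
        cohorts[start].append(user)

    curves: dict[date, dict[int, float]] = {}
    for start, users in cohorts.items():
        curves[start] = {}
        for n in days:
            target = start + timedelta(days=n)
            returned = sum(1 for u in users if target in active_days.get(u, set()))
            curves[start][n] = returned / len(users)
    return curves
```

Comparing day-7 numbers across cohorts, rather than one day’s total watch time, is what reveals a change that boosted short-term engagement but bent the curve downward later.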

Over time, tight loops can make ranking more personalized: more data, quicker tests, quicker corrections. The exact mechanics differ by product, but the general effect is simple: short video compresses the learn-and-adjust cycle into minutes, not days.

Creators don’t show up just because an app has users—they show up because the platform makes a clear promise: post the right thing in the right way, and you’ll get rewarded.

Most creators are juggling a mix of goals: income, visibility, audience growth, and status.

ByteDance-style feeds reward outcomes that make the system run better: frequent posting, strong early retention, and videos that keep viewers coming back.

These goals shape incentive design: distribution boosts for strong early performance, features that increase output (templates, effects), and monetization pathways that keep creators invested.

When distribution is the prize, creators adapt quickly: they sharpen the first second, tighten pacing, and jump on trending sounds and formats.

Incentives can create tension: volume can crowd out craft, and attention-grabbing hooks can slide into clickbait.

That’s why “what gets rewarded” matters: it quietly defines the creative culture of the platform—and the content viewers end up seeing.

Creator incentives aren’t just “pay people to post.” The most effective systems mix cash rewards, predictable distribution mechanics, and production tools that reduce the time from idea to upload. Together, they make creating feel both possible and worth repeating.

Across major platforms, the monetary layer typically shows up in a few recognizable forms: revenue share, bonuses, and tips.

Each option signals what the platform values. Revenue share pushes scale and consistency; bonuses can steer creators toward new formats; tips reward community-building and “appointment viewing.”

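Distribution is often the strongest motivator because it arrives fast: a breakout post can change a creator’s week. Platforms encourage production by offering more chances at distribution, such as boosts for strong early performance and cues about which formats and trends are in demand.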

Importantly, distribution incentives work best when creators can predict the path: “If I publish consistently and follow format cues, I’ll get more chances.”

Editing, effects, templates, captions, music libraries, and built-in post scheduling reduce friction. So do creator education programs—short tutorials, best-practice dashboards, and reusable templates that teach pacing, hooks, and series formats.

These tools don’t pay creators directly, but they increase output by making good content easier to produce repeatedly.

ByteDance’s biggest edge isn’t “the algorithm” or “creator payouts” on their own—it’s how the two lock together into a self-reinforcing cycle.

When incentives rise (money, easier growth, creator tools), more people post more often. More posting creates more variety: different niches, formats, and styles.

That variety gives the recommendation system more options to test and match. Better matching leads to more watch time, higher session length, and more returning users. A larger, more engaged audience then makes the platform feel even more rewarding for creators—so more creators join, and the loop continues.

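You can think of it like this: stronger incentives → more posts → more variety → better matching → more engaged viewers → more creators joining → stronger incentives.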

On a follower-first network, growth often feels gated: you need an audience to get views, and you need views to get an audience. ByteDance-style feeds break that stalemate.

Because distribution is algorithmic, a creator can post from zero and still get meaningful exposure if the video performs well with a small test group. That “any post can pop” feeling makes incentives more believable—even when only a small percentage actually break out.

Templates, trending sounds, duets/stitches, and remix culture reduce the effort needed to produce something that fits current demand. For creators, it’s faster to ship. For the system, it’s easier to compare performance across similar formats and learn what works.

When the rewards feel close, people optimize hard. That can mean repost farms, repetitive trend-chasing, misleading hooks, or content that’s “made for the algorithm” rather than for viewers. Over time, saturation increases competition and can push creators toward more extreme tactics just to keep distribution.

Keeping people in the feed is often described as a “watch time” game, but watch time alone is a blunt instrument. If a platform only maximizes minutes, it can drift into spammy repetition, extreme content, or addictive loops that users later regret—leading to churn, bad press, and regulatory pressure.

ByteDance-style systems typically optimize a bundle of goals: predicted enjoyment, “would you recommend this?”, completion rate, replays, skips, follows, and negative signals like fast swipes away. The aim is not just more viewing, but better viewing—sessions that feel worth it.
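Optimizing a bundle of goals is often implemented as a weighted combination of several predicted outcomes. In this Python sketch, the objective names and weights are invented for illustration; the point is only that negative predictions subtract from the score, so a clip that holds attention but tends to trigger “Not interested” can still lose.

```python
# Hypothetical objectives and weights; real ones are proprietary and tuned.
WEIGHTS = {
    "p_complete": 1.0,
    "p_rewatch": 0.5,
    "p_share": 2.0,
    "p_follow": 3.0,
    "p_fast_skip": -1.5,
    "p_not_interested": -4.0,
}


def combined_score(predictions: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted sum of predicted outcomes; missing predictions count as zero."""
    return sum(w * predictions.get(name, 0.0) for name, w in weights.items())


# A clip people finish but often reject, versus a solid clip people share.
clickbait = {"p_complete": 0.9, "p_not_interested": 0.3}
solid = {"p_complete": 0.6, "p_share": 0.1, "p_follow": 0.05}
```

Here `combined_score(solid)` (about 0.95) beats `combined_score(clickbait)` (about -0.3), even though the clickbait clip has the higher completion rate.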

Safety and policy constraints also shape what is eligible to rank.

Burnout often shows up as repetition: the same sound, the same joke structure, the same creator archetype. Even if those items perform well, too much sameness can make the feed feel synthetic.

To avoid that, feeds inject diversity in small ways: rotating topics, mixing familiar creators with new ones, and limiting how often near-duplicate formats appear. Variety protects long-term retention because it keeps curiosity alive.
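A simple form of limiting near-duplicate formats is a re-ranking pass that caps repeats within the next page of the feed. The `format_key` (for example, a shared sound or template) and the cap values below are assumptions for illustration.

```python
from collections import Counter


def diversify(
    ranked: list[tuple[str, str]],
    max_per_format: int = 2,
    page_size: int = 10,
) -> list[str]:
    """Re-rank so no format fills more than max_per_format slots on the next page.

    ranked holds (video_id, format_key) pairs, best first. Videos that would
    exceed the cap are pushed after the page rather than dropped.
    """
    page: list[str] = []
    deferred: list[str] = []
    counts = Counter()
    for video_id, format_key in ranked:
        if len(page) < page_size and counts[format_key] < max_per_format:
            page.append(video_id)
            counts[format_key] += 1
        else:
            deferred.append(video_id)
    return page + deferred
```

Deferring instead of dropping keeps strong videos available for later, while the immediate page stays varied.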

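“Keep watching” has to be balanced with guardrails: policy and safety filters, limits on near-duplicate content, and controls that let users correct the feed.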

These guardrails aren’t just ethical; they prevent a feed from training itself toward the most inflammatory content.

Many of the visible safety and quality tools are feedback mechanisms: Not interested, topic controls, reporting, and sometimes a reset feed option. They give users a way to correct the system when it overfits, and they help recommendations stay engaging without feeling like a trap.

For creators on TikTok/Douyin-style feeds, the “rules” aren’t written in a handbook—they’re discovered through repetition. The platform’s distribution model turns every post into a small experiment, and the results show up quickly.

Most creators settle into a tight cycle: post, see how the video performs, adjust, and post again.

Because distribution can expand (or stall) within hours, analytics become a creative tool, not just a report card. Retention graphs, average watch time, and saves/shares point to specific moments: a confusing setup, a slow transition, a payoff that arrives too late.
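Finding that specific moment in a retention graph can be as simple as looking for the steepest one-second drop. This sketch assumes the analytics export is a list where index `t` holds the share of viewers still watching at second `t`; the format and the `steepest_drop` helper are hypothetical.

```python
def steepest_drop(retention: list[float]) -> tuple[int, float]:
    """Return (second, drop) for the largest one-second fall in viewers.

    retention[t] is the fraction of viewers still watching at second t.
    """
    if len(retention) < 2:
        raise ValueError("need at least two data points")
    drops = [(t, retention[t - 1] - retention[t]) for t in range(1, len(retention))]
    return max(drops, key=lambda pair: pair[1])


# Hypothetical curve: a normal early dip, then a sharp loss at second 4.
second, drop = steepest_drop([1.0, 0.8, 0.75, 0.72, 0.45, 0.43])
```

Here the largest loss is at second 4, so that is the moment worth rewatching for a confusing setup or slow transition.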

This short learning cycle pushes creators to hook viewers earlier, cut slow transitions, and deliver the payoff sooner.

The same rapid feedback that helps creators improve can also pressure them into constant output. Sustainable creators often batch filming, reuse proven formats, set “shipping” days, and keep a realistic cadence. The goal is consistency without turning every hour into production—because long-term relevance depends on energy, not just frequency.

ByteDance’s biggest unlock wasn’t a “social network” feature set—it was an interest graph that learns from behavior, paired with high-frequency feedback (every swipe, replay, pause), and aligned incentives that nudge creators toward formats the system can reliably distribute.

The good news: these mechanics can help people find genuinely useful entertainment or information quickly. The risk: the same loop can over-optimize for short-term attention at the expense of wellbeing and diversity.

First, build around interests, not just follows. If your product can infer what a user wants right now, it can reduce friction and make discovery feel effortless.

Second, shorten the learning cycle. Faster feedback lets you improve relevance quickly—but it also means mistakes scale quickly. Put guardrails in place before you scale.

Third, align incentives. If you reward creators (or suppliers) for the same outcomes your ranking system values, the ecosystem will converge—sometimes in great ways, sometimes toward spammy patterns.

If you’re applying these ideas to your own product, the hardest part is rarely the theory—it’s shipping a working loop where events, ranking logic, experiments, and creator/user incentives can be iterated quickly.

One approach is to prototype the product end-to-end in a tight feedback cycle (UI, backend, database, and analytics hooks), then refine the recommendation and incentive mechanics as you learn. Platforms like Koder.ai are built for that style of iteration: you can create web, backend, and mobile app foundations through chat, export source code when needed, and use planning/snapshots to test changes and roll back quickly—useful when you’re experimenting with engagement loops and don’t want long release cycles to slow learning.

If you’re mapping these ideas to your own product, browse more breakdowns in /blog. If you’re evaluating tools, analytics, or experimentation support, compare approaches and costs on /pricing.

A healthier attention engine can still be highly effective: it helps people find what they value faster. The goal is to earn attention through relevance and trust—while designing intentionally to reduce manipulation, fatigue, and unwanted rabbit holes.

An attention engine is the combined system that (1) personalizes what viewers see next and (2) motivates creators to keep publishing. In TikTok/Douyin’s case, it’s not only ranking models—it also includes product UX (autoplay, swipe), distribution mechanics, and creator rewards that keep the content loop running.

A social graph feed is primarily driven by who you follow, so discovery is gated by your network.

An interest graph feed is driven by what you appear to enjoy, so it can recommend content from anyone immediately. That’s why a new user can open the app and get a compelling feed without building a follow list first.

It learns from implicit signals (watch time, completion rate, rewatches, skips, pauses) and explicit signals (likes, comments, shares, follows). Watching itself is a strong “vote,” which is why retention and pacing matter so much.

It also uses negative signals (quick swipes away, “Not interested”) and applies policy/safety filters that can limit distribution regardless of engagement.

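A simplified loop looks like this: gather a shortlist of candidate videos, rank them by predicted engagement, test some unfamiliar videos on small audiences, and learn from every swipe.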

Because each swipe creates feedback, personalization can shift within minutes.

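Cold start is the problem of making good recommendations with little history. For a new user, the feed starts with broad guesses and learns from the first swipes; for a new video, it tests the clip on a small audience and expands distribution only if early viewers respond well.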

Safety and spam checks help limit how far unknown content spreads before trust is established.

Because content isn’t limited to a follower graph, a new creator can still be tested in the feed. What matters is how the video performs with early viewers—especially retention signals like completion and rewatches.

Practically, this means “going viral without followers” is possible, but not guaranteed: most posts won’t expand beyond small tests unless early performance is unusually strong.

Creators respond to what gets rewarded: they post more often, follow format and trend cues, and use analytics to improve retention.

The upside is rapid learning; the downside can be trend-chasing, clickbait hooks, or volume over craft if incentives skew that way.

Short video produces many “micro-decisions” per session (watch, skip, rewatch, share), creating more training examples per minute than long-form.

That tighter loop helps the system test, learn, and adjust faster—but it also means mistakes (like over-rewarding repetitive formats) can scale quickly if not constrained.

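Platforms try to balance engagement with long-term satisfaction by optimizing a bundle of signals rather than raw watch time, injecting variety into the feed, and applying safety and policy guardrails.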

From a user perspective, you can usually steer the feed with tools like Not interested, topic controls, reporting, and sometimes a reset feed option.

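Start with a clear definition of success beyond raw watch time. Then make sure your system design is aligned with it: build around interests, shorten the feedback loop with guardrails in place, and reward creators for the same outcomes your ranking values.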
