Dec 19, 2025·6 min

Claude Code for CI failures: prompts for small fixes + tests

Claude Code for CI failures: prompt it to quote the failing output, suggest the smallest fix, and add a regression test to stop repeats.

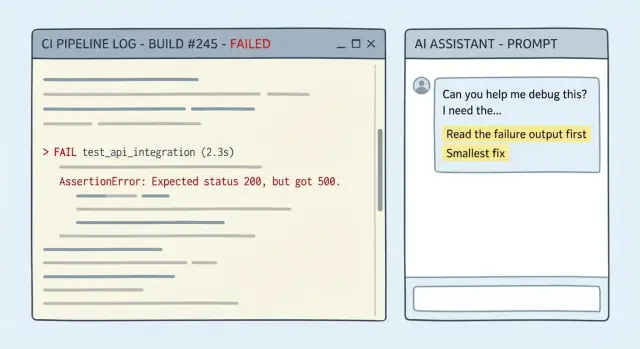

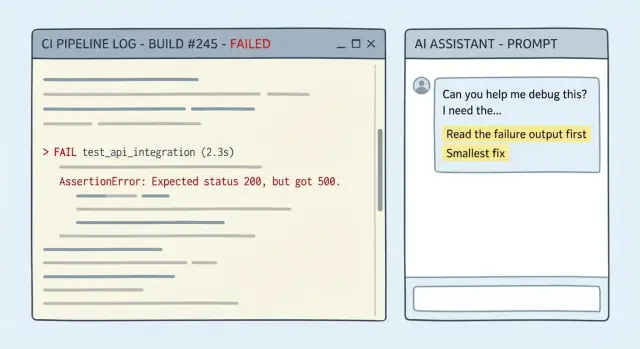

A CI failure usually isn't mysterious. The log tells you where it stopped, what command failed, and the error message. A useful log includes a stack trace, a compiler error with a file and line number, or a test report showing which assertion failed. Sometimes you even get a diff-style clue like "expected X, got Y" or a clear failing step like "lint", "build", or "migrate database".

The real problem is that people (and AI) often treat the log as background noise. If you paste a long log and ask for "a fix", many models jump to a familiar explanation instead of reading the last meaningful lines. The guessing gets worse when the error looks common ("module not found", "timeout", "permission denied"). You end up with a big rewrite, a new dependency, or a "try updating everything" answer that doesn't match the actual failure.

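The goal isn't "make it pass somehow". It's simpler: quote the failing output, make the smallest fix that addresses it, and add a regression test so the failure doesn't repeat.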

In practice, the "smallest fix" is usually one of these: a few-line code change in one place, a missing import or wrong path, a config value that's clearly wrong for the CI environment, or reverting an accidental breaking change instead of redesigning the code.

A follow-up test matters, too. Passing CI once isn't the same as preventing repeats. If the failure came from an edge case (null input, timezone, rounding, permissions), add a regression test that fails before the fix and passes after. That turns a one-time rescue into a guardrail.

Most bad fixes start with missing context. If you only paste the last red line, the model has to guess what happened earlier, and guesses often turn into rewrites.

Aim to provide enough detail that someone can follow the failure from the first real error to the end, then change as little as possible.

Copy these into your message (verbatim when you can):

- The exact command CI ran (for example, go test ./..., npm test, flutter test, or golangci-lint run).

Add constraints in plain words. If you want a tiny fix, say so: no refactors, no behavior changes unless necessary, keep the patch limited to the failing area.

A simple example: CI fails on a lint step after a dependency bump. Paste the lint output starting from the first warning, include the command CI used, and mention the single package version change. That's enough to suggest a one-line config tweak or a small code change, instead of reformatting half the repo.

If you want something copy-pasteable, this structure is usually enough:

CI command:

Failing output (full):

Recent changes:

Constraints (smallest fix, no refactor):

Flaky? (runs attached):

When a model misses the mark on a CI break, it's usually because your prompt lets it guess. Your job is to make it show its work using the exact failing output, then commit to the smallest change that could make the job pass.

Require evidence and a tiny plan. A good prompt forces five things:

Uncertainty is fine. Hidden uncertainty is what wastes time.

Paste this at the top of your CI question:

Use ONLY the evidence in the CI output below.

1) Quote the exact failing lines you are using.

2) Give ONE sentence: the most likely cause.

3) Propose the smallest fix: 1-3 edits, with file paths.

4) Do NOT do formatting/renames/refactors or "cleanup".

5) List uncertainties + the one extra detail that would confirm the diagnosis.

If the log says "expected 200, got 500" plus a stack trace into user_service.go:142, this structure pushes the response toward that function and a small guard or error handling change, not a redesign of the endpoint.

The fastest wins come from a prompt that forces quoting the logs, stays inside constraints, and stops when something is missing.

You are helping me fix a CI failure.

Repo context (short):

- Language/framework:

- Test/build command that failed: <PASTE THE EXACT COMMAND>

- CI environment (OS, Node/Go/Python versions, etc.):

Failing output (verbatim, include the first error and 20 lines above it):

<PASTE LOG>

Constraints:

- Propose the smallest possible code change that makes CI pass.

- Do NOT rewrite/refactor unrelated code.

- Do NOT touch files you do not need for the fix.

- If behavior changes, make it explicit and justify why it is correct.

Stop rule (no guessing):

- If the log is incomplete or you need more info (missing stack trace, config, versions, failing test name), STOP and ask only the minimum questions needed.

Your response format (follow exactly):

1) Evidence: Quote the exact log lines that matter.

2) Hypothesis: Explain the most likely cause in 2-4 sentences.

3) Smallest fix: Describe the minimal change and why it addresses the evidence.

4) Patch: Provide a unified diff.

5) Follow-up: Tell me the exact command(s) to rerun locally to confirm.

Then, write ONE regression test (or tweak an existing one) that would fail before this fix and pass after it, to prevent the same failure class.

- Keep the test focused. No broad test suites.

- If a test is not feasible, explain why and propose the next-best guardrail (lint rule, type check, assertion).

Two details that reduce back-and-forth:

The quickest way to lose time is to accept a "cleanup" change set that modifies five things at once. Define "minimal" up front: the smallest diff that makes the failing job pass, with the lowest risk and the fastest way to verify.

A simple rule works well: fix the symptom first, then decide if a broader refactor is worth it. If the log points to one file, one function, one missing import, or one edge case, aim there. Avoid "while we're here" edits.

If you truly need alternatives, ask for two and only two: "safest minimal fix" vs "fastest minimal fix." You want tradeoffs, not a menu.

Also require local verification that matches CI. Ask for the same command the pipeline runs (or the closest equivalent), so you can confirm in minutes:

# run the same unit test target CI runs

make test

# or the exact script used in CI

npm test

If the response suggests a large change, push back with: "Show the smallest patch that fixes the failing assertion, with no unrelated formatting or renames."

A fix without a test is a bet you won't hit the same problem again. Always ask for a follow-up test that fails before the fix and passes after.

Be specific about what "good" looks like:

A useful pattern is to require four things: where to put the test, what to name it, what behavior it should cover, and a short note explaining why it prevents future regressions.

Copy-ready add-on:

Example: CI shows a panic when an API handler receives an empty string ID. Don't ask for "a test for this line." Ask for a test that covers invalid IDs (empty, whitespace, wrong format). The smallest fix might be a guard clause that returns a 400 response. The follow-up test should assert behavior for multiple invalid inputs, so the next time someone refactors parsing, CI fails fast.
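As a rough sketch of that guard clause and the table of invalid inputs, the idea might look like this in Go. The handler name getItem, the id query parameter, and the validID format rule are all assumptions made up for illustration. In a real repo the table would live in a _test.go file and run through t.Run; it is inlined in main here only so the snippet runs on its own.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"regexp"
	"strings"
)

// validID is a hypothetical format rule: 1-32 letters, digits, or dashes.
var validID = regexp.MustCompile(`^[A-Za-z0-9-]{1,32}$`)

// getItem is a hypothetical handler. The guard clause is the whole fix:
// reject bad IDs with a 400 before any code that could panic on them.
func getItem(w http.ResponseWriter, r *http.Request) {
	id := strings.TrimSpace(r.URL.Query().Get("id"))
	if !validID.MatchString(id) {
		http.Error(w, "invalid id", http.StatusBadRequest)
		return
	}
	fmt.Fprintf(w, "item %s", id)
}

// statusFor sends one request through the handler and returns the status code.
func statusFor(rawID string) int {
	req := httptest.NewRequest(http.MethodGet, "/items", nil)
	q := req.URL.Query()
	q.Set("id", rawID)
	req.URL.RawQuery = q.Encode()
	rec := httptest.NewRecorder()
	getItem(rec, req)
	return rec.Code
}

func main() {
	// One table covers the whole failure class, not just the input CI hit.
	cases := []struct {
		name, id string
		want     int
	}{
		{"empty", "", http.StatusBadRequest},
		{"whitespace", "   ", http.StatusBadRequest},
		{"wrong format", "abc/../123", http.StatusBadRequest},
		{"valid", "inv-42", http.StatusOK},
	}
	for _, c := range cases {
		fmt.Printf("%-12s got=%d want=%d\n", c.name, statusFor(c.id), c.want)
	}
}
```

Keeping one valid case in the table guards against a fix that simply rejects everything.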

If your project already has test conventions, spell them out. If you don't, ask it to mirror nearby tests in the same package or folder, and keep the new test minimal and readable.

Paste the CI log section that includes the error and 20-40 lines above it. Also paste the exact failing command CI ran and key environment details (OS, runtime versions, important flags).
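If trimming logs by hand gets tedious, a small helper can cut the window for you. This is a minimal sketch, not a standard tool: the function name firstErrorWindow and the error pattern are assumptions, and the pattern in particular should be adjusted to whatever your CI tools actually print.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// errorLine matches a few common failure markers (an assumed pattern).
var errorLine = regexp.MustCompile(`(?i)(--- FAIL|\berror\b|panic:|fatal)`)

// firstErrorWindow returns the first matching line plus up to `before`
// lines above it and `after` lines below it.
func firstErrorWindow(log string, before, after int) string {
	lines := strings.Split(log, "\n")
	for i, line := range lines {
		if !errorLine.MatchString(line) {
			continue
		}
		start := i - before
		if start < 0 {
			start = 0
		}
		end := i + after + 1
		if end > len(lines) {
			end = len(lines)
		}
		return strings.Join(lines[start:end], "\n")
	}
	// No recognizable error: paste the whole failing step instead.
	return ""
}

func main() {
	log := strings.Join([]string{
		"step: setup",
		"ok  app/util 0.02s",
		"--- FAIL: TestCreateInvoice_DueDate (0.01s)",
		"    invoice_test.go:48: expected 201, got 400",
		"FAIL",
		"exit status 1",
	}, "\n")
	// For a real log, use something like 30 lines before and 20 after.
	fmt.Println(firstErrorWindow(log, 1, 2))
}
```

The helper anchors on the first match rather than the last, because later errors are often just fallout from the first one.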

Then ask it to restate what failed in plain English and point to the line(s) in the output that prove it. If it can't quote the log, it hasn't really read it.

Ask for the smallest possible code change that makes the failing command pass. Push back on refactors. Before you apply anything, have it list:

Apply the patch and re-run the exact failing command locally (or in the same CI job if that's your only option). If it still fails, paste only the new failing output and repeat. Keeping context small helps keep the response focused.

Once green, add one follow-up test that would have failed before the patch and now passes. Keep it targeted: one test, one reason.

Re-run the command again with the new test included to confirm you didn't just silence the error.

Ask for a short commit message and a PR description that includes what failed, what changed, how you verified it, and what test prevents a repeat. Reviewers move faster when the reasoning is spelled out.

A common failure: everything worked locally, then a small change makes tests fail on the CI runner. Here's a simple one from a Go API where a handler started accepting a date-only value (2026-01-09) but the code still parses only full RFC3339 timestamps.

This is the kind of snippet to paste (keep it short, but include the error line):

--- FAIL: TestCreateInvoice_DueDate (0.01s)

invoice_test.go:48: expected 201, got 400

invoice_test.go:49: response: {"error":"invalid due_date: parsing time \"2026-01-09\" as \"2006-01-02T15:04:05Z07:00\": cannot parse \"\" as \"T\""}

FAIL

exit status 1

FAIL app/api 0.243s

Now use a prompt that forces evidence, a minimal fix, and a test:

You are fixing a CI failure. You MUST use the log to justify every claim.

Context:

- Language: Go

- Failing test: TestCreateInvoice_DueDate

- Log snippet:

<PASTE LOG>

Task:

1) Quote the exact failing line(s) from the log and explain the root cause in 1-2 sentences.

2) Propose the smallest possible code change (one function, one file) to accept both RFC3339 and YYYY-MM-DD.

3) Show the exact patch.

4) Add one regression test that fails before the fix and passes after.

Return your answer with headings: Evidence, Minimal Fix, Patch, Regression Test.

A good response will point to the parsing layout mismatch, then make a small change in one function (for example, parseDueDate in invoice.go) to try RFC3339 first and fall back to 2006-01-02. No refactor, no new packages.

The regression test is the guardrail: send due_date: "2026-01-09" and expect 201. If someone later "cleans up" parsing and removes the fallback, CI breaks immediately with the same failure class.
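Here is a minimal sketch of what such a fix and its guardrail could look like. The function name parseDueDate comes from the example above, but the body is an assumption about how the real code might be shaped. The check runs at the function level for brevity; a handler-level test would post due_date: "2026-01-09" and assert a 201.

```go
package main

import (
	"fmt"
	"time"
)

// parseDueDate accepts a full RFC3339 timestamp or a date-only value.
func parseDueDate(s string) (time.Time, error) {
	// Try the original layout first so existing callers see no change.
	if t, err := time.Parse(time.RFC3339, s); err == nil {
		return t, nil
	}
	// Fall back to YYYY-MM-DD; with no zone in the input, time.Parse
	// returns midnight UTC.
	t, err := time.Parse("2006-01-02", s)
	if err != nil {
		return time.Time{}, fmt.Errorf("invalid due_date %q: want RFC3339 or YYYY-MM-DD", s)
	}
	return t, nil
}

func main() {
	// The first input is the one from the CI log; the last must still fail.
	for _, in := range []string{"2026-01-09", "2026-01-09T10:30:00Z", "09/01/2026"} {
		t, err := parseDueDate(in)
		fmt.Println(in, "->", t, err)
	}
}
```

Trying RFC3339 first keeps the change additive: inputs that parsed before the patch parse the same way after it.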

The fastest way to lose an hour is to give a cropped view of the problem. CI logs are noisy, but the useful part is often 20 lines above the final error.

One trap is pasting only the last red line (for example, "exit 1") while hiding the real cause earlier (a missing env var, a failing snapshot, or the first test that crashed). Fix: include the failing command plus the log window where the first real error appears.

Another time sink is letting the model "tidy up" along the way. Extra formatting, dependency bumps, or refactors make it harder to review and easier to break something else. Fix: lock the scope to the smallest possible code change and reject anything unrelated.

A few patterns to watch for:

If you suspect flakiness, don't paper over it with retries. Remove the randomness (fixed time, seeded RNG, isolated temp dirs) so the signal is clear.

Before you push, do a short sanity pass. The goal is to make sure the change is real, minimal, and repeatable, not a lucky run.

Finally, run a slightly wider set than the single failing job (for example, lint plus unit tests). A common trap is a fix that passes the original job but breaks another target.

If you want this to save time week after week, treat your prompt and response format like team process. The goal is repeatable inputs, repeatable outputs, and fewer "mystery fixes" that break something else.

Turn your best prompt into a shared snippet with a standard response structure: (1) evidence, (2) one-line cause, (3) smallest change, (4) follow-up test, (5) how to verify locally. When everyone uses the same format, reviews get faster because reviewers know where to look.
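One possible way to share the snippet is a tiny helper that renders it and refuses to run when the key inputs are missing. This is an illustrative sketch only: the CIReport type, its field names, and BuildPrompt are assumptions, not part of any existing tool.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// CIReport holds the inputs the shared prompt needs (hypothetical type).
type CIReport struct {
	Command       string // exact command CI ran
	FailingOutput string // log window around the first real error
	RecentChanges string // optional: what changed since the last green run
}

// BuildPrompt renders the team prompt, or returns an error when the
// command or the failing output is missing.
func BuildPrompt(r CIReport) (string, error) {
	if strings.TrimSpace(r.Command) == "" || strings.TrimSpace(r.FailingOutput) == "" {
		return "", errors.New("need both the CI command and the failing output")
	}
	changes := r.RecentChanges
	if strings.TrimSpace(changes) == "" {
		changes = "(not provided)"
	}
	var b strings.Builder
	b.WriteString("Use ONLY the evidence in the CI output below.\n\n")
	fmt.Fprintf(&b, "CI command:\n%s\n\n", r.Command)
	fmt.Fprintf(&b, "Failing output (full):\n%s\n\n", r.FailingOutput)
	fmt.Fprintf(&b, "Recent changes:\n%s\n\n", changes)
	b.WriteString("Constraints: smallest fix, no refactor.\n\n")
	b.WriteString("Respond with: (1) evidence, (2) one-line cause, (3) smallest change, ")
	b.WriteString("(4) follow-up test, (5) how to verify locally.\n")
	return b.String(), nil
}

func main() {
	p, err := BuildPrompt(CIReport{
		Command:       "go test ./...",
		FailingOutput: "--- FAIL: TestCreateInvoice_DueDate (0.01s)",
	})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Print(p)
}
```

Failing early on missing inputs enforces the same rule the prompt asks of the model: no guessing without evidence.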

A lightweight habit loop that works in most teams:

If you prefer a chat-first workflow for building and iterating on apps, you can run the same fix-and-test loop inside Koder.ai, use snapshots while experimenting, and export the source code when you're ready to merge it back into your usual repo.

Start with the first real error, not the final exit 1.

Ask it to prove it read the log.

Use a constraint like:

Default to the smallest patch that makes the failing step succeed.

That usually means:

Avoid “cleanup” changes until CI is green again.

Paste enough context to recreate the failure, not just the last red line.

Include:

Yes—state constraints in plain language and repeat them.

Example constraints:

This keeps the response focused and reviewable.

Fix the earliest real failure first.

When in doubt, ask the model to identify the first failing step in the log and stick to that.

Treat flakiness as a signal to remove randomness, not to add retries.

Common stabilizers:

Once it’s deterministic, the “smallest fix” becomes obvious.

Ask for the exact command CI ran, then run that locally.

If local reproduction is hard, ask for a minimal repro inside the repo (a single test or target) that triggers the same error.

Write one focused regression test that fails before the fix and passes after.

Good targets include:

If it’s a lint/build failure, the equivalent “test” may be tightening a lint rule or adding a check that prevents the same mistake.

Use snapshots/rollback to keep experiments reversible.

A practical loop:

If you build in Koder.ai, snapshots help you iterate quickly without mixing experimental edits into the final patch you’ll export.
