Aug 06, 2025·8 min

How to Create a Mobile App for Field Survey Collection

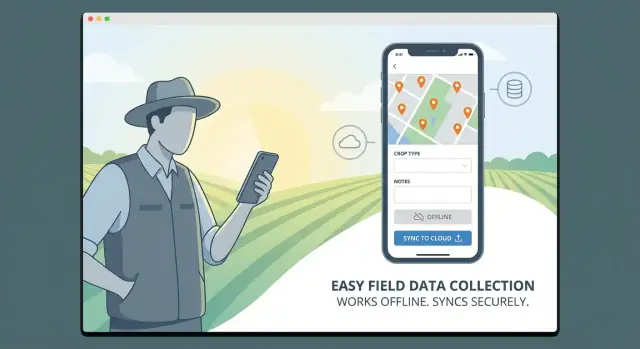

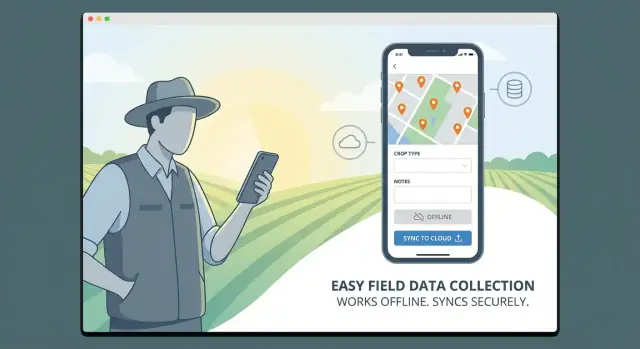

Learn how to plan, design, and build a mobile app for field survey collection: offline forms, GPS, media capture, sync, security, testing, and rollout.


A mobile field survey app isn’t “just a form on a phone.” It’s an end-to-end workflow that helps real people collect evidence, make decisions, and close loops with the office. Before wireframes or feature lists, get clear on what success looks like and who the app is for.

Start by naming the field roles you’re designing around: inspectors, researchers, technicians, auditors, enumerators, or contractors. Each group works differently.

Inspectors may need strict compliance checks and photo proof. Researchers may need flexible notes and sampling. Technicians may need quick issue logging tied to assets. When you’re specific about the user, the rest of the product decisions (form length, media capture, approvals, offline needs) become much easier.

Document what happens after data is collected. Is it used for compliance reports, maintenance prioritization, billing, risk scoring, or regulatory audits? If the data doesn’t drive a decision, it often becomes “nice to have” noise.

A useful exercise: write 3–5 example decisions (“Approve this site,” “Schedule a repair within 48 hours,” “Flag non-compliance”) and note what fields must be present for each.

Decide whether you need one-time surveys (e.g., initial assessments), recurring visits (monthly inspections), audits, or checklist-style tasks. Recurring and audit workflows usually require timestamps, signatures, and traceability, while checklists emphasize speed and consistency.

Pick metrics you can validate early: average completion time, error rate (missing/invalid fields), sync reliability (successful uploads), and rework rate (surveys returned for fixes). These metrics keep your MVP focused and prevent feature creep later.
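Here is a minimal sketch of how these four metrics could be computed from submission records. It assumes a hypothetical `Submission` record with start and finish times, a count of invalid fields, upload attempts, an uploaded flag, and a returned-for-fixes flag. It also assumes that "sync reliability" means successful uploads divided by upload attempts. Adjust both the field names and that definition to your own schema.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Submission:
    # Hypothetical record shape; adapt the fields to your own schema.
    started: datetime
    finished: datetime
    invalid_fields: int       # missing or invalid answers caught at review
    upload_attempts: int      # total sync attempts for this record
    uploaded: bool            # did it eventually reach the server?
    returned_for_fixes: bool  # sent back to the fieldworker for rework


def mvp_metrics(subs: list[Submission]) -> dict[str, float]:
    """Average completion time, error rate, sync reliability, and rework rate."""
    n = len(subs)
    if n == 0:
        return {}
    avg_minutes = sum((s.finished - s.started).total_seconds() for s in subs) / n / 60
    error_rate = sum(1 for s in subs if s.invalid_fields > 0) / n
    attempts = sum(s.upload_attempts for s in subs)
    # Assumption: one successful attempt per uploaded record.
    sync_reliability = sum(1 for s in subs if s.uploaded) / attempts if attempts else 0.0
    rework_rate = sum(1 for s in subs if s.returned_for_fixes) / n
    return {
        "avg_completion_min": avg_minutes,
        "error_rate": error_rate,
        "sync_reliability": sync_reliability,
        "rework_rate": rework_rate,
    }
```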

Before you sketch screens or pick a database, get specific about what the field actually feels like. A survey app that works perfectly in the office can fail quickly when someone is standing in mud, on a roadside, or inside a warehouse.

Start by shadowing a few fieldworkers or running short interviews. Document constraints that directly affect the UI and workflows:

These details should translate into requirements like larger tap targets, autosave, fewer steps per record, and clear progress indicators.

List what the app must use on typical phones/tablets:

Confirm what devices teams already carry and what’s realistic to standardize.

Quantify usage: records per worker per day, peak days, and average attachments per record (photos, audio, documents). This drives offline storage needs, upload time, and how aggressive compression should be.
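The storage side of that estimate can be worked out on the back of an envelope. The sketch below assumes a fixed average attachment size after compression and a small per-record form payload. Both are placeholder values; replace them with measurements from your pilot.

```python
def offline_storage_mb(records_per_day: int,
                       attachments_per_record: float,
                       avg_attachment_mb: float,
                       days_offline: int,
                       form_data_kb: float = 20.0) -> float:
    """Estimate local storage needed if a worker can't sync for `days_offline` days.

    All inputs are assumptions to replace with measured values.
    """
    per_record_mb = attachments_per_record * avg_attachment_mb + form_data_kb / 1024
    return records_per_day * days_offline * per_record_mb
```

Take 30 records a day, four 0.5 MB photos per record, and three days without sync. That works out to roughly 182 MB of local storage. The attachments account for almost all of it, so compression settings matter far more than form size.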

Decide who owns collected data (client, agency, subcontractor), how long it must be retained, and whether deletion must be auditable. These answers shape permissions, export needs, and long-term storage costs.

Good field data starts with good form design—and a data model that won’t break when requirements evolve. Treat these as one problem: every question type you add should map cleanly to how you store, validate, and report the answer later.

Start with a small, consistent set of inputs that cover most surveys:

Keep options stable by assigning each choice an internal ID, not just a label—labels change, IDs shouldn’t.
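A small illustration of the ID-versus-label split follows. Responses store only the stable `id`, and the current label is looked up at display or report time. The option list and IDs are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Choice:
    id: str     # stable internal ID stored in responses; never reuse or rename
    label: str  # display text; safe to reword or translate


# Hypothetical option list for a "condition" question.
CONDITION_CHOICES = [
    Choice("cond_good", "Good"),
    Choice("cond_fair", "Fair"),
    Choice("cond_poor", "Poor / needs repair"),  # label reworded later; ID unchanged
]


def label_for(choice_id: str, choices: list[Choice]) -> str:
    """Resolve a stored choice ID to its current label for display or reports."""
    for c in choices:
        if c.id == choice_id:
            return c.label
    return f"[unknown option {choice_id}]"
```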

Field teams move fast. Conditional logic helps them see only what’s relevant:

Model logic as simple rules (conditions + actions). Store rule definitions with the form version so older submissions remain interpretable.
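One way to model "conditions + actions" is shown in the minimal sketch below. It is not a specific product's rule format. Each rule compares one answer against a value and either shows or requires target questions. Questions not targeted by any rule are assumed to be always visible. The question IDs and the `FORM_V3_RULES` name are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Rule:
    when_q: str        # question whose answer is tested
    op: str            # "eq", "neq", "gt", "lt" in this sketch
    value: Any         # value to compare against
    action: str        # "show" or "require"
    targets: list[str] = field(default_factory=list)


OPS = {
    "eq": lambda a, b: a == b,
    "neq": lambda a, b: a != b,
    "gt": lambda a, b: a is not None and a > b,
    "lt": lambda a, b: a is not None and a < b,
}


def evaluate(rules: list[Rule], answers: dict[str, Any]) -> tuple[set[str], set[str]]:
    """Return (visible_targets, required_targets) triggered by the current answers."""
    visible, required = set(), set()
    for r in rules:
        if OPS[r.op](answers.get(r.when_q), r.value):
            if r.action == "show":
                visible.update(r.targets)
            elif r.action == "require":
                required.update(r.targets)
    return visible, required


# Rules are saved together with a specific form version (hypothetical IDs).
FORM_V3_RULES = [
    Rule("damaged", "eq", "yes", "show", ["damage_type", "damage_photo"]),
    Rule("damaged", "eq", "yes", "require", ["damage_photo"]),
]
```

Because the rules are plain data, they can be serialized next to the form version. An old submission can then be re-evaluated against the exact rules it was collected under.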

Validation should prevent common errors while staying practical offline:

Use clear, human error messages (“Enter a value between 0 and 60”) and decide what is a hard block vs. a warning.
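The sketch below shows one way to separate hard blocks from warnings for a numeric answer. A missing or out-of-range value blocks submission. A value inside the range but above an optional "unusual" threshold only triggers a warning. The function names and the `soft_hi` threshold are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Issue:
    question_id: str
    message: str
    blocking: bool  # True = hard block on submit, False = warning only


def check_range(question_id: str, value: Optional[float], lo: float, hi: float,
                required: bool = True, soft_hi: Optional[float] = None) -> list[Issue]:
    """Hard-block missing or out-of-range values; warn on unusual but valid ones."""
    if value is None:
        return [Issue(question_id, "This answer is required.", True)] if required else []
    if not lo <= value <= hi:
        return [Issue(question_id, f"Enter a value between {lo:g} and {hi:g}.", True)]
    if soft_hi is not None and value > soft_hi:
        return [Issue(question_id, f"{value:g} is unusually high. Please double-check.", False)]
    return []


def can_submit(issues: list[Issue]) -> bool:
    """Warnings are shown to the user but never block submission."""
    return not any(i.blocking for i in issues)
```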

A reliable approach is: Form → Sections → Questions → Responses, plus metadata (user, timestamp, location, version). Prefer storing responses as typed values (number/date/string) rather than only as text.

Version your forms. When a question changes, create a new version so analytics can compare apples to apples.
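The Form → Sections → Questions → Responses structure might look like the dataclasses below. This is a minimal sketch: responses hold typed values, and every submission records the form version it was collected with. The class and field names are illustrative, not a prescribed schema.

```python
from dataclasses import dataclass, field
from datetime import date, datetime
from typing import Optional, Union

# Typed answer values instead of free text.
Value = Union[int, float, str, bool, date, None]


@dataclass
class Question:
    id: str
    type: str  # e.g. "number", "date", "single_choice", "text", "photo"
    label: str


@dataclass
class Section:
    id: str
    title: str
    questions: list[Question] = field(default_factory=list)


@dataclass
class Form:
    id: str
    version: int  # bump on any question change; never edit a published version
    sections: list[Section] = field(default_factory=list)


@dataclass
class Response:
    question_id: str
    value: Value


@dataclass
class Submission:
    form_id: str
    form_version: int  # ties answers to the exact form definition used
    user_id: str
    created_at: datetime
    location: Optional[tuple[float, float]] = None
    responses: list[Response] = field(default_factory=list)
```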

Create templates for common survey patterns (site inspection, customer visit, inventory check). Allow controlled customization—like region-specific options—without forking everything. Templates reduce build time and keep results consistent across teams.

Field teams work in bright sun, rain, gloves, and noisy streets—often with one hand free and weak signal. Your UX should reduce effort, prevent mistakes, and make progress obvious.

Design the app so data entry never depends on a connection. Let people complete a full survey offline, attach photos, and move on.

Make sync status unmissable: a simple indicator like Not synced / Syncing / Synced / Needs attention at the record level and a small global status in the header. Fieldworkers shouldn’t have to guess whether their work is safely uploaded.

Use large touch targets, clear spacing, and high-contrast labels. Keep typing to a minimum by leaning on:

When text is required, offer short suggestions and input masks (e.g., phone numbers) to reduce formatting errors.

Support Save as draft at any time, including mid-question. Fieldwork gets interrupted—calls, gates, weather—so “resume later” must be reliable.

Navigation should be predictable: a simple section list, a “Next incomplete” button, and a review screen that jumps directly to missing or invalid answers.

Show errors inline and explain how to fix them: “Photo is required for this site type” or “Value must be between 0 and 100.” Avoid vague messages like “Invalid input.” When possible, prevent errors earlier with constrained choices and clear examples under the field.

Location is often the difference between “we collected data” and “we can prove where and when it was collected.” A well-designed location layer also reduces back-and-forth with field teams by making assignments and coverage visible on a map.

When a survey starts, record GPS coordinates along with an accuracy value (e.g., in meters). Accuracy matters as much as the pin itself: a point captured at ±5 m is very different from ±80 m.

Allow a manual adjustment when needed—urban canyons, dense forests, and indoor work can confuse GPS. If you allow edits, log both the original reading and the adjusted value, plus a reason (optional), so reviewers understand what happened.
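The sketch below keeps the device reading immutable and stores any manual correction next to it. It also flags the record for review when the fix is poor or was edited. The 30 m review threshold is an assumption; set it to whatever "good enough" means for your surveys.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class GpsFix:
    lat: float
    lon: float
    accuracy_m: float   # horizontal accuracy radius reported by the device
    captured_at: datetime


@dataclass
class SurveyLocation:
    original: GpsFix                      # always kept, never overwritten
    adjusted: Optional[tuple[float, float]] = None
    adjust_reason: Optional[str] = None   # optional free text for reviewers

    def adjust(self, lat: float, lon: float, reason: Optional[str] = None) -> None:
        """Record a manual correction without discarding the device reading."""
        self.adjusted = (lat, lon)
        self.adjust_reason = reason

    @property
    def effective(self) -> tuple[float, float]:
        """Coordinates used for maps and reports: adjusted if present, else original."""
        return self.adjusted or (self.original.lat, self.original.lon)

    def needs_review(self, max_accuracy_m: float = 30.0) -> bool:
        """Flag poor fixes or manual edits for supervisor review (threshold assumed)."""
        return self.original.accuracy_m > max_accuracy_m or self.adjusted is not None
```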

Maps are most valuable when they answer “what should I do next?” Consider map views for:

If your workflow includes quotas or zones, add simple filters (unvisited, due today, high priority) rather than complex GIS controls.

Geofencing can block submissions outside an approved boundary or prompt a warning (“You’re 300 m outside the assigned area”). Use it where it protects data quality, but avoid strict blocking if GPS is unreliable in your region—warnings plus supervisor review may work better.
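A simple circular geofence can be checked with the haversine formula. The formula treats the Earth as a sphere, which is adequate for boundaries of a few hundred meters. The sketch makes two assumptions. First, the assigned area is a circle. Second, the reading's accuracy radius is subtracted before comparing, so a noisy fix is not penalized for error it can't control. The `strict` flag switches from warning to blocking.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two lat/lon points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def geofence_check(lat: float, lon: float, accuracy_m: float,
                   center_lat: float, center_lon: float, radius_m: float,
                   strict: bool = False) -> tuple[str, str]:
    """Return ("ok" | "warn" | "block", message) for a circular assigned area."""
    d = haversine_m(lat, lon, center_lat, center_lon)
    outside_by = d - radius_m - accuracy_m  # give the fix the benefit of the doubt
    if outside_by <= 0:
        return "ok", ""
    msg = f"You're {outside_by:.0f} m outside the assigned area"
    return ("block" if strict else "warn"), msg
```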

Record key timestamps (opened, saved, submitted, synced) and the user ID/device ID for each event. This audit trail supports compliance, resolves disputes, and improves QA without adding extra steps for the fieldworker.
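An append-only event log is one straightforward way to capture that trail. The minimal sketch below accepts only the four event types named above and stamps each event in UTC. It keeps events in memory; a real app would persist them alongside the record.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

EVENT_TYPES = ("opened", "saved", "submitted", "synced")


@dataclass(frozen=True)
class AuditEvent:
    record_id: str
    event: str
    user_id: str
    device_id: str
    at_utc: datetime


class AuditLog:
    """Append-only: events are added, never edited or deleted."""

    def __init__(self) -> None:
        self._events: list[AuditEvent] = []

    def record(self, record_id: str, event: str, user_id: str, device_id: str) -> AuditEvent:
        if event not in EVENT_TYPES:
            raise ValueError(f"unknown event type: {event}")
        e = AuditEvent(record_id, event, user_id, device_id, datetime.now(timezone.utc))
        self._events.append(e)
        return e

    def history(self, record_id: str) -> list[AuditEvent]:
        """All events for one record, in the order they were logged."""
        return [e for e in self._events if e.record_id == record_id]
```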

Field surveys often need proof: a photo of a damaged pole, a short video of a leak, or an audio note from a resident interview. If your app treats media as an afterthought, fieldworkers will fall back to personal camera apps and send files over chat—creating gaps and privacy risks.

Make media capture a first-class question type, so attachments are automatically tied to the right record (and the right question).

Allow optional annotations that help reviewers later: captions, issue tags, or simple markup (arrows/circles) on images. Keep it lightweight—one tap to capture, one tap to accept, then move on.

For asset surveys, barcode/QR scanning reduces typing errors and speeds up repetitive work. Use scanning as an input method for fields like Asset ID, Inventory code, or Meter number, and show immediate validation feedback (e.g., “ID not found” or “Already surveyed today”).
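The immediate feedback after a scan can be as simple as the sketch below. It assumes the device caches a set of known asset IDs and a map of when each asset was last surveyed. That cache may be stale offline, so these messages are best treated as warnings rather than hard blocks. The ID format is hypothetical.

```python
from datetime import date
from typing import Optional


def validate_scanned_id(asset_id: str,
                        known_assets: set[str],
                        last_surveyed: dict[str, date],
                        today: date) -> Optional[str]:
    """Return a feedback message for a scanned asset ID, or None if it looks fine."""
    code = asset_id.strip().upper()  # normalize stray spaces and case
    if code not in known_assets:
        return "ID not found"
    if last_surveyed.get(code) == today:
        return "Already surveyed today"
    return None
```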

When scanning fails (dirty label, low light), provide a quick fallback: manual entry plus a “photo of label” option.

Media can overwhelm mobile data plans and slow down sync. Apply sensible defaults:

Always show the final file size before upload so users know how much data will sync.

Define clear limits per question and per submission (count and total MB). When offline, store attachments locally with rules like:

This keeps the app reliable in the field while preventing surprise storage and data bills.
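A per-question and per-submission limit check might look like the sketch below. The default limits (5 files per question, 50 MB per submission) are placeholders, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MediaLimits:
    # Placeholder values; tune per question and per project.
    max_files_per_question: int = 5
    max_submission_mb: float = 50.0


def can_attach(new_file_mb: float,
               files_on_question: int,
               submission_total_mb: float,
               limits: MediaLimits = MediaLimits()) -> tuple[bool, str]:
    """Check a new attachment against per-question and per-submission limits."""
    if files_on_question >= limits.max_files_per_question:
        return False, f"Maximum {limits.max_files_per_question} files for this question"
    if submission_total_mb + new_file_mb > limits.max_submission_mb:
        remaining = max(limits.max_submission_mb - submission_total_mb, 0)
        return False, f"Only {remaining:.1f} MB left for this submission"
    return True, ""
```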

Field survey apps live or die by what happens when connectivity is unreliable. Your goal is simple: a fieldworker should never worry about losing work, and a supervisor should be able to trust what’s in the system.

Decide whether sync is manual (a clear “Sync now” button) or automatic (quietly syncing in the background). Many teams use a hybrid: autosync when a connection is decent, plus a manual control for peace of mind.

Also plan background retries. If an upload fails, the app should queue it and retry later without forcing the user to re-enter anything. Show a small status indicator (“3 items pending”) instead of interrupting the workflow.
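The sketch below shows a local outbox with exponential backoff and jitter. `upload` is a hypothetical callable that returns True only when the server confirms receipt. A record leaves the queue only after that confirmation, so a failed attempt never loses data. The delay values are assumptions.

```python
import random
from collections import deque
from typing import Callable, Optional


class SyncQueue:
    """Local outbox: records wait here until the server confirms receipt."""

    def __init__(self, upload: Callable[[dict], bool],
                 base_delay_s: float = 5.0, max_delay_s: float = 600.0) -> None:
        self.upload = upload
        self.pending: deque = deque()
        self.base_delay_s = base_delay_s
        self.max_delay_s = max_delay_s
        self.failures = 0

    def enqueue(self, record: dict) -> None:
        self.pending.append(record)

    def status_text(self) -> str:
        n = len(self.pending)
        return "All synced" if n == 0 else f"{n} item{'s' if n != 1 else ''} pending"

    def flush(self) -> Optional[float]:
        """Upload in order; return seconds to wait before retrying, or None when empty."""
        while self.pending:
            if not self.upload(self.pending[0]):
                self.failures += 1
                # Exponential backoff with jitter, capped at max_delay_s.
                delay = min(self.base_delay_s * 2 ** (self.failures - 1), self.max_delay_s)
                return delay * random.uniform(0.5, 1.0)
            self.pending.popleft()  # remove only after the server confirms
            self.failures = 0
        return None
```

The status string matches the "3 items pending" style of indicator. The backoff keeps many devices from retrying in lockstep when a server comes back online.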

Assume the device is the primary workspace. Save every form and edit locally immediately, even if the user is online. This offline-first approach prevents data loss from brief signal drops and makes the app feel faster.
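As a rough illustration of "save locally first", the sketch below uses Python's built-in `sqlite3` to stand in for the on-device database. Every edit is upserted immediately and marked `not_synced`, and the sync layer picks those rows up later. The table layout is an assumption. The `ON CONFLICT ... DO UPDATE` upsert syntax needs SQLite 3.24 or newer.

```python
import json
import sqlite3
import time
import uuid
from typing import Optional


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Local on-device store; every edit lands here before any network call."""
    db = sqlite3.connect(path)
    db.execute("""CREATE TABLE IF NOT EXISTS records (
        id TEXT PRIMARY KEY,
        data TEXT NOT NULL,
        sync_status TEXT NOT NULL DEFAULT 'not_synced',
        updated_at REAL NOT NULL)""")
    return db


def save_local(db: sqlite3.Connection, data: dict, record_id: Optional[str] = None) -> str:
    """Upsert a draft or edit immediately and mark it for sync."""
    rid = record_id or str(uuid.uuid4())
    with db:  # commits the transaction on success
        db.execute(
            """INSERT INTO records (id, data, sync_status, updated_at)
               VALUES (?, ?, 'not_synced', ?)
               ON CONFLICT(id) DO UPDATE SET data = excluded.data,
                   sync_status = 'not_synced', updated_at = excluded.updated_at""",
            (rid, json.dumps(data), time.time()),
        )
    return rid


def unsynced(db: sqlite3.Connection) -> list[str]:
    """IDs of records still waiting to be uploaded, oldest edit first."""
    return [r[0] for r in db.execute(
        "SELECT id FROM records WHERE sync_status = 'not_synced' ORDER BY updated_at")]
```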

Conflicts happen when the same record is edited on two devices, or a supervisor updates a case while a fieldworker is offline. Pick a strategy that matches your operations:

Document the rule in plain language and keep an audit trail so changes are traceable.
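One common strategy is a three-way, field-level merge. It compares each field against the last version both sides agreed on, labeled `base` here. A field changed on only one side takes that side's value. A field changed differently on both sides is a true conflict; this sketch keeps the server value and reports the field for supervisor review. That tie-break is an assumption, so pick whichever rule your operations can explain.

```python
from typing import Any


def merge_fields(base: dict[str, Any], device: dict[str, Any],
                 server: dict[str, Any]) -> tuple[dict[str, Any], list[str]]:
    """Three-way, field-level merge; returns (merged_record, conflicting_fields)."""
    merged: dict[str, Any] = {}
    conflicts: list[str] = []
    for key in base.keys() | device.keys() | server.keys():
        b, mine, theirs = base.get(key), device.get(key), server.get(key)
        if mine == theirs:
            merged[key] = mine
        elif mine == b:        # only the server changed it
            merged[key] = theirs
        elif theirs == b:      # only the device changed it
            merged[key] = mine
        else:                  # both changed it differently: server wins, flag it
            merged[key] = theirs
            conflicts.append(key)
    return merged, sorted(conflicts)
```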

Photos, audio, and videos are where syncing most often breaks down. Use chunked uploads (sending the file in smaller pieces) and resumable transfers, so a 30 MB video that drops at 95% resumes from that point instead of starting over. Let users keep working while media uploads in the background.
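The client side of a resumable transfer can be sketched as follows. Before sending anything, the client asks the server how many bytes it already holds for this upload, then continues from that offset chunk by chunk. `server_offset` and `send_chunk` are hypothetical hooks for your own API. Protocols such as tus standardize this pattern if you would rather not design one yourself. The content hash gives retries a stable upload ID, and the 1 MiB chunk size is an assumption.

```python
import hashlib
from typing import Callable

CHUNK_SIZE = 1024 * 1024  # 1 MiB; an assumption, tune for your network


def upload_id_for(data: bytes) -> str:
    """Content hash as a stable upload ID, so a retry resumes the same transfer."""
    return hashlib.sha256(data).hexdigest()


def resumable_upload(data: bytes, upload_id: str,
                     server_offset: Callable[[str], int],
                     send_chunk: Callable[[str, int, bytes], bool]) -> bool:
    """Upload `data` in chunks, resuming from whatever the server already has."""
    offset = server_offset(upload_id)  # resume point after a dropped connection
    while offset < len(data):
        chunk = data[offset:offset + CHUNK_SIZE]
        if not send_chunk(upload_id, offset, chunk):
            return False  # caller retries later; bytes already sent are kept
        offset += len(chunk)
    return True
```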

Provide admin tools to spot trouble early: dashboards or reports that show sync failures, last successful sync per device, storage pressure, and app version. A simple “device health” view can save hours of support time and protect your data quality.
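A "device health" view can start as a handful of rules over a heartbeat that each device reports when it syncs. The `DeviceReport` shape and every threshold below are hypothetical placeholders.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class DeviceReport:
    # Hypothetical heartbeat each device sends with every sync attempt.
    device_id: str
    app_version: str
    last_successful_sync: datetime
    recent_sync_failures: int
    free_storage_mb: float


def health_flags(d: DeviceReport, now: datetime, latest_version: str,
                 stale_after: timedelta = timedelta(hours=24),
                 max_failures: int = 3,
                 min_free_mb: float = 500.0) -> list[str]:
    """List reasons an admin should check this device; thresholds are placeholders."""
    flags = []
    if now - d.last_successful_sync > stale_after:
        flags.append("stale sync")
    if d.recent_sync_failures > max_failures:
        flags.append("repeated sync failures")
    if d.free_storage_mb < min_free_mb:
        flags.append("low storage")
    if d.app_version != latest_version:
        flags.append("outdated app")
    return flags
```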

Field survey apps often handle sensitive information (locations, photos, respondent details, operational notes). Security and privacy aren’t “nice to have” features—if people don’t trust the app, they won’t use it, and you may create compliance risks.

Start with role-based access control (RBAC) and keep it simple:

Design permissions around real workflows: who can edit after submission, who can delete records, and who can see personally identifiable information (PII). A useful pattern is to let supervisors see operational fields (status, GPS, timestamps) while restricting respondent details unless necessary.
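A simple role table plus field-level masking covers that supervisor pattern. In the sketch below, supervisors see operational fields such as status, GPS, and timestamps, while PII stays masked unless a role explicitly has `view_pii`. The roles, permission names, and PII field list are all hypothetical and would come from your own workflow design.

```python
from enum import Enum


class Role(Enum):
    FIELDWORKER = "fieldworker"
    SUPERVISOR = "supervisor"
    ADMIN = "admin"


# Hypothetical classification of PII fields for one form.
PII_FIELDS = {"respondent_name", "respondent_phone"}

PERMISSIONS = {
    Role.FIELDWORKER: {"create", "edit_own_draft"},
    Role.SUPERVISOR: {"view_all", "edit_submitted", "approve"},
    Role.ADMIN: {"view_all", "edit_submitted", "approve", "delete", "view_pii", "manage_forms"},
}


def can(role: Role, action: str) -> bool:
    return action in PERMISSIONS[role]


def visible_record(role: Role, record: dict) -> dict:
    """Mask PII in review views unless the role is explicitly allowed to see it."""
    if can(role, "view_pii"):
        return dict(record)
    return {k: ("***" if k in PII_FIELDS else v) for k, v in record.items()}
```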

Fieldwork often happens offline, so your app will store data locally. Treat the phone as a potentially lost device.

Also consider safeguards like automatic logout, biometric/PIN unlock for the app, and the ability to revoke sessions or wipe local data when a device is compromised.

Your sign-in method should match how field teams actually operate:

Whatever you choose, support quick account recovery and clear session handling—nothing slows fieldwork like lockouts.

Collect only what you truly need. If you must gather PII, document why, set retention rules, and make consent explicit.

Build lightweight consent flows: a checkbox with a short explanation, a signature field when required, and metadata that records when and how consent was obtained. This keeps your surveys respectful and easier to audit later.

Your tech stack should fit how field teams actually work: unreliable connectivity, mixed device fleets, and a need to ship updates without breaking data collection. The “best” stack is the one your team can build, maintain, and iterate on quickly.

If you need to support both iOS and Android, a cross-platform framework is often the fastest path to a solid MVP.

A practical compromise is cross-platform for most UI and logic, with small native modules only where needed (e.g., specialized Bluetooth device SDKs).

Your backend must handle user accounts, form definitions, submissions, media files, and sync.

Whichever you pick, design around an offline-first client: local storage on the device, a sync queue, and clear server-side validation.

If you want to accelerate the first working version without committing to a full traditional build right away, a vibe-coding platform like Koder.ai can help you prototype the web admin, backend APIs, and even a companion mobile app from a chat-driven spec. It’s especially useful for field survey products because you can iterate quickly on form definitions, roles/permissions, and sync behavior, then export source code when you’re ready to take the project in-house. (Koder.ai commonly ships React for web, Go + PostgreSQL for backend services, and Flutter for mobile.)

Field data rarely lives alone. Common integration targets include CRM/ERP, GIS systems, spreadsheets, and BI tools. Favor an architecture with:

As a rule of thumb:

If your timeline is tight, keep the first release focused on reliable capture and sync—everything else can build on that foundation.

Before you commit to full build-out, create a small prototype that proves the app works where it matters: in the field, on real devices, under real constraints. A good prototype isn’t a polished demo—it’s a fast way to uncover usability problems and missing requirements while changes are still cheap.

Start with 2–3 key flows that represent daily work:

Keep the prototype focused. You’re validating the core experience, not building every form type or feature.

If you’re moving fast, consider using a planning-first approach (user roles → workflows → data model → screens) and then generating a working skeleton quickly. For example, Koder.ai’s planning mode can help you turn those requirements into a build plan and a baseline implementation, while snapshots and rollback make it safer to iterate aggressively during prototype cycles.

Run quick field tests with real users (not just stakeholders) and real conditions: bright sun, gloves, spotty reception, older phones, and time pressure. Ask participants to “think out loud” as they work so you can hear what’s confusing.

During tests, track concrete issues:

Even small delays add up when someone completes dozens of surveys per day.

Use what you learn to refine question order, grouping, validation messages, and default values (e.g., auto-fill date/time, last-used site, or common answers). Tightening the form design early prevents expensive rework later and sets you up for a smoother MVP build. If you’re defining scope, also see /blog/mobile-app-mvp for prioritization ideas.

Testing a mobile field survey app at a desk is rarely enough. Before release, you want proof that forms, GPS, and sync behave the same way in basements, rural roads, and busy job sites.

Run structured offline scenarios: create surveys in airplane mode, in areas with one bar of signal, and during network handoffs (Wi‑Fi → LTE). Verify that users can still search lists, save drafts, and queue submissions without losing work.

Pay special attention to “edge timing” issues: a form saved at 11:58 PM local time, then synced after midnight; or a device that changes time zones mid-trip. Confirm that timestamps remain consistent in the backend and reports.
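One way to keep those edge cases consistent is to store each instant in UTC together with the device's UTC offset at capture time. The backend can then order events correctly, and reports can still show the local date the fieldworker saw. The field names in the sketch below are assumptions. Naive (timezone-less) datetimes are rejected because their meaning shifts when the device changes time zones.

```python
from datetime import datetime, timedelta, timezone


def stamp(local_dt: datetime) -> dict:
    """Store an instant as UTC plus the device's UTC offset at capture time."""
    if local_dt.tzinfo is None or local_dt.utcoffset() is None:
        raise ValueError("timestamp must be timezone-aware")
    return {
        "utc": local_dt.astimezone(timezone.utc).isoformat(),
        "offset_minutes": int(local_dt.utcoffset().total_seconds() // 60),
    }


def local_date(s: dict) -> str:
    """Recover the calendar date the fieldworker saw, for daily reports."""
    utc = datetime.fromisoformat(s["utc"])
    return (utc + timedelta(minutes=s["offset_minutes"])).date().isoformat()
```

Consider a form saved at 11:58 PM at UTC−5. It is stored as 04:58 UTC the next day, yet it still counts toward the original local date in reports.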

Test GPS accuracy across device types and environments (urban canyons, indoors near windows, open field). Decide what "good enough" means (e.g., warn when the reported accuracy is worse than 30 m) and verify that those prompts appear.

Also test permission flows from a clean install: location, camera, storage, Bluetooth integrations, and background sync. A surprising number of failures happen when a user taps “Don’t Allow” once.

Automate regression tests for skip logic, calculations, required fields, and validation rules. Each new form update can break old assumptions—automated checks keep releases safe.
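A table-driven check is a cheap way to start. Each case pairs a set of answers with the questions that should be visible, and every bug you fix becomes a new row. In the sketch below, `visible_questions` is a hypothetical stand-in for your form's rule engine. It is kept dependency-free here; in a real project you would likely express the same cases with a test runner such as pytest.

```python
def visible_questions(answers: dict) -> set[str]:
    """Hypothetical skip logic for one form version under test."""
    shown = {"site_id", "damaged"}
    if answers.get("damaged") == "yes":
        shown |= {"damage_type", "damage_photo"}
        if answers.get("damage_type") == "structural":
            shown.add("engineer_notes")
    return shown


# Each case: answers in, expected visible questions out.
CASES = [
    ({}, {"site_id", "damaged"}),
    ({"damaged": "no"}, {"site_id", "damaged"}),
    ({"damaged": "yes"}, {"site_id", "damaged", "damage_type", "damage_photo"}),
    ({"damaged": "yes", "damage_type": "structural"},
     {"site_id", "damaged", "damage_type", "damage_photo", "engineer_notes"}),
    # A leftover answer from a hidden branch must not re-open that branch.
    ({"damaged": "no", "damage_type": "structural"}, {"site_id", "damaged"}),
]


def run_regression() -> list[str]:
    """Return descriptions of failing cases (empty list means all pass)."""
    failures = []
    for answers, expected in CASES:
        got = visible_questions(answers)
        if got != expected:
            failures.append(f"{answers}: expected {sorted(expected)}, got {sorted(got)}")
    return failures


if __name__ == "__main__":
    problems = run_regression()
    print("all skip-logic cases pass" if not problems else "\n".join(problems))
```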

Use a simple checklist so nothing is missed:

A field survey app only delivers value when teams actually use it correctly, consistently, and comfortably. Treat launch as an operational project—not just a button you press in the app store.

Aim for “learn in 10 minutes, master in a day.” Build onboarding into the app so people don’t need to hunt for instructions.

Include:

Start with a pilot team that represents real working conditions (different regions, devices, and skill levels). Keep a tight feedback loop:

A phased rollout reduces risk and builds internal champions who can help train others.

Field data collection isn’t complete until it can be reviewed and used. Provide simple reporting options:

Keep reporting focused on decisions: what’s done, what needs attention, and what looks suspicious.

Use analytics to spot friction points and improve:

Turn those insights into practical changes: shorten forms, clarify wording, tweak validation rules, adjust workflows, and rebalance assignments so teams stay productive and data stays trustworthy.

Start by defining primary users (inspectors, technicians, enumerators, etc.) and the decisions the data must support (e.g., approve a site, schedule a repair, flag non-compliance). Then choose the survey cadence (one-time vs recurring vs audits) and set measurable metrics like completion time, error rate, sync reliability, and rework rate—so your MVP doesn’t drift.

Assume offline is normal. Design for:

These constraints translate into requirements like autosave, fewer steps per record, large tap targets, and clear progress/sync indicators.

Prioritize inputs that are fast and reportable:

Use stable internal IDs for options (labels can change), and keep question types consistent so validation and analytics stay reliable over time.

Use conditional logic to show only what’s relevant (e.g., “If damaged = yes, ask damage type”). Keep it manageable by modeling logic as simple rules (conditions → actions) and storing those rule definitions with the form version so older submissions remain interpretable even after the form evolves.

Focus validation where mistakes are common:

Use clear, actionable messages and decide what’s a hard block vs a warning, especially for offline situations where lookup data may be unavailable.

Use an offline-first approach:

The goal is that fieldworkers never wonder whether their work is safe.

Capture GPS with an accuracy value (meters) and log key timestamps (opened/saved/submitted/synced) plus user/device IDs for traceability. Allow manual location adjustment when GPS is unreliable, but log both the original and adjusted coordinates (and optionally a reason) so reviewers can trust what happened.

Make media a first-class part of the form:

This prevents teams from using personal camera apps and sharing files outside the system.

Pick a conflict strategy you can explain:

Always keep an audit trail of changes so supervisors can see what changed, when, and by whom.

Choose based on device needs and team capacity:

Backends can be managed (hosted Postgres + managed auth), serverless (suited to campaign-driven traffic spikes), or custom (maximum control). Whatever you choose, design around an offline-first client, a sync queue, and a stable API for integrations (CRM/ERP, GIS, BI, exports).