Dec 10, 2025 · 8 min

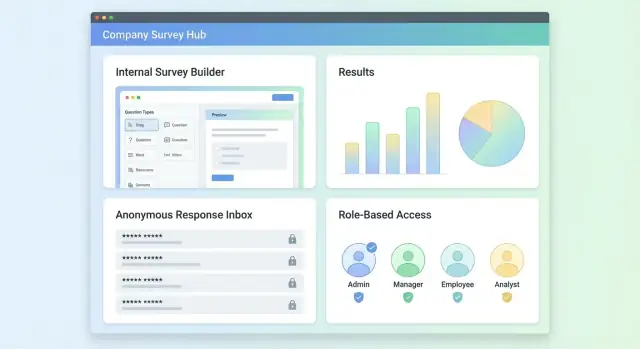

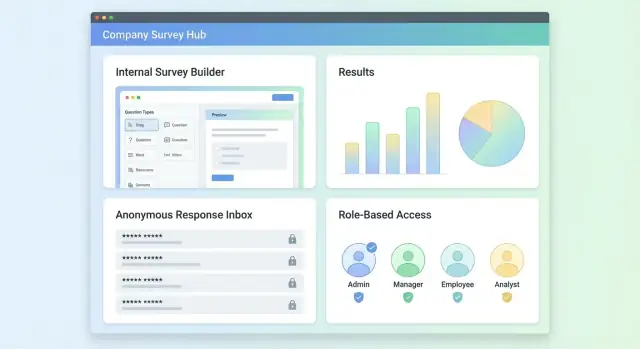

Create a Web App for Internal Surveys and Feedback: Guide

Learn how to plan, design, and build a web app for internal surveys and feedback—roles, anonymity, workflows, analytics, security, and rollout steps.

An internal survey app should turn employee input into decisions—not just “run surveys.” Before choosing features, define the problem you’re solving and what “done” looks like.

Start by naming the survey types you expect to run regularly. Common categories include pulse checks, engagement surveys, suggestion collection, 360 feedback, and post-event surveys.

Each category implies different needs—frequency, anonymity expectations, reporting depth, and follow-up workflows.

Clarify who will own, operate, and trust the system:

Write down stakeholder goals early to prevent feature creep and avoid building dashboards no one uses.

Set measurable outcomes so you can judge the app’s value after rollout:

Be explicit about constraints that affect scope and architecture:

A tight first version is usually: create surveys, distribute them, collect responses safely, and produce clear summaries that drive follow-up actions.

Roles and permissions determine whether the tool feels credible—or politically risky. Start with a small set of roles, then add nuance only when real needs appear.

Employee (respondent)

Employees should be able to discover surveys they’re eligible for, submit responses quickly, and (when promised) trust that responses can’t be traced back to them.

Manager (viewer + action owner)

Managers typically need team-level results, trends, and follow-up actions—not raw row-level responses. Their experience should focus on understanding themes and improving their team.

HR/Admin (program owner)

HR/admin users usually create surveys, manage templates, control distribution rules, and view cross-organization reporting. They also handle exports (when allowed) and audit requests.

System admin (platform owner)

This role maintains integrations (SSO, directory sync), access policies, retention settings, and system-wide configuration. They should not automatically see survey results unless explicitly granted.

Create survey → distribute: HR/admin selects a template, adjusts questions, sets eligible audiences (e.g., department, location), and schedules reminders.

Respond: Employee receives an invite, authenticates (or uses a magic link), completes the survey, and sees a clear confirmation.

Review results: Managers see aggregated results for their scope; HR/admin sees org-wide insights and can compare groups.

Act: Teams create follow-up actions (e.g., “improve onboarding”), assign owners, set dates, and track progress.

Define permissions in plain language:

A frequent failure is letting managers see results that are too granular (e.g., breaking down to a 2–3 person subgroup). Apply minimum reporting thresholds and suppress filters that could identify individuals.

Another is unclear permissions (“Who can see this?”). Every results page should show a short, explicit access note like: “You’re viewing aggregated results for Engineering (n=42). Individual responses are not available.”
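One way to keep that note honest is to generate it from the same numbers the page renders, so it can never drift from what is actually shown. A minimal sketch; the helper name `access_note` is hypothetical, and the threshold of 7 is only an example value borrowed from the "7+ people" wording used later in this guide:

```python
def access_note(group_name: str, n: int, min_group_size: int = 7) -> str:
    """Build the access note shown at the top of a results page (hypothetical helper)."""
    if n < min_group_size:
        # Below the reporting threshold: explain why instead of showing numbers.
        return (f"Not enough responses to protect anonymity for {group_name} "
                f"(minimum {min_group_size}).")
    return (f"You're viewing aggregated results for {group_name} (n={n}). "
            "Individual responses are not available.")


print(access_note("Engineering", 42))
```

Because the threshold check lives inside the same function, a page cannot display "n=3" by accident.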

Good survey design is the difference between “interesting data” and feedback you can act on. In an internal survey app, aim for surveys that are short, consistent, and easy to reuse.

Your builder should start with a few opinionated formats that cover most HR and team needs:

These types benefit from consistent structure so results can be compared over time.

A solid MVP question library usually includes:

Make the preview show exactly what respondents will see, including required/optional markers and scale labels.

Support basic conditional logic such as: “If someone answers No, show a short follow-up question.” Keep it to simple rules (show/hide individual questions or sections). Overly complex branching makes surveys harder to test and harder to analyze later.
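Keeping rules this simple also keeps them easy to evaluate. A minimal sketch, assuming each question carries at most one "show if question X equals value Y" rule; `ShowIf`, `Question`, and `visible_questions` are hypothetical names, and the question text is illustrative:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ShowIf:
    """Show a question only when another question has a specific answer."""
    question_id: str
    equals: str


@dataclass(frozen=True)
class Question:
    id: str
    text: str
    show_if: Optional[ShowIf] = None  # None means the question is always visible


def visible_questions(questions: list[Question], answers: dict[str, str]) -> list[Question]:
    """Return the questions a respondent should see, given the answers so far."""
    visible = []
    for q in questions:
        rule = q.show_if
        if rule is None or answers.get(rule.question_id) == rule.equals:
            visible.append(q)
    return visible


survey = [
    Question("q1", "Did your onboarding prepare you for your role?"),
    Question("q1_followup", "What was missing?", ShowIf("q1", "No")),
    Question("q2", "Do you know who to ask when you are blocked?"),
]
print([q.id for q in visible_questions(survey, {"q1": "No"})])
# ['q1', 'q1_followup', 'q2']
```

Equality-only rules are deliberately limited: every path through the survey can be enumerated and tested, and analysis only has to account for "shown" versus "not shown".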

Teams will want to reuse surveys without losing history. Treat templates as starting points and create versions when publishing. That way, you can edit next month’s pulse survey without overwriting the previous one, and analytics stay tied to the exact questions that were asked.
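The versioning idea fits in a few lines: publishing copies the editable template into an immutable snapshot, and responses would then point at a specific version rather than at the template. A sketch under those assumptions; `Template`, `SurveyVersion`, and `publish` are hypothetical names:

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SurveyVersion:
    """An immutable snapshot of a template's questions at publish time."""
    template_id: str
    version: int
    questions: tuple[str, ...]


@dataclass
class Template:
    id: str
    questions: list[str]  # editable working copy
    versions: list[SurveyVersion] = field(default_factory=list)

    def publish(self) -> SurveyVersion:
        """Freeze the current questions into a new version; earlier versions are untouched."""
        snapshot = SurveyVersion(self.id, len(self.versions) + 1, tuple(self.questions))
        self.versions.append(snapshot)
        return snapshot


pulse = Template("pulse", ["I feel supported by my manager."])
v1 = pulse.publish()
pulse.questions.append("I have the tools I need to do my job.")  # next month's edit
v2 = pulse.publish()
print(v1.questions)  # v1 still contains only the original question
```

Storing the version number alongside each response is what keeps last month's charts tied to last month's wording.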

If your teams span regions, plan for optional translations: store per-question text by locale and keep answer choices consistent across languages to preserve reporting.
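The key detail is that responses store a stable choice code, while only the display labels vary by locale. A minimal sketch, assuming English is the fallback locale; the table names, codes, and translations are illustrative:

```python
from collections import Counter

# Display text per locale; codes such as "agree" never change across languages.
QUESTION_TEXT = {
    "q_support": {
        "en": "I feel supported by my manager.",
        "de": "Ich fühle mich von meiner Führungskraft unterstützt.",
    },
}
CHOICE_LABELS = {
    "agree": {"en": "Agree", "de": "Stimme zu"},
    "disagree": {"en": "Disagree", "de": "Stimme nicht zu"},
}


def localized(table: dict, key: str, locale: str, default_locale: str = "en") -> str:
    """Look up display text for a locale, falling back to the default locale."""
    entry = table[key]
    return entry.get(locale, entry[default_locale])


# Responses store (locale, choice code), never the translated label,
# so reporting can count answers regardless of language.
responses = [("de", "agree"), ("en", "agree"), ("en", "disagree")]
print(Counter(code for _locale, code in responses))
```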

Trust is a product feature. If employees aren’t sure who can see their answers, they’ll either skip the survey or “answer safely” instead of honestly. Make visibility rules explicit, enforce them in reporting, and avoid accidental identity leaks.

Support three distinct modes and label them consistently across the builder, invite, and respondent screens:

Even without names, small groups can “out” someone. Enforce minimum group sizes everywhere results are broken down (team, location, tenure band, manager):

Comments are valuable—and risky. People may include names, project details, or personal data.
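Automated redaction can help as a first pass before a human review, not as a replacement for it. A rough sketch, assuming simple regular expressions for email addresses and phone-like digit runs; the patterns are hypothetical, will miss names and project details entirely, and will also over-match things like dates:

```python
import re

# First-pass patterns only: obvious emails and phone-like digit runs.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(comment: str) -> str:
    """Replace obvious contact details; flag the rest for human review."""
    comment = EMAIL.sub("[email removed]", comment)
    return PHONE.sub("[phone removed]", comment)


print(redact("Ask jane.doe@example.com or call +1 555 123 4567."))
# Ask [email removed] or call [phone removed].
```

Storing the redacted text separately from the original, with access to the original restricted, keeps the step reversible if a redaction turns out to be wrong.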

Maintain audit trails for accountability, but don’t turn them into a privacy leak:

Before submission, show a short “Who can see what” panel that matches the selected mode. Example:

Your responses are anonymous. Managers will only see results for groups of 7+ people. Comments may be reviewed by HR to remove identifying details.

Clarity reduces fear, increases completion rates, and makes your feedback program credible.

Getting a survey in front of the right people—and only once—matters as much as the questions. Your distribution and login choices directly affect response rate, data quality, and trust.

Support multiple channels so admins can choose what fits the audience:

Keep messages short, include time-to-complete, and make the link one tap away.

For internal surveys, common approaches are SSO, magic links, or employee ID–based access.

Be explicit in the UI about whether a survey is anonymous or identified. If a survey is anonymous, don’t ask users to “log in with their name” unless you clearly explain how anonymity is preserved.

Build reminders as a first-class feature:
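Two rules usually cover most reminder logic: send at fixed offsets before the close date, and only to people who haven't responded. A minimal sketch, assuming reminders go out 3 days and 1 day before close (an example cadence, not a recommendation from this guide); the function names are hypothetical:

```python
from datetime import datetime, timedelta


def reminder_times(opens_at: datetime, closes_at: datetime,
                   days_before_close: tuple[int, ...] = (3, 1)) -> list[datetime]:
    """Return reminder send times that fall inside the open window, earliest first."""
    times = []
    for days in days_before_close:
        t = closes_at - timedelta(days=days)
        if opens_at < t < closes_at:  # skip offsets that land before the survey opens
            times.append(t)
    return sorted(times)


def reminder_recipients(invited: list[str], completed: list[str]) -> list[str]:
    """Only remind people who were invited and haven't responded yet."""
    return sorted(set(invited) - set(completed))


print(reminder_times(datetime(2025, 12, 1, 9), datetime(2025, 12, 8, 17)))
```

For anonymous surveys, the "completed" list would come from a per-invitation completed flag rather than from the responses themselves, so reminders work without linking answers to people.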

Define behavior up front:

Combine methods:

Great UX matters most when your audience is busy and not particularly interested in “learning a tool.” Aim for three experiences that feel purpose-built: the survey builder, the respondent flow, and the admin console.

The builder should feel like a checklist. A left-side question list with drag-and-drop ordering works well, with the selected question shown in a simple editor panel.

Include essentials where people expect them: required toggles, help text (what the question means and how answers will be used), and quick controls for scale labels (e.g., “Strongly disagree” → “Strongly agree”). A persistent Preview button (or split-view preview) helps creators catch confusing wording early.

Keep templates lightweight: let teams start from a “Pulse check,” “Onboarding,” or “Manager feedback” template and edit in place—avoid multi-step wizards unless they meaningfully reduce errors.

Respondents want speed, clarity, and confidence. Make the UI mobile-friendly by default, with readable spacing and touch targets.

A simple progress indicator reduces drop-off (“6 of 12”). Provide save and resume without drama: autosave after each answer, and make the “Resume” link easy to find from the original invite.

When logic hides/shows questions, avoid surprise jumps. Use small transitions or section headers so the flow still feels coherent.

Admins need control without hunting through settings. Organize around real tasks: manage surveys, select audiences, set schedules, and assign permissions.

Key screens usually include:

Cover the basics: full keyboard navigation, visible focus states, sufficient contrast, and labels that make sense without context.

For errors and empty states, assume non-technical users. Explain what happened and what to do next (“No audience selected—choose at least one group to schedule”). Provide safe defaults and undo where possible, especially around sending invites.

A clean data model keeps your survey app flexible (new question types, new teams, new reporting needs) without turning every change into a migration crisis. Keep a clear separation between authoring, distribution, and results.

At minimum you’ll want:

Information architecture follows naturally: a sidebar with Surveys and Analytics, and within a survey: Builder → Distribution → Results → Settings. Keep “Teams” separate from “Surveys” so access control stays consistent.

Store raw answers in an append-friendly structure (e.g., an answers table with response_id, question_id, typed value fields). Then build aggregated tables/materialized views for reporting (counts, averages, trend lines). This avoids recalculating every chart on every page load while preserving auditability.
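Here is what that split can look like, sketched with Python's built-in `sqlite3` so it runs anywhere. The table and view names are hypothetical, and because SQLite has no materialized views, a plain view stands in; in PostgreSQL you would use `CREATE MATERIALIZED VIEW` and refresh it on a schedule or after a survey closes:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE answers (
    response_id TEXT NOT NULL,
    question_id TEXT NOT NULL,
    value_int   INTEGER,  -- scale answers (e.g., 1-5)
    value_text  TEXT,     -- free text or choice codes
    PRIMARY KEY (response_id, question_id)  -- one answer per question per response
);
-- Stand-in for a materialized view: per-question counts and averages.
CREATE VIEW question_summary AS
SELECT question_id,
       COUNT(value_int) AS n,
       AVG(value_int)   AS avg_score
FROM answers
GROUP BY question_id;
""")

rows = [("r1", "q1", 4, None), ("r2", "q1", 5, None), ("r3", "q1", 3, None),
        ("r1", "q2", None, "More 1:1s please")]
conn.executemany("INSERT INTO answers VALUES (?, ?, ?, ?)", rows)
print(conn.execute("SELECT * FROM question_summary ORDER BY question_id").fetchall())
# [('q1', 3, 4.0), ('q2', 0, None)]
```

The composite primary key also makes autosave simple: re-answering a question becomes an upsert on the same (response_id, question_id) row.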

If anonymity is enabled, separate identifiers:

- The responses table holds no user reference.
- The invitations table holds the mapping, with stricter access and shorter retention.

Make retention configurable per survey: delete invitation links after N days; delete raw responses after N months; keep only aggregates if needed. Provide exports (CSV/XLSX) aligned with those rules (/help/data-export).
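The separation can be sketched in memory: the invitation records only that it was used, and the stored response carries neither a user ID nor the token. All names here are hypothetical, and a real system would use database tables with different access rules for each:

```python
import secrets
from dataclasses import dataclass


@dataclass
class Invitation:
    token: str
    user_id: str
    completed: bool = False  # the only trace of submission on the identity side


@dataclass
class Response:
    response_id: str
    survey_id: str
    answers: dict  # deliberately no user_id and no token


invitations: dict[str, Invitation] = {}
responses: list[Response] = []


def invite(user_id: str) -> str:
    token = secrets.token_urlsafe(16)
    invitations[token] = Invitation(token, user_id)
    return token


def submit_anonymous(token: str, survey_id: str, answers: dict) -> None:
    inv = invitations.get(token)
    if inv is None or inv.completed:
        raise PermissionError("invalid or already-used invitation")
    inv.completed = True  # blocks duplicate submissions
    responses.append(Response(secrets.token_hex(8), survey_id, answers))
```

Even with this split, precise timestamps on both sides could let someone correlate a completed invitation with a response, so it is worth storing coarse submission times (or none) on the invitation side.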

For attachments and links in answers, default to deny unless there’s a strong use case. If allowed, store files in private object storage, scan uploads, and record only metadata in the database.

Free-text search is useful, but it can undermine privacy. If you add it, limit indexing to admins, support redaction, and document that search may increase the risk of re-identification. Consider “search within a single survey” instead of global search to reduce exposure.

A survey app doesn’t need exotic technology, but it does need clear boundaries: a fast UI for building and answering surveys, a reliable API, a database that can handle reporting, and background workers for notifications.

Pick a stack your team can operate confidently:

If you expect heavy analytics, Postgres still holds up well, and you can add a data warehouse later without rewriting the app.

If you want to prototype the full stack quickly (UI, API, database, and auth) from a requirements doc, Koder.ai can accelerate the build using a chat-based workflow. It’s a vibe-coding platform that generates production-oriented apps (commonly React + Go + PostgreSQL) with features like planning mode, source code export, and snapshots/rollback—useful when you’re iterating on an internal tool with sensitive permissions and privacy rules.

A practical baseline is a three-tier setup:

Add a worker service for scheduled or long-running tasks (invites, reminders, exports) to keep the API responsive.

REST is usually the simplest choice for internal tools: predictable endpoints, easy caching, straightforward debugging.

Typical REST endpoints:

- POST /surveys, GET /surveys/:id, PATCH /surveys/:id
- POST /surveys/:id/publish
- POST /surveys/:id/invites (create assignments/invitations)
- POST /responses and GET /surveys/:id/responses (admin-only)
- GET /reports/:surveyId (aggregations, filters)

GraphQL can be helpful if your builder UI needs many nested reads (survey → pages → questions → options) and you want fewer round-trips. It adds operational complexity, so use it only if the team is comfortable.

Use a job queue for sending invitations and reminders, generating exports, and other scheduled or long-running work.

If you support file uploads or downloadable exports, store files outside the database (e.g., S3-compatible object storage) and serve them via a CDN. Use time-limited signed URLs so only authorized users can download.

Run dev / staging / prod separately. Keep secrets out of code (environment variables or a secrets manager). Use migrations for schema changes, and add health checks so deployments fail fast without breaking active surveys.

Analytics should answer two practical questions: “Did we hear from enough people?” and “What should we do next?” The goal isn’t flashy charts—it’s decision-ready insight that leaders can trust.

Start with a participation view that’s easy to scan: response rate, invite coverage, and distribution over time (daily/weekly trend). This helps admins spot drop-offs early and tune reminders.
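These three numbers are cheap to compute from invitation and submission records. A minimal sketch, assuming you know the eligible audience size, the number of invites sent, and each submission's date; the function name is hypothetical:

```python
from collections import Counter
from datetime import date


def participation(eligible: int, invited: int, submitted_on: list[date]) -> dict:
    """Summarize how well a survey is reaching its audience."""
    return {
        # Share of the intended audience that actually received an invite.
        "invite_coverage": invited / eligible if eligible else 0.0,
        # Share of invitees who submitted.
        "response_rate": len(submitted_on) / invited if invited else 0.0,
        # Submissions per day, oldest first, for a trend line.
        "daily": sorted(Counter(submitted_on).items()),
    }


print(participation(60, 50, [date(2025, 12, 1), date(2025, 12, 1), date(2025, 12, 2)]))
```

A low invite coverage points at audience rules or directory sync; a low response rate with good coverage points at messaging or reminders.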

For “top themes,” be careful. If you summarize open-text comments (manually or with automated theme suggestions), label it as directional and let users click through to underlying comments. Avoid presenting “themes” as facts when the sample is small.

Breakdowns are useful, but they can expose individuals. Reuse the same minimum-group thresholds you define for anonymity everywhere you slice results. If a subgroup is under the threshold, roll it into “Other” or hide it.
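A sketch of the "roll it into Other or hide it" rule, assuming a threshold of 7 (an example value) and per-group lists of numeric scores. Groups under the threshold are pooled, and the pooled "Other" bucket is itself hidden if it is still too small:

```python
MIN_GROUP_SIZE = 7  # example threshold, matching the "7+ people" wording used earlier


def apply_threshold(group_scores: dict[str, list[float]],
                    min_size: int = MIN_GROUP_SIZE) -> dict[str, tuple[int, float]]:
    """Return {group: (n, mean)}, pooling small groups into 'Other'."""
    shown: dict[str, tuple[int, float]] = {}
    other: list[float] = []
    for group, scores in group_scores.items():
        if len(scores) >= min_size:
            shown[group] = (len(scores), sum(scores) / len(scores))
        else:
            other.extend(scores)  # too small to show on its own
    if len(other) >= min_size:
        shown["Other"] = (len(other), sum(other) / len(other))
    return shown  # 'Other' is omitted entirely if it is still below the threshold


print(apply_threshold({"Eng": [4.0] * 10, "Design": [3, 4, 5], "Legal": [2, 2, 2, 2]}))
```

One caveat this sketch does not handle: if an overall total is also displayed and only one small group is hidden, its result can be recovered by subtraction, so totals need the same scrutiny as breakdowns.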

For smaller organizations, consider a “privacy mode” that automatically raises thresholds and disables overly granular filters.

Exports are where data often leaks. Keep CSV/PDF exports behind role-based access controls and log who exported what and when. For PDFs, optional watermarking (name + timestamp) can discourage casual sharing without blocking legitimate reporting.

Open-ended responses need a workflow, not a spreadsheet.

Provide lightweight tools: tagging, theme grouping, and action notes attached to comments (with permissions so sensitive notes aren’t visible to everyone). Keep the original comment immutable and store tags/notes separately for auditability.

Close the loop by letting managers create follow-ups from insights: assign an owner, set a due date, and track status updates (e.g., Planned → In Progress → Done). An “Actions” view that links back to the source question and segment makes progress review straightforward during check-ins.
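The status flow is small enough to model as an explicit state machine, which prevents odd jumps such as reopening a finished action without a trace. A sketch assuming a strictly forward flow (Planned → In Progress → Done); the class and field names are hypothetical, and real teams may want additional transitions:

```python
from dataclasses import dataclass, field
from datetime import date
from enum import Enum


class Status(Enum):
    PLANNED = "Planned"
    IN_PROGRESS = "In Progress"
    DONE = "Done"


# Assumed strictly forward flow; extend this table if your process needs it.
ALLOWED = {
    Status.PLANNED: {Status.IN_PROGRESS},
    Status.IN_PROGRESS: {Status.DONE},
    Status.DONE: set(),
}


@dataclass
class Action:
    title: str
    owner: str
    due: date
    source_question_id: str  # links back to the insight that prompted it
    segment: str
    status: Status = Status.PLANNED
    history: list = field(default_factory=list)

    def move_to(self, new: Status) -> None:
        if new not in ALLOWED[self.status]:
            raise ValueError(f"cannot move from {self.status.value} to {new.value}")
        self.history.append((self.status, new))
        self.status = new
```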

Security and privacy aren’t add-ons for an internal surveys app—they shape whether employees trust the tool enough to use it honestly. Treat this as a checklist you can review before launch and at every release.

Use HTTPS everywhere and set secure cookie flags (Secure, HttpOnly, and an appropriate SameSite policy). Enforce strong session management (short-lived sessions, logout on password change).
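For reference, this is how those flags look when building a session cookie with Python's standard `http.cookies` module. The cookie name and the 8-hour lifetime are assumptions for illustration:

```python
from http.cookies import SimpleCookie


def session_cookie(session_id: str, max_age_seconds: int = 8 * 3600) -> str:
    """Return a Set-Cookie value with secure defaults (name and lifetime are examples)."""
    cookie = SimpleCookie()
    cookie["session"] = session_id
    morsel = cookie["session"]
    morsel["secure"] = True       # only sent over HTTPS
    morsel["httponly"] = True     # not readable from JavaScript
    morsel["samesite"] = "Lax"    # not sent on cross-site subrequests
    morsel["max-age"] = max_age_seconds
    morsel["path"] = "/"
    return morsel.OutputString()


print(session_cookie("abc123"))
```

`Lax` rather than `Strict` is a common choice when people arrive from email links: with `Strict`, the browser withholds the cookie on that first cross-site navigation, so an already signed-in user would look logged out.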

Protect all state-changing requests with CSRF defenses. Validate and sanitize input on the server (not just the browser), including survey questions, open-text responses, and file uploads (if any). Add rate limiting for login, invitation, and reminder endpoints.
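A sliding-window limiter is one simple way to rate-limit those endpoints. A minimal sketch keyed by something like endpoint plus client address; the class name and limits are hypothetical, and because it keeps state in process memory, a deployment with several API instances would need a shared store instead:

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per key within any window_seconds span."""

    def __init__(self, max_requests: int, window_seconds: float, clock=time.monotonic):
        self.max_requests = max_requests
        self.window = window_seconds
        self.clock = clock  # injectable for testing
        self._hits: dict[str, deque] = defaultdict(deque)

    def allow(self, key: str) -> bool:
        now = self.clock()
        hits = self._hits[key]
        while hits and now - hits[0] >= self.window:
            hits.popleft()  # forget requests that left the window
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


login_limiter = SlidingWindowLimiter(max_requests=5, window_seconds=60)
print(login_limiter.allow("login:203.0.113.7"))
```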

Implement role-based access control with clear boundaries (e.g., Admin, HR/Program Owner, Manager, Analyst, Respondent). Default every new feature to “deny” until explicitly permitted.

Apply least privilege in the data layer too—survey owners should only access their own surveys, and analysts should get aggregated views unless explicitly granted response-level access.
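Deny-by-default is easiest to enforce when every check goes through one function and anything not listed is refused. A sketch with hypothetical role and action names loosely based on the roles above; note that the system admin role grants no access to results, and no role is granted raw responses:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class User:
    id: str
    roles: frozenset
    teams: frozenset = frozenset()  # teams a manager is responsible for


@dataclass(frozen=True)
class Survey:
    id: str
    owner_id: str


# Deny by default: any (role, action) pair not listed here is refused.
PERMISSIONS = {
    "hr_admin": {"create_survey", "view_org_aggregates", "export_aggregates"},
    "manager": {"view_team_aggregates"},
    "analyst": {"view_org_aggregates"},
    "survey_owner": {"edit_survey"},
    "system_admin": {"manage_integrations", "manage_retention"},  # no results access
}


def can(user: User, action: str, *, survey: Optional[Survey] = None,
        team: Optional[str] = None) -> bool:
    if not any(action in PERMISSIONS.get(role, set()) for role in user.roles):
        return False
    # Scope checks: owners only edit their own surveys, managers only see their teams.
    if action == "edit_survey":
        return survey is not None and survey.owner_id == user.id
    if action == "view_team_aggregates":
        return team is not None and team in user.teams
    return True
```

Keeping the table in one place also makes it straightforward to write the "who can view what" tests recommended later in this section.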

If your culture requires it, add approvals for sensitive actions such as enabling anonymity modes, exporting raw responses, or adding new survey owners.

Encrypt data in transit (TLS) and at rest (database and backups). For especially sensitive fields (e.g., respondent identifiers or tokens), consider application-layer encryption.

Store secrets (DB credentials, email provider keys) in a secrets manager; rotate them regularly. Never log access tokens, invitation links, or response identifiers.
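A logging filter gives you a safety net in case a token slips into a log line anyway. A sketch using Python's standard `logging.Filter`; the parameter names in the pattern (`token`, `invite`, `response_id`) are hypothetical and should match whatever your URLs and messages actually use:

```python
import logging
import re

# Hypothetical keys whose values must never be written to logs.
_SENSITIVE = re.compile(r"\b(token|invite|response_id)=[^\s&]+", re.IGNORECASE)


class RedactSecretsFilter(logging.Filter):
    """Rewrite log messages so sensitive parameter values become [REDACTED]."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merge msg % args before redacting
        record.msg = _SENSITIVE.sub(lambda m: f"{m.group(1)}=[REDACTED]", message)
        record.args = ()  # args are already merged into msg
        return True  # never drop the record, only rewrite it


handler = logging.StreamHandler()
handler.addFilter(RedactSecretsFilter())
logger = logging.getLogger("survey_app")
logger.addHandler(handler)
logger.warning("Invite opened: /respond?token=%s&lang=en", "abc123")
```

Pattern-based redaction only catches what you anticipated, so it complements, rather than replaces, not passing tokens to the logger in the first place. Attach the filter to every handler that writes logs.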

Decide data residency early (where the database and backups live) and document it for employees.

Define retention rules: how long to keep invitations, responses, audit logs, and exports. Provide a deletion workflow that’s consistent with your anonymity model.
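Retention rules are easier to audit when they are data rather than scattered cron scripts. A sketch of a per-survey policy and a check a nightly cleanup job could call; the default periods (30 days for invitations, 365 days for raw responses) are placeholders, not recommendations:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class RetentionPolicy:
    invitation_days: int = 30      # identity mapping: shortest retention
    raw_response_days: int = 365
    keep_aggregates: bool = True


def due_for_deletion(kind: str, created_at: datetime,
                     policy: RetentionPolicy, now: datetime) -> bool:
    """Decide whether a record of the given kind should be deleted now."""
    if kind == "invitation":
        return now - created_at > timedelta(days=policy.invitation_days)
    if kind == "raw_response":
        return now - created_at > timedelta(days=policy.raw_response_days)
    if kind == "aggregate":
        return not policy.keep_aggregates
    raise ValueError(f"unknown record kind: {kind}")  # fail loudly on unknown data
```

Giving invitations the shortest period fits the anonymity model: once the mapping is gone, the remaining responses can no longer be tied to individual invitees.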

Be DPA-ready: maintain a list of subprocessors (email/SMS, analytics, hosting), document processing purposes, and have a contact point for privacy requests.

Add unit and integration tests for permissions: “Who can view what?” and “Who can export what?” should be covered.

Test privacy edge cases: small-team thresholds, forwarded invitation links, repeated submissions, and export behavior. Run periodic security reviews and keep an audit log of admin actions and sensitive data access.

A successful internal survey app isn’t “done” at launch. Treat the first release as a learning tool: it should solve a real feedback need, prove reliability, and earn trust—then expand based on usage.

Keep the MVP focused on the full loop from creation to insight. At minimum, include:

Aim for “fast to publish” and “easy to answer.” If admins need a training session just to send a survey, adoption will stall.

If you’re resource-constrained, this is also where tools like Koder.ai can help: you can describe roles, anonymity modes, reporting thresholds, and distribution channels in planning mode, generate an initial app, and iterate quickly—while still retaining the option to export source code and run it in your own environment.

Start with a pilot in a single team or department. Use a short pulse survey (5–10 questions) and set a tight timeline (e.g., one week open, results reviewed in the following week).

Include a couple of questions about the tool itself: Was it easy to access? Did anything feel confusing? Did anonymity expectations match reality? That meta-feedback helps you fix friction before a wider launch.

Even the best product needs internal clarity. Prepare:

If you have an intranet, publish a single source of truth (e.g., /help/surveys) and link to it from invitations.

Track a small set of operational signals every day during the first runs: deliverability (bounces/spam), response rate by audience, app errors, and page performance on mobile. Most drop-offs happen at login, device compatibility, or unclear consent/anonymity copy.

Once the MVP is stable, prioritize improvements that reduce admin effort and increase actionability: integrations (HRIS/SSO, Slack/Teams), a templates library for common surveys, smarter reminders, and more advanced analytics (trends over time, segmentation with privacy thresholds, and action tracking).

Keep your roadmap tied to measurable outcomes: faster survey creation, higher completion rates, and clearer follow-through.

Start by listing the recurring survey categories you need (pulse, engagement, suggestions, 360, post-event). For each, define:

This prevents building a generic tool that fits none of your real programs.

Use a small, clear set of roles and scope results by default:

Write permissions in plain language and show an access note on results pages (e.g., “Aggregated results for Engineering (n=42)”).

Track a few measurable outcomes:

Use these to judge value after rollout and to prioritize what to build next.

Support explicit modes and label them consistently in builder, invites, and the respondent UI:

Also add a short “Who can see what” panel before submission so the promise is unambiguous.

Enforce privacy rules everywhere results can be sliced:

Show clear messaging like “Not enough responses to protect anonymity.”

Treat comments as high value/high risk:

Keep original comments immutable and store tags/notes separately for auditability.

Offer multiple invite channels and keep messages short (time-to-complete + close date):

For authentication, common options are SSO, magic links, or employee ID–based access. If the survey is anonymous, explain how anonymity is preserved even if users authenticate to prevent duplicates.

Include these essentials:

Invest in empty states and error messages that tell non-technical users exactly what to do next.

Use a small set of core entities and separate authoring, distribution, and results:

Store raw answers in a typed answers structure, then build aggregates/materialized views for reporting. For anonymous surveys, keep identity mappings (if any) separated and tightly controlled.

Ship an MVP that completes the loop from creation to insight:

Pilot with one team using a 5–10 question pulse for one week, then review results the next week. Include a couple questions about tool access and whether anonymity expectations matched reality.