Jul 25, 2025·8 min

How to Create a Web App to Track Customer Training Completion

Learn how to plan, design, and build a web app that tracks customer course enrollment, progress, and completion—plus reminders, reports, and certificates.

Training completion tracking is more than a checklist. It answers a concrete operational question: who completed which training, when, and with what result. If your team can’t trust that answer, customer onboarding slows down, renewals get riskier, and compliance conversations become stressful.

At minimum, your learning progress web app should make it easy to:

This becomes your “source of truth” for customer training tracking—especially when multiple teams (CS, Support, Sales, Compliance) need the same answer.

“Customer training” can mean different audiences:

Clarifying the audience early affects everything: required vs optional courses, reminder cadence, and what “completion” actually means.

A practical education completion dashboard usually needs:

Define success beyond “it works”:

These metrics guide what you build first—and what you can safely leave for later.

A training-completion app becomes much easier to manage when you separate who someone is (their role) from who they belong to (their customer account). This keeps reporting accurate, prevents accidental data exposure, and makes permissions predictable.

Learner

Learners should have the simplest experience: view assigned courses, start/resume training, and see their own progress and completion status. They should not see other people’s data, even within the same customer.

Customer Admin

A customer admin manages training for their organization: invite learners, assign courses, view completion for their teams, and export reports for audits. They can edit user attributes (name, team, status) but should not change global course content unless you explicitly support customer-specific courses.

Internal Admin (your team)

Internal admins need visibility across customers: manage accounts, troubleshoot access, correct enrollments, and run global reports. This role should also control sensitive actions like deleting users, merging accounts, or changing billing-related fields.

Instructor / Content Manager (optional)

If you run live sessions or have staff updating course materials, this role can create/edit courses, manage sessions, and review learner activity. They typically shouldn’t see customer billing data or cross-customer analytics unless required.

Most B2B apps work best with a simple hierarchy:

Teams help with day-to-day management; cohorts help with reporting and deadlines.

Treat every customer organization as its own secure container. At minimum:

Designing roles and tenant boundaries early prevents painful rewrites when you add reporting, reminders, and integrations later.
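To make the role and tenant rules above concrete, here is a minimal sketch of a visibility check. The `Role`, `User`, and `can_view_progress` names are hypothetical, and the optional instructor role is left out for brevity; a real app would enforce the same rule in its queries as well.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    LEARNER = "learner"
    CUSTOMER_ADMIN = "customer_admin"
    INTERNAL_ADMIN = "internal_admin"


@dataclass(frozen=True)
class User:
    id: str
    organization_id: str  # the customer account this user belongs to
    role: Role


def can_view_progress(viewer: User, target: User) -> bool:
    """Return True if `viewer` may see `target`'s training progress."""
    if viewer.role is Role.INTERNAL_ADMIN:
        return True  # internal admins have visibility across customers
    if viewer.organization_id != target.organization_id:
        return False  # tenant boundary: never cross organizations
    if viewer.role is Role.CUSTOMER_ADMIN:
        return True  # customer admins see learners in their own organization
    return viewer.id == target.id  # learners see only their own data
```

Keeping the tenant check ahead of the role check means a new role added later cannot accidentally see another customer's data.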

A clear data model prevents most “why does this user look incomplete?” problems later. Aim to store what was assigned, what happened, and why you consider it complete—without guessing.

Start by modeling training content in a way that matches how you deliver it:

Even if your MVP only has “courses,” designing for modules/lessons avoids painful migrations when you add structure.

Completion should be explicit, not implied. Common rules include:

At the course level, define whether completion requires all required lessons, all required modules, or any N of M items. Store the version of the rule used, so reporting stays consistent if you change requirements later.
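One way to express "all required items" versus "any N of M" as stored data is sketched below. `CompletionRule` and `is_course_complete` are illustrative names, not part of any particular framework, and the rule shape is an assumption.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Set


@dataclass(frozen=True)
class CompletionRule:
    version: int                        # saved with each completion for audits
    required_items: FrozenSet[str]      # lesson or module identifiers
    min_required: Optional[int] = None  # None means "all required items"


def is_course_complete(rule: CompletionRule, completed_items: Set[str]) -> bool:
    """Evaluate a course-level rule against the items a learner finished."""
    done = len(rule.required_items & completed_items)  # ignore optional items
    needed = len(rule.required_items) if rule.min_required is None else rule.min_required
    return done >= needed
```

Saving `rule.version` next to each completion lets a report explain which requirements applied at the time, even after the rule changes.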

Track a progress record per learner and item. Useful fields:

- started_at, last_activity_at, completed_at
- expires_at (for annual renewals or compliance cycles)

This supports reminders (“inactive for 7 days”), renewal reporting, and audit trails.
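As a rough sketch, the timestamp fields can live on one record per learner and item, with small helpers for the reminder and renewal cases. The class and function names here are hypothetical, and timestamps are assumed to be timezone-aware.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class ProgressRecord:
    user_id: str
    item_id: str
    started_at: Optional[datetime] = None
    last_activity_at: Optional[datetime] = None
    completed_at: Optional[datetime] = None
    expires_at: Optional[datetime] = None  # for renewals or compliance cycles


def is_inactive(record: ProgressRecord, now: datetime, days: int = 7) -> bool:
    """True for started-but-unfinished work with no activity for `days` days."""
    if record.completed_at is not None or record.last_activity_at is None:
        return False
    return now - record.last_activity_at >= timedelta(days=days)


def needs_renewal(record: ProgressRecord, now: datetime) -> bool:
    """True once a completion has passed its expiry date."""
    return record.expires_at is not None and now >= record.expires_at
```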

Decide what evidence to store for each completion:

Keep evidence lightweight: store identifiers and summaries in your app, and link to raw artifacts (quiz answers, video logs) only if you truly need them for compliance.

Getting authentication and enrollment right makes the app feel effortless for learners and controllable for admins. The goal is to reduce friction without losing track of who completed what—and for which customer account.

For an MVP, pick one primary sign-in option and one fallback:

You can add SSO later (SAML/OIDC) when larger customers request it. Design now for it by keeping identities flexible: a user can have multiple auth methods connected to the same profile.

Most training apps need three enrollment paths:

A practical rule: enrollment should always record who enrolled the learner, when, and under which customer account.

Re-enrollment and retakes: allow admins to reset progress or create a new attempt. Keep history so reporting can show “latest attempt” vs “all attempts.”
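A minimal sketch of keeping attempt history instead of overwriting progress might look like this. The `Attempt` shape and helper names are assumptions for illustration, and the list stands in for a database table.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Attempt:
    user_id: str
    course_id: str
    number: int  # 1 for the first attempt, 2 for the first retake, and so on
    passed: bool = False
    score: Optional[int] = None


def attempts_for(attempts: List[Attempt], user_id: str, course_id: str) -> List[Attempt]:
    """All attempts for one learner and course, oldest first."""
    matching = [a for a in attempts if a.user_id == user_id and a.course_id == course_id]
    return sorted(matching, key=lambda a: a.number)


def latest_attempt(attempts: List[Attempt], user_id: str, course_id: str) -> Optional[Attempt]:
    history = attempts_for(attempts, user_id, course_id)
    return history[-1] if history else None


def start_retake(attempts: List[Attempt], user_id: str, course_id: str) -> Attempt:
    """Append a new attempt; earlier attempts stay untouched for reporting."""
    previous = latest_attempt(attempts, user_id, course_id)
    new_attempt = Attempt(user_id, course_id, number=previous.number + 1 if previous else 1)
    attempts.append(new_attempt)
    return new_attempt
```

Reports can then choose between `latest_attempt` and the full `attempts_for` history without any data being lost on reset.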

Course version updates: when content changes, decide whether completions stay valid. Common options are:

If you use passwords, support “forgot password” via email with short-lived tokens, rate limits, and clear messaging. If you use magic links, you still need recovery for cases like changed emails—usually handled by admin support or a verified email change flow.
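The short-lived token idea can be sketched with the standard library alone. The 15-minute lifetime, the dictionary record, and the function names are assumptions; the same pattern works for magic links. Only a hash of the token is stored, so a leaked table does not expose usable links.

```python
import hashlib
import secrets
from datetime import datetime, timedelta, timezone
from typing import Dict, Tuple

TOKEN_TTL = timedelta(minutes=15)  # assumed lifetime; tune to your needs


def issue_reset_token(now: datetime) -> Tuple[str, Dict]:
    """Return the token to email and the record to store server-side."""
    token = secrets.token_urlsafe(32)
    record = {
        "token_hash": hashlib.sha256(token.encode()).hexdigest(),
        "expires_at": now + TOKEN_TTL,
        "used": False,
    }
    return token, record


def redeem_reset_token(token: str, record: Dict, now: datetime) -> bool:
    """Accept a token once, and only before it expires."""
    if record["used"] or now >= record["expires_at"]:
        return False
    candidate = hashlib.sha256(token.encode()).hexdigest()
    if not secrets.compare_digest(candidate, record["token_hash"]):
        return False
    record["used"] = True  # single use
    return True
```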

The best test: can a learner join a course from an invite in under a minute, and can an admin fix mistakes (wrong email, wrong course, retake) without engineering help?

A training tracker only works if learners can quickly understand what they need to do next—without hunting through menus or guessing what “complete” means. Design the learner experience to reduce decisions and keep momentum.

Start with a single home screen that answers three questions: What’s assigned to me? When is it due? How far along am I?

Show assigned trainings as cards or rows with:

If you have compliance needs, add a clear status label like “Overdue” or “Due in 3 days,” but avoid alarmist UI.

Most customers will do training between meetings, on phones, or in short bursts. Make the player resume-first: open on the last unfinished step and keep navigation obvious.

Practical essentials:

Show completion requirements near the top of the course (and on every step if needed): e.g., “Complete all lessons,” “Pass quiz (80%+),” “Watch video to 90%.” Then display what’s left: “2 lessons remaining” or “Quiz not attempted.”

When learners finish, confirm it immediately with a completion screen and a link to certificates or history (e.g., /certificates).

Bake in a few basics from day one: keyboard navigation for the player, visible focus states, good color contrast, captions/transcripts for video, and clear error messages. These improvements reduce support tickets and drop-off.

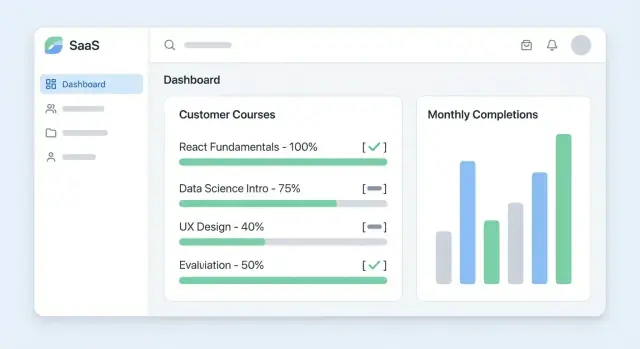

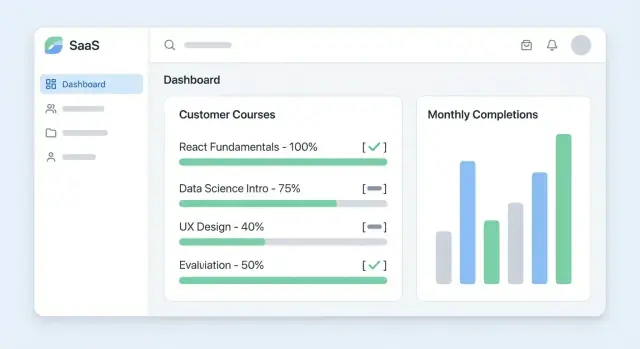

Your admin dashboard should answer one question immediately: “Are our customers actually finishing the training?” The best dashboards do this without making admins click through five screens or exporting data just to understand what’s going on.

Start with an account selector (or account switcher) so the admin always knows which customer they’re viewing. Inside each customer account, show a clear table of enrolled learners with the essentials:

A small “health summary” above the table helps admins scan quickly: total enrolled, completion rate, and how many are stalled (e.g., no activity in 14 days).
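The health summary is simple arithmetic over the enrollment rows. A sketch, assuming each row is a dictionary with `completed_at` and `last_activity_at` timestamps (hypothetical field names that mirror the progress fields discussed earlier):

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List


def health_summary(rows: List[Dict], now: datetime, stalled_after_days: int = 14) -> Dict:
    """Summarize enrollment rows for the banner above the learner table."""
    total = len(rows)
    completed = sum(1 for r in rows if r["completed_at"] is not None)
    cutoff = now - timedelta(days=stalled_after_days)
    # Stalled = started, not finished, and quiet since the cutoff.
    # Learners who never started are left out here; they are "not started".
    stalled = sum(
        1
        for r in rows
        if r["completed_at"] is None
        and r["last_activity_at"] is not None
        and r["last_activity_at"] < cutoff
    )
    return {
        "total_enrolled": total,
        "completion_rate": completed / total if total else 0.0,
        "stalled": stalled,
    }
```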

Admins typically ask questions like “Who hasn’t started Course A?” or “How is the Support team doing?” Make filters prominent and fast:

Keep the results instantly sortable by last activity, status, and completion date. This turns the dashboard into a daily working tool, not just a report.

Completion tracking becomes valuable when admins can take action right away. Add bulk actions directly on the results list:

Bulk actions should respect filters. If an admin filters to “In progress → Course B → Team: Onboarding,” the export should include exactly that cohort.

From any row in the table, admins should be able to click into a learner detail view. The key is a readable timeline that explains why someone is stuck:

This drill-down reduces back-and-forth with customers (“I swear I finished it”) because admins can see what happened and when.

Reports are where training completion tracking turns into something you can act on—and something you can prove during an audit or renewal.

Start with a small set of reports that map to common decisions:

Keep each report drillable: from a chart to the underlying list of learners, so admins can follow up quickly.

Many teams live in spreadsheets, so CSV export is the default. Include stable columns like customer account, learner email, course name, enrollment date, completion date, status, and score (if applicable).
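A minimal export sketch using Python's built-in `csv` module is shown here. The column keys are assumed names for the fields listed above; fixing their order in one constant is what keeps downstream spreadsheets from breaking.

```python
import csv
import io
from typing import Dict, Iterable

# Assumed column keys; keep the order fixed so exports stay stable.
EXPORT_COLUMNS = [
    "customer_account",
    "learner_email",
    "course_name",
    "enrollment_date",
    "completion_date",
    "status",
    "score",
]


def export_csv(rows: Iterable[Dict]) -> str:
    """Render rows as CSV; unknown keys are dropped, missing ones left blank."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=EXPORT_COLUMNS, extrasaction="ignore", restval=""
    )
    writer.writeheader()
    for row in rows:
        writer.writerow(row)
    return buffer.getvalue()
```

Passing the already-filtered rows from the dashboard into `export_csv` is one way to make exports respect the active filters.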

For compliance or customer reviews, a PDF summary can be optional: one page per customer account or per course with totals and a dated snapshot. Don’t block your MVP on perfect PDF formatting—ship CSV first.

Certificate generation is usually straightforward:

- Verification URL, e.g., /verify/<certificate_id>

The verification page should confirm the learner, course, and issue date without exposing extra personal details.
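A sketch of the verification lookup follows, assuming certificates live in a simple key-value store and use a random, unguessable ID. The function names and stored fields are hypothetical. The point is that the public view returns less than what is stored.

```python
import secrets
from datetime import date
from typing import Dict, Optional


def issue_certificate(
    store: Dict[str, Dict],
    learner_name: str,
    learner_email: str,
    course_name: str,
    issued_on: date,
) -> str:
    """Create a certificate with a random ID and return that ID."""
    certificate_id = secrets.token_urlsafe(16)
    store[certificate_id] = {
        "learner_name": learner_name,
        "learner_email": learner_email,  # stored, but never shown publicly
        "course_name": course_name,
        "issued_on": issued_on,
    }
    return certificate_id


def verification_view(store: Dict[str, Dict], certificate_id: str) -> Optional[Dict]:
    """Public data for the verification page, or None if the ID is unknown."""
    cert = store.get(certificate_id)
    if cert is None:
        return None
    return {
        "learner": cert["learner_name"],
        "course": cert["course_name"],
        "issued_on": cert["issued_on"].isoformat(),
    }
```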

Completion history grows fast. Define how long to keep:

Make retention configurable per customer account so you can support different compliance needs without rebuilding later.

Notifications are the difference between “we assigned training” and “people actually finish it.” The goal isn’t to nag—it’s to create a gentle, predictable system that prevents customers from falling behind.

Start with a small set of triggers that cover most cases:

Keep triggers configurable per course or customer account, because compliance training and product onboarding have very different tolerance for urgency.

Email is the primary channel for most customer training because it reaches learners who aren’t logged in. In-app notifications are useful for people already active in the app; think of them as reinforcement, not the main delivery mechanism.

If you add both, ensure they share the same underlying schedule so the learner doesn’t get double-pinged.

Give admins simple controls:

This keeps reminders aligned with each customer’s onboarding style and avoids spam complaints.

Store a notification history record for each send attempt: trigger type, channel, template version, recipient, timestamp, and result (sent, bounced, suppressed). This prevents duplicates, supports training compliance reporting, and helps explain “why did I get this email?” when customers ask.
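The history record doubles as a duplicate guard. In this sketch the rule "at most one successful send per trigger, recipient, and day" is an assumption, as are the class names; actual delivery is out of scope, so the log only decides and records.

```python
from dataclasses import dataclass
from datetime import date, datetime, timedelta, timezone
from typing import List


@dataclass(frozen=True)
class NotificationRecord:
    trigger_type: str
    channel: str
    template_version: str
    recipient: str
    sent_at: datetime
    result: str  # "sent", "bounced", or "suppressed"


class NotificationLog:
    def __init__(self) -> None:
        self._records: List[NotificationRecord] = []

    def already_sent(self, trigger_type: str, recipient: str, day: date) -> bool:
        """True if this trigger already reached the recipient on `day`."""
        return any(
            r.trigger_type == trigger_type
            and r.recipient == recipient
            and r.sent_at.date() == day
            and r.result == "sent"
            for r in self._records
        )

    def send_once(self, trigger_type: str, channel: str, template_version: str,
                  recipient: str, now: datetime) -> NotificationRecord:
        """Record a send attempt, suppressing same-day repeats on any channel."""
        duplicate = self.already_sent(trigger_type, recipient, now.date())
        record = NotificationRecord(
            trigger_type, channel, template_version, recipient, now,
            "suppressed" if duplicate else "sent",
        )
        self._records.append(record)
        return record
```

Because suppressed attempts are recorded too, the log can answer both "why did I get this email?" and "why didn't I?".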

Integrations turn a training tracker from “another tool to update” into a system your team can trust. The goal is simple: keep customer accounts, learners, and completion status consistent across the tools you already use.

Start with the systems that already define customer identity and workflows:

Pick one “system of record” per entity to avoid conflicts:

Keep the surface area small and stable:

- POST /api/users (create/update by external_id or email)
- POST /api/enrollments (enroll user in course)
- POST /api/completions (set completion status + completed_at)
- GET /api/courses (for external systems to map course IDs)

Document one core webhook your customers can rely on:

- Event: course.completed
- Payload: account_id, user_id, course_id, completed_at, score (optional)

If you later add more events (enrolled, overdue, certificate issued), keep the same conventions so integrations stay predictable.
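For illustration, a course.completed payload could be assembled like this. The `event` key, the JSON encoding, and the ISO 8601 UTC timestamp format are assumptions; the remaining field names come from the list above.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def build_course_completed_event(
    account_id: str,
    user_id: str,
    course_id: str,
    completed_at: datetime,  # must be timezone-aware
    score: Optional[float] = None,
) -> str:
    """Serialize a course.completed event as a JSON string."""
    payload = {
        "event": "course.completed",
        "account_id": account_id,
        "user_id": user_id,
        "course_id": course_id,
        "completed_at": completed_at.astimezone(timezone.utc).isoformat(),
    }
    if score is not None:  # score is optional, so omit it when absent
        payload["score"] = score
    return json.dumps(payload, sort_keys=True)
```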

Training completion data looks harmless—until you connect it to real people, customer accounts, certificates, and audit history. A practical MVP should treat privacy and security as product features, not afterthoughts.

List every piece of personal data you plan to store (name, email, job title, training history, certificate IDs). If you don’t need it to prove completion or manage enrollment, don’t collect it.

Decide early whether you must support audits (for regulated customers). Audits usually require immutable timestamps (enrolled, started, completed), who made changes, and what was changed.
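An append-only audit trail can be sketched as follows. `AuditEntry` and `AuditLog` are hypothetical names, and an in-memory list stands in for a database table that a real system would protect against updates and deletes.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional, Tuple


@dataclass(frozen=True)  # frozen: an entry cannot be edited after creation
class AuditEntry:
    at: datetime
    actor_id: str          # who made the change
    action: str            # what kind of change it was
    target_id: str         # which record was changed
    before: Optional[str]
    after: Optional[str]


class AuditLog:
    """Append-only log; there is deliberately no update or delete method."""

    def __init__(self) -> None:
        self._entries: List[AuditEntry] = []

    def record(self, actor_id: str, action: str, target_id: str,
               before: Optional[str], after: Optional[str],
               at: Optional[datetime] = None) -> AuditEntry:
        entry = AuditEntry(at or datetime.now(timezone.utc),
                           actor_id, action, target_id, before, after)
        self._entries.append(entry)
        return entry

    def history(self, target_id: str) -> Tuple[AuditEntry, ...]:
        """All entries for one record, in the order they were written."""
        return tuple(e for e in self._entries if e.target_id == target_id)
```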

If learners are in the EU/UK or similar jurisdictions, you’ll likely need a clear lawful basis for processing and, in some cases, consent. Even when consent isn’t required, be transparent: provide a simple privacy notice and explain what admins can see. Consider a dedicated page like /privacy.

Use least-privilege permissions:

Treat “export all” and “delete user” as high-risk actions—gate them behind elevated roles.

Encrypt data in transit (HTTPS) and protect sessions (secure cookies, short-lived tokens, logout on password change). Add rate limits to login and invitation flows to reduce abuse.
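Rate limiting on login and invitation flows can start as a small sliding-window counter. This in-memory sketch assumes a single process and uses a hypothetical `SlidingWindowLimiter` class; a shared store would be needed once the app runs on more than one server.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class SlidingWindowLimiter:
    """Allow at most `max_attempts` per key within `window_seconds`."""

    def __init__(self, max_attempts: int, window_seconds: float) -> None:
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        self._attempts: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, key: str, now: float) -> bool:
        attempts = self._attempts[key]
        # Drop attempts that have aged out of the window.
        while attempts and now - attempts[0] >= self.window_seconds:
            attempts.popleft()
        if len(attempts) >= self.max_attempts:
            return False  # rejected attempts are not counted
        attempts.append(now)
        return True
```

The key might be an email address, an IP address, or both, and `now` would typically come from `time.monotonic()`.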

Store passwords with strong hashing (e.g., bcrypt/argon2), and never log secrets.

Plan for:

These basics prevent most “we can’t prove it” and “who changed this?” problems later.

Your MVP should optimize for speed of delivery and clarity of ownership: who manages courses, who sees progress, and how completion is recorded. The “best” tech is the one your team can support for the next 12–24 months.

Custom app is ideal when you need account-based access, tailored reporting, or a branded learner portal. It gives you control over roles, certificates, and integrations—but you own maintenance.

Low-code (e.g., internal tools + database) can work if requirements are simple and you’re mostly tracking checklists and attendance. Watch out for limits around permissions, exports, and audit history.

Existing LMS + portal is often fastest when you need quizzes, SCORM, or rich course authoring. Your “app” becomes a thin customer portal and reporting layer, pulling completion data from the LMS.

Keep the architecture boring: one web app + one API + one database is enough for an MVP.

If the main constraint is delivery speed (not long-term differentiation), a vibe-coding platform like Koder.ai can help you ship a credible first version faster. You can describe your desired flows in chat—multi-tenant customer accounts, enrollment, course progress, admin tables, CSV export—and generate a working baseline using a modern stack (React on the frontend, Go + PostgreSQL on the backend).

Two practical advantages for an MVP like this:

Plan three environments early: dev (fast iteration), staging (safe testing with realistic data), production (locked-down access, backups, monitoring). Use managed hosting (e.g., AWS/GCP/Render/Fly) to reduce ops work.

MVP (weeks): auth + customer accounts, course enrollment, progress/completion tracking, basic admin dashboard, CSV export.

Nice-to-haves (later): certificates with templates, advanced analytics, fine-grained permissions, LMS/CRM sync, automated reminder journeys, audit logs.

A training completion app succeeds when it’s boringly dependable: learners can finish, admins can verify, and everyone trusts the numbers. The fastest path is to ship a narrow MVP, prove it with real customers, then expand.

Pick the minimum set of screens and capabilities that deliver “proof of completion” end to end:

Decide completion rules now (e.g., “all modules viewed” vs “quiz passed”) and write them down as acceptance criteria.

Keep a single checklist the whole team shares:

If you’re using Koder.ai to accelerate delivery, this checklist also translates cleanly into a “spec in chat” that you can iterate on (and validate quickly with stakeholders).

Run realistic tests that mirror how customers will use it:

Pilot with one customer account for 2–3 weeks. Track time-to-first-completion, drop-off points, and admin questions. Use feedback to prioritize the next iteration: certificates, reminders, integrations, and richer analytics.

If you want help scoping an MVP and shipping it quickly, reach out via /contact.

Start with the operational question: who completed which training, when, and with what result. Your MVP should reliably capture:

- started_at, last_activity_at, completed_at

If those fields are trustworthy, dashboards, exports, and compliance conversations become straightforward.

Define completion rules explicitly and store them (and their version) rather than inferring completion from clicks.

Common rule types:

At the course level, decide whether completion requires all required items or N of M, and store the rule version so old completions remain auditable after content changes.

In most B2B training trackers, keep tenant boundaries simple:

Then layer roles on top:

Minimum set that covers most workflows:

Always record enrolled_by, enrolled_at, and organization_id on the enrollment to avoid “how did they get in?” ambiguity later.

Magic links reduce password friction and support load, but you still need:

Passwords are fine if your customers expect them, but budget time for resets, lockouts, and security hardening. A common path is magic link now, add SSO (SAML/OIDC) when larger customers require it.

Make “what’s next” obvious and “finish” predictable:

- Confirm completion immediately, with a link to certificates or history (e.g., /certificates)

If learners can’t tell what remains, they stall, even if your tracking is perfect.

Include a table that answers who is stuck and why:

Then add actions where the admin is looking:

This turns the dashboard into a daily tool instead of a once-a-quarter report.

Track attempts as first-class data rather than overwriting fields.

Practical approach:

This supports honest reporting (“they passed on attempt 3”) and reduces disputes.

Treat content changes as a versioning problem.

Options:

Store course_version_id on enrollments/completions so reports don’t change retroactively when you update requirements.

Prioritize integrations that anchor identity and workflows:

Keep the API minimal:

- POST /api/users
- POST /api/enrollments
- POST /api/completions
- GET /api/courses

Add one webhook customers can rely on (e.g., course.completed) with signing, retries, and idempotency to keep downstream systems consistent.

This prevents data leakage and makes reporting reliable.
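Signing and idempotency can be sketched from the receiving side. The assumptions here are HMAC-SHA256 over the raw request body with a shared secret, and an `event_id` field added to the payload for deduplication. Neither is prescribed by the article, and the class name is hypothetical.

```python
import hashlib
import hmac
import json
from typing import Dict, List, Set


def sign_payload(secret: bytes, body: bytes) -> str:
    """Hex HMAC-SHA256 signature the sender attaches to each delivery."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def verify_signature(secret: bytes, body: bytes, signature: str) -> bool:
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(sign_payload(secret, body), signature)


class WebhookReceiver:
    """Processes each event once, even if the sender retries a delivery."""

    def __init__(self, secret: bytes) -> None:
        self.secret = secret
        self.seen_event_ids: Set[str] = set()
        self.completions: List[Dict] = []

    def handle(self, body: bytes, signature: str) -> str:
        if not verify_signature(self.secret, body, signature):
            return "rejected"
        event = json.loads(body)
        if event["event_id"] in self.seen_event_ids:
            return "duplicate"  # a retry of something already processed
        self.seen_event_ids.add(event["event_id"])
        self.completions.append(event)
        return "processed"
```

With this shape, retries are safe: a sender can redeliver until it gets a success response without creating duplicate completions downstream.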