Nov 24, 2025·8 min

From Figma to Production Code: How AI Bridges Design Gaps

Learn how AI turns Figma designs into production-ready code by mapping components, tokens, and specs—reducing rework and speeding up releases.

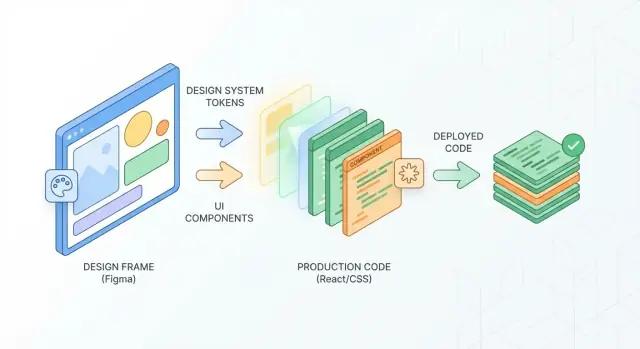

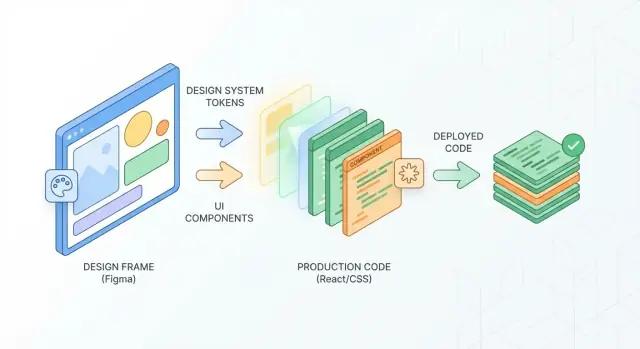

“Figma to production” is often treated as “export some CSS and ship.” In reality, production-ready UI includes responsive behavior, interactive states, real data, accessibility, performance constraints, and integration with a design system. A design can look perfect in a static frame while still leaving dozens of implementation decisions unanswered.

A front-end build has to translate design intent into reusable components, tokens (colors, type, spacing), layout rules across breakpoints, and edge cases like long text, empty states, loading, and errors. It also needs consistent interaction details (hover, focus, pressed), keyboard support, and predictable behavior across browsers.

The gap isn’t just about tooling; it’s about missing or ambiguous information, such as unspecified states, breakpoints, and edge cases.

Every unresolved design decision becomes a conversation, a PR comment thread, or—worse—rework after QA. That rework often introduces bugs (layout regressions, missing focus rings) and makes the UI feel inconsistent across screens.

AI reduces the repetitive parts of bridging the gap: mapping frames to existing UI components, flagging token inconsistencies, checking spacing and type against rules, and generating clearer handoff docs (props, states, acceptance criteria). It doesn’t replace judgment, but it can catch mismatches early and keep implementation closer to design intent.

In practice, the biggest gains show up when AI is connected to your real production constraints—your component APIs, tokens, and conventions—so it can generate output that’s compatible with how your team actually ships UI.

“Production code” is less about perfectly matching pixels and more about shipping UI that your team can safely maintain. When AI helps convert Figma to code, clarity on the target prevents a lot of frustration.

A screen-level export can look right and still be a dead end. Production work aims for reusable UI components (buttons, inputs, cards, modals) that can be composed into many screens.

If a generated layout can’t be expressed as existing components (or a small number of new ones), it’s not production-ready—it’s a prototype snapshot.

Define your bar in terms everyone can verify.

AI can accelerate implementation, but it can’t guess your team’s conventions unless you state them (or provide examples).

It doesn’t mean a pixel-perfect replica of every frame.

A small, intentional deviation that preserves consistency and maintainability is often a better outcome than a perfect replica that increases long-term cost.

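AI performs best when Figma is structured like a system, with consistent, descriptive names for components and variants (e.g., `Button/Primary`, `Icon/Close`). Check that structure before handing a file off for AI-assisted frontend implementation.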

AI doesn’t “see” a Figma file the way a person does. It reads structure: frames, groups, layers, constraints, text styles, and the relationships between them. The goal is to translate those signals into something a developer can implement reliably—often as reusable components plus clear layout rules.

A strong AI pipeline starts by finding repetition and intent. If multiple frames share the same hierarchy (icon + label, same padding, same corner radius), AI can flag them as the same pattern—even when names are inconsistent.

It also looks for common UI signatures, such as the recurring structures of buttons, inputs, cards, and modals.

The better your design system alignment, the more confidently AI can classify these elements.

Interpreting a “button” is useful; mapping it to your Button component is where the real time savings happen. AI typically matches by comparing properties (size, typography, color token usage, state variants) and then suggests a component name and props.

For example, a primary button might become:

- Component: `Button`
- Props: `variant="primary"`, `size="md"`, `iconLeft`, `disabled`

When AI can map to existing components, you avoid one-off UI code and keep the product consistent.
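To make property-based matching concrete, here is a minimal sketch. It assumes a simplified, hypothetical `FigmaNode` shape and a hand-written list of `ComponentSignature` entries; neither is a real Figma or component-library API. Each candidate is scored by the fraction of compared properties that agree, and the best match comes back as a component name plus suggested props.

```typescript
// Hypothetical, simplified view of a Figma node's visual properties.
interface FigmaNode {
  height: number;
  fontStyle: string; // text style name, e.g. "label/md"
  fillToken: string; // color token applied to the fill
  hasIcon: boolean;
}

// Hypothetical description of how a code component looks for one prop combination.
interface ComponentSignature {
  component: string;
  props: Record<string, string | boolean>;
  height: number;
  fontStyle: string;
  fillToken: string;
}

const signatures: ComponentSignature[] = [
  { component: "Button", props: { variant: "primary", size: "md" }, height: 40, fontStyle: "label/md", fillToken: "color.primary" },
  { component: "Button", props: { variant: "secondary", size: "md" }, height: 40, fontStyle: "label/md", fillToken: "color.surface" },
  { component: "Button", props: { variant: "primary", size: "sm" }, height: 32, fontStyle: "label/sm", fillToken: "color.primary" },
];

interface Match {
  component: string;
  props: Record<string, string | boolean>;
  score: number; // fraction of compared properties that agree (0..1)
}

function matchComponent(node: FigmaNode, candidates: ComponentSignature[]): Match | undefined {
  let best: Match | undefined;
  for (const sig of candidates) {
    const checks = [
      node.height === sig.height,
      node.fontStyle === sig.fontStyle,
      node.fillToken === sig.fillToken,
    ];
    const score = checks.filter(Boolean).length / checks.length;
    if (!best || score > best.score) {
      // Copy props so the signature table is never mutated.
      const props: Record<string, string | boolean> = { ...sig.props };
      if (node.hasIcon) props.iconLeft = true;
      best = { component: sig.component, props, score };
    }
  }
  return best;
}

const match = matchComponent(
  { height: 40, fontStyle: "label/md", fillToken: "color.primary", hasIcon: true },
  signatures,
);
const second = matchComponent(
  { height: 40, fontStyle: "label/md", fillToken: "color.surface", hasIcon: false },
  signatures,
);
```

A real pipeline would compare many more properties (radius, padding, states) and surface low scores for human review instead of accepting the top match blindly.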

Figma already contains layout intent through Auto Layout, constraints, and spacing. AI uses those signals to infer layout rules in code, such as stacking direction, gaps, padding, and how elements resize.

If constraints are missing, AI may guess from visual proximity—helpful, but less predictable.
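As an illustration of that inference, the sketch below converts a simplified Auto Layout description into flexbox CSS. The `AutoLayout` type is a hypothetical reduction loosely modeled on the Figma plugin API's `layoutMode`, `itemSpacing`, padding, and alignment properties; real files carry more options (wrapping, sizing modes, absolutely positioned children) that this ignores.

```typescript
// Hypothetical, simplified Auto Layout description.
interface AutoLayout {
  layoutMode: "HORIZONTAL" | "VERTICAL";
  itemSpacing: number;
  paddingTop: number;
  paddingRight: number;
  paddingBottom: number;
  paddingLeft: number;
  primaryAxisAlignItems: "MIN" | "MAX" | "CENTER" | "SPACE_BETWEEN";
  counterAxisAlignItems: "MIN" | "MAX" | "CENTER";
}

// Main-axis alignment maps to justify-content.
const justifyMap = {
  MIN: "flex-start",
  MAX: "flex-end",
  CENTER: "center",
  SPACE_BETWEEN: "space-between",
} as const;

// Cross-axis alignment maps to align-items.
const alignMap = {
  MIN: "flex-start",
  MAX: "flex-end",
  CENTER: "center",
} as const;

// Translate layout intent into CSS declarations. Pixel values are kept as-is here;
// a production pipeline would map them to spacing tokens.
function autoLayoutToCss(layout: AutoLayout): Record<string, string> {
  return {
    display: "flex",
    "flex-direction": layout.layoutMode === "HORIZONTAL" ? "row" : "column",
    gap: `${layout.itemSpacing}px`,
    padding: `${layout.paddingTop}px ${layout.paddingRight}px ${layout.paddingBottom}px ${layout.paddingLeft}px`,
    "justify-content": justifyMap[layout.primaryAxisAlignItems],
    "align-items": alignMap[layout.counterAxisAlignItems],
  };
}

const css = autoLayoutToCss({
  layoutMode: "HORIZONTAL",
  itemSpacing: 8,
  paddingTop: 12,
  paddingRight: 16,
  paddingBottom: 12,
  paddingLeft: 16,
  primaryAxisAlignItems: "CENTER",
  counterAxisAlignItems: "CENTER",
});
```

When a frame has no Auto Layout, there is nothing like this to translate, which is why the output becomes less predictable.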

Beyond code suggestions, AI can produce developer-friendly output: measurements, typography details, color references, component usage notes, and edge cases (empty states, long text wrapping). Think of it as turning a frame into a checklist a developer can actually build against—without manually writing specs for every screen.

AI can generate UI code faster when your Figma file is predictable. The goal isn’t to “design for the machine” at the expense of creativity—it’s to remove ambiguity so automation can make safe assumptions.

Most AI tools infer intent from layer names, hierarchy, and repeated patterns. If a button is called Rectangle 12 inside Frame 8, the tool has to guess whether it’s a button, a card, or a decorative shape. Clear structure turns guessing into matching.

A good rule: if a developer would ask “what is this?” the AI will too.
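A pre-flight check for unclear names can start by flagging auto-generated defaults like `Rectangle 12` or `Frame 8`. This sketch assumes a hypothetical flat list of layer records; the regex and its list of default prefixes are illustrative, not exhaustive.

```typescript
interface Layer {
  id: string;
  name: string;
}

// Auto-generated names look like "<Type> <number>"; the type list is illustrative.
const DEFAULT_NAME = /^(Rectangle|Frame|Group|Ellipse|Vector|Line)\s+\d+$/;

// Return every layer whose name still looks like a tool default.
function findUnnamedLayers(layers: Layer[]): Layer[] {
  return layers.filter((layer) => DEFAULT_NAME.test(layer.name.trim()));
}

const flagged = findUnnamedLayers([
  { id: "1:2", name: "Rectangle 12" },
  { id: "1:3", name: "Frame 8" },
  { id: "1:4", name: "Button/Primary" },
  { id: "1:5", name: "icon/chevron-right" },
]);
```

Here `flagged` contains the first two layers, which are exactly the ones a developer would ask "what is this?" about.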

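Use a consistent layout:

- Pages grouped by product surface (e.g., Web, iOS, Marketing)
- Sections grouped by flow (e.g., Checkout, Onboarding)
- Frames named by flow and step (e.g., Checkout — Payment)

For reusable UI, rely on components + variants:

- Clear component names (e.g., `Button`, `Input`, `Card`)
- Variant properties instead of duplicated components (e.g., `size=md`, `state=hover`, `tone=primary`)
- No ambiguous names like `Blue Button 2`

Flattening and masking are fine, but “mystery layers” aren’t. Delete hidden leftovers, unused groups, and duplicated shapes. Prefer Auto Layout over manual spacing, and avoid per-instance overrides that silently change padding, corner radius, or font styles.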

If something must be unique, label it clearly (e.g., Promo banner (one-off)), so it doesn’t get mistaken for a system component.

For icons, use a single source format (SVG preferred) and consistent naming (icon/chevron-right). Don’t outline text inside icons.

For images, mark intent: Hero image (cropped), Avatar (circle mask). Provide aspect ratios and safe-crop guidance when necessary.

For complex illustrations, treat them as assets: export once, store versions, and reference them consistently so AI doesn’t attempt to rebuild intricate vector art as UI shapes.

Design tokens are the named, reusable decisions behind a UI—so designers and developers can talk about the same thing without arguing over pixels.

A token is a label plus a value. Instead of “use #0B5FFF,” you use color.primary. Instead of “14px with 20px line height,” you use font.body.sm. Common token families include color, typography, and spacing.

The win isn’t just consistency—it’s speed. When a token changes, the system updates everywhere.
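In code, a token set is often a nested object that gets flattened into CSS custom properties. This sketch reuses the token names from the text (`color.primary`, `font.body.sm`); the `spacing` entries, the `line-height` key, and the `toCssVariables` helper are hypothetical, and many teams generate this output with a token build tool rather than by hand.

```typescript
// Recursive token tree: leaves are values, branches are groups.
type TokenTree = { [key: string]: string | TokenTree };

// Illustrative values; only color.primary and the 14px/20px body size come from the text.
const tokens: TokenTree = {
  color: { primary: "#0B5FFF" },
  font: { body: { sm: { size: "14px", "line-height": "20px" } } },
  spacing: { sm: "8px", md: "16px" },
};

// Flatten nested tokens into CSS custom properties, e.g. color.primary -> --color-primary.
function toCssVariables(tree: TokenTree, prefix: string[] = []): Record<string, string> {
  const out: Record<string, string> = {};
  for (const [key, value] of Object.entries(tree)) {
    const path = [...prefix, key];
    if (typeof value === "string") {
      out[`--${path.join("-")}`] = value;
    } else {
      Object.assign(out, toCssVariables(value, path));
    }
  }
  return out;
}

const cssVars = toCssVariables(tokens);
```

Components then reference `var(--color-primary)` instead of the hex value, so changing the token updates every usage at once.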

Figma files often contain a mix of intentional styles and one-off values created during iteration. AI tools can scan frames and components, then propose token candidates by clustering similar values. For example, they can detect that #0B5FFF, #0C5EFF, and #0B60FF are likely the same “primary blue” and recommend a single canonical value.
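A minimal sketch of the clustering step, assuming colors arrive as six-digit hex strings and that a Euclidean distance of 3 or less in RGB space (an arbitrary threshold) means "the same color." Production tools often measure distance in a perceptual color space such as CIELAB; RGB keeps this example short.

```typescript
type RGB = [number, number, number];

function hexToRgb(hex: string): RGB {
  const n = parseInt(hex.replace("#", ""), 16);
  return [(n >> 16) & 0xff, (n >> 8) & 0xff, n & 0xff];
}

function distance(a: RGB, b: RGB): number {
  return Math.hypot(a[0] - b[0], a[1] - b[1], a[2] - b[2]);
}

// Greedy clustering: each color joins the first cluster whose first-seen value
// is within the threshold; otherwise it starts a new cluster.
function clusterColors(hexes: string[], threshold = 3): string[][] {
  const clusters: string[][] = [];
  for (const hex of hexes) {
    const rgb = hexToRgb(hex);
    const home = clusters.find((c) => distance(hexToRgb(c[0]), rgb) <= threshold);
    if (home) home.push(hex);
    else clusters.push([hex]);
  }
  return clusters;
}

const clusters = clusterColors(["#0B5FFF", "#0C5EFF", "#0B60FF", "#D92D20"]);
```

The three blues land in one cluster and the red in another. Here the first-seen value is the candidate canonical color; picking the most frequently used value is an equally reasonable rule, and a person still approves the final name.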

It can also infer meaning from usage: the color used for links across multiple screens is probably “link,” while the one used only in error banners is likely “danger.” You still approve the naming, but AI reduces the tedious audit work.

Small inconsistencies are the fastest way to break a design system. A practical rule: if two values are visually indistinguishable at normal zoom, they probably shouldn’t both exist. AI can flag near-duplicates and show where they appear, so teams can consolidate without guesswork.

Tokens only help if they stay aligned. Treat them as a shared source of truth: update tokens intentionally (with a brief changelog), then propagate to both Figma and code. Some teams review token changes the same way they review UI components—lightweight, but consistent.

If you already have a system, link your token updates to the same workflow as component updates (see /blog/component-mapping-and-reuse-at-scale).

Scaling UI delivery isn’t mainly a “convert Figma to code” problem—it’s a “convert the right components the same way every time” problem. AI helps most when it can reliably map what’s in the design file to what already exists in your codebase, including names, variants, and behavior.

Start by giving AI stable anchors: consistent component names, clear variant properties, and a predictable library structure. When those anchors exist, AI can propose a mapping like:

- Figma: `Button` with properties `size`, `intent`, `state`
- Code: `<Button size="sm" variant="primary" disabled />`

This is where design tokens and component APIs meet. If your code component expects `variant="danger"` but Figma uses `intent="error"`, AI can flag the mismatch and suggest a translation layer (or a naming update) so mapping doesn’t become guesswork.
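A translation layer can be a small, explicit lookup table. This sketch assumes the Figma property is named `intent` and the code prop `variant`, matching the `intent="error"` / `variant="danger"` example; the other value pairs are hypothetical. Unknown values throw instead of falling back silently, so a new Figma value shows up as a review item rather than a guess.

```typescript
// Figma variant values (as designers name them) -> code prop values.
// Only "error" -> "danger" comes from the text; the other pairs are illustrative.
const intentToVariant = {
  primary: "primary",
  secondary: "secondary",
  error: "danger",
} as const;

type FigmaIntent = keyof typeof intentToVariant;
type ButtonVariant = (typeof intentToVariant)[FigmaIntent];

function toVariant(intent: string): ButtonVariant {
  // Own-property check avoids matching inherited keys such as "toString".
  if (!Object.prototype.hasOwnProperty.call(intentToVariant, intent)) {
    // Fail loudly so unmapped values are reviewed instead of guessed.
    throw new Error(`No code variant mapped for Figma intent "${intent}"`);
  }
  return intentToVariant[intent as FigmaIntent];
}
```

Keeping the table in one file makes the naming debt visible; if most entries map to themselves, renaming in Figma or code may be cheaper than maintaining the layer.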

At scale, the most expensive bugs are “almost right” components: the default state looks correct, but edge states are missing or inconsistent. AI can scan your library and highlight gaps such as:

The useful output isn’t just a warning—it’s a concrete to-do: “Add state=loading to Button variants and document its spacing + spinner alignment.”
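Finding missing states can be framed as a set difference between the states a component must have and the variants that exist. The `REQUIRED_STATES` list and the `state=` naming convention below are assumptions for illustration; the output is the kind of concrete to-do the text describes.

```typescript
// Assumed team rule: every interactive component needs these states.
const REQUIRED_STATES = ["default", "hover", "focus", "pressed", "disabled", "loading"];

interface ComponentVariants {
  name: string;
  variants: string[]; // variant names like "state=hover, size=md"
}

// Collect the value of the "state" property from each variant name.
function statesOf(component: ComponentVariants): Set<string> {
  const states = new Set<string>();
  for (const v of component.variants) {
    const match = /state=([\w-]+)/.exec(v);
    if (match) states.add(match[1]);
  }
  return states;
}

// Turn each missing state into a reviewable to-do.
function missingStateTodos(component: ComponentVariants): string[] {
  const present = statesOf(component);
  return REQUIRED_STATES.filter((s) => !present.has(s)).map(
    (s) => `Add state=${s} to ${component.name} variants.`,
  );
}

const todos = missingStateTodos({
  name: "Button",
  variants: [
    "state=default, size=md",
    "state=hover, size=md",
    "state=focus, size=md",
    "state=pressed, size=md",
    "state=disabled, size=md",
  ],
});
```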

AI can detect near-duplicates by comparing structure (padding, typography, border radius) and recommend reuse: “This ‘Primary CTA’ is 95% identical to Button/primary/lg—use the existing component and override only the icon placement.” That keeps your UI consistent and prevents a slow drift into one-off styles.

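AI can also help enforce a written rule for when to reuse an existing component, when to add a variant, and when a new component is justified.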

If you document these rules once, AI can apply them repeatedly—turning component decisions from debates into consistent, reviewable recommendations.

Good handoff documentation isn’t about writing more—it’s about writing the right details in a format developers can act on quickly. AI can help by turning design intent into clear tasks, acceptance criteria, and implementation notes that fit naturally into your existing workflow.

Instead of copying measurements and behavior notes manually, use AI to generate task-ready text from a selected frame or component, including draft acceptance criteria that you then refine.
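A minimal sketch of drafting acceptance criteria from a structured component spec. The `ComponentSpec` shape and the sentence templates are hypothetical; in practice the spec would come from whatever your tooling extracts from Figma, and a person still edits the result.

```typescript
// Hypothetical structured spec extracted from a Figma component.
interface ComponentSpec {
  name: string;
  states: string[];
  tokens: Record<string, string>; // CSS property -> token name
  minWidth?: number;
}

// Turn the spec into checkable criteria for a ticket or PR template.
function draftAcceptanceCriteria(spec: ComponentSpec): string[] {
  const criteria: string[] = [];
  for (const state of spec.states) {
    criteria.push(`${spec.name} renders the "${state}" state as designed.`);
  }
  for (const [prop, token] of Object.entries(spec.tokens)) {
    criteria.push(`${prop} uses the ${token} token (no hardcoded value).`);
  }
  if (spec.minWidth !== undefined) {
    criteria.push(`${spec.name} does not shrink below ${spec.minWidth}px.`);
  }
  return criteria;
}

const criteria = draftAcceptanceCriteria({
  name: "Button",
  states: ["default", "hover", "focus", "disabled"],
  tokens: { background: "color.primary", font: "font.body.sm" },
  minWidth: 64,
});
```

Each line is phrased so a reviewer can check it as pass or fail, which is what keeps it useful in a PR template.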

AI is most useful when it consistently extracts the “small” rules that cause the biggest mismatches, such as spacing, typography, and interaction states.

Have AI summarize these as concise implementation notes attached to the component or frame—short enough to scan, specific enough to code.

Documentation only works if people can find it.

The goal: fewer clarification threads, faster estimates, and less “almost matches the design” UI.

Accessibility shouldn’t be a separate “compliance sprint” after UI is built. When you use AI alongside Figma and your component library, you can turn accessibility and core UX rules into guardrails that run continuously—while designs are still changing and before code ships.

AI works well as a fast reviewer that compares what’s in Figma against known standards (WCAG basics, platform conventions, your team’s patterns). Practical checks include color contrast, visible focus states, and accessible names for interactive elements.

These checks are most effective when AI understands your design system. If a “TextField” component is mapped to a real input component in code, the AI can look for required states (label, help text, error state, disabled, focus) and warn when a design uses a “custom input look” without the supporting semantics.
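Contrast is the easiest of these checks to run deterministically once colors resolve to concrete values. The sketch below implements the WCAG 2.x contrast ratio, (L1 + 0.05) / (L2 + 0.05) over relative luminance, and the AA thresholds (4.5:1 for normal text, 3:1 for large text). It assumes six-digit hex input; the function names are illustrative.

```typescript
// Linearize one sRGB channel (0-255) per the WCAG 2.x relative luminance definition.
function channel(c8: number): number {
  const c = c8 / 255;
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

function luminance(hex: string): number {
  const n = parseInt(hex.replace("#", ""), 16);
  const r = (n >> 16) & 0xff;
  const g = (n >> 8) & 0xff;
  const b = n & 0xff;
  return 0.2126 * channel(r) + 0.7152 * channel(g) + 0.0722 * channel(b);
}

// Ratio of the lighter luminance to the darker one, each offset by 0.05.
function contrastRatio(fg: string, bg: string): number {
  const [hi, lo] = [luminance(fg), luminance(bg)].sort((a, b) => b - a);
  return (hi + 0.05) / (lo + 0.05);
}

// WCAG 2.x AA: 4.5:1 for normal text, 3:1 for large text.
function passesAA(fg: string, bg: string, largeText = false): boolean {
  return contrastRatio(fg, bg) >= (largeText ? 3 : 4.5);
}
```

For example, `#777777` on white comes out just under 4.5:1 (it fails for body text but passes for large text), while `#767676` just clears it. Near-threshold cases like these are exactly what a visual review misses.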

The goal isn’t a long report—it’s a short list of changes designers and developers can act on. Good AI tooling will attach each issue to a concrete node in Figma (frame, component instance, or variant) and suggest the smallest viable fix, such as:

- “Use the `TextField/Error` variant and include an error message placeholder.”

Add a lightweight gate: designs can’t be marked “ready for implementation” until key accessibility/UX checks pass, and PRs can’t be merged if the implemented UI regresses. When guardrails run early and often, accessibility becomes a routine quality signal, not a last-minute scramble.

AI can speed up implementation, but it also makes it easier to ship small inconsistencies quickly. The fix is to treat “design fidelity” like any other quality goal: measurable, automated, and reviewed at the right level.

Visual diffing is the most direct way to spot drift. After a component or page is implemented, generate screenshots in a controlled environment (same viewport sizes, fonts loaded, deterministic data) and compare them to a baseline.
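At its core, a visual diff compares two screenshots pixel by pixel. This sketch assumes both images are already decoded into same-sized RGBA byte arrays (capture and decoding are out of scope) and treats a pixel as changed only when some channel differs by more than a tolerance, which absorbs minor anti-aliasing noise. Dedicated libraries such as pixelmatch handle this more robustly.

```typescript
interface RgbaImage {
  width: number;
  height: number;
  data: Uint8Array; // width * height * 4 bytes, RGBA order
}

// Fraction of pixels whose largest channel difference exceeds the tolerance.
function diffRatio(a: RgbaImage, b: RgbaImage, tolerance = 8): number {
  if (a.width !== b.width || a.height !== b.height) {
    throw new Error("Screenshots must have identical dimensions");
  }
  const pixels = a.width * a.height;
  let changed = 0;
  for (let i = 0; i < pixels * 4; i += 4) {
    let maxDelta = 0;
    for (let c = 0; c < 4; c++) {
      maxDelta = Math.max(maxDelta, Math.abs(a.data[i + c] - b.data[i + c]));
    }
    if (maxDelta > tolerance) changed++;
  }
  return changed / pixels;
}

// Two 2x1 images: the first pixel differs slightly (within tolerance), the second strongly.
const baseline: RgbaImage = { width: 2, height: 1, data: new Uint8Array([255, 255, 255, 255, 0, 0, 0, 255]) };
const current: RgbaImage = { width: 2, height: 1, data: new Uint8Array([250, 252, 255, 255, 200, 0, 0, 255]) };
const ratio = diffRatio(baseline, current);
```

Here `ratio` is 0.5. A CI job could fail when the ratio exceeds an agreed threshold, which is where the tolerances your team records come into play.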

AI can help by reviewing the resulting diffs and pointing to the components that most likely caused them.

Most “looks slightly off” bugs come from a few recurring sources: spacing scales, font styles, and color values. Rather than waiting for a full-page review, validate these at the smallest unit: individual components and the token values they use.

When AI is connected to your design tokens, it can flag mismatches as the code is written, not after QA finds them.
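For spacing, "connected to your design tokens" can start as a simple scale lookup. This sketch assumes a hypothetical 4px-based spacing scale with made-up token names and reports each off-scale value along with the nearest token, e.g. a 13px gap that should probably be 12px.

```typescript
// Hypothetical spacing scale (token name -> px).
const spacingScale: Record<string, number> = {
  "space.1": 4,
  "space.2": 8,
  "space.3": 12,
  "space.4": 16,
  "space.6": 24,
  "space.8": 32,
};

interface SpacingIssue {
  value: number;
  nearestToken: string;
  nearestValue: number;
}

function checkSpacing(values: number[]): SpacingIssue[] {
  const entries = Object.entries(spacingScale);
  const issues: SpacingIssue[] = [];
  for (const value of values) {
    if (entries.some(([, px]) => px === value)) continue; // on-scale, nothing to report
    // Pick the token with the smallest absolute difference; ties keep the smaller token.
    let [nearestToken, nearestValue] = entries[0];
    for (const [token, px] of entries) {
      if (Math.abs(px - value) < Math.abs(nearestValue - value)) {
        nearestToken = token;
        nearestValue = px;
      }
    }
    issues.push({ value, nearestToken, nearestValue });
  }
  return issues;
}

const issues = checkSpacing([8, 13, 16, 30]);
```

Here `13` is reported against `space.3` (12px) and `30` against `space.8` (32px); `8` and `16` pass.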

Page-level QA is slow and noisy: one small component discrepancy can ripple across multiple screens. Component-level checks make fidelity scalable—fix once, benefit everywhere.

A useful pattern is “component snapshots + contract tests”: snapshots catch visual drift, while small checks confirm props, states, and token usage stay consistent.

Not every mismatch is a bug. Platform constraints (font rendering, native controls, responsive reflow, performance tradeoffs) can create legitimate differences. Agree on tolerances upfront—like sub-pixel rounding or font anti-aliasing—and record exceptions in a short decision log linked from your handoff docs (e.g., /docs/ui-qa). This keeps reviews focused on real regressions instead of endless pixel debates.

AI is most useful when it’s treated like a teammate with a narrow job, not a replacement for design judgment or engineering ownership. The patterns below help teams get speed without sacrificing consistency.

Before dev, use AI to pre-flight the file: identify missing states, inconsistent spacing, unlabeled components, and token violations. This is the quickest win because it prevents rework.

During dev, use AI as an implementation assistant: generate first-pass UI code from selected frames, suggest component matches from your library, and draft CSS/token mappings. Developers should still wire real data, routing, and state.

After dev, use AI to validate: compare screenshots to Figma, flag visual diffs, check accessible names/contrast, and confirm token usage. Treat this as an automated reviewer that finds “paper cuts” early.

The most reliable setup is designer + developer + reviewer, with each role owning a clear decision.

AI supports each role, but doesn’t replace the “final say” responsibility.

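Define lightweight approval rules, such as which checks a design must pass before it is marked ready for implementation and what a PR needs before it can merge.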

Write these rules down once and link them in your team docs (e.g., /design-system/governance).

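Drift happens when the model invents spacing, colors, or components that are “close enough.” Reduce it by restricting output to your existing components and tokens, and by reviewing anything new it introduces.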

When AI can only build with your system’s Lego bricks, output stays consistent—even at speed.
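One way to keep generated output on your system's bricks is to flag raw values before review. This sketch scans generated source text for hex color literals and suggests a token when the value is known. The `knownColors` map and the regex are assumptions for illustration; a real setup would more likely rely on a linter rule (for example, Stylelint's `color-no-hex`) than on a hand-written scan.

```typescript
// Assumed mapping from raw values (lowercased) to token names.
const knownColors: Record<string, string> = {
  "#0b5fff": "color.primary",
};

interface RawColor {
  line: number;
  value: string;
  suggestion?: string;
}

function findRawColors(source: string): RawColor[] {
  const results: RawColor[] = [];
  source.split("\n").forEach((text, index) => {
    // Fresh global regex per line: #rrggbb or #rgb followed by a word boundary.
    const hex = /#(?:[0-9a-fA-F]{6}|[0-9a-fA-F]{3})\b/g;
    let m: RegExpExecArray | null;
    while ((m = hex.exec(text)) !== null) {
      results.push({ line: index + 1, value: m[0], suggestion: knownColors[m[0].toLowerCase()] });
    }
  });
  return results;
}

const generated = [
  "const Card = () => (",
  '  <div style={{ background: "#0B5FFF", color: "#fff" }} />',
  ");",
].join("\n");

const raw = findRawColors(generated);
```

Both literals on line 2 are reported; `#0B5FFF` comes with a `color.primary` suggestion, while `#fff` has none and needs a human decision.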

Rolling out AI-assisted “Figma to production code” works best when you treat it like any other process change: start small, measure, then expand.

Choose one feature area with clear UI boundaries (for example: settings page, onboarding step, or a single dashboard card). Avoid core navigation or heavily stateful flows for the first run.

Define success metrics upfront, such as fewer clarification threads, faster estimates, and less rework after QA.

Before generating anything, agree on a small baseline of design tokens and core components.

The goal isn’t completeness—it’s consistency. Even a dozen well-defined components can prevent most “almost right” output.

Treat AI output as a draft. In each pilot PR, capture what the AI got right and what needed manual fixes.

Turn these into a short checklist that lives next to your design handoff docs, and update it weekly.

Once the pilot is stable, expand by feature teams—not by “turning it on everywhere.” Provide a template repo or “golden path” example, and a single place to track learnings (a page in /blog or your internal wiki). If you’re evaluating tools, keep procurement friction low with a clear comparison and budget reference (/pricing).

If you want to test this approach without rebuilding your pipeline first, platforms like Koder.ai can help teams go from chat to working web apps quickly—especially when you standardize on a design system and expect output to align with real components and tokens. Because Koder.ai supports building React frontends with Go + PostgreSQL backends (and Flutter for mobile), it’s a practical environment for validating “design-to-production” workflows end-to-end, including iteration, deployment, and source code export.

Audit one Figma file for token usage, align naming with your code variables, and map 5–10 core components end-to-end. That’s enough to start seeing reliable gains.

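Production-ready UI includes more than visual styles: responsive behavior, interactive states, real data, accessibility, performance constraints, and integration with a design system.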

A static frame can’t encode all of those decisions by itself.

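Pixel-perfect output isn’t the goal, because “production-ready” is primarily about maintainability and reuse. A team-friendly definition usually means building screens from reusable components and tokens rather than one-off, hardcoded styles.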

Pixel-perfect output that duplicates styles and hardcodes values often increases long-term cost.

To define the bar, start with a checklist your team can verify.

If you can’t measure it, you’ll debate it in PRs.

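AI helps most with repetitive and review-heavy work, such as mapping frames to existing components, flagging token inconsistencies, checking spacing and type against rules, and drafting handoff docs.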

It’s a force multiplier for consistency, not a replacement for engineering decisions.

AI reads structure and relationships, not “intent” the way people do. It relies on layer names, hierarchy, Auto Layout, constraints, text styles, and repeated patterns.

If those signals are weak (random names, detached instances, manual spacing), AI has to guess—and output becomes less predictable.

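When preparing a file, prioritize predictability: descriptive layer names, components with variants, Auto Layout instead of manual spacing, and no hidden leftover layers.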

This turns generation from “best guess” into “reliable mapping.”

Token drift is when “close enough” values sneak in (e.g., 12px vs 13px gaps, near-identical blues). It matters because small inconsistencies are the fastest way to break a design system.

AI can flag near-duplicates and show where they appear, but teams still need a consolidation decision.

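A practical split: reuse an existing component (adding a variant if needed) when a design is a near-duplicate, and create a new component only when the pattern is genuinely new.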

AI can suggest which path fits, but you should enforce a written rule so decisions stay consistent.

Use AI to produce task-ready text tied to a frame or component: acceptance criteria, implementation notes, props, and states.

Paste the output into tickets and PR templates so reviewers check the same requirements every time.

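Treat accessibility as a continuous guardrail, not a late audit: run checks while designs are still changing, and block merges when the implemented UI regresses.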

Keep findings actionable: each issue should point to a specific component/frame and a smallest viable fix.