Oct 19, 2025 · 8 min read

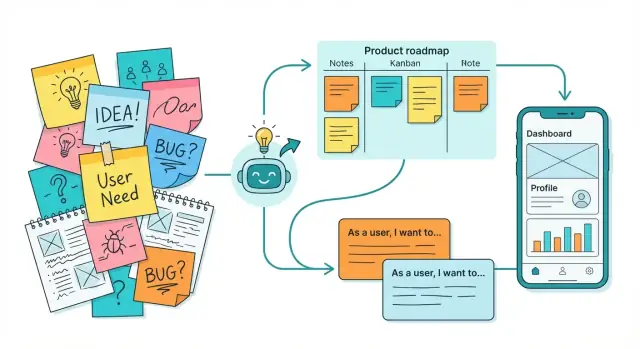

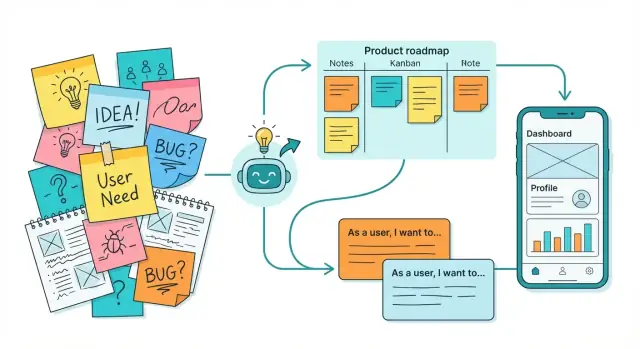

From Messy Ideas to Shippable Products with AI Tools

See how AI turns rough notes into clear problem statements, user insights, prioritized features, and ready-to-build specs, roadmaps, and prototypes.

Most product work doesn’t start with a neat brief. It starts as “messy ideas”: a Notion page full of half-sentences, Slack threads where three different problems get mixed together, meeting notes with action items but no owner, screenshots of competitor features, voice memos recorded on the way home, and a backlog of “quick wins” that no one can explain anymore.

The mess isn’t the problem. The stall happens when the mess becomes the plan.

When ideas stay unstructured, teams spend time re-deciding the same things: what you’re building, who it’s for, what success looks like, and what you’re not doing. That leads to slow cycles, vague tickets, misaligned stakeholders, and avoidable rewrites.

A small amount of structure changes the pace of work:

AI is good at turning raw inputs into something you can work with: summarizing long threads, extracting key points, grouping similar ideas, drafting problem statements, and proposing first-pass user stories.

AI cannot replace product judgment. It won’t know your strategy, constraints, or what your customers truly value unless you provide context—and you still need to validate outcomes with real users and data.

No magic prompts. Just repeatable steps to move from scattered inputs to clear problems, options, priorities, and shippable plans—using AI to reduce busywork while your team focuses on decisions.

Most product work doesn’t fail because ideas are bad—it fails because evidence is scattered. Before you ask AI to summarize or prioritize, you need a clean, complete input stream.

Pull raw material from meetings, support tickets, sales calls, internal docs, emails, and chat threads. If your team already uses tools like Zendesk, Intercom, HubSpot, Notion, or Google Docs, start by exporting or copying the relevant snippets into one workspace (a single doc, database, or inbox-style board).

Use whatever method matches the moment:

AI is helpful even here: it can transcribe calls, clean up punctuation, and standardize formatting—without rewriting meaning.

When you add an item, attach lightweight labels:

Keep originals (verbatim quotes, screenshots, ticket links) alongside your notes. Remove obvious duplicates, but don’t over-edit. The goal is one trustworthy workspace that your AI tool can reference later without losing provenance.
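If your workspace is a spreadsheet or a small script rather than a doc, these capture rules map onto a simple record. The sketch below is one way to do it, assuming Python 3.9+. The `CapturedItem` fields and the helper names are hypothetical and not part of any tool mentioned here. It keeps each verbatim quote next to its link, and it only drops items that are identical after lowercasing and stripping punctuation, so differently worded feedback survives for later clustering.

```python
import re
from dataclasses import dataclass, field

@dataclass
class CapturedItem:
    """One raw input, kept verbatim with a pointer back to where it came from."""
    text: str                # verbatim quote or note, not rewritten
    source: str              # e.g. "Zendesk", "sales call", "Slack"
    link: str = ""           # ticket URL, doc link, or screenshot path
    labels: list[str] = field(default_factory=list)  # hypothetical label values

def normalize(text: str) -> str:
    """Lowercase and collapse punctuation/whitespace so trivial variants match."""
    return re.sub(r"[\W_]+", " ", text.lower()).strip()

def remove_obvious_duplicates(items: list[CapturedItem]) -> list[CapturedItem]:
    """Keep the first occurrence of each normalized text.

    Near-duplicates with different wording are deliberately kept:
    the goal is to avoid over-editing, not to merge similar feedback.
    """
    seen: set[str] = set()
    kept: list[CapturedItem] = []
    for item in items:
        key = normalize(item.text)
        if key not in seen:
            seen.add(key)
            kept.append(item)
    return kept
```

This version discards the second copy's link. If provenance from every source matters, append the duplicate's link to the kept item instead of dropping it.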

After you’ve captured raw inputs (notes, Slack threads, call transcripts, surveys), the next risk is “infinite rereading.” AI helps you compress volume without losing what matters—then group the signal into a few clear buckets your team can act on.

Start by asking AI to produce a one-page brief per source: the context, the top takeaways, and any direct quotes worth keeping.

A helpful pattern is: “Summarize this into: goals, pains, desired outcomes, constraints, and verbatim quotes (max 8). Keep unknowns.” That last instruction discourages the AI from pretending everything is clear.
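When you run this summary step across many sources, building the prompt in one place keeps every brief asking for the same sections. A minimal sketch, assuming you paste the result into whatever AI tool your team uses (no specific API is assumed). The `build_summary_prompt` name and the `Source:` / `---` framing around the pasted text are illustrative additions, not part of the original pattern.

```python
SUMMARY_SECTIONS = ("goals", "pains", "desired outcomes", "constraints")

def build_summary_prompt(source_name: str, source_text: str, max_quotes: int = 8) -> str:
    """Build the per-source brief prompt from the summary pattern."""
    sections = ", ".join(SUMMARY_SECTIONS)
    instruction = (
        f"Summarize this into: {sections}, and verbatim quotes (max {max_quotes}). "
        "Keep unknowns."
    )
    # The source label and --- delimiters keep the pasted material visibly
    # separate from the instruction (an illustrative choice).
    return f"{instruction}\n\nSource: {source_name}\n---\n{source_text}\n---"
```

Because the section list lives in one tuple, adding a section (or changing the quote limit) updates every brief consistently.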

Next, combine multiple briefs and ask AI to:

This is where scattered feedback becomes a map, not a pile.

Have AI rewrite themes into problem-shaped statements, separated from solutions:

A clean problem list makes the next steps—user journeys, solution options, and prioritization—much easier.

Teams stall when the same word means different things (“account,” “workspace,” “seat,” “project”). Ask AI to propose a glossary from your notes: terms, plain-language definitions, and examples.

Keep this glossary in your working doc and link it from future artifacts (PRDs, roadmaps) so decisions stay consistent.

After you’ve clustered raw notes into themes, the next move is to turn each theme into a problem statement people can agree on. AI helps by rewriting vague, solution-shaped ideas (“add a dashboard”) into user-and-outcome language (“people can’t see progress without exporting data”).

Use AI to draft a few options, then pick the clearest one:

For [who], [what job] is hard because [current friction], which leads to [impact].

Example: For team leads, tracking weekly workload is hard because data lives in three tools, which leads to missed handoffs and overtime.

Ask AI to propose metrics, then choose ones you can actually track:

Problem statements fail when hidden beliefs sneak in. Have AI list likely assumptions (e.g., users have consistent data access), risks (e.g., incomplete integrations), and unknowns to validate in discovery.

Finally, add a short “not in scope” list so the team doesn’t drift (e.g., “not redesigning the entire admin area,” “no new billing model,” “no mobile app in this phase”). This keeps the problem crisp—and sets up the next steps cleanly.
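Keeping each problem statement as structured fields, rather than free text, makes it harder to skip the metrics, assumptions, and “not in scope” parts. A minimal Python sketch, assuming 3.9+. `ProblemStatement` and its field names are hypothetical; they simply mirror the template and the checklist described above.

```python
from dataclasses import dataclass, field

@dataclass
class ProblemStatement:
    who: str
    job: str
    friction: str
    impact: str
    metrics: list[str] = field(default_factory=list)
    assumptions: list[str] = field(default_factory=list)
    not_in_scope: list[str] = field(default_factory=list)

    def sentence(self) -> str:
        # For [who], [what job] is hard because [current friction], which leads to [impact].
        return (f"For {self.who}, {self.job} is hard because {self.friction}, "
                f"which leads to {self.impact}.")

    def render(self) -> str:
        """Statement first, then any non-empty supporting lists."""
        lines = [self.sentence()]
        for title, items in (("Success metrics", self.metrics),
                             ("Assumptions to validate", self.assumptions),
                             ("Not in scope", self.not_in_scope)):
            if items:
                lines.append(f"{title}:")
                lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)
```

Filling in the team-lead example (`who="team leads"`, `job="tracking weekly workload"`, and so on) reproduces the example statement word for word. Empty sections are omitted from `render()`, so a quick review of the output shows which lists nobody has written yet.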

If your ideas feel scattered, it’s often because you’re mixing who it’s for, what they’re trying to achieve, and where the pain actually happens. AI helps you separate those threads quickly—without inventing a fantasy customer.

Start with what you already have: support tickets, sales call notes, user interviews, app reviews, and internal feedback. Ask AI to draft 2–4 “light personas” that reflect patterns in the data (goals, constraints, vocabulary), not stereotypes.

A good prompt: “Based on these 25 notes, summarize the top 3 user types. For each: primary goal, biggest constraint, and what triggers them to look for a solution.”

Personas describe who; JTBD describes why. Have AI propose JTBD statements, then edit them to sound like something a real person would say.

Example format:

When [situation], I want to [job], so I can [outcome].

Ask AI to produce multiple versions per persona and highlight differences in outcomes (speed, certainty, cost, compliance, effort).

Create a one-page journey that focuses on behavior, not screens:

Then ask AI to identify friction points (confusion, delays, handoffs, risk) and moments of value (relief, confidence, speed, visibility). This gives you a grounded picture of where your product can genuinely help—and where it shouldn’t try to.
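A journey map can also live as data, which makes it easy to see which stage carries most of the friction. This is a sketch under stated assumptions: the before/during/after stages follow the simple journey suggested in this guide, the marker words reuse the friction and value examples above, and `JourneyStep` and `summarize_journey` are hypothetical names.

```python
from dataclasses import dataclass

# Marker vocabularies reuse the examples in the text; adjust to your own research.
FRICTION = {"confusion", "delay", "handoff", "risk"}
VALUE = {"relief", "confidence", "speed", "visibility"}

@dataclass
class JourneyStep:
    stage: str     # "before", "during", or "after"
    behavior: str  # what the person does, not which screen they are on
    marker: str    # one word from FRICTION or VALUE

def summarize_journey(steps: list[JourneyStep]) -> dict[str, dict[str, list[str]]]:
    """Group behaviors by stage, split into friction points and moments of value."""
    summary: dict[str, dict[str, list[str]]] = {}
    for step in steps:
        if step.marker in FRICTION:
            kind = "friction"
        elif step.marker in VALUE:
            kind = "value"
        else:
            raise ValueError(f"unknown marker {step.marker!r} for {step.behavior!r}")
        stage = summary.setdefault(step.stage, {"friction": [], "value": []})
        stage[kind].append(step.behavior)
    return summary
```

Rejecting unknown markers is intentional: it stops vague labels from creeping into the map and keeps the AI's suggestions tied to the vocabulary the team agreed on.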

Once your problem statements are clear, the fastest way to avoid “solution lock-in” is to deliberately generate multiple directions before you pick one. AI is useful here because it can explore alternatives quickly—while you keep the judgment.

Prompt the AI to propose 3–6 distinct solution approaches (not variations of the same feature). For example: self-serve UX changes, automation, policy/process changes, education/onboarding, integrations, or a lightweight MVP.

Then force contrast by asking: “What would we do if we couldn’t build X?” or “Give one option that avoids new infrastructure.” This produces real trade-offs you can evaluate.

Have AI list constraints you might miss:

Use these as a checklist for later requirements—before you’ve designed yourself into a corner.

For each option, ask AI to produce a short narrative:

These mini-stories are easy to share in Slack or a doc and help non-technical stakeholders react with concrete feedback.

Finally, ask AI to map likely dependencies: data pipelines, analytics events, third-party integrations, security review, legal approval, billing changes, or app-store considerations. Treat the output as hypotheses to validate, but it will help you start the right conversations before timelines slip.

Once your themes and problem statements are clear, the next step is turning them into work the team can build and test. The goal isn’t a perfect document—it’s a shared understanding of what “done” looks like.

Start by rewriting each idea as a feature (what the product will do), then break that feature into small deliverables (what can ship in a sprint). A useful pattern is: Feature → capabilities → thin slices.

If you’re using AI product planning tools, paste your clustered notes and ask for a first-pass breakdown. Then edit it with your team’s language and constraints.
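The Feature → capabilities → thin slices pattern is a small tree, and flattening it gives a backlog where every item still traces back to its feature. A minimal sketch, assuming Python 3.9+; `Feature`, `Capability`, and the method names are hypothetical, and the example values in the note below are made up.

```python
from dataclasses import dataclass, field

@dataclass
class Capability:
    name: str
    # Each thin slice should be small enough to ship within one sprint.
    thin_slices: list[str] = field(default_factory=list)

@dataclass
class Feature:
    name: str
    capabilities: list[Capability] = field(default_factory=list)

    def backlog(self) -> list[str]:
        """Flatten the tree into sprint-sized items, keeping lineage in each title."""
        return [
            f"{self.name} > {cap.name} > {piece}"
            for cap in self.capabilities
            for piece in cap.thin_slices
        ]

    def unsliced(self) -> list[str]:
        """Capabilities that still need breaking down before sprint planning."""
        return [cap.name for cap in self.capabilities if not cap.thin_slices]
```

For instance, a “Workload view” feature with an “Import data” capability sliced into “CSV upload” and “Scheduled sync” yields two backlog items, while an unsliced “Weekly summary” capability shows up in `unsliced()` as work that isn’t ready yet.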

Ask AI to convert each deliverable into a consistent user story format, such as:

A good prompt: “Write 5 user stories for this feature, keep them small enough for 1–3 days each, and avoid technical implementation details.”

AI is especially helpful for proposing acceptance criteria and edge cases you might miss. Ask for:

Create a lightweight checklist the whole team accepts, for example: requirements reviewed, analytics event named, error states covered, copy approved, QA passed, and release notes drafted. Keep it short—if it’s painful to use, it won’t be used.
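If your tracker supports scripts or custom checks, the example checklist above translates directly into code. A minimal sketch: the item strings are copied from the example, while `DEFINITION_OF_DONE`, `missing_done_items`, and `is_done` are hypothetical names.

```python
# Checklist items taken from the example above; edit to match your team's agreement.
DEFINITION_OF_DONE = (
    "requirements reviewed",
    "analytics event named",
    "error states covered",
    "copy approved",
    "QA passed",
    "release notes drafted",
)

def missing_done_items(checked: set[str]) -> list[str]:
    """Return unchecked items in checklist order, so gaps read the same way every time."""
    return [item for item in DEFINITION_OF_DONE if item not in checked]

def is_done(checked: set[str]) -> bool:
    return not missing_done_items(checked)
```

Returning the missing items, rather than just a yes/no, keeps the check useful in a review comment: it says exactly what is left.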

Once you have a clean set of problem statements and solution options, the goal is to make trade-offs visible—so decisions feel fair, not political. A simple set of criteria keeps the conversation grounded.

Start with four signals most teams can agree on:

Write one defining sentence per criterion so a term like “impact” doesn’t mean revenue to Sales and something else to Product.

Paste your idea list, any notes from discovery, and your definitions. Ask AI to create a first-pass table you can react to:

| Item | Impact (1–5) | Effort (1–5) | Confidence (1–5) | Risk (1–5) | Notes |
|---|---|---|---|---|---|
| Passwordless login | 4 | 3 | 3 | 2 | Reduces churn in onboarding |
| Admin audit export | 3 | 2 | 2 | 4 | Compliance benefit, higher risk |

Treat this as a draft, not an answer key. The win is speed: you’re editing a starting point instead of inventing structure from scratch.

Ask: “What breaks if we don’t do this in the next cycle?” Capture the reason in one line. This prevents “must-have inflation” later.

Treat high-impact, low-effort items as quick wins and high-impact, high-effort items as longer bets. Then confirm sequencing: quick wins should still support the larger direction, not distract from it.
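The bucketing and the must-have check can be sketched in a few lines. This guide doesn’t define numeric cut-offs, so the thresholds here are hypothetical (impact of 4 or more counts as high, effort of 3 or less counts as low), and the “revisit” bucket for everything else is an added label. Confidence and risk are range-checked but deliberately not folded into a single score: the table is meant to start a conversation, and any weighting formula is a team decision. `ScoredItem`, `bucket`, and `must_haves_without_reason` are illustrative names.

```python
from dataclasses import dataclass

@dataclass
class ScoredItem:
    name: str
    impact: int      # 1-5, higher = more value
    effort: int      # 1-5, higher = more work
    confidence: int  # 1-5, higher = more evidence
    risk: int        # 1-5, higher = riskier
    must_have_reason: str = ""  # one line: what breaks if we skip it next cycle?

def bucket(item: ScoredItem, min_high_impact: int = 4, max_low_effort: int = 3) -> str:
    """Sort an item into quick win / longer bet / revisit (hypothetical thresholds)."""
    for attr in ("impact", "effort", "confidence", "risk"):
        value = getattr(item, attr)
        if not 1 <= value <= 5:
            raise ValueError(f"{item.name}: {attr} must be 1-5, got {value}")
    if item.impact >= min_high_impact:
        return "quick win" if item.effort <= max_low_effort else "longer bet"
    return "revisit"

def must_haves_without_reason(items: list[ScoredItem], must_haves: set[str]) -> list[str]:
    """Flag items labeled must-have that lack a one-line reason."""
    return [i.name for i in items if i.name in must_haves and not i.must_have_reason.strip()]

# The two rows from the draft table above.
backlog = [
    ScoredItem("Passwordless login", 4, 3, 3, 2),
    ScoredItem("Admin audit export", 3, 2, 2, 4),
]
```

With these thresholds, passwordless login lands as a quick win and the audit export as “revisit”; shifting either threshold by one point changes that, which is a good reason to agree on the cut-offs before scoring.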

A roadmap isn’t a wish list—it’s a shared agreement about what you’re doing next, why it matters, and what you’re not doing yet. AI helps you get there by turning your prioritized backlog into a clear, testable plan that’s easy to explain.

Start with the items you already prioritized (from the previous step) and ask an AI assistant to propose 2–4 milestones that reflect outcomes, not just features. For example: “Reduce onboarding drop-off” or “Enable teams to collaborate” is more trustworthy than “Ship onboarding revamp.”

Then pressure-test each milestone with two questions:

For each milestone, generate a short release definition:

This “included/excluded” boundary is one of the fastest ways to reduce stakeholder anxiety, because it prevents silent scope creep.
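An included/excluded boundary also works as a quick triage rule for incoming requests. A minimal sketch, assuming Python 3.9+. `ReleaseDefinition` and its methods are hypothetical names, and the example scope items come from examples elsewhere in this guide.

```python
from dataclasses import dataclass, field

@dataclass
class ReleaseDefinition:
    milestone: str  # outcome-style, e.g. "Reduce onboarding drop-off"
    included: list[str] = field(default_factory=list)
    excluded: list[str] = field(default_factory=list)

    def conflicts(self) -> list[str]:
        """Items listed as both included and excluded (case-insensitive)."""
        excluded = {e.lower() for e in self.excluded}
        return [i for i in self.included if i.lower() in excluded]

    def check_request(self, item: str) -> str:
        """Classify an incoming request against the agreed boundary."""
        lowered = item.lower()
        if lowered in {i.lower() for i in self.included}:
            return "in scope"
        if lowered in {e.lower() for e in self.excluded}:
            return "explicitly excluded"
        return "new request: needs a decision"
```

The third outcome is the point: anything not already on either list becomes an explicit decision instead of silently joining the release.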

Ask AI to turn your roadmap into a one-page narrative with:

Keep it readable—if someone can’t summarize it in 30 seconds, it’s too complicated.

Trust increases when people know how plans change. Add a small “change policy” section: what triggers a roadmap update (new research, missed metrics, technical risk, compliance changes) and how decisions will be communicated. If you share updates in a predictable place (e.g., /roadmap), the roadmap stays credible even when it evolves.

Prototypes are where vague ideas get honest feedback. AI won’t magically “design the right thing,” but it can remove a lot of busywork so you can test sooner—especially when you’re iterating on multiple options.

Start by asking AI to translate a theme or problem statement into a screen-by-screen flow. Give it the user type, the job they’re trying to do, and any constraints (platform, accessibility, legal, pricing model). You’re not looking for pixel-perfect design—just a coherent path that a designer or PM can sketch quickly.

Example prompt: “Create a 6-screen flow for first-time users to accomplish X on mobile. Include entry points, main actions, and exit states.”

Microcopy is easy to skip—and painful to fix late. Use AI to draft:

Provide your product tone (“calm and straightforward,” “friendly but brief”) and any words you avoid.

AI can generate a lightweight test plan so you don’t overthink it:

Before building more screens, ask AI for a prototype checklist: what must be validated first (value, comprehension, navigation, trust), what signals count as success, and what would make you stop or pivot. This keeps the prototype focused—and your learning fast.

Once you’ve validated a flow, the next bottleneck is often turning “approved screens” into a real, working app. This is where a vibe-coding platform like Koder.ai can fit naturally into the workflow: you can describe the feature in chat (problem, user stories, acceptance criteria), and generate a working web, backend, or mobile build faster than a traditional handoff-heavy process.

In practice, teams use it to:

The key idea is the same as this guide: reduce busywork and cycle time, while keeping human decisions (scope, trade-offs, quality bar) firmly in your team’s hands.

By this point you likely have themes, problem statements, user journeys, options, constraints, and a prioritized plan. The last step is making it easy for other people to consume—without sitting through another meeting.

AI is useful here because it can turn your raw notes into consistent documents with clear sections, sensible defaults, and obvious “fill this in” placeholders.

Ask your AI tool to draft a PRD from your inputs, using a structure your team recognizes:

Keep placeholders like “TBD metric owner” or “Add compliance review notes” so reviewers know what’s missing.
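If reviewers are expected to resolve placeholders before sign-off, a tiny check can list what is still open. This sketch assumes a convention the guide does not prescribe: placeholders are wrapped in square brackets and start with “TBD” or “Add”, such as `[TBD metric owner]`. The regex and the `open_placeholders` name are illustrative.

```python
import re

# Hypothetical convention: "[TBD ...]" or "[Add ...]" marks unfinished PRD content.
PLACEHOLDER = re.compile(r"\[(?:TBD|Add)\b[^\]]*\]")

def open_placeholders(prd_text: str) -> list[tuple[int, str]]:
    """List (line number, placeholder) pairs still waiting for a reviewer."""
    found = []
    for number, line in enumerate(prd_text.splitlines(), start=1):
        for match in PLACEHOLDER.finditer(line):
            found.append((number, match.group(0)))
    return found
```

The `\b` after the keyword keeps ordinary bracketed words such as `[Address]` from being flagged.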

Have AI generate two FAQ sets from the PRD: one for Support/Sales (“What changed?”, “Who is this for?”, “How do I troubleshoot?”) and one for internal teams (“Why now?”, “What’s not included?”, “What should we avoid promising?”).

Use AI to produce a simple checklist covering: tracking/events, release notes, docs updates, announcements, training, rollback plan, and a post-launch review.

When you share, link people to the next steps using relative paths like /pricing or /blog/how-we-build-roadmaps, so the docs stay portable across environments.

AI can speed up product thinking, but it can also quietly steer you off course. The best teams treat AI output as a first draft—useful, but never final.

The biggest problems usually start with inputs:

Before you copy anything into a PRD or roadmap, do a quick quality pass:

If something feels “too neat,” ask the model to show support: “Which lines in my notes justify this requirement?”
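Before asking the model, you can run a crude cross-check yourself. This sketch is plain keyword overlap, not semantic matching: it misses paraphrases and can match unrelated lines that share words, so treat an empty result as a prompt to ask the question above, not as proof. The stopword list, the overlap threshold, and the function names are all assumptions.

```python
import re

# A deliberately small stopword list; extend it for your own notes.
STOPWORDS = {"a", "an", "and", "are", "be", "can", "for", "in", "is", "of", "on", "or", "the", "to", "we"}

def content_words(text: str) -> set[str]:
    """Lowercase alphanumeric tokens minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def supporting_lines(requirement: str, notes: list[str], min_overlap: int = 2) -> list[tuple[int, str]]:
    """Return (index, note) pairs sharing at least `min_overlap` content words
    with the requirement."""
    target = content_words(requirement)
    return [
        (index, note)
        for index, note in enumerate(notes)
        if len(target & content_words(note)) >= min_overlap
    ]
```

Returning indexes alongside the text makes it easy to jump back to the original note, which is where the provenance kept during capture pays off.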

If you don’t know how a tool stores data, don’t paste sensitive information: customer names, tickets, contracts, financials, or unreleased strategy. Redact details, or replace them with placeholders (e.g., “Customer A,” “Pricing Plan X”).
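Placeholder redaction is easy to script when you already know which names to hide. A minimal sketch, assuming you supply the list of customer names yourself; it will not find names you didn’t list, and the email pattern is rough rather than a full validator. `redact` and `EMAIL` are illustrative names.

```python
import re

# Rough email pattern for masking; not a full RFC 5322 validator.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

def redact(text: str, customer_names: list[str]) -> tuple[str, dict[str, str]]:
    """Mask emails, then swap known names for "Customer A", "Customer B", ...

    Emails are masked first so a name inside an address can't break the pattern.
    Longer names are replaced first so "Acme Corp" wins over "Acme".
    Returns the redacted text and a placeholder -> real-name mapping,
    which should stay on your side and never be pasted into the AI tool.
    """
    names = sorted(customer_names, key=len, reverse=True)
    if len(names) > 26:
        raise ValueError("this sketch only supports up to 26 names (A-Z)")
    text = EMAIL.sub("[email]", text)
    mapping: dict[str, str] = {}
    for index, name in enumerate(names):
        placeholder = f"Customer {chr(ord('A') + index)}"
        mapping[placeholder] = name
        text = re.sub(re.escape(name), placeholder, text, flags=re.IGNORECASE)
    return text, mapping
```

Keeping the mapping local lets you translate the AI’s output back to real names after the fact without the tool ever seeing them.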

When possible, use an approved workspace or your company’s managed AI. If data residency and deployment geography matter, favor platforms that let you run workloads in the regions you need, so you can meet privacy and cross-border data transfer requirements, especially when you’re generating or hosting real application code.

Use AI to generate options and highlight trade-offs. Switch to people for final prioritization, risk calls, ethical decisions, and commitments—especially anything that affects customers, budgets, or timelines.

You don’t need a “big process” to get consistent outcomes. A lightweight weekly cadence keeps ideas flowing while forcing decisions early.

Capture → cluster → decide → draft → test

When prompting AI, paste:

Keep it small: PM owns decisions and documentation, designer shapes flows and testing, engineer flags feasibility and edge cases. Add support/sales input weekly (15 minutes) to keep priorities grounded in real customer pain.

Track signals such as fewer recurring alignment meetings, shorter time from idea → decision, and fewer “missing details” bugs. If specs are clearer, engineers ask fewer clarifying questions, and users see fewer surprise changes.

If you’re experimenting with tools like Koder.ai in the build phase, you can also track delivery signals: how quickly a validated prototype becomes a deployed app, how often you use rollback/snapshots during iteration, and whether stakeholders can review working software earlier in the cycle.

As a practical bonus, if your team publishes learnings from your workflow (what worked, what didn’t), some platforms—including Koder.ai—offer ways to earn credits through content creation or referrals. It’s not the point of the process, but it can make experimentation cheaper while you refine your product system.

Messy inputs become a problem when they’re treated as the plan. Without structure, teams keep re-litigating basics (who it’s for, what success is, what’s in/out), which creates vague tickets, misalignment, and rework.

A small amount of structure turns “a pile of notes” into:

Start by centralizing raw material into one workspace (single doc, database, or inbox board) without over-editing.

Minimum capture checklist:

Keep originals nearby (screenshots, ticket links) so AI summaries remain traceable.

Ask for a structured summary and force the model to preserve uncertainty.

Example instruction pattern:

Combine multiple source briefs, then ask AI to:

A practical output is a short theme table with: theme name, description, supporting evidence, and open questions. That becomes your working map instead of rereading everything.

Rewrite each theme into a problem-shaped statement before discussing solutions.

Template: For [who], [what job] is hard because [current friction], which leads to [impact].

Then add:

Use real inputs (tickets, calls, interviews) to draft 2–4 lightweight personas, then express motivation as Jobs To Be Done.

JTBD format: When [situation], I want to [job], so I can [outcome].

Finally, map a simple journey (before/during/after) and mark:

Generate multiple distinct approaches first to avoid solution lock-in.

Ask AI for 3–6 options across different levers, such as:

Then force trade-offs with prompts like: “What would we do if we couldn’t build X?” and “Give one option that avoids new infrastructure.”

Start with Feature → capabilities → thin slices so work can ship incrementally.

Then have AI draft:

Keep stories outcome-focused and avoid baking in implementation details unless the team needs them for feasibility.

Define scoring criteria everyone understands (e.g., Impact, Effort, Confidence, Risk) with one sentence each.

Use AI to draft a scoring table from your backlog and discovery notes, but treat it as a starting point. Then:

Use AI for first drafts, but apply a short quality and privacy gate before sharing or committing.

Quality checks:

Privacy basics:

That last bullet prevents “confident hallucinations” from becoming assumed truth.