Jul 08, 2025·8 min

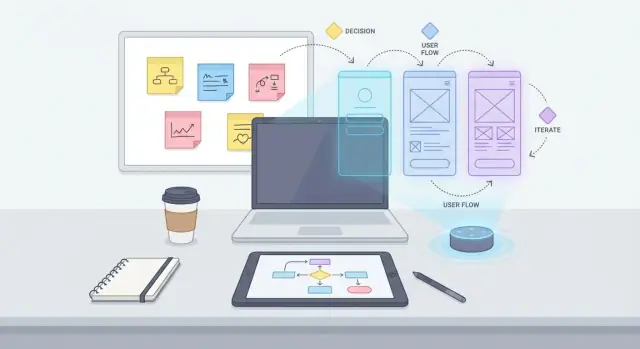

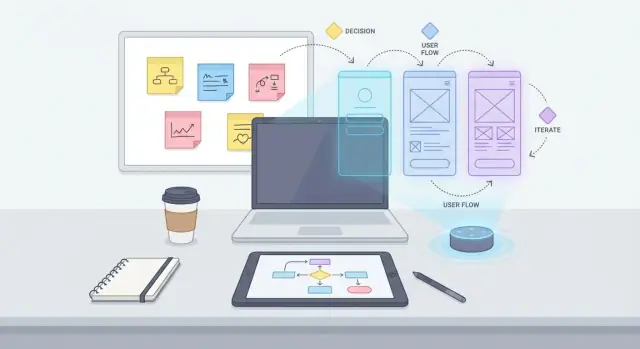

How AI Turns Loose Ideas into Screens, Logic, and Flows

Learn how AI can turn brainstorms into organized app screens, user flows, and simple logic—helping teams move from ideas to a clear plan faster.

When people say “turn the idea into screens, logic, and flows,” they’re describing three connected ways to make a product plan concrete.

Screens are the pages or views a user interacts with: a sign-up page, a dashboard, a settings page, a “create task” form. A screen isn’t just a title—it includes what’s on it (fields, buttons, messages) and what it’s for (the user’s intent on that screen).

Flows describe how a user moves between screens to complete something. Think of flows as a guided route: what happens first, what happens next, and where the user ends up. A flow usually includes a “happy path” (everything goes smoothly) plus variations (forgot password, error state, returning user, etc.).

Logic is everything the system decides or enforces behind the scenes (and often explains on the screen):

A practical product plan ties all three together:

AI is helpful here because it can take messy notes (features, wishes, constraints) and propose a first pass at these three layers—so you can react, correct, and refine.

Imagine a simple task app:

That’s the core meaning: what users see, how they move, and what rules govern the experience.
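One way to keep the three layers connected is to record them as plain data, so a flow can only point at screens that actually exist. The sketch below assumes Python 3.9+. The `Screen`, `Flow`, and `Rule` classes and the task-app entries are hypothetical illustrations, not a prescribed format.

```python
from dataclasses import dataclass, field


@dataclass
class Screen:
    name: str
    intent: str                                        # what the user is trying to do here
    elements: list[str] = field(default_factory=list)  # fields, buttons, messages


@dataclass
class Flow:
    goal: str
    happy_path: list[str]                                     # screen names, in order
    variations: dict[str, str] = field(default_factory=dict)  # variation -> note


@dataclass
class Rule:
    rule_id: str
    description: str


# Hypothetical first pass for a simple task app.
screens = [
    Screen("Task List", "See what needs doing", ["task rows", "Add task button"]),
    Screen("Create Task", "Capture a new task", ["title field", "due date", "Save button"]),
]
create_task = Flow(
    goal="Add a task",
    happy_path=["Task List", "Create Task", "Task List"],
    variations={"empty title": "show inline error on Create Task"},
)
rules = [Rule("R1", "A task must have a non-empty title")]


def unknown_screens(flow: Flow, known: list[Screen]) -> set[str]:
    """Return screen names a flow references that nobody has defined yet."""
    names = {s.name for s in known}
    return {step for step in flow.happy_path if step not in names}


print(unknown_screens(create_task, screens))  # set()
```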

Raw product ideas rarely show up as a neat document. They arrive as scattered pieces: notes in a phone app, long chat threads, meeting takeaways, quick sketches on paper, voice memos, support tickets, and “one more thing” thoughts added right before the deadline. Each piece can be valuable, but together they’re hard to turn into a clear plan.

Once you collect everything in one place, patterns appear—and so do problems:

These issues aren’t a sign the team is doing something wrong. They’re normal when input comes from different people, at different times, with different assumptions.

Ideas get stuck when the “why” isn’t firm. If the goal is fuzzy (“make onboarding better”), the flow becomes a grab bag of screens: extra steps, optional detours, and unclear decision points.

Compare that with a goal like: “Help new users connect their account and complete one successful action in under two minutes.” Now the team can judge every step: does it move the user toward that outcome, or is it noise?

Without clear goals, teams end up debating screens instead of outcomes—and flows become complicated because they’re trying to satisfy multiple purposes at once.

When structure is missing, decisions get deferred. That feels fast at first (“we’ll figure it out in design”), but it usually shifts the pain downstream:

A designer creates wireframes that reveal missing states. Developers ask for edge cases. QA finds contradictions. Stakeholders disagree on what the feature was supposed to do. Then everyone backtracks—rewriting logic, redoing screens, retesting.

Rework is expensive because it happens when many pieces are already connected.

Brainstorming produces volume. Planning requires shape.

Organized ideas have:

AI is most useful at this stuck point—not to generate even more suggestions, but to turn a pile of input into a structured starting point the team can build from.

Most early product notes are a mix of half-sentences, screenshots, voice memos, and “don’t forget this” thoughts scattered across tools. AI is useful because it can turn that mess into something you can actually discuss.

First, AI can condense raw input into clear, consistent bullets—without changing the intent. It typically:

This cleanup matters because you can’t group ideas well if they’re written in ten different styles.
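An AI model does this cleanup with language understanding, but the mechanical part of "consistent style" is easy to picture. The sketch below is a rough deterministic stand-in, not what any model actually runs: it trims whitespace, drops leading bullet markers or numbering, and normalizes capitalization and end punctuation. The `normalize_note` function and its rules are hypothetical.

```python
import re

# Leading bullet marker ("-", "*", "•") or list numbering ("2)", "3.") plus spaces.
BULLET = re.compile(r"^(?:[-*•]|\d+[.)])\s*")


def normalize_note(raw: str) -> str:
    """Turn one raw note into a consistent, sentence-style bullet."""
    text = BULLET.sub("", raw.strip())
    text = re.sub(r"\s+", " ", text)  # collapse internal whitespace
    text = text.rstrip(".!? ")        # drop trailing punctuation before re-adding one period
    if not text:
        return ""
    return text[0].upper() + text[1:] + "."


notes = ["  - users want   dark mode!!", "2) export to csv", "•  notify when task is due"]
print([normalize_note(n) for n in notes])
# ['Users want dark mode.', 'Export to csv.', 'Notify when task is due.']
```

The numbering pattern requires a `.` or `)` after the digits, so a note like "2FA for admins" keeps its leading "2".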

Next, AI can cluster similar notes into themes. Think of it as automatically sorting sticky notes on a wall—then suggesting labels for each pile.

For example, it might create clusters like “Onboarding,” “Search & Filters,” “Notifications,” or “Billing,” based on repeated intent and shared vocabulary. Good clustering also highlights relationships (“these items all affect checkout”) rather than just matching keywords.
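To make the sorting idea concrete, here is a deliberately naive sketch that assigns notes to themes by keyword. It is exactly the "just matching keywords" approach the paragraph above warns is not enough: a model infers intent and relationships, while this only catches shared words. The theme names come from the example above; the keyword sets are hypothetical.

```python
from collections import defaultdict

# Hypothetical theme keywords; an AI model would infer groupings from intent instead.
THEMES = {
    "Onboarding": {"signup", "sign-up", "welcome", "tutorial"},
    "Search & Filters": {"search", "filter", "sort"},
    "Notifications": {"notify", "reminder", "email", "push"},
    "Billing": {"payment", "invoice", "plan", "refund"},
}


def cluster_notes(notes: list[str]) -> dict[str, list[str]]:
    """Assign each note to every theme whose keywords it mentions, else 'Unsorted'."""
    clusters: dict[str, list[str]] = defaultdict(list)
    for note in notes:
        words = set(note.lower().replace(",", " ").split())
        matched = [theme for theme, keys in THEMES.items() if words & keys]
        for theme in matched or ["Unsorted"]:
            clusters[theme].append(note)
    return dict(clusters)


result = cluster_notes([
    "Add a welcome tutorial",
    "Filter tasks by date",
    "Push reminder before due date",
    "Refund flow for annual plan",
    "Dark mode",
])
for theme, items in result.items():
    print(theme, "->", items)
```

The "Unsorted" bucket matters: it shows where keyword matching gives up and a human (or a model reading for intent) has to decide.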

In brainstorms, the same requirement often appears multiple times with small variations. AI can flag:

Instead of deleting anything, preserve the original phrasing and propose a merged version, so you can choose what’s accurate.
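A cheap pre-filter for near-duplicates is character-level similarity, sketched here with Python's standard `difflib.SequenceMatcher`. It only catches overlapping wording, not identical meaning phrased differently, and the 0.8 threshold is an arbitrary assumption. Note that it flags pairs and leaves every original untouched, so the team still decides what to merge.

```python
from difflib import SequenceMatcher
from itertools import combinations


def flag_near_duplicates(items: list[str], threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """Return pairs of items whose text similarity is at or above the threshold.

    Nothing is deleted or merged; each flagged pair goes to a person for review.
    """
    pairs = []
    for a, b in combinations(items, 2):
        ratio = SequenceMatcher(None, a.lower(), b.lower()).ratio()
        if ratio >= threshold:
            pairs.append((a, b, round(ratio, 2)))
    return pairs


items = ["Users can reset their password", "Users can reset password", "Export tasks to CSV"]
for a, b, score in flag_near_duplicates(items):
    print(f"Possible duplicate ({score}): {a!r} / {b!r}")
```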

To prepare for screens and flows, AI can pull out entities such as:

Clustering is a starting point, not a decision. You still need to review group names, confirm what’s in/out of scope, and correct any incorrect merges—because one wrong assumption here can ripple into your screens and user flows later.

Once your ideas are clustered (for example: “finding content,” “saving,” “account,” “payments”), the next step is turning those clusters into a first-pass map of the product. This is information architecture (IA): a practical outline of what lives where, and how people move around.

AI can take each cluster and propose a small set of top-level sections that feel natural to users—often the kind of things you’d see in a tab bar or main menu. For instance, a “discover” cluster might become Home or Explore, while “identity + preferences” might become Profile.

The goal isn’t perfection; it’s picking stable “buckets” that reduce confusion and make later flow work easier.

From those sections, AI can generate a screen list in plain language. You’ll typically get:

This screen inventory is useful because it exposes scope early: you can see what’s “in the product” before anyone starts drawing wireframes.

AI can also propose how navigation might work, without getting too design-heavy:

Review these suggestions against your users' priorities, not against UI trends.

AI can flag screens teams often forget, like empty states (no results, nothing saved), error states (offline, payment failed), Settings, Help/Support, and confirmation screens.
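The same check can be kept as a small, repeatable test against the draft screen inventory. This is a minimal sketch assuming screens are listed as plain names; the checklist reuses the categories above, and the substring matching is a simplifying assumption (a screen named "No results" would not count as an empty state unless it says so).

```python
# Commonly forgotten screens and states, taken from the list above.
COMMONLY_FORGOTTEN = ["Empty state", "Error state", "Settings", "Help/Support", "Confirmation"]


def missing_screens(screen_list: list[str]) -> list[str]:
    """Return commonly forgotten items that no screen name in the draft mentions."""
    lowered = [s.lower() for s in screen_list]
    return [
        item for item in COMMONLY_FORGOTTEN
        if not any(item.lower() in screen for screen in lowered)
    ]


draft = ["Home", "Task list", "Task list: empty state", "Create task", "Settings"]
print(missing_screens(draft))  # ['Error state', 'Help/Support', 'Confirmation']
```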

Start broad: pick a small number of sections and a short screen list. Then refine boundaries—split “Home” into “Home” and “Explore,” or move “Notifications” under Profile—until the map matches real user expectations and your product goals.

A useful user flow starts with intent, not screens. If you feed AI a messy brainstorm, ask it to first extract user goals—what the person is trying to accomplish—and the tasks they’ll do to get there. That reframes the conversation from “What should we build?” to “What must happen for the user to succeed?”

Have AI list the top 3–5 goals for a specific user type (new user, returning user, admin, etc.). Then choose one goal and ask for a flow that’s narrowly scoped (one outcome, one context). This prevents “everything flows” that nobody can implement.

Next, ask AI to produce a happy path step-by-step: the simplest sequence where everything goes right. The output should read like a story with numbered steps (e.g., “User selects plan → enters payment → confirms → sees success screen”).

Once the happy path is stable, branch into common alternatives:

Ask it to label which steps are user choices (buttons, selections, confirmations) versus automatic steps (validation, saving, syncing). That distinction helps teams decide what needs UI, what needs messaging, and what needs background logic.
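Once steps carry that label, sorting them into "needs UI," "needs background logic," and "needs messaging" becomes mechanical. A minimal sketch, assuming each step is tagged `user` or `system` and branches are stored as condition-to-destination pairs; the `Step` class and the checkout steps (based on the happy-path example above) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Literal


@dataclass
class Step:
    label: str
    actor: Literal["user", "system"]  # user choice vs automatic step
    branches: dict[str, str] = field(default_factory=dict)  # condition -> where it leads


# Hypothetical checkout flow: the happy path plus labeled branches.
flow = [
    Step("Select plan", "user"),
    Step("Enter payment", "user"),
    Step("Validate card", "system", {"invalid": "Enter payment"}),
    Step("Confirm", "user", {"cancel": "Select plan"}),
    Step("Charge card", "system", {"fail": "Retry / Cancel"}),
    Step("See success screen", "user"),
]

needs_ui = [s.label for s in flow if s.actor == "user"]
needs_background_logic = [s.label for s in flow if s.actor == "system"]
# Each branch is a candidate for user-facing messaging ("Card declined", "Order cancelled").
needs_messaging = [f"{s.label} -> {cond}" for s in flow for cond in s.branches]

print(needs_ui)
print(needs_background_logic)
print(needs_messaging)
```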

Finally, convert the flow into a simple diagram description your team can paste into docs or tickets:

Start: Goal selected
1. Screen: Choose option
2. Screen: Enter details
3. System: Validate
   - If invalid -> Screen: Error + Fix
4. Screen: Review & Confirm
5. System: Submit
   - If fail -> Screen: Retry / Cancel
6. Screen: Success
End

This keeps conversations aligned before anyone opens Figma or writes requirements.
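If the flow is kept as data, that text outline can be regenerated whenever the flow changes, so the copies in docs and tickets don't drift apart. A minimal sketch; the `render_flow` function and its `(kind, label, branches)` tuple format are hypothetical choices, and the steps reproduce the generic outline from this section.

```python
def render_flow(goal: str, steps: list[tuple[str, str, list[str]]]) -> str:
    """Render (kind, label, branches) steps as a numbered plain-text outline."""
    lines = [f"Start: {goal}"]
    for number, (kind, label, branches) in enumerate(steps, start=1):
        lines.append(f"{number}. {kind}: {label}")
        for branch in branches:
            lines.append(f"   - {branch}")
    lines.append("End")
    return "\n".join(lines)


outline = render_flow("Goal selected", [
    ("Screen", "Choose option", []),
    ("Screen", "Enter details", []),
    ("System", "Validate", ["If invalid -> Screen: Error + Fix"]),
    ("Screen", "Review & Confirm", []),
    ("System", "Submit", ["If fail -> Screen: Retry / Cancel"]),
    ("Screen", "Success", []),
])
print(outline)
```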

A user flow shows where someone can go. Logic explains why they can (or can’t) go there, and what the product should do when things go wrong. This is often where teams lose time: flows look “done,” but decisions, states, and error handling are still implicit.

AI is useful here because it can turn a visual or written flow into a plain-language “logic layer” that non-technical stakeholders can review before design and development.

Start by rewriting each step as a small set of if/then rules and permission checks. The goal is clarity, not completeness.

Examples of key decisions that change the flow:

When AI drafts these rules, label them with human-friendly names (e.g., “R3: Must be signed in to save”). This makes discussions easier in review meetings.
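Labeled rules also translate cleanly into code later, which keeps the names used in review meetings visible in the implementation. A minimal sketch, assuming each rule is a named predicate over a context dictionary. "R3" follows the example above; "R4" and the context keys (`signed_in`, `plan`, `project_count`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    rule_id: str                    # human-friendly label used in reviews
    description: str
    allows: Callable[[dict], bool]  # True when the action may proceed


RULES = [
    Rule("R3", "Must be signed in to save", lambda ctx: ctx.get("signed_in", False)),
    # Hypothetical plan limit, included only to show a rule with two conditions.
    Rule("R4", "Free plan is limited to 3 projects",
         lambda ctx: ctx.get("plan") != "free" or ctx.get("project_count", 0) < 3),
]


def blocking_rules(ctx: dict) -> list[str]:
    """Return the labels of every rule that blocks the action in this context."""
    return [f"{r.rule_id}: {r.description}" for r in RULES if not r.allows(ctx)]


print(blocking_rules({"signed_in": False, "plan": "free", "project_count": 3}))
# ['R3: Must be signed in to save', 'R4: Free plan is limited to 3 projects']
```

Returning every blocking rule, not just the first, makes it easier to see when two rules fire at once and the screen needs to explain both.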

Every screen in a flow should have explicit states. Ask for a checklist per screen:

Flows become real when you specify the data behind them. AI can extract a first pass like:

List “unhappy paths” in plain language:

To keep logic readable for non-technical stakeholders, format it as a short “Decision + Outcome” table and keep jargon out. If you need a lightweight template for this, reuse the same structure across features so reviews stay consistent (see /blog/prompt-templates-for-flows).
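Generating the table from a list of pairs keeps the format identical across features. A minimal sketch, assuming your review docs accept Markdown tables; the `decision_table` helper and the sample rows are hypothetical.

```python
def decision_table(rows: list[tuple[str, str]]) -> str:
    """Format (decision, outcome) pairs as a Markdown table for a review doc."""
    lines = ["| Decision | Outcome |", "| --- | --- |"]
    for decision, outcome in rows:
        # Escape pipes so plain-language text can't break the table columns.
        d = decision.replace("|", "\\|")
        o = outcome.replace("|", "\\|")
        lines.append(f"| {d} | {o} |")
    return "\n".join(lines)


table = decision_table([
    ("User is not signed in and taps Save", "Ask them to sign in, then return to their draft"),
    ("Payment fails", "Keep the order and offer Retry or Cancel"),
])
print(table)
```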

Once you have a draft screen map and a few user flows, the next risk is “every screen feels invented from scratch.” AI can act as a consistency checker: it can spot when the same action has three names, when similar screens use different layouts, or when microcopy changes tone.

Propose a small component set based on what your flows repeat. Instead of designing per-screen, standardize building blocks:

This keeps wireframes and later UI work faster—and reduces logic bugs, because the same component can reuse the same rules.

Normalize your vocabulary into a simple naming system:

Produce a glossary and flag mismatches across screens and flows.
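Once the glossary exists, flagging mismatches can be partly automated as a whole-word search over screen copy. A minimal sketch; the glossary entries (canonical term mapped to retired variants) and the screen copy are hypothetical, and a real check would still need a human to judge context.

```python
import re

# Hypothetical glossary: canonical term -> variants the team agreed to retire.
GLOSSARY = {
    "Save": ["store", "keep"],
    "Project": ["workspace", "board"],
}


def find_mismatches(copy_by_screen: dict[str, str]) -> list[str]:
    """Flag screen copy that uses a retired variant instead of the canonical term."""
    issues = []
    for screen, text in copy_by_screen.items():
        for canonical, variants in GLOSSARY.items():
            for variant in variants:
                # Whole-word, case-insensitive match so "keeper" doesn't trigger "keep".
                if re.search(rf"\b{re.escape(variant)}\b", text, re.IGNORECASE):
                    issues.append(f"{screen}: '{variant}' -> use '{canonical}'")
    return issues


print(find_mismatches({
    "Create Task": "Save task to board",
    "Settings": "Keep changes",
}))
```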

Even early on, draft basic microcopy:

Attach accessibility reminders to each component: keyboard focus states, clear language, and contrast requirements. Also flag where patterns should match your existing brand guidelines (terminology, tone, button hierarchy), so new screens don’t drift away from what users already recognize.

AI speeds up collaboration only if everyone is looking at the same “current truth.” The goal isn’t to let the model run ahead—it’s to use it as a structured editor that keeps your plan readable as more people weigh in.

Start with one master document, then generate views for each group without changing the underlying decisions:

Reference specific sections (e.g., “Based on ‘Flow A’ and ‘Rules’ below, write an exec summary”) so outputs stay anchored.

When feedback lands in messy forms (Slack threads, meeting notes), paste it in and produce:

This reduces the classic “we discussed it, but nothing changed” gap.

Each iteration should include a short changelog. Generate a diff-style summary:
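For sections that are list-shaped (a screen map, a rule list), a diff-style changelog can also be produced mechanically and pasted next to the AI's narrative summary. This sketch uses Python's standard `difflib.unified_diff`; the `changelog` and `summarize` helpers and the two screen-map versions are hypothetical.

```python
from difflib import unified_diff


def changelog(old: list[str], new: list[str], label: str) -> list[str]:
    """Unified-diff lines between two versions of a list-shaped section."""
    return list(unified_diff(old, new, fromfile=f"{label} (previous)",
                             tofile=f"{label} (current)", lineterm=""))


def summarize(diff_lines: list[str]) -> tuple[list[str], list[str]]:
    """Split diff lines into added and removed items, skipping the file headers."""
    added = [line[1:] for line in diff_lines if line.startswith("+") and not line.startswith("+++")]
    removed = [line[1:] for line in diff_lines if line.startswith("-") and not line.startswith("---")]
    return added, removed


v1 = ["Home", "Task List", "Create Task", "Settings"]
v2 = ["Home", "Task List", "Task Detail", "Settings", "Help"]
diff = changelog(v1, v2, "Screen map")
print("\n".join(diff))
added, removed = summarize(diff)
print(f"Added: {added}; Removed: {removed}")
```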

Set explicit checkpoints where humans approve the direction: after the screen map, after the main flows, after logic/edge cases. Between checkpoints, instruct AI to only propose, not finalize.

Publish the master doc in one place (e.g., /docs/product-brief-v1) and link out from tasks to that doc. Treat AI-generated variations as “views,” while the master remains the reference everyone aligns on.

Validation is where “nice-looking flowcharts” turn into something you can trust. Before anyone opens Figma or starts building, pressure-test the flow the way real users will.

Create short, believable tasks that match your goal and audience (including one “messy” task). For example:

Run each scenario through your proposed user flow step by step. If you can’t narrate what happens without guessing, the flow isn’t ready.
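The "can you narrate it without guessing" test can be made literal by writing the flow as a transition table and walking each scenario through it; the first step with no defined transition is exactly where the team would otherwise guess. A minimal sketch; the screens, actions, and scenarios are hypothetical.

```python
from typing import Optional

# Hypothetical flow as a transition table: (screen, user action) -> next screen.
FLOW: dict[tuple[str, str], str] = {
    ("Sign in", "submit valid"): "Dashboard",
    ("Sign in", "submit invalid"): "Sign in (error)",
    ("Sign in (error)", "submit valid"): "Dashboard",
    ("Dashboard", "create task"): "Create Task",
    ("Create Task", "save"): "Dashboard",
}


def walk(start: str, actions: list[str]) -> tuple[list[str], Optional[str]]:
    """Follow a scenario; return the path taken and the first undefined step, if any."""
    path, screen = [start], start
    for action in actions:
        nxt = FLOW.get((screen, action))
        if nxt is None:
            return path, f"{screen} + '{action}'"  # the flow doesn't say what happens
        path.append(nxt)
        screen = nxt
    return path, None


print(walk("Sign in", ["submit valid", "create task", "save"]))
# A "messy" scenario: the user fails to sign in, then looks for a password reset.
print(walk("Sign in", ["submit invalid", "forgot password"]))
```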

Draft a checklist for every screen in the flow:

This surfaces missing requirements that otherwise appear during QA.

Scan your flow for:

Propose a “shortest path” and compare it to your current flow. If you need extra steps, make them explicit (why they exist, what risk they reduce).
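Treating screens as a graph makes the shortest-path comparison mechanical: breadth-first search finds the route with the fewest screens, which you can hold up against the flow you actually designed. A minimal sketch; the screen graph and the current flow are hypothetical.

```python
from collections import deque
from typing import Optional

# Hypothetical screen graph: each screen -> screens reachable in one step.
SCREENS: dict[str, list[str]] = {
    "Home": ["Plans", "Profile"],
    "Plans": ["Plan Detail"],
    "Profile": ["Billing"],
    "Plan Detail": ["Compare Plans", "Payment"],
    "Compare Plans": ["Payment"],
    "Billing": ["Payment"],
    "Payment": ["Success"],
    "Success": [],
}


def shortest_path(graph: dict[str, list[str]], start: str, goal: str) -> Optional[list[str]]:
    """Breadth-first search: fewest screens from start to goal, or None if unreachable."""
    previous: dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        screen = queue.popleft()
        if screen == goal:
            path: list[str] = []
            node: Optional[str] = screen
            while node is not None:  # walk back through predecessors
                path.append(node)
                node = previous[node]
            return path[::-1]
        for nxt in graph.get(screen, []):
            if nxt not in previous:
                previous[nxt] = screen
                queue.append(nxt)
    return None


current_flow = ["Home", "Plans", "Plan Detail", "Compare Plans", "Payment", "Success"]
best = shortest_path(SCREENS, "Home", "Success")
extra = len(current_flow) - len(best)
print(f"Shortest: {' -> '.join(best)}")
print(f"Current flow has {extra} extra step(s); each one should have a stated reason.")
```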

Generate targeted questions like:

Bring those questions into your review doc, or carry them into the prompt templates at /blog/prompt-templates-turning-brainstorms-into-screens-and-flows.

A good prompt is less about “being clever” and more about giving AI the same context you’d give a teammate: what you know, what you don’t, and what decisions you need next.

Use this when you have messy notes from a workshop, call, or whiteboard.

You are my product analyst.

Input notes (raw):
[PASTE NOTES]

Task:
1) Rewrite as a clean, structured summary in plain English.
2) Extract key terms and define them (e.g., “account”, “workspace”, “project”).
3) List any contradictions or duplicates.

Constraints:
- Platform: [iOS/Android/Web]
- Timeline: [date or weeks]
- Must-haves: [list]
- Non-goals: [list]

Output format: headings + short bullets.

This converts “everything we said” into buckets you can turn into screens.

Cluster the items below into 5–8 themes.
For each theme: name it, include the items, and propose a goal statement.

Important:
- If you infer anything, put it under “Assumptions (AI)” and label each A1, A2...
- Also output “Open Questions” we must answer to confirm/deny assumptions.

Items:
[PASTE LIST]

Ask for at least two levels so stakeholders can choose complexity.

Based on these themes and goals:
[PASTE THEMES/GOALS]

Create:
1) An initial screen list grouped by area (IA draft).
2) Two user flow options:
   - Option A: simplest viable flow
   - Option B: advanced flow with power-user paths
3) For each option: entry points, success end state, and failure/edge paths.
4) Output an “Open Questions” list for the next meeting.

Constraints:
- Platform: [ ]
- Must-haves: [ ]
- Compliance/permissions: [ ]

If you reuse the same templates, your team will start producing inputs in a consistent format—which makes the AI outputs easier to compare and iterate.
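If the templates live in a shared file or script, a small filler can refuse to produce a prompt while any placeholder is still empty, which catches the common "forgot to set the platform" mistake. A minimal sketch, assuming the square-bracket slots from the templates above; the abridged `TEMPLATE` string and the `fill_template` helper are hypothetical.

```python
import re

# Abridged copy of the first template; square-bracket slots are placeholders.
TEMPLATE = """You are my product analyst.

Input notes (raw):
[PASTE NOTES]

Constraints:
- Platform: [iOS/Android/Web]
- Timeline: [date or weeks]
"""

SLOT = re.compile(r"\[([^\]]+)\]")


def fill_template(template: str, values: dict[str, str]) -> str:
    """Fill every [SLOT] in one pass; refuse to return a prompt with empty slots."""
    missing = [slot for slot in SLOT.findall(template) if slot not in values]
    if missing:
        raise ValueError(f"Unfilled placeholders: {missing}")
    # A single re.sub pass means pasted text containing brackets is never re-scanned.
    return SLOT.sub(lambda m: values[m.group(1)], template)


prompt = fill_template(TEMPLATE, {
    "PASTE NOTES": "- users want dark mode [maybe v2]",
    "iOS/Android/Web": "Web",
    "date or weeks": "6 weeks",
})
print(prompt)
```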

If your end goal is not just planning but shipping, it helps to connect these artifacts (screens, flows, and logic) to implementation. Koder.ai is a vibe-coding platform that can take a structured plan and help you move from “draft flows” to working web, backend, or mobile apps via chat—especially when you treat the AI output as a reviewable spec first, then generate incrementally. Features like planning mode, snapshots, and rollback can be useful when you’re iterating on flows and logic and want to keep a clear history of what changed.

AI is great at accelerating structure—turning messy notes into draft screens, rules, and flows. But it will also confidently fill in gaps when information is missing. The safest mindset is simple: AI proposes, your team decides.

Most problems come from hidden assumptions. AI may:

Treat every output as a hypothesis—especially anything that sounds like a requirement (“Users will…”, “The system should…”).

When brainstorming with AI, don’t paste:

Instead, anonymize and summarize (“User A”, “Enterprise customer”, “Refund scenario”) and keep sensitive context in your team docs.
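A small pre-processing step can handle the most obvious cases before notes are pasted into a prompt. This is a minimal sketch with crude regular expressions: it will miss many formats and over-match others (the phone pattern also masks some dates), so treat it as a safety net, not a guarantee. The `anonymize` function and the sample note are hypothetical.

```python
import re

# Crude patterns; real PII detection needs far more than this.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def anonymize(text: str, names: list[str]) -> str:
    """Mask emails and phone numbers, then replace known names with 'User A', 'User B', ..."""
    # Contact details first, so a name inside an email address can't leave a partial match.
    text = EMAIL.sub("[email]", text)
    text = PHONE.sub("[phone]", text)
    for i, name in enumerate(names):
        text = re.sub(rf"\b{re.escape(name)}\b", f"User {chr(ord('A') + i)}", text)
    return text


note = "Maria Lopez (maria@acme.com, +1 415 555 0100) asked for a refund."
print(anonymize(note, ["Maria Lopez"]))
# User A ([email], [phone]) asked for a refund.
```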

Assign a clear owner for the flow and logic (often the PM or designer). Use AI drafts to speed up writing, but store decisions in your canonical place (PRD, spec, or ticketing system). If you want, link supporting docs with relative links like /blog/flow-walkthrough-checklist.

A lightweight checklist prevents “pretty but wrong” outputs:

A good AI-assisted flow is:

If it doesn’t meet these criteria, prompt again—using your corrections as the new input.

Screens are the individual views a user interacts with (pages, modals, forms). A useful screen definition includes:

If you can’t describe what the user is trying to accomplish on the screen, it’s usually not a real screen yet—just a label.

A flow is the step-by-step path a user takes to reach a goal, typically across multiple screens. Start with:

Then write a numbered happy path, and only after that add branches (skip, edit, cancel, retry).

Logic is the rules and decisions that determine what the system allows and what the user sees. Common categories include:

Early input is usually scattered and inconsistent (notes, chats, sketches, last-minute ideas), so it contains:

Without structure, teams defer decisions until design/dev, which increases rework when gaps surface later.

Yes—AI is particularly good at a first “cleanup pass”:

Best practice: keep the original notes, and treat the AI version as an editable draft you review and correct.

AI can cluster similar items into themes (like sorting sticky notes) and help you:

Human review matters: don’t auto-merge items unless the team confirms they’re truly the same requirement.

Turn clusters into a draft information architecture (IA) by asking for:

A good IA draft reveals scope early and surfaces forgotten screens like empty states, error states, settings, and help/support.

Use a goal-first prompt:

This keeps flows implementable and prevents “everything flows” that collapse under their own scope.

Translate the flow into reviewable logic by asking for:

Formatting it as “Decision → Outcome” keeps it readable for non-technical stakeholders.

Use AI to produce “views” of the same master plan, but keep one source of truth:

This prevents drift where different people follow different AI-generated versions.

If a flow says where users go, logic explains why and what happens when it fails.