May 29, 2025·8 min

How AI Tools Help You Iterate Faster with Better Feedback

Learn how AI tools speed up iteration by gathering feedback, spotting issues, suggesting improvements, and helping teams test, measure, and refine work.


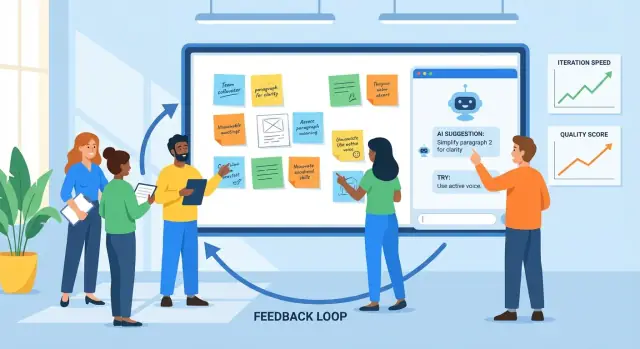

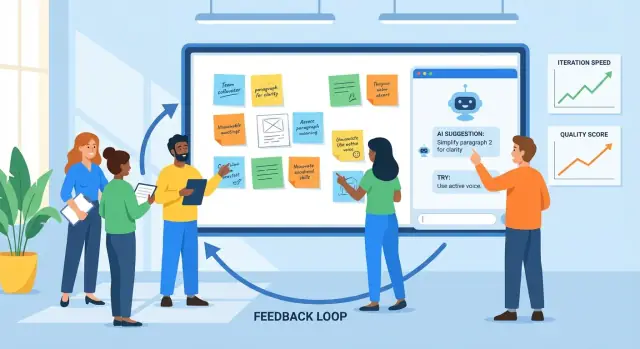

Iteration is the practice of making something, getting feedback, improving it, and repeating the cycle. You see it in product design (ship a feature, watch usage, refine), marketing (test a message, learn, rewrite), and writing (draft, review, edit).

Feedback is any signal that tells you what’s working and what isn’t: user comments, support tickets, bug reports, survey answers, performance metrics, stakeholder notes—even your own gut-check after using the thing yourself. Improvement is what you change based on those signals, from small tweaks to larger redesigns.

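Shorter feedback cycles usually lead to better outcomes for two reasons: you catch misunderstandings and defects early, when they’re cheapest to fix, and you replace debate without evidence with learning from real feedback (usage, tickets, tests).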

A good iteration rhythm isn’t “move fast and break things.” It’s “move in small steps and learn quickly.”

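AI is useful inside the loop when there’s a lot of information and you need help processing it. It can summarize long feedback, group it into recurring themes, spot issues in drafts, and suggest improvements or alternative versions.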

But AI can’t replace the core decisions. It doesn’t know your business goals, legal constraints, or what “good” means for your users unless you define it. It may confidently suggest changes that are off-brand, risky, or based on wrong assumptions.

Set expectations clearly: AI supports judgment. Your team still chooses what to prioritize, what to change, what success looks like—and validates improvements with real users and real data.

Iteration is easier when everyone follows the same loop and knows what “done” looks like. A practical model is:

draft → feedback → revise → check → ship

Teams often get stuck because one step is slow (reviews), messy (feedback scattered across tools), or ambiguous (what exactly should change?). Used deliberately, AI can reduce friction at each point.

The goal isn’t perfection; it’s a solid first version that others can react to. An AI assistant can help you outline, generate alternatives, or fill gaps so you reach “reviewable” faster.

Where it helps most: turning a rough brief into a structured draft, and producing multiple options (e.g., three headlines, two onboarding flows) to compare.

Feedback usually arrives as long comments, chat threads, call notes, and support tickets. AI is useful for:

The bottleneck you’re removing: slow reading and inconsistent interpretation of what reviewers meant.

This is where teams lose time to rework: unclear feedback leads to edits that don’t satisfy the reviewer, and the loop repeats. AI can suggest concrete edits, propose revised copy, or generate a second version that explicitly addresses the top feedback themes.

Before release, use AI as a second pair of eyes: does the new version introduce contradictions, missing steps, broken requirements, or tone drift? The goal isn’t to “approve” the work; it’s to catch obvious issues early.

Iteration speeds up when changes live in one place: a ticket, doc, or PR description that records (1) the feedback summary, (2) the decisions, and (3) what changed.

AI can help maintain that “single source of truth” by drafting update notes and keeping acceptance criteria aligned with the latest decisions. In teams that build and ship software directly (not just docs), platforms like Koder.ai can also shorten this step by keeping planning, implementation, and deployment tightly connected—so the “what changed” narrative stays close to the actual release.

AI can only improve what you feed it. The good news is that most teams already have plenty of feedback—just spread across different places and written in different styles. Your job is to collect it consistently so AI can summarize it, spot patterns, and help you decide what to change next.

AI is strongest with messy, text-heavy inputs such as support tickets, app reviews, survey comments, sales and call notes, and chat threads.

You don’t need perfect formatting. What matters is capturing the original words and a small amount of metadata (date, product area, plan, etc.).
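To make “original words plus a little metadata” concrete, here is a minimal Python sketch of one captured feedback record. The class and field names (`FeedbackItem`, `source`, `product_area`, `plan`) are hypothetical; use whatever fields your team already tracks.

```python
from dataclasses import dataclass, asdict
from datetime import date


@dataclass(frozen=True)
class FeedbackItem:
    """One piece of raw feedback plus a little metadata (field names are hypothetical)."""
    text: str              # the user's original words, unedited
    received: date         # when the feedback arrived
    source: str            # e.g. "support_ticket", "survey", "app_review"
    product_area: str      # e.g. "billing", "onboarding"
    plan: str = "unknown"  # plan tier, if known


item = FeedbackItem(
    text="The setup wizard skipped a step and I wasn't sure what to do next.",
    received=date(2025, 5, 29),
    source="support_ticket",
    product_area="onboarding",
)

# A plain dict is easy to export; convert the date to text before writing JSON.
record = asdict(item)
print(record["product_area"], record["plan"])
```

Keeping `text` verbatim matters more than the exact schema: summaries can always be regenerated, but lost wording cannot.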

Once collected, AI can cluster feedback into themes—billing confusion, onboarding friction, missing integrations, slow performance—and show what repeats most often. This matters because the loudest comment isn’t always the most common problem.
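Once items carry a theme label, counting what repeats is the easy part. The sketch below assumes the AI clustering step has already attached a theme to each item; the feedback lines and theme names are made-up examples.

```python
from collections import Counter

# Hypothetical output of an AI clustering step: (feedback text, assigned theme).
labeled = [
    ("Where do I update my card?", "billing confusion"),
    ("Setup wizard skipped a step", "onboarding friction"),
    ("Invoice total looks wrong", "billing confusion"),
    ("Need a Jira integration", "missing integrations"),
    ("Charged twice this month?!", "billing confusion"),
    ("Dashboard takes 10s to load", "slow performance"),
]

# Count how many items fall under each theme.
theme_counts = Counter(theme for _, theme in labeled)

# most_common() sorts by count; ties keep the order in which themes first appeared.
for theme, count in theme_counts.most_common():
    print(f"{theme}: {count}")
```

Ranking by count is what surfaces the common problem rather than the loudest one; a single angry ticket still counts as one.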

A practical approach is to ask AI for:

Feedback without context can lead to generic conclusions. Attach lightweight context alongside each item, such as:

Even a few consistent fields make AI’s grouping and summaries far more actionable.

Before analysis, redact sensitive information: names, emails, phone numbers, addresses, payment details, and anything confidential in call notes. Prefer data minimization—share only what’s needed for the task—and store raw exports securely. If you’re using third-party tools, confirm your team’s policy on retention and training, and restrict access to the dataset.
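A rough first pass at redaction can be automated before anything leaves your systems. This Python sketch masks likely email addresses and phone numbers with regular expressions. The patterns are assumptions: they will miss some formats, may also mask long digit runs such as dates, and do not detect names or street addresses, so a human check is still needed.

```python
import re

# Approximate patterns: common email and phone formats only.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")  # may also catch dates or long IDs


def redact(text: str) -> str:
    """Replace likely emails and phone numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)  # emails first, so their digits aren't read as phones
    text = PHONE_RE.sub("[PHONE]", text)
    return text


print(redact("Email jane.doe@example.com or call +1 (555) 123-4567."))
```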

Raw feedback is usually a pile of mismatched inputs: support tickets, app reviews, survey comments, sales notes, and Slack threads. AI is useful here because it can read “messy” language at scale and help you turn it into a short list of themes you can actually work on.

Start by feeding AI a batch of feedback (with sensitive data removed) and ask it to group items into consistent categories such as onboarding, performance, pricing, UI confusion, bugs, and feature requests. The goal isn’t perfect taxonomy—it’s a shared map your team can use.

A practical output looks like:

Once feedback is grouped, ask AI to propose a priority score using a rubric you can review:

You can keep it lightweight (High/Med/Low) or numeric (1–5). The key is that AI drafts the first pass and humans confirm assumptions.
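A numeric rubric can be as small as a few 1–5 factors combined into one score. The factors here (`frequency`, `impact`, `effort`) and the equal weighting are assumptions for illustration, not a standard. The point is that the formula is visible, so humans can challenge the inputs AI proposed.

```python
from dataclasses import dataclass


@dataclass
class ThemeScore:
    theme: str
    frequency: int  # 1-5: how often the theme shows up (assumed factor)
    impact: int     # 1-5: how much it hurts users or the business (assumed factor)
    effort: int     # 1-5: how costly a fix looks, higher = harder (assumed factor)

    def priority(self) -> int:
        """Equal-weight average on a 1-5 scale; cheap fixes score higher via (6 - effort)."""
        return round((self.frequency + self.impact + (6 - self.effort)) / 3)

    def label(self) -> str:
        """Map the numeric score onto the lightweight High/Med/Low scale."""
        p = self.priority()
        return "High" if p >= 4 else "Med" if p == 3 else "Low"


# Scores an AI might draft; a person confirms or corrects each input.
themes = [
    ThemeScore("billing confusion", frequency=5, impact=4, effort=2),
    ThemeScore("missing integrations", frequency=2, impact=3, effort=5),
    ThemeScore("slow performance", frequency=3, impact=3, effort=3),
]
for t in sorted(themes, key=lambda t: t.priority(), reverse=True):
    print(t.theme, t.priority(), t.label())
```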

Summaries get dangerous when they erase the “why.” A useful pattern is: theme summary + 2–4 representative quotes. For example:

“I connected Stripe but nothing changed—did it sync?”

“The setup wizard skipped a step and I wasn’t sure what to do next.”

Quotes preserve emotional tone and context—and they prevent the team from treating every issue as identical.

AI can overweight dramatic language or repeat commenters if you don’t guide it. Ask it to separate:

Then sanity-check against usage data and segmentation. A complaint from power users may matter a lot—or it may reflect a niche workflow. AI can help you see patterns, but it can’t decide what “represents your users” without your context.
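One way to stop a single loud commenter from dominating is to report raw mentions next to the number of distinct people behind them. A minimal sketch, assuming each labeled item carries an author ID; the IDs, themes, and function name are hypothetical.

```python
from collections import defaultdict

# Hypothetical (author_id, theme) pairs after AI labeling.
mentions = [
    ("u1", "export bugs"), ("u1", "export bugs"), ("u1", "export bugs"),
    ("u1", "export bugs"), ("u2", "onboarding"), ("u3", "onboarding"),
    ("u4", "onboarding"),
]


def breadth_by_theme(pairs):
    """Return {theme: (raw_mentions, unique_authors)}."""
    raw = defaultdict(int)
    authors = defaultdict(set)
    for author, theme in pairs:
        raw[theme] += 1
        authors[theme].add(author)
    return {theme: (raw[theme], len(authors[theme])) for theme in raw}


# Sort by how many different people raised each theme, not by volume.
stats = breadth_by_theme(mentions)
for theme, (count, people) in sorted(stats.items(), key=lambda kv: -kv[1][1]):
    print(f"{theme}: {count} mentions from {people} people")
```

Here “export bugs” has more mentions, but all of them come from one person, while “onboarding” comes from three. That gap is the thing to check against usage data and segmentation.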

A useful way to think about an AI tool is as a version generator. Instead of asking for a single “best” response, ask for several plausible drafts you can compare, mix, and refine. That mindset keeps you in control and makes iteration faster.

This is especially powerful when you’re iterating on product surfaces (onboarding flows, UI copy, feature spec wording). For example, if you’re building an internal tool or a simple customer app in Koder.ai, you can use the same “generate multiple versions” approach to explore different screens, flows, and requirements in Planning Mode before you commit—then rely on snapshots and rollback to keep rapid changes safe.

If you request “write this for me,” you’ll often get generic output. Better: define boundaries so the AI can explore options within them.

Try specifying:

With constraints, you can generate “Version A: concise,” “Version B: more empathetic,” “Version C: more specific,” without losing accuracy.

Ask for 3–5 alternatives in one go and make the differences explicit: “Each version should use a different structure and opening line.” This creates real contrast, which helps you spot what’s missing and what resonates.
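The “3–5 alternatives with explicit differences” request can be captured in a small prompt builder, so every run uses the same boundaries. This is a sketch under assumptions: the function name, the Task/Audience/Constraints layout, and the exact wording are illustrative, and no particular AI tool or API is implied.

```python
from string import ascii_uppercase


def build_variants_prompt(task: str, audience: str, constraints: list[str], n: int = 3) -> str:
    """Ask for n explicitly different versions inside fixed boundaries."""
    if not 3 <= n <= 5:
        # 3-5 options, as suggested above: real contrast without swamping reviewers.
        raise ValueError("ask for between 3 and 5 alternatives")
    labels = ", ".join(f"Version {ascii_uppercase[i]}" for i in range(n))
    lines = [
        f"Task: {task}",
        f"Audience: {audience}",
        "Constraints:",
        *(f"- {c}" for c in constraints),
        f"Write {n} versions. Each version should use a different structure and opening line.",
        f"Label them {labels}, and add one line per version explaining how it differs.",
    ]
    return "\n".join(lines)


print(build_variants_prompt(
    task="Rewrite the onboarding email",
    audience="new trial users, non-technical",
    constraints=["under 120 words", "keep all product facts unchanged"],
))
```

Keeping constraints as a list makes it easy to reuse the same boundaries across runs and to see which one a weak version ignored.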

A practical workflow:

Before you send a draft for review or testing, check that it has:

Used this way, AI doesn’t replace judgment—it speeds up the search for a better version.

Before you ship a draft—whether it’s a product spec, release note, help article, or marketing page—an AI tool can act as a fast “first reviewer.” The goal isn’t to replace human judgment; it’s to surface obvious issues early so your team spends time on the hard decisions, not basic cleanup.

AI reviews are especially useful for:

Paste your draft and ask for a specific type of critique. For example:

A quick way to broaden perspective is to ask the model to review from different roles:

AI can confidently critique wording while being wrong about product details. Treat factual items—pricing, feature availability, security claims, timelines—as “needs verification.” Keep a habit of marking claims with sources (links to docs, tickets, or decisions) so the final version reflects reality, not a plausible-sounding guess.

Raw feedback is rarely ready to implement. It tends to be emotional (“this feels off”), mixed (“I like it but…”), or underspecified (“make it clearer”). AI can help translate that into work items your team can actually ship—while keeping the original comment attached so you can justify decisions later.

Ask your AI tool to rewrite each piece of feedback using this structure:

Problem → Evidence → Proposed change → Success metric

This forces clarity without “inventing” new requirements.

Example input feedback:

“The checkout page is confusing and takes too long.”

AI-assisted output (edited by you):

Then convert it into a task with boundaries:

Task: Add progress indicator + update button label on checkout.

Out of scope: Changing payment providers, redesigning the entire checkout layout, rewriting all product copy.
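The Problem → Evidence → Proposed change → Success metric structure maps naturally onto a small record that keeps the original comment attached. A minimal sketch with hypothetical names; the filled-in values are illustrative, and the empty `evidence` field shows how a gap gets flagged instead of invented.

```python
from dataclasses import dataclass


@dataclass
class WorkItem:
    """Feedback rewritten as Problem -> Evidence -> Proposed change -> Success metric."""
    problem: str
    evidence: str
    proposed_change: str
    success_metric: str
    original_feedback: str  # kept verbatim so the decision stays traceable

    def is_complete(self) -> bool:
        # A blank field means someone would have to guess; flag it rather than fill it.
        fields = (self.problem, self.evidence, self.proposed_change, self.success_metric)
        return all(f.strip() for f in fields)

    def render(self) -> str:
        return (
            f"Problem: {self.problem}\n"
            f"Evidence: {self.evidence or '(missing: needs data)'}\n"
            f"Proposed change: {self.proposed_change}\n"
            f"Success metric: {self.success_metric}\n"
            f'Original: "{self.original_feedback}"'
        )


item = WorkItem(
    problem="Users can't tell how far along checkout they are",
    evidence="",  # no tickets or analytics attached yet
    proposed_change="Add progress indicator + update button label on checkout",
    success_metric="Checkout completion rate (illustrative)",
    original_feedback="The checkout page is confusing and takes too long.",
)
print(item.is_complete())
print(item.render())
```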

Use AI to draft acceptance criteria, then tighten them:

Always store:

That traceability protects accountability, prevents “AI said so” decisions, and makes future iterations faster because you can see what changed—and why.

Iteration gets real when you test a change against a measurable outcome. AI can help you design small, fast experiments—without turning every improvement into a week-long project.

A practical template is:

You can ask an AI tool to propose 3–5 candidate hypotheses based on your feedback themes (e.g., “users say setup feels confusing”), then rewrite them into testable statements with clear metrics.

Email subject lines (metric: open rate):

Onboarding message (metric: completion rate of step 1):

UI microcopy on a button (metric: click-through rate):

AI is useful here because it can produce multiple plausible variants quickly—different tones, lengths, and value propositions—so you can choose one clear change to test.

Speed is great, but keep experiments readable:

AI can tell you what “sounds better,” but your users decide. Use AI to:

That way each test teaches you something—even when the new version loses.

Iteration only works when you can tell whether the last change actually helped. AI can speed up the “measurement to learning” step, but it can’t replace discipline: clear metrics, clean comparisons, and written decisions.

Pick a small set of numbers you’ll check every cycle, grouped by what you’re trying to improve:

The key is consistency: if you change your metric definitions every sprint, the numbers won’t teach you anything.
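One way to keep definitions from drifting is to write each metric down once, as code, and compute every cycle from the same definitions. The formulas below are common definitions of the rates mentioned in this article, assumed here rather than taken from any analytics product; the counts are made up.

```python
# Defined once, reused every cycle, so numbers stay comparable over time.
METRICS = {
    "open_rate": lambda d: d["opens"] / d["delivered"],
    "step1_completion_rate": lambda d: d["step1_completed"] / d["signups"],
    "click_through_rate": lambda d: d["clicks"] / d["impressions"],
}


def compute_metrics(counts: dict) -> dict:
    """Apply every defined metric whose inputs are present and non-zero."""
    out = {}
    for name, formula in METRICS.items():
        try:
            out[name] = round(formula(counts), 4)
        except (KeyError, ZeroDivisionError):
            continue  # this cycle's data can't support the metric; skip, don't redefine
    return out


print(compute_metrics({"opens": 420, "delivered": 2000, "clicks": 35, "impressions": 1400}))
```

If a definition genuinely has to change, changing it in one place (and noting the date) makes the break in the series visible instead of silent.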

Once you have experiment readouts, dashboards, or exported CSVs, AI is useful for turning them into a narrative:

A practical prompt: paste your results table and ask the assistant to produce (1) a one-paragraph summary, (2) the biggest segment differences, and (3) follow-up questions to validate.

AI can make results sound definitive even when they aren’t. You still need to sanity-check:
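Part of that sanity check is asking whether a difference between two rates could just be noise. One standard way to do this, swapped in here rather than prescribed by the article, is a two-proportion z-test. The sketch assumes independent users, a yes/no outcome, and reasonably large samples; the readout numbers are hypothetical.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the gap between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # overall rate if there were no real difference
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # everyone or no one converted: nothing to compare
    z = (p_b - p_a) / se
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail probability of the standard normal
    return z, p_value


# Hypothetical readout: 1,000 users per variant, 20% vs 23% step-1 completion.
z, p = two_proportion_z_test(200, 1000, 230, 1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With 1,000 users per variant, 20% versus 23% gives a p-value of roughly 0.10, so that lift alone is weak evidence; the same rates over 10,000 users per variant would be far more convincing. The test says nothing about sample mix, seasonality, or whether you picked the right metric.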

After each cycle, write a short entry:

AI can draft the entry, but your team should approve the conclusion. Over time, this log becomes your memory—so you stop repeating the same experiments and start compounding wins.
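A log entry can be a fixed set of fields with an explicit approval slot, so AI-drafted conclusions stay marked as drafts until someone signs off. The field names, the Markdown line format, and the example values are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class LogEntry:
    """One iteration-log entry (field names are hypothetical)."""
    day: date
    change: str
    metric: str
    result: str
    decision: str
    approved_by: str = ""  # empty until a person on the team signs off

    def to_markdown(self) -> str:
        status = f"approved by {self.approved_by}" if self.approved_by else "DRAFT, awaiting approval"
        return (
            f"- {self.day.isoformat()} | {self.change} | {self.metric}: {self.result}"
            f" | decision: {self.decision} | {status}"
        )


entry = LogEntry(
    day=date(2025, 5, 29),
    change="Added progress indicator to checkout",
    metric="checkout completion rate",
    result="no clear difference yet",
    decision="keep running one more week",
)
print(entry.to_markdown())  # AI may draft this line...
entry.approved_by = "JP"    # ...but a named person confirms the conclusion
print(entry.to_markdown())
```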

Speed is nice, but consistency is what makes iteration compound. The goal is to turn “we should improve this” into a routine your team can run without heroics.

A scalable loop doesn’t need heavy process. A few small habits beat a complicated system:

Treat prompts like assets. Store them in a shared folder and version them like other work.

Maintain a small library:

A simple convention helps: “Task + Audience + Constraints” (e.g., “Release notes — non-technical — 120 words — include risks”).

For anything that affects trust or liability—pricing, legal wording, medical or financial guidance—use AI to draft and flag risks, but require a named approver before publishing. Make that step explicit so it doesn’t get skipped under time pressure.
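That approval step can also be enforced in whatever script or checklist publishes content. A sketch under stated assumptions: drafts are already tagged with topics somewhere upstream, and the topic names, exception, and function are hypothetical.

```python
from typing import Optional

# Topics the rule above singles out for a named approver.
HIGH_RISK_TOPICS = {"pricing", "legal", "medical", "financial"}


class ApprovalRequired(Exception):
    """Raised when a risky draft has no named approver."""


def check_publishable(topics: set, approver: Optional[str]) -> None:
    """Block publishing if a draft touches a high-risk topic and no person has signed off."""
    risky = set(topics) & HIGH_RISK_TOPICS
    if risky and not (approver and approver.strip()):
        raise ApprovalRequired("Needs a named approver for: " + ", ".join(sorted(risky)))


check_publishable({"onboarding"}, approver=None)  # passes: low-risk copy
check_publishable({"pricing"}, approver="Dana")   # passes: someone owns the decision
```

Failing loudly is the design choice here: a skipped approval becomes an error, not something noticed after publishing.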

Fast iteration creates messy files unless you label clearly. Use a predictable pattern like:

FeatureOrDoc_Scope_V#_YYYY-MM-DD_Owner

Example: OnboardingEmail_NewTrial_V3_2025-12-26_JP.

When AI generates options, keep them grouped under the same version (V3A, V3B) so everyone knows what was compared and what actually shipped.
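If files are created by scripts or tools, a tiny helper keeps the pattern consistent and lets you check names automatically. A minimal Python sketch, assuming owner initials are letters only and that AI-generated options use a single capital-letter suffix (V3A, V3B); the function names are hypothetical.

```python
import re
from datetime import date

# FeatureOrDoc_Scope_V#_YYYY-MM-DD_Owner, with an optional option letter after the version.
NAME_RE = re.compile(
    r"^(?P<item>[A-Za-z0-9]+)_(?P<scope>[A-Za-z0-9]+)_V(?P<version>\d+)(?P<option>[A-Z]?)"
    r"_(?P<day>\d{4}-\d{2}-\d{2})_(?P<owner>[A-Za-z]+)$"
)


def make_name(item: str, scope: str, version: int, day: date, owner: str, option: str = "") -> str:
    """Build a name that follows the convention."""
    return f"{item}_{scope}_V{version}{option}_{day.isoformat()}_{owner}"


def parse_name(name: str) -> dict:
    """Split a name into its parts, or fail if it breaks the convention."""
    m = NAME_RE.match(name)
    if m is None:
        raise ValueError(f"Does not follow the naming convention: {name}")
    return m.groupdict()


print(make_name("OnboardingEmail", "NewTrial", 3, date(2025, 12, 26), "JP"))
print(parse_name("OnboardingEmail_NewTrial_V3B_2025-12-26_JP"))
```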

AI can speed up iteration, but it can also speed up mistakes. Treat it like a powerful teammate: helpful, fast, and sometimes confidently wrong.

Over-trusting AI output. Models can produce plausible text, summaries, or “insights” that don’t match reality. Build a habit of checking anything that could affect customers, budgets, or decisions.

Vague prompts lead to vague work. If your input is “make this better,” you’ll get generic edits. Specify audience, goal, constraints, and what “better” means (shorter, clearer, on-brand, fewer support tickets, higher conversion, etc.).

No metrics, no learning. Iteration without measurement is just change. Decide upfront what you’ll track (activation rate, time-to-first-value, churn, NPS themes, error rate) and compare before/after.

Don’t paste personal, customer, or confidential information into tools unless your organization explicitly allows it and you understand retention/training policies.

Practical rule: share the minimum needed.

AI may invent numbers, citations, feature details, or user quotes. When accuracy matters:

Before publishing an AI-assisted change, do a quick pass:

Used this way, AI stays a multiplier for good judgment—not a replacement for it.

Iteration is a repeatable cycle of making a version, getting signals about what works, improving it, and repeating.

A practical loop is: draft → feedback → revise → check → ship—with clear decisions and metrics each time.

Short cycles help you catch misunderstandings and defects early, when they’re cheapest to fix.

They also reduce “debate without evidence” by forcing learning from real feedback (usage, tickets, tests) instead of assumptions.

AI is best when there’s lots of messy information and you need help processing it.

It can:

AI doesn’t know your goals, constraints, or definition of “good” unless you specify them.

It can also produce plausible-but-wrong suggestions, so the team still needs to:

Give it a “reviewable” brief with constraints so it can generate usable versions.

Include:

Then ask for 3–5 alternatives so you can compare options instead of accepting a single draft.

AI performs well on text-heavy inputs such as:

Add lightweight metadata (date, product area, user type, plan tier) so summaries stay actionable.

Ask for:

Then sanity-check the output against segmentation and usage data so loud comments don’t outweigh common issues.

Use a consistent structure like:

Keep the original feedback attached so decisions are traceable and you avoid “AI said so” justification.

Yes—if you use it to generate versions and draft testable hypotheses, not to “pick winners.”

Keep experiments interpretable:

AI can also draft a results summary and suggest follow-up questions based on segment differences.

Start with data minimization and redaction.

Practical safeguards: