Mar 29, 2025·8 min

Building a Web App to Run Content Moderation Workflows

Learn how to design and build a web app for content moderation: queues, roles, policies, escalation, audit logs, analytics, and safe integrations.


Before you design a content moderation workflow, decide what you’re actually moderating and what “good” looks like. A clear scope prevents your moderation queue from filling with edge cases, duplicates, and requests that don’t belong there.

Write down every content type that can create risk or user harm. Common examples include user-generated text (comments, posts, reviews), images, video, livestreams, profile fields (names, bios, avatars), direct messages, community groups, and marketplace listings (titles, descriptions, photos, pricing).

Also note sources: user submissions, automated imports, edits to existing items, and reports from other users. This avoids building a system that only works for “new posts” while missing edits, re-uploads, or DM abuse.

Most teams balance four goals:

Be explicit about which goal is primary in each area. For example, high-severity abuse may prioritize speed over perfect consistency.

List the full set of outcomes your product requires: approve, reject/remove, edit/redact, label/age-gate, restrict visibility, place under review, escalate to a lead, and account-level actions like warnings, temporary locks, or bans.

Define measurable targets: median and 95th-percentile review time, backlog size, reversal rate on appeal, policy accuracy from QA sampling, and the percentage of high-severity items handled within an SLA.
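A minimal sketch of how several of these targets could be computed, assuming review times are already collected in minutes per item. The function and parameter names are hypothetical. Nearest-rank is only one of several common percentile definitions.

```python
import math
from statistics import median


def percentile(values, pct):
    """Nearest-rank percentile (pct in 0-100) of a non-empty list."""
    ordered = sorted(values)
    # Smallest value such that at least pct% of observations are <= it.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def review_time_metrics(review_minutes, high_severity_minutes, sla_minutes,
                        appeals, reversals):
    """Summarize one reporting window; every input is a hypothetical field."""
    within_sla = sum(1 for m in high_severity_minutes if m <= sla_minutes)
    return {
        "median_min": median(review_minutes),
        "p95_min": percentile(review_minutes, 95),
        "high_sev_within_sla_pct": 100 * within_sla / len(high_severity_minutes),
        # Share of appealed decisions that were overturned.
        "appeal_reversal_pct": 100 * reversals / appeals if appeals else 0.0,
    }
```

Computing these per queue, rather than globally, keeps a fast low-risk queue from hiding a slow high-severity one.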

Include moderators, team leads, policy, support, engineering, and legal. Misalignment here causes rework later—especially around what “escalation” means and who owns final decisions.

Before you build screens and queues, sketch the full lifecycle of a single piece of content. A clear workflow prevents “mystery states” that confuse reviewers, break notifications, and make audits painful.

Start with a simple, end-to-end state model you can put in a diagram and in your database:

Submitted → Queued → In review → Decided → Notified → Archived

Keep states mutually exclusive, and define which transitions are allowed (and by whom). For example: “Queued” can move to “In review” only when assigned, and “Decided” should be immutable except through an appeal flow.
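One way to enforce this in application code is a transition table that rejects anything not explicitly allowed. This is a sketch, not a full workflow engine. The `State` enum, `ALLOWED` table, and `transition` helper are hypothetical names, and role checks are reduced to "an assignee is required to start review."

```python
from enum import Enum


class State(Enum):
    SUBMITTED = "submitted"
    QUEUED = "queued"
    IN_REVIEW = "in_review"
    DECIDED = "decided"
    NOTIFIED = "notified"
    ARCHIVED = "archived"


# Allowed transitions. IN_REVIEW -> QUEUED covers a released or timed-out
# claim (an assumption). DECIDED never returns to review here; appeals are
# assumed to be handled as separate review records.
ALLOWED = {
    State.SUBMITTED: {State.QUEUED},
    State.QUEUED: {State.IN_REVIEW},
    State.IN_REVIEW: {State.DECIDED, State.QUEUED},
    State.DECIDED: {State.NOTIFIED},
    State.NOTIFIED: {State.ARCHIVED},
    State.ARCHIVED: set(),
}


class TransitionError(Exception):
    pass


def transition(item, new_state, assignee=None):
    """Move an item (a plain dict here) to new_state or raise TransitionError."""
    current = item["state"]
    if new_state not in ALLOWED[current]:
        raise TransitionError(f"{current.name} -> {new_state.name} is not allowed")
    # "Queued" may only move to "In review" once someone is assigned.
    if new_state is State.IN_REVIEW and assignee is None:
        raise TransitionError("an assignee is required to start review")
    item["state"] = new_state
    if new_state is State.IN_REVIEW:
        item["assignee"] = assignee
    elif new_state is State.QUEUED:
        item["assignee"] = None
    return item
```

Keeping the table as data makes it easy to render the same diagram for reviewers and to test every allowed and forbidden transition.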

Automated classifiers, keyword matches, rate limits, and user reports should be treated as signals, not decisions. A “human-in-the-loop” design keeps the system honest:

This separation also makes it easier to improve models later without rewriting policy logic.
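A minimal sketch of that separation, assuming each automated source emits a score between 0 and 1. Signals only choose a queue and a priority, and a `Decision` cannot be created without a human reviewer. The queue names, the 0.9 threshold, and the types are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    source: str   # e.g. "classifier", "keyword", "user_report"
    label: str    # e.g. "spam", "harassment"
    score: float  # 0.0-1.0 confidence reported by the source


@dataclass
class Decision:
    outcome: str
    reviewer_id: str  # always a human actor
    signals: tuple    # the signals shown to the reviewer, kept for audit


def triage(signals, high_risk_threshold=0.9):
    """Signals pick a queue and a priority; they never produce a Decision."""
    if not signals:
        return {"queue": "standard", "priority": 0, "suggested_label": None}
    top = max(signals, key=lambda s: s.score)
    queue = "high_risk" if top.score >= high_risk_threshold else "standard"
    return {"queue": queue, "priority": round(top.score * 100),
            "suggested_label": top.label}


def decide(outcome, reviewer_id, signals):
    """Only a named human reviewer can turn signals into an outcome."""
    if not reviewer_id:
        raise ValueError("a human reviewer must make the final decision")
    return Decision(outcome=outcome, reviewer_id=reviewer_id, signals=tuple(signals))
```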

Decisions will be challenged. Add first-class flows for:

Model appeals as new review events rather than editing history. That way you can tell the full story of what happened.
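A sketch of appeals as new events, assuming an in-memory list stands in for an append-only table. `ReviewEvent`, `record`, and `current_outcome` are hypothetical names. An appeal adds a linked record instead of overwriting the original decision.

```python
import itertools
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional

_next_id = itertools.count(1)


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class ReviewEvent:
    event_id: int
    subject_id: str
    kind: str                 # "decision" or "appeal_decision"
    outcome: str
    actor_id: str
    parent_id: Optional[int]  # an appeal points at the decision it revisits
    at: datetime


def record(history: List[ReviewEvent], **fields) -> ReviewEvent:
    """Append a new event; existing events are never edited or removed."""
    event = ReviewEvent(event_id=next(_next_id), at=datetime.now(timezone.utc), **fields)
    history.append(event)
    return event


def current_outcome(history: List[ReviewEvent], subject_id: str) -> Optional[str]:
    """The latest event wins, while earlier events remain for the full story."""
    events = [e for e in history if e.subject_id == subject_id]
    return events[-1].outcome if events else None
```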

For audits and disputes, define which steps must be recorded with timestamps and actors:

If you can’t explain a decision later, you should assume it didn’t happen.

A moderation tool lives or dies on access control. If everyone can do everything, you’ll get inconsistent decisions, accidental data exposure, and no clear accountability. Start by defining roles that match how your trust and safety team actually works, then translate them into permissions your app can enforce.

Most teams need a small set of clear roles:

This separation helps avoid “policy changes by accident” and keeps policy governance distinct from day-to-day enforcement.

Implement role-based access control so each role gets only what it needs:

Define permissions by capability (e.g., can_apply_outcome, can_override, can_export_data) rather than by page.

If you later add new features (exports, automations, third-party integrations), you can attach them to permissions without redefining your whole org structure.
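A minimal sketch of capability-based checks, assuming roles are stored as plain strings on the user. The role names and `can_edit_policy` are hypothetical; `can_apply_outcome`, `can_override`, and `can_export_data` are the capability examples named in the text.

```python
# Hypothetical role-to-capability mapping. Capabilities are named after
# actions, not pages, so a new feature only needs a new capability string.
ROLE_CAPABILITIES = {
    "reviewer": {"can_apply_outcome"},
    "lead": {"can_apply_outcome", "can_override"},
    "policy_manager": {"can_edit_policy"},  # policy governance kept separate
    "admin": {"can_apply_outcome", "can_override", "can_export_data"},
}


def has_capability(roles, capability):
    """True if any of the user's roles grants the capability."""
    return any(capability in ROLE_CAPABILITIES.get(role, set()) for role in roles)


def require(roles, capability):
    """Guard to call at the top of every handler that changes state or exposes data."""
    if not has_capability(roles, capability):
        raise PermissionError(f"missing capability: {capability}")
```

Adding an export feature later means granting `can_export_data` to the roles that need it, without touching the role list itself.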

Plan for multiple teams early: language pods, region-based groups, or separate lines for different products. Model teams explicitly, then scope queues, content visibility, and assignments by team. This prevents cross-region review mistakes and keeps workloads measurable per group.

Admins sometimes need to impersonate users to debug access or reproduce a reviewer issue. Treat impersonation as a sensitive action:

For irreversible or high-risk actions, add admin approval (or two-person review). That small friction protects against both mistakes and insider abuse, while keeping routine moderation fast.
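A sketch of two-person approval, assuming high-risk actions are held as pending requests until someone other than the requester approves them. The action names in `HIGH_RISK_ACTIONS` and the `PendingAction` class are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical set of actions that need a second person.
HIGH_RISK_ACTIONS = {"permanent_ban", "bulk_delete", "data_export"}


@dataclass
class PendingAction:
    action: str
    target_id: str
    requested_by: str
    approvals: set = field(default_factory=set)

    def approve(self, approver_id):
        # The requester cannot approve their own request.
        if approver_id == self.requested_by:
            raise PermissionError("requester cannot self-approve")
        self.approvals.add(approver_id)

    def can_execute(self):
        if self.action not in HIGH_RISK_ACTIONS:
            return True  # routine moderation stays fast
        return len(self.approvals) >= 1  # requester plus one other person
```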

Queues are where moderation work becomes manageable. Instead of a single endless list, split work into queues that reflect risk, urgency, and intent—then make it hard for items to fall through the cracks.

Start with a small set of queues that match how your team actually operates:

Keep queues mutually exclusive when possible (an item should have one “home”), and use tags for secondary attributes.

Within each queue, define scoring rules that determine what rises to the top:

Make priorities explainable in the UI (“Why am I seeing this?”) so reviewers trust the ordering.
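A sketch of an explainable score, assuming severity, reach (views), unique reporters, and an SLA deadline are available on each item. The weights and field names are hypothetical placeholders to tune against your own data. The function returns its reasons alongside the score, so the UI can show them.

```python
import math


def priority(item, now_min):
    """Return (score, reasons); reasons feed a "Why am I seeing this?" panel.

    Times are plain minutes to keep the sketch short. All weights are hypothetical.
    """
    reasons = []

    sev = {"low": 10, "medium": 30, "high": 60}[item["severity"]]
    score = sev
    reasons.append(f"severity {item['severity']} (+{sev})")

    # Log scale so one viral post cannot drown out everything else.
    reach = round(10 * math.log10(1 + item["views"]))
    if reach:
        score += reach
        reasons.append(f"{item['views']} views (+{reach})")

    # Unique reporters, capped so coordinated reporting has limited effect.
    reporters = min(item["unique_reporters"], 5) * 5
    if reporters:
        score += reporters
        reasons.append(f"{item['unique_reporters']} unique reporters (+{reporters})")

    minutes_left = item["sla_deadline_min"] - now_min
    if minutes_left <= 0:
        score += 40
        reasons.append("SLA breached (+40)")
    elif minutes_left <= 30:
        score += 20
        reasons.append(f"SLA due in {minutes_left} min (+20)")

    return score, reasons
```

A queue could then be ordered with `sorted(items, key=lambda i: priority(i, now)[0], reverse=True)`, with the reasons list rendered next to each item.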

Use claiming/locking: when a reviewer opens an item, it’s assigned to them and hidden from others. Add a timeout (e.g., 10–20 minutes) so abandoned items return to the queue. Always log claim, release, and completion events.
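A single-process sketch of claiming with a timeout, assuming a 15-minute window and an in-memory queue already sorted by priority. `ClaimQueue` and its methods are hypothetical names.

```python
from datetime import datetime, timedelta

CLAIM_TIMEOUT = timedelta(minutes=15)  # hypothetical; within the 10 to 20 minute range


class ClaimQueue:
    def __init__(self, item_ids):
        self.items = list(item_ids)  # already sorted by priority
        self.claims = {}             # item_id -> (reviewer_id, claimed_at)
        self.done = set()
        self.log = []                # (event, item_id, reviewer_id, at)

    def _expire(self, now: datetime):
        """Return abandoned claims to the queue and log the timeout."""
        for item_id, (reviewer_id, claimed_at) in list(self.claims.items()):
            if now - claimed_at > CLAIM_TIMEOUT:
                del self.claims[item_id]
                self.log.append(("timed_out", item_id, reviewer_id, now))

    def claim_next(self, reviewer_id: str, now: datetime):
        """Assign the highest-priority unclaimed item, hiding it from others."""
        self._expire(now)
        for item_id in self.items:
            if item_id not in self.claims and item_id not in self.done:
                self.claims[item_id] = (reviewer_id, now)
                self.log.append(("claimed", item_id, reviewer_id, now))
                return item_id
        return None

    def release(self, item_id, reviewer_id: str, now: datetime):
        if self.claims.get(item_id, (None, None))[0] == reviewer_id:
            del self.claims[item_id]
            self.log.append(("released", item_id, reviewer_id, now))

    def complete(self, item_id, reviewer_id: str, now: datetime):
        if self.claims.get(item_id, (None, None))[0] != reviewer_id:
            raise PermissionError("item is not claimed by this reviewer")
        del self.claims[item_id]
        self.done.add(item_id)
        self.log.append(("completed", item_id, reviewer_id, now))
```

With several app servers, the claim has to be atomic in the database instead; in PostgreSQL, `SELECT ... FOR UPDATE SKIP LOCKED` is a common building block for this kind of work queue.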

If the system rewards speed, reviewers may pick quick cases and skip hard ones. Counter this by:

The goal is consistent coverage, not just high throughput.

A moderation policy that only exists as a PDF will be interpreted differently by every reviewer. To make decisions consistent (and auditable), translate policy text into structured data and UI choices that your workflow can enforce.

Start by breaking policy into a shared vocabulary reviewers can select from. A useful taxonomy usually includes:

This taxonomy becomes the foundation for queues, escalation, and analytics later.

Instead of asking reviewers to write a decision from scratch each time, provide decision templates tied to taxonomy items. A template can prefill:

Templates make the “happy path” fast, while still allowing exceptions.

Policies change. Store policies as versioned records with effective dates, and record which version was applied for every decision. This prevents confusion when older cases are appealed and ensures you can explain outcomes months later.
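A minimal sketch of versioned policy lookup, assuming each version carries an effective date. `PolicyVersion` and `version_in_effect` are hypothetical names; a decision would store the `policy_code` and `version` returned here.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PolicyVersion:
    policy_code: str
    version: int
    effective_from: date
    text: str


def version_in_effect(versions, policy_code, on):
    """Latest version of policy_code whose effective date is on or before `on`."""
    candidates = [v for v in versions
                  if v.policy_code == policy_code and v.effective_from <= on]
    if not candidates:
        raise LookupError(f"no version of {policy_code} in effect on {on}")
    return max(candidates, key=lambda v: v.effective_from)
```

When an old case is appealed, the stored version tells reviewers which rule text applied at the time, even if the policy has changed since.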

Free text is hard to analyze and easy to forget. Require reviewers to pick one or more structured reasons (from your taxonomy) and optionally add notes. Structured reasons improve appeals handling, QA sampling, and trend reporting—without forcing reviewers to write essays.
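A sketch of server-side validation for structured reasons, assuming the taxonomy is loaded as a set of codes. The codes in `TAXONOMY` are hypothetical examples.

```python
# Hypothetical taxonomy codes; in practice, load these from your policy records.
TAXONOMY = {"HS.2", "SPAM.1", "HARASSMENT.H1", "NUDITY.N3"}


def validate_decision(outcome, reason_codes, notes=""):
    """Require at least one known reason code; notes stay optional."""
    if not reason_codes:
        raise ValueError("pick at least one structured reason")
    unknown = set(reason_codes) - TAXONOMY
    if unknown:
        raise ValueError(f"unknown reason codes: {sorted(unknown)}")
    return {"outcome": outcome,
            "reason_codes": sorted(set(reason_codes)),
            "notes": notes.strip()}
```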

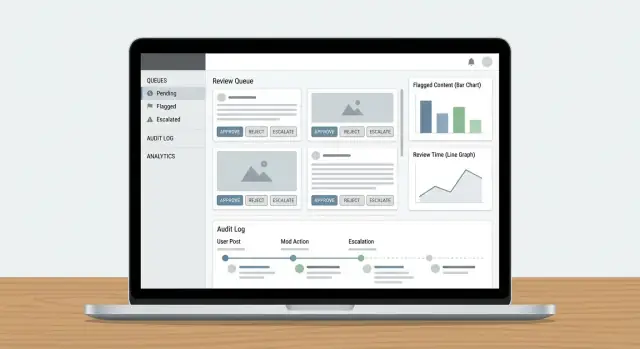

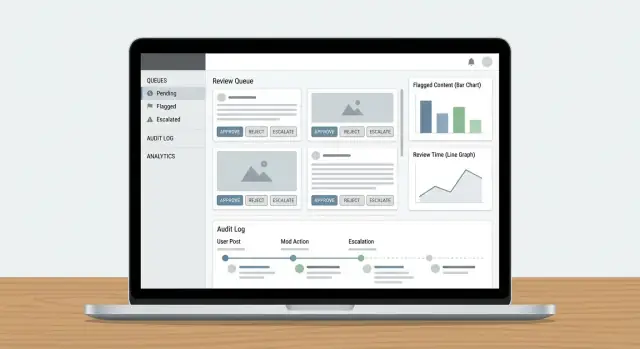

A reviewer dashboard succeeds when it minimizes “hunting” for information and maximizes confident, repeatable decisions. Reviewers should be able to understand what happened, why it matters, and what to do next—without opening five tabs.

Don’t display an isolated post and expect consistent outcomes. Present a compact context panel that answers common questions at a glance:

Keep the default view concise, with expand options for deeper dives. Reviewers should rarely need to leave the dashboard to decide.

Your action bar should match your policy outcomes, not generic CRUD buttons. Common patterns include:

Keep every action visible, make irreversible steps explicit, and ask for confirmation only where it is needed. Capture a short reason code plus optional notes for later audits.

High-volume work demands low friction. Add keyboard shortcuts for the top actions (approve, reject, next item, add label), and display a shortcut cheat sheet inside the UI.

For queues with repetitive work (e.g., obvious spam), support bulk selection with guardrails: show a preview count, require a reason code, and log the batch action.
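A sketch of those guardrails, assuming the UI first calls a preview step and then sends back the count the reviewer confirmed. `preview_bulk`, `apply_bulk`, and the `max_batch` cap are hypothetical.

```python
import uuid


def preview_bulk(item_ids):
    """Show the reviewer how many distinct items the action will touch."""
    return {"count": len(set(item_ids))}


def apply_bulk(item_ids, outcome, reason_code, actor_id, confirmed_count,
               audit_log, max_batch=200):
    ids = sorted(set(item_ids))
    if not reason_code:
        raise ValueError("bulk actions require a reason code")
    if confirmed_count != len(ids):
        raise ValueError("selection changed since preview; confirm again")
    if len(ids) > max_batch:
        raise ValueError(f"batch larger than {max_batch} items")
    # One batch id ties every per-item event back to the same bulk action.
    batch_id = str(uuid.uuid4())
    for item_id in ids:
        audit_log.append({"event": "DECISION_APPLIED", "batch_id": batch_id,
                          "subject_id": item_id, "decision": outcome,
                          "policy_code": reason_code, "actor_id": actor_id})
    return batch_id
```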

Moderation can expose people to harmful material. Add safety defaults:

These choices protect reviewers while keeping decisions accurate and consistent.

Audit logs are your “source of truth” when someone asks: Why was this post removed? Who approved the appeal? Did the model or a human make the final call? Without traceability, investigations turn into guesswork, and reviewer trust drops fast.

For each moderation action, log who did it, what changed, when it happened, and why (policy reason + free-text notes). Just as important: store before/after snapshots of the relevant objects—content text, media hashes, detected signals, labels, and the final outcome. If the item can change (edits, deletions), snapshots prevent “the record” from drifting.

A practical pattern is an append-only event record:

```json
{
  "event": "DECISION_APPLIED",
  "actor_id": "u_4821",
  "subject_id": "post_99102",
  "queue": "hate_speech",
  "decision": "remove",
  "policy_code": "HS.2",
  "reason": "slur used as insult",
  "before": {"status": "pending"},
  "after": {"status": "removed"},
  "created_at": "2025-12-26T10:14:22Z"
}
```
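A minimal in-memory sketch that produces records shaped like the event above, assuming stored events are kept as serialized JSON so nothing can mutate them in place. `AuditLog` and its methods are hypothetical names.

```python
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only: events can be added and read, never modified or removed."""

    def __init__(self):
        self._lines = []  # serialized JSON, so stored events can't change in place

    def append(self, event, actor_id, subject_id, before, after, **details):
        record = {
            "event": event,
            "actor_id": actor_id,
            "subject_id": subject_id,
            **details,  # e.g. queue, decision, policy_code, reason
            "before": before,
            "after": after,
            "created_at": datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        }
        self._lines.append(json.dumps(record, sort_keys=True))
        return record

    def history(self, subject_id):
        """Fresh copies of every event for one subject, oldest first."""
        return [r for r in map(json.loads, self._lines) if r["subject_id"] == subject_id]
```

In a relational database, a similar guarantee can come from granting the application role INSERT and SELECT on the audit table but not UPDATE or DELETE.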

Beyond decisions, log the workflow mechanics: claimed, released, timed out, reassigned, escalated, and auto-routed. These events explain “why it took 6 hours” or “why this item bounced between teams,” and they’re essential for detecting abuse (e.g., reviewers cherry-picking easy items).

Give investigators filters by user, content ID, policy code, time range, queue, and action type. Include export to a case file, with immutable timestamps and references to related items (duplicates, re-uploads, appeals).

Set clear retention windows for audit events, snapshots, and reviewer notes. Keep the policy explicit (e.g., 90 days for routine queue logs, longer for legal holds), and document how redaction or deletion requests affect stored evidence.
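A sketch of a retention purge that respects legal holds. Only the 90-day window for routine queue logs comes from the text; the other windows, the record shape, and the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

RETENTION = {
    "queue_event": timedelta(days=90),     # routine queue logs
    "reviewer_note": timedelta(days=365),  # hypothetical
    "decision": timedelta(days=730),       # hypothetical
}


def is_expired(record, now, legal_holds):
    if record["subject_id"] in legal_holds:
        return False  # a legal hold overrides normal retention
    return now - record["created_at"] > RETENTION[record["type"]]


def purge(records, now, legal_holds):
    """Return (records to keep, number purged)."""
    kept = [r for r in records if not is_expired(r, now, legal_holds)]
    return kept, len(records) - len(kept)
```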

A moderation tool is only useful if it closes the loop: reports become review tasks, decisions reach the right people, and user-level actions are executed consistently. This is where many systems break—someone resolves the queue, but nothing else changes.

Treat user reports, automated flags (spam/CSAM/hash matches/toxicity signals), and internal escalations (support, community managers, legal) as the same core object: a report that can spawn one or more review tasks.

Use a single report router that:

If support escalations are part of the flow, link them directly (e.g., /support/tickets/1234) so reviewers don’t context-switch.

Moderation decisions should generate templated notifications: content removed, warning issued, no action, or account action taken. Keep messaging consistent and minimal—explain the outcome, reference the relevant policy, and provide appeal instructions.

Operationally, send notifications via an event like moderation.decision.finalized, so email/in-app/push can subscribe without slowing the reviewer.
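A small in-process sketch of that pattern, assuming a background worker drains pending events. `EventBus`, `TEMPLATES`, and `render` are hypothetical stand-ins for a real message broker and notification templates; only the `moderation.decision.finalized` topic name comes from the text.

```python
from collections import defaultdict, deque

# Hypothetical templates keyed by outcome.
TEMPLATES = {
    "remove": "Your content was removed under policy {policy_code}. You can appeal this decision.",
    "warn": "You received a warning under policy {policy_code}. You can appeal this decision.",
    "no_action": "We reviewed your report and took no action.",
}


def render(payload):
    return TEMPLATES[payload["outcome"]].format(**payload)


class EventBus:
    """In-process stand-in for a real queue or message broker."""

    def __init__(self):
        self._subscribers = defaultdict(list)
        self._pending = deque()

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, payload):
        # Only enqueue: the reviewer's request returns without waiting on delivery.
        self._pending.append((topic, payload))

    def dispatch_pending(self):
        """Called by a background worker; returns the number of handler calls."""
        calls = 0
        while self._pending:
            topic, payload = self._pending.popleft()
            for handler in self._subscribers[topic]:
                handler(payload)
                calls += 1
        return calls
```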

Decisions often require actions beyond a single piece of content:

Make these actions explicit and reversible, with clear durations and reasons. Link every action back to the decision and the underlying report for traceability, and provide a fast path to Appeals so decisions can be revisited without manual detective work.

Your data model is the “source of truth” for what happened to every item: what was reviewed, by whom, under which policy, and what the result was. If you get this layer right, everything else—queues, dashboards, audits, and analytics—gets easier.

Avoid storing everything in one record. A practical pattern is to keep:

Policy codes, such as HARASSMENT.H1 or NUDITY.N3, stored as references so policies can evolve without rewriting history.

This keeps policy enforcement consistent and makes reporting clearer (e.g., “top violated policy codes this week”).

Don’t put large images/videos directly in your database. Use object storage and store only object keys + metadata in your content table.

For reviewers, generate short-lived signed URLs so media is accessible without making it public. Signed URLs also let you control expiration and revoke access if needed.
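A generic HMAC-based sketch of expiring links, assuming a media proxy verifies the signature before streaming the object. This is not any provider's signing scheme; managed object stores such as Amazon S3 offer their own presigned URLs, which you would normally use instead. `SECRET`, `sign_url`, and `verify_url` are hypothetical.

```python
import hashlib
import hmac
import time
from urllib.parse import parse_qs, urlencode, urlparse

SECRET = b"replace-with-a-server-side-secret"  # hypothetical; load from a secret store


def _signature(path, expires):
    return hmac.new(SECRET, f"{path}:{expires}".encode(), hashlib.sha256).hexdigest()


def sign_url(base_url, object_key, ttl_seconds=300, now=None):
    """Return a URL for object_key that stops working after ttl_seconds."""
    url = f"{base_url.rstrip('/')}/{object_key}"
    expires = int((time.time() if now is None else now) + ttl_seconds)
    return url + "?" + urlencode({"expires": expires,
                                  "sig": _signature(urlparse(url).path, expires)})


def verify_url(url, now=None):
    """True only if the signature matches the path and the link has not expired."""
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires = int(params["expires"][0])
        sig = params["sig"][0]
    except (KeyError, ValueError):
        return False
    current = time.time() if now is None else now
    return current < expires and hmac.compare_digest(sig, _signature(parsed.path, expires))
```

Rotating `SECRET` invalidates every outstanding link at once, which is one coarse way to revoke access.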

Queues and investigations depend on fast lookups. Add indexes for:

Model moderation as explicit states (e.g., NEW → TRIAGED → IN_REVIEW → DECIDED → APPEALED). Store state transition events (with timestamps and actor) so you can detect items that haven’t progressed.

A simple safeguard: a last_state_change_at field plus alerts for items that exceed an SLA, and a repair job that re-queues items left IN_REVIEW after a timeout.
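A sketch of both safeguards, using the state names from the example above. The per-state SLAs, the 20-minute review timeout, and the choice to send stale items back to `TRIAGED` are hypothetical.

```python
from datetime import datetime, timedelta, timezone

SLA = {"NEW": timedelta(minutes=30), "TRIAGED": timedelta(hours=4)}  # hypothetical
REVIEW_TIMEOUT = timedelta(minutes=20)                                # hypothetical


def find_overdue(items, now):
    """IDs of items that have sat in an SLA-tracked state for too long."""
    return [i["id"] for i in items
            if i["state"] in SLA and now - i["last_state_change_at"] > SLA[i["state"]]]


def requeue_stale_reviews(items, now, transitions):
    """Repair job: move items stuck IN_REVIEW back to TRIAGED and log the change."""
    repaired = []
    for item in items:
        if item["state"] == "IN_REVIEW" and now - item["last_state_change_at"] > REVIEW_TIMEOUT:
            transitions.append({"item_id": item["id"], "from": "IN_REVIEW",
                                "to": "TRIAGED", "actor": "system:repair_job", "at": now})
            item["state"] = "TRIAGED"
            item["last_state_change_at"] = now
            item["assignee"] = None
            repaired.append(item["id"])
    return repaired
```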

Trust & Safety tools often handle the most sensitive data your product has: user-generated content, reports, account identifiers, and sometimes legal requests. Treat the moderation app as a high-risk system and design security and privacy in from day one.

Start with strong authentication and tight session controls. For most teams, that means:

Pair this with role-based access control so reviewers only see what they need (for example: one queue, one region, or one content type).

Encrypt data in transit (HTTPS everywhere) and at rest (managed database/storage encryption). Then focus on exposure minimization:

If you handle consent or special categories of data, make those flags visible to reviewers and enforce them in the UI (e.g., restricted viewing or retention rules).

Reporting and appeal endpoints are frequent targets for spam and harassment. Add:

Finally, make every sensitive action traceable with an audit trail (see /blog/audit-logs) so you can investigate reviewer mistakes, compromised accounts, or coordinated abuse.

A content moderation workflow only gets better if you can measure it. Analytics should tell you whether your moderation queue design, escalation rules, and policy enforcement are producing consistent decisions—without burning out reviewers or letting harmful content sit too long.

Start with a small set of metrics tied to outcomes:

Put these into an SLA dashboard so ops leads can see which queues are falling behind and whether the bottleneck is staffing, unclear rules, or a surge in reports.

Disagreement isn’t always bad—it can indicate edge cases. Track:

Use your audit log to connect every sampled decision to the reviewer, applied rule, and evidence. This gives you explainability when coaching reviewers and when evaluating whether your review dashboard UI is nudging people toward inconsistent choices.

Moderation analytics should help you answer: “What are we seeing that our policy doesn’t cover well?” Look for clusters like:

Turn those signals into concrete actions: rewrite policy examples, add decision trees to the reviewer dashboard, or update enforcement presets (e.g., default timeouts vs. warnings).

Treat analytics as part of a human-in-the-loop system. Share queue-level performance openly within the team, but handle individual metrics carefully to avoid incentivizing speed over quality. Pair quantitative KPIs with regular calibration sessions and small, frequent policy updates, so the tooling and the people improve together.

A moderation tool fails most often at the edges: the weird posts, the rare escalation paths, and the moments when multiple people touch the same case. Treat testing and rollout as part of the product, not a final checkbox.

Build a small “scenario pack” that mirrors real work. Include:

Use production-like data volumes in a staging environment so you can spot queue slowdowns and pagination/search issues early.

A safer rollout pattern is:

Shadow mode is especially useful for validating policy enforcement rules and automation without risking false positives.
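A sketch of shadow mode, assuming the rule's prediction is recorded but never enforced and is later compared with the human decision on the same item. `spam_rule`, `run_in_shadow`, and `compare` are hypothetical names.

```python
def spam_rule(item):
    """Hypothetical rule under evaluation."""
    return "remove" if "buy now" in item["text"].lower() else "approve"


def run_in_shadow(rule, item, shadow_log):
    """Record what the rule would do; nothing is enforced."""
    shadow_log.append({"item_id": item["id"], "predicted": rule(item)})


def compare(shadow_log, human_outcomes):
    """Compare shadow predictions with human decisions on the same items."""
    pairs = [(e["predicted"], human_outcomes[e["item_id"]])
             for e in shadow_log if e["item_id"] in human_outcomes]
    if not pairs:
        return {"compared": 0, "agreement": None, "false_positives": 0, "missed": 0}
    agree = sum(1 for p, h in pairs if p == h)
    return {
        "compared": len(pairs),
        "agreement": agree / len(pairs),
        # Would have removed content that a human kept.
        "false_positives": sum(1 for p, h in pairs if p == "remove" and h != "remove"),
        # Would have kept content that a human removed.
        "missed": sum(1 for p, h in pairs if p != "remove" and h == "remove"),
    }
```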

Write short, task-based playbooks: “How to process a report,” “When to escalate,” “How to handle appeals,” and “What to do when the system is uncertain.” Then train with the same scenario pack so reviewers practice the exact flows they’ll use.

Plan maintenance as continuous work: new content types, updated escalation rules, periodic sampling for QA, and capacity planning when queues spike. Keep a clear release process for policy updates so reviewers can see what changed and when—and so you can correlate changes with moderation analytics.

If you’re implementing this as a web application, a big portion of the effort is repetitive scaffolding: RBAC, queues, state transitions, audit logs, dashboards, and the event-driven glue between decisions and notifications. Koder.ai can speed up that build by letting you describe the moderation workflow in a chat interface and generate a working foundation you can iterate on—typically with a React frontend and a Go + PostgreSQL backend.

Two practical ways to use it for trust & safety tooling:

Once the baseline is in place, you can export the source code, connect your existing model signals as “inputs,” and keep the reviewer’s decision as the final authority—matching the human-in-the-loop architecture described above.

Start by listing every content type you will handle (posts, comments, DMs, profiles, listings, media), plus every source (new submissions, edits, imports, user reports, automated flags). Then define what is out of scope (e.g., internal admin notes, system-generated content) so your queue doesn’t become a dumping ground.

A practical check: if you can’t name the content type, source, and owner team, it probably shouldn’t create a moderation task yet.

Pick a small set of operational KPIs that reflect both speed and quality:

Set targets per queue (e.g., high-risk vs. backlog) so you don’t accidentally optimize low-urgency work while harmful content waits.

Use a simple, explicit state model and enforce allowed transitions, for example:

SUBMITTED → QUEUED → IN_REVIEW → DECIDED → NOTIFIED → ARCHIVED

Make states mutually exclusive, and treat “Decided” as immutable except through an appeal/re-review flow. This prevents “mystery states,” broken notifications, and hard-to-audit edits.

Treat automated systems as signals, not final outcomes:

This keeps policy enforcement explainable and makes it easier to improve models later without rewriting your decision logic.

Build appeals as first-class objects linked to the original decision:

Start with a small, clear RBAC set:

Use multiple queues with clear “home” ownership:

Prioritize within a queue using explainable signals like severity, reach, unique reporters, and SLA timers. In the UI, show “Why am I seeing this?” so reviewers trust ordering and you can spot gaming.

Implement claiming/locking with timeouts:

This reduces duplicate effort and gives you the data to diagnose bottlenecks and cherry-picking behaviors.

Turn your policy into a structured taxonomy and templates:

This improves consistency, makes analytics meaningful, and simplifies audits and appeals.

Log everything needed to reconstruct the story:

Make logs searchable by actor, content ID, policy code, queue, and time range, and define retention rules (including legal holds and how deletion requests affect stored evidence).

Always record which policy version was applied originally and which version is applied during the appeal.

Then add least-privilege permissions by capability (e.g., can_export_data, can_apply_account_penalty) so new features don’t blow up your access model.