May 18, 2025·8 min

How to Create a Mobile App for Micro-Task Completion

Learn how to plan, design, build, and launch a mobile app for micro-task completion—from MVP features and UX to payments, safety, and growth.


A micro-task app is a mobile marketplace for small, well-defined pieces of work that can be completed quickly—often in minutes. “Micro” doesn’t mean “low value”; it means the task has a clear scope, repeatable steps, and an objective outcome (for example: “Upload 3 photos of the store entrance,” “Tag 20 images,” or “Confirm this address exists”).

Micro-task apps are typically two-sided: task posters who need small jobs done, and workers who complete them.

Your app’s job is to match these two sides efficiently, while keeping instructions, proof, and approvals simple.

Micro-tasks usually fall into a few practical categories:

A micro-task app is not a general freelancing platform for long projects, complex negotiations, or custom scoping. If each job requires detailed discovery calls and bespoke pricing, it’s not a micro-task marketplace.

These apps only work when supply and demand stay in sync: enough quality tasks to keep workers engaged, and enough reliable workers to deliver results quickly.

Most micro-task marketplaces earn revenue through:

Pick a model that matches how often tasks are posted and how time-sensitive they are.

A micro-task app lives or dies on repeatable demand: the same types of tasks posted frequently, completed quickly, and paid fairly. Before you design screens or write code, get specific about who you’re helping and why they’ll switch from their current workaround.

Start by naming two sides of your marketplace:

Interview 10–15 people on each side. Ask what slows them down today (finding someone, trust, pricing, coordination, no-shows) and what “success” looks like (time saved, predictability, safety, getting paid fast).

Pick a niche where tasks are:

Then choose a small starting area (one city, one campus, a few neighborhoods). Density matters: too wide and you’ll have long wait times and cancellations.

Look at direct micro-task apps and indirect alternatives (Facebook groups, Craigslist, local agencies). Document gaps in:

Example: “A same-day photo-verified task marketplace for local retailers to handle quick in-store checks within 2 hours.” If you can’t say it in one sentence, your scope is too broad.

Set measurable goals for your first release, such as:

These metrics keep you focused while validating real demand.

A micro-task app lives or dies by how smoothly work moves from “posted” to “paid.” Before screens and features, map the marketplace flow end to end for both sides (posters and workers). This reduces confusion, support tickets, and abandoned tasks.

For posters, the critical path is: post → match → completion → approve → payout.

For workers, it’s: discover → accept → complete → get approved → receive payout.

Write these as short step-by-step stories, including what the user sees, what the system does in the background, and what happens when something goes wrong.

Every task should specify proof requirements up front. Common “done” signals include:

Be explicit about accept/reject criteria so approvals feel fair and predictable.

Decide how workers get tasks:

Start with one model and add another later, but avoid mixing rules in the MVP.

Notifications should support action, not noise: new tasks, deadlines, acceptance confirmations, approval/rejection, and payout status. Also consider reminders when a task is accepted but not started.
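The "accepted but not started" reminder can be driven by a small query over task timestamps. The sketch below is illustrative only: the dictionary keys (`id`, `accepted_at`, `started_at`) and the 30-minute grace period are assumptions, not values from this guide.

```python
from datetime import datetime, timedelta


def tasks_needing_reminder(tasks, now: datetime, grace=timedelta(minutes=30)):
    """Return IDs of tasks accepted at least `grace` ago but not yet started.

    Each task is a dict with hypothetical keys:
    'id', 'accepted_at' (datetime or None), 'started_at' (datetime or None).
    """
    due = []
    for task in tasks:
        accepted_at = task.get("accepted_at")
        # Skip tasks nobody has accepted, and tasks already under way.
        if accepted_at is None or task.get("started_at") is not None:
            continue
        if now - accepted_at >= grace:
            due.append(task["id"])
    return due
```

A scheduled job could call this every few minutes and send one push notification per returned ID.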

List the biggest breakdowns—no-shows, incomplete proof, missed deadlines, and disputes—and define the app response (reassign, partial payment, escalation, or cancellation). Make these rules visible in the task details so users trust the system.

An MVP for a micro-task app isn’t “a smaller version of everything.” It’s the minimum set of features that lets two groups—task posters and workers—successfully complete a task, get paid, and feel safe enough to return.

At launch, posters need a clean path from idea to approved submission:

Keep task creation opinionated. Provide templates (e.g., “Take a shelf photo,” “Verify address,” “Transcribe receipt”) so posters don’t write vague tasks that cause disputes.

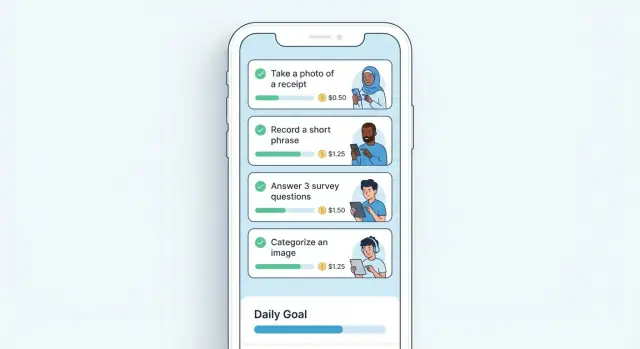

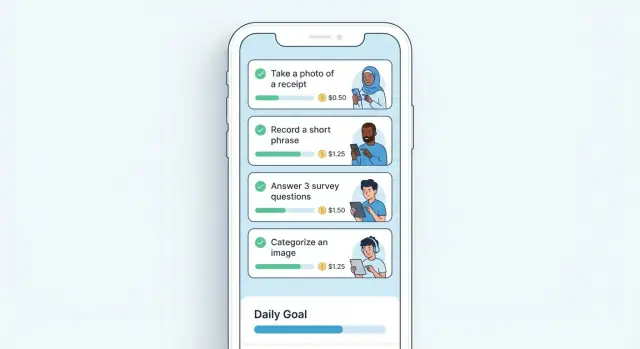

Workers should be able to earn without friction:

Clarity beats cleverness: show payout, steps, and proof requirements before a worker commits.

Trust is an MVP feature in a marketplace:

To ship sooner, push these to v2:

Before building any feature, confirm:

If you can reliably complete real tasks end-to-end with these basics, you have an MVP that can launch, learn, and improve.

If you want to reduce the time from “spec” to “shippable MVP,” a vibe-coding platform like Koder.ai can help you iterate on screens, flows, and backend APIs via a chat interface—useful when you’re validating a marketplace and expect to change requirements weekly.

A micro-task app wins or loses in the first 30 seconds. People open it in a queue, on a break, or between errands—so every screen should help them start, complete, and get paid with minimal thinking.

Confusion creates disputes and drop-offs. Treat task creation like filling out a proven template, not a blank page. Provide task templates with:

Add small helpers (examples, character limits, and required fields) so posters can’t accidentally publish vague tasks.
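Required fields and character limits are easy to enforce before a task is published. The following is a minimal sketch; the field names and the limits (80 and 600 characters) are assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical limits; tune them per template.
MAX_TITLE_CHARS = 80
MAX_INSTRUCTIONS_CHARS = 600


@dataclass
class TaskDraft:
    title: str = ""
    instructions: str = ""
    payout_cents: int = 0
    proof_type: str = ""  # e.g. "photo" or "checklist"


def validate_draft(draft: TaskDraft) -> dict[str, str]:
    """Return field name -> specific error message (empty dict if valid)."""
    errors: dict[str, str] = {}
    if not draft.title.strip():
        errors["title"] = "Title is required"
    elif len(draft.title) > MAX_TITLE_CHARS:
        errors["title"] = f"Title must be {MAX_TITLE_CHARS} characters or fewer"
    if not draft.instructions.strip():
        errors["instructions"] = "Instructions are required"
    elif len(draft.instructions) > MAX_INSTRUCTIONS_CHARS:
        errors["instructions"] = (
            f"Instructions must be {MAX_INSTRUCTIONS_CHARS} characters or fewer"
        )
    if draft.payout_cents <= 0:
        errors["payout_cents"] = "Payout must be greater than zero"
    if not draft.proof_type:
        errors["proof_type"] = "Proof type is required"
    return errors
```

Keying errors by field name lets the posting screen show each message next to the input that caused it.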

Users should always know what’s next. Use a consistent set of statuses across lists, task details, and notifications:

Available → In progress → Submitted → Approved → Paid

Pair each status with one primary action button (e.g., “Start task,” “Submit proof,” “View payout”) to reduce decision fatigue.
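One way to keep statuses consistent everywhere is to define them once and derive both the next step and the primary button from that definition. This is a sketch, not a prescribed design: the status order comes from the flow above, but the pairing of button labels to statuses (and the "View submission" label) is an assumption.

```python
from enum import Enum


class Status(Enum):
    AVAILABLE = "Available"
    IN_PROGRESS = "In progress"
    SUBMITTED = "Submitted"
    APPROVED = "Approved"
    PAID = "Paid"


# Happy-path order, taken from the status flow above.
FLOW = [
    Status.AVAILABLE,
    Status.IN_PROGRESS,
    Status.SUBMITTED,
    Status.APPROVED,
    Status.PAID,
]

# One primary action per status. The status-to-label pairing is assumed.
PRIMARY_ACTION = {
    Status.AVAILABLE: "Start task",
    Status.IN_PROGRESS: "Submit proof",
    Status.SUBMITTED: "View submission",  # hypothetical label
    Status.APPROVED: "View payout",
    Status.PAID: "View payout",
}


def advance(current: Status) -> Status:
    """Return the next status, or raise if the task is already paid."""
    index = FLOW.index(current)
    if index == len(FLOW) - 1:
        raise ValueError("Paid is the final status")
    return FLOW[index + 1]
```

Rejections, cancellations, and disputes would need extra statuses and transitions; they are left out here to keep the happy path readable.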

Micro-tasks should be doable with one hand and a few taps:

If a user needs to scroll past long instructions, show a sticky checklist or “Steps” drawer they can reference while working.

Use readable font sizes, strong contrast, and simple language. Avoid relying on color alone for status (add labels/icons). Keep error messages specific (“Photo is required”) and show them near the field.

Your “no data yet” screens are onboarding. Plan guidance for:

A single sentence plus a clear button (“Browse available tasks”) beats paragraphs of instructions.

Your tech approach should match your budget, timeline, and how quickly you need to iterate. A micro-task app lives or dies on speed: fast posting, fast claiming, fast proof submission, and fast payout.

Native (Swift for iOS, Kotlin for Android) is best when you need top-tier performance, polished UI, and deep OS integrations (camera, background uploads, location). It typically costs more because you maintain two codebases.

Cross-platform (Flutter / React Native) is often the best fit for an MVP: one codebase, quicker delivery, and simpler feature parity across iOS/Android. Performance is usually more than enough for task feeds, chat, and photo uploads. If budget and speed matter most, start here.

Plan these parts upfront:

If you’re building quickly, consider tooling that generates consistent web and backend scaffolding from product requirements. For example, Koder.ai focuses on chat-driven app creation and commonly targets a React web front end with a Go backend and PostgreSQL—handy for moving from “MVP flow” to a working task marketplace without spending weeks on boilerplate.

Photos, receipts, and ID docs should go to object storage (e.g., S3/GCS) rather than your database. Decide retention by file type: task proof might be kept 90–180 days; sensitive verification documents often need shorter retention with strict access controls.
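Retention by file type can be expressed as a small lookup that a cleanup job consults. In this sketch the 180-day value is the upper end of the range mentioned above, while the file-type names and the 30-day window for ID documents are hypothetical.

```python
from datetime import datetime, timedelta

# Retention windows in days. "task_proof" uses the upper end of the
# 90-180 day range; "id_document" is a hypothetical, shorter value.
RETENTION_DAYS = {"task_proof": 180, "id_document": 30}


def is_past_retention(file_type: str, uploaded_at: datetime, now: datetime) -> bool:
    """True when a stored file has outlived its retention window."""
    return now - uploaded_at > timedelta(days=RETENTION_DAYS[file_type])
```

A nightly job could list objects, call this check, and delete whatever it flags. Many object stores also offer built-in lifecycle rules that can do the same thing without custom code.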

Set clear targets early: 99.9% uptime for core APIs, <300 ms average API response for common actions, and defined support SLAs. These goals guide hosting, monitoring, and how much caching you’ll need from day one.
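To make these targets concrete, it helps to translate them into numbers you can check. The sketch below converts an uptime target into a downtime budget and tests a batch of response times against the average-latency goal; the function names are illustrative.

```python
from statistics import mean


def downtime_budget_minutes(uptime_target: float, days: int = 30) -> float:
    """Minutes of allowed downtime over `days` for a given uptime target."""
    return (1.0 - uptime_target) * days * 24 * 60


def meets_latency_target(samples_ms: list[float], target_ms: float = 300.0) -> bool:
    """True when the average of the sampled response times is under target.

    Raises statistics.StatisticsError if `samples_ms` is empty.
    """
    return mean(samples_ms) < target_ms
```

At 99.9% uptime, the budget works out to roughly 43 minutes of downtime in a 30-day month, which is a useful number to have in mind when planning deployments and maintenance windows.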

Your backend is the “source of truth” for who can do what, when, and for how much. If you get the data model right early, you’ll ship faster and avoid messy edge cases when real money and deadlines are involved.

Start with a small set of entities you can explain on a whiteboard:

Plan endpoints around the real workflow:

Marketplaces need accountability. Store an event log for key actions: task edits, assignment changes, approvals, payout triggers, and dispute outcomes. This can be a simple audit_events table with actor, action, before/after, and timestamp.
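A minimal version of that table might look like the following. The sketch uses SQLite from Python's standard library so it runs anywhere; the column names beyond actor, action, before/after, and timestamp are assumptions, and a production schema in another database would differ in types and indexing.

```python
import json
import sqlite3
from datetime import datetime, timezone


def init_audit_log(conn: sqlite3.Connection) -> None:
    """Create the audit_events table if it does not exist yet."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS audit_events (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            actor TEXT NOT NULL,
            action TEXT NOT NULL,
            before_state TEXT,
            after_state TEXT,
            created_at TEXT NOT NULL
        )
        """
    )


def record_event(conn, actor: str, action: str, before: dict, after: dict) -> None:
    """Append one event; before/after snapshots are stored as JSON text."""
    conn.execute(
        "INSERT INTO audit_events "
        "(actor, action, before_state, after_state, created_at) "
        "VALUES (?, ?, ?, ?, ?)",
        (
            actor,
            action,
            json.dumps(before),
            json.dumps(after),
            datetime.now(timezone.utc).isoformat(),
        ),
    )
    conn.commit()
```

Treat the table as append-only: application code inserts rows and never updates or deletes them.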

If a task has limited slots (often just one), enforce it at the database level: use transactions/row locks or atomic updates so that two workers racing for the same slot can’t both claim it.
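The atomic-update variant is the simplest to illustrate: a single conditional UPDATE succeeds only if the slot is still free, and the affected row count tells you who won. This sketch uses SQLite for portability and a hypothetical single-slot `tasks` table; the same pattern applies in other SQL databases.

```python
import sqlite3


def create_tasks_table(conn: sqlite3.Connection) -> None:
    """Hypothetical single-slot schema: worker_id is NULL until claimed."""
    conn.execute(
        "CREATE TABLE tasks ("
        "id INTEGER PRIMARY KEY, "
        "worker_id TEXT, "
        "status TEXT NOT NULL DEFAULT 'available')"
    )


def claim_task(conn: sqlite3.Connection, task_id: int, worker_id: str) -> bool:
    """Try to claim a task; return True only for the worker who gets it."""
    cur = conn.execute(
        "UPDATE tasks SET worker_id = ?, status = 'in_progress' "
        "WHERE id = ? AND worker_id IS NULL",
        (worker_id, task_id),
    )
    conn.commit()
    # rowcount is 1 if this call changed the row, 0 if someone else got there first.
    return cur.rowcount == 1
```

Because the check and the write happen in one statement, there is no gap between "is it free?" and "take it" for a second worker to slip into.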

If tasks require being on-site, store latitude/longitude, support distance filters, and consider geofencing checks at claim or submission time. Keep it optional so remote tasks stay friction-free.
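A geofence check at claim or submission time usually reduces to a distance calculation between the task location and the device location. The sketch below uses the haversine formula; the 150-meter default radius is an assumption, and real GPS readings carry error, so the radius should be generous.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (
        math.sin(dphi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    )
    # Clamp to 1.0 to guard against tiny floating-point overshoot.
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


def within_geofence(task_lat, task_lon, user_lat, user_lon, radius_m=150.0) -> bool:
    """True when the user is within `radius_m` meters of the task location."""
    return haversine_m(task_lat, task_lon, user_lat, user_lon) <= radius_m
```

For remote tasks, simply skip the check so location permission is never requested.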

Payments are where micro-task apps succeed or fail: the experience has to feel simple for posters, predictable for workers, and safe for you as the marketplace.

Most micro-task marketplaces start with escrow/hold funds: when a poster creates a task, you authorize or capture the payment and hold it until the task is approved. This reduces “I did the work but never got paid” disputes and makes refunds clearer when a task is rejected.
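On the application side, a hold can be modeled as a record that settles exactly once: either released to the worker on approval or refunded to the poster on rejection. This is a simplified sketch with hypothetical names; the actual authorization, capture, and transfer calls belong to whichever payment provider you use and are not shown.

```python
from dataclasses import dataclass


@dataclass
class Escrow:
    """Funds held for one task. State moves from 'held' to a final state once."""

    amount_cents: int
    state: str = "held"  # "held" -> "released" | "refunded"

    def release_to_worker(self) -> None:
        """Call when the poster approves the submission."""
        self._settle("released")

    def refund_to_poster(self) -> None:
        """Call when the task is rejected or cancelled."""
        self._settle("refunded")

    def _settle(self, new_state: str) -> None:
        # Refuse to settle twice so money cannot be paid out and refunded.
        if self.state != "held":
            raise ValueError(f"Escrow already {self.state}")
        self.state = new_state
```

Guarding against double settlement in code complements, but does not replace, idempotency protections on the payment provider side.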

You can support instant pay rules, but define them tightly—for example: only for repeat posters, only below a small amount, or only for tasks with clear objective proof (e.g., geo-check-in + photo). If you allow instant pay too broadly, you’ll eat more chargebacks and “work not delivered” claims.
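Instant-pay eligibility can live in one function so the rule is easy to audit and tighten. The sketch below requires all three example conditions at once, which is stricter than applying any single one; the thresholds (5 completed tasks, a 1000-cent cap) and proof-type names are assumptions.

```python
def instant_pay_allowed(
    poster_completed_tasks: int,
    payout_cents: int,
    proof_types: list[str],
    *,
    min_poster_tasks: int = 5,      # hypothetical "repeat poster" threshold
    max_payout_cents: int = 1000,   # hypothetical "small amount" cap
    required_proof: frozenset = frozenset({"geo_check_in", "photo"}),
) -> bool:
    """True only when the poster, amount, and proof all qualify."""
    return (
        poster_completed_tasks >= min_poster_tasks
        and payout_cents <= max_payout_cents
        and required_proof <= set(proof_types)
    )
```

Anything that fails the check simply falls back to the normal hold-until-approved flow.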

Decide whether fees are paid by the poster, the worker, or split:

Whatever you choose, show fees early (task posting + checkout) and repeat them on receipts. Avoid surprises.

Workers care about getting paid fast, but you need controls. Common patterns:

Build this into worker onboarding so expectations are set before the first task.

Plan basic checks from day one: duplicate accounts (same device, phone, bank), suspicious task patterns (same poster-worker pairs repeatedly), abnormal GPS/photo metadata, and chargeback monitoring. Add lightweight holds or manual review when signals spike.
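The duplicate-account check is often the easiest to start with: group accounts by each shared identifier and flag any group with more than one member. In this sketch, accounts are plain dictionaries with hypothetical keys `id`, `device_id`, `phone`, and `bank`.

```python
from collections import defaultdict


def find_shared_identifiers(accounts: list[dict]) -> dict:
    """Map (field, value) -> set of account IDs, for values used by 2+ accounts."""
    groups = defaultdict(set)
    for account in accounts:
        for field in ("device_id", "phone", "bank"):
            value = account.get(field)
            if value:  # ignore missing or empty identifiers
                groups[(field, value)].add(account["id"])
    return {key: ids for key, ids in groups.items() if len(ids) > 1}
```

A match is a signal for review rather than proof of fraud; households and shared devices produce legitimate overlaps.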

Make “money screens” self-serve:

Clear records reduce support tickets and build trust.

A micro-task app only works when both sides feel safe: posters trust that work is real, and workers trust they’ll be paid and treated fairly. You don’t need enterprise-grade controls on day one, but you do need clear rules and a few reliable safeguards.

Start with lightweight verification like email + phone confirmation to reduce spam and duplicate accounts. If tasks involve in-person work, higher payouts, or regulated categories, consider optional or required ID checks.

Keep the flow simple: explain why you’re asking, what you store, and how long you keep it. Drop-off here hurts supply, so only add friction when it meaningfully reduces risk.

Give users easy ways to protect themselves:

On the admin side, make moderation fast: search by user, task, or phrase; view history; and take clear actions (warn, unlist, suspend).

Disputes should follow a predictable sequence: attempt resolution in chat, escalate to support, then a decision with a clear outcome (refund, payout, partial split, or ban).

Define what counts as evidence: in-app messages, timestamps, photos, location check-ins (if enabled), and receipts. Avoid relying on “he said/she said” decisions.

Protect user data with fundamentals: encryption in transit (HTTPS), encryption at rest for sensitive fields, least-privilege staff access, and audit logs for admin actions. Don’t store payment card data yourself—use a payment provider.

Write short, plain rules that set expectations: accurate task descriptions, fair pay, respectful communication, no illegal or dangerous requests, and no off-platform payment requests. Link them during posting and onboarding so quality stays high.

Quality assurance for a micro-task app is mostly about protecting the “money paths” and the “time paths”: can someone complete a task quickly, and can you pay them correctly? A good plan pairs structured test cases with a small real-world pilot, then turns learnings into short iteration cycles.

Start by writing simple, repeatable test cases for the core marketplace journey:

Also test edge cases: expired tasks, double-accept attempts, disputes, partial completion, and cancellations.

Micro-tasks often happen on the move. Simulate poor connectivity and confirm the app behaves predictably:

Define your “must-test” device set based on your audience: small screens, low-memory devices, and older OS versions. Focus on layout breakpoints, camera/upload performance, and notification delivery.

Recruit a handful of posters and workers and run 1–2 weeks of real tasks. Measure whether task instructions are understandable, how long tasks actually take, and where users hesitate.

Set up crash reporting and in-app feedback before the pilot. Tag feedback by screen and task ID so you can spot patterns, prioritize fixes, and ship weekly improvements without guessing.

A micro-task app lives or dies in the first week: early users decide whether tasks feel “real,” payouts feel “safe,” and support feels responsive. Before you submit to the stores, make sure the experience is not just working—it’s understandable.

Prepare your store listing to reduce confusion and low-quality sign-ups:

Your onboarding should teach users how to succeed, not just collect permissions.

Include:

Before inviting real users, verify the “boring” parts that create trust:

Start with one region or city so you can keep the number of posted tasks and the number of available workers in balance. A controlled rollout also keeps support volume manageable while you tune pricing, categories, and anti-fraud rules.

Add a simple help hub with FAQs and clear escalation paths (e.g., payment issues, rejected submissions, reporting a task). Link to it from onboarding and settings, using paths such as /help and /help/payments.

If you don’t measure the marketplace, you’ll “grow” into confusion: more users, more support tickets, and the same stalled transactions. Pick a small set of metrics that explain whether tasks are getting posted, accepted, and completed smoothly.

Start with a simple funnel for both sides:

These numbers show where friction lives. For example, a low completion rate often means unclear requirements, mismatched pricing, or weak verification—not “lack of marketing.”
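Funnel rates are simple ratios between adjacent stages. The sketch below assumes three counts (tasks posted, accepted, and completed over the same period) and defines each rate relative to the previous stage; those definitions are an assumption, so adapt them to however you count stages.

```python
def funnel_rates(posted: int, accepted: int, completed: int) -> dict[str, float]:
    """Stage-to-stage conversion rates for one reporting period."""

    def ratio(numerator: int, denominator: int) -> float:
        # Avoid division by zero in periods with no activity.
        return numerator / denominator if denominator else 0.0

    return {
        "acceptance_rate": ratio(accepted, posted),
        "completion_rate": ratio(completed, accepted),
    }
```

Tracking these per task template, rather than only in aggregate, makes it easier to see which task types have unclear requirements or mismatched pricing.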

Micro-task apps fail when one side outruns the other. If posters wait too long, they churn; if workers see empty feeds, they churn.

Tactics to rebalance:

Quality scales better than moderation.

Use task templates, pricing guidance, and short “what good looks like” tips at posting time. Educate posters with examples and lightweight rules, then link to deeper guidance in /blog.

Try growth loops that reinforce completion:

If you do add referrals later, consider tying rewards to real value creation (a completed task or a funded first task). Platforms like Koder.ai also run programs that reward users for sharing content or referrals—an approach you can mirror once your own marketplace has stable completion quality.

As volume grows, prioritize: automation (fraud flags, dispute triage), smarter matching (skills, proximity, reliability), and enterprise features (team accounts, invoicing, reporting). Scale what increases successful completions, not just installs.

A micro-task app is a marketplace for small, well-defined tasks that can be completed quickly (often in minutes) with objective proof (e.g., photos, checklists, tags, GPS/time evidence). It’s not meant for long, custom-scoped projects with ongoing negotiation and bespoke pricing.

Start by interviewing 10–15 task posters and 10–15 workers. Validate that tasks are:

Then pilot in a tight geography (one city/campus) and track completion rate and time-to-match.

Narrow your MVP to one niche + one area where density is achievable. Examples include photo verification for local retailers, address checks for property managers, or simple tagging tasks for small e-commerce teams. A tight niche makes templates, pricing guidance, and verification rules much easier.

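Use a single, clear flow on both sides: posters go post → match → completion → approve → payout, and workers go discover → accept → complete → get approved → receive payout.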

Design the steps and failure states (no-shows, missed deadlines, incomplete proof) before designing screens.

Define “done” inside the task itself using verifiable requirements such as:

Also publish accept/reject criteria so approvals feel predictable and disputes drop.

Pick one model for MVP:

Avoid mixing rules in v1; confusion creates cancellations and support tickets.

MVP essentials usually include:

Everything else should be judged against: .

Ship “trust basics” early:

Trust isn’t a “nice to have” in a paid marketplace.

Most marketplaces start with escrow/held funds: the poster pays when posting, funds are held until approval, then the worker is paid. It reduces “work completed but unpaid” issues and makes refunds clearer.

Set expectations upfront on:

Make money screens self-serve (receipts, payout history, reference IDs).

Track a small set of marketplace metrics:

If one side outruns the other, rebalance with controlled regional rollout, waitlists, and seeding repeatable task types.