Jun 07, 2025·8 min

Michael Stonebraker and Modern Databases: What He Changed

Explore Michael Stonebraker’s key ideas behind Ingres, Postgres, and Vertica—and how they shaped SQL databases, analytics engines, and today’s data stacks.

Michael Stonebraker is a computer scientist whose projects didn’t just influence database research—they directly shaped the products and design patterns many teams rely on every day. If you’ve used a relational database, an analytics warehouse, or a streaming system, you’ve benefited from ideas he helped prove, build, or popularize.

This isn’t a biography or an academic tour of database theory. Instead, it connects Stonebraker’s major systems (like Ingres, Postgres, and Vertica) to the choices you see in modern data stacks:

A modern database is any system that can reliably:

Different databases optimize these goals differently—especially when you compare transactional apps, BI dashboards, and real-time pipelines.

We’ll focus on practical impact: the ideas that show up in today’s “warehouse + lake + stream + microservices” world, and how they influence what you buy, build, and operate. Expect clear explanations, trade-offs, and real-world implications—not a deep dive into proofs or implementation details.

Stonebraker’s career is easiest to understand as a sequence of systems, each built for a specific job, whose best ideas then migrated into mainstream database products.

Ingres began as an academic project that proved relational databases could be fast and practical, not just a theory. It helped popularize declarative, relational querying (Ingres used its own query language, QUEL, rather than SQL) and the idea that the system, not the programmer, should optimize queries. Both later became normal in commercial engines.

Postgres (the research system that led to PostgreSQL) explored a different bet: databases shouldn’t be fixed-function. You should be able to add new data types, new indexing methods, and richer behavior without rewriting the whole engine.

Many “modern” features trace back to this era—extensible types, user-defined functions, and a database that can adapt as workloads change.

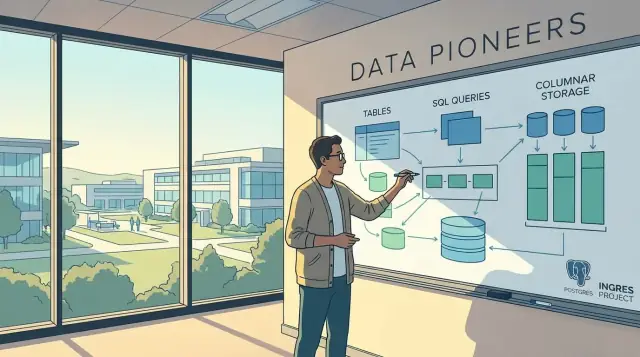

As analytics grew, row-oriented systems struggled with large scans and aggregations. Stonebraker pushed columnar storage and related execution techniques aimed at reading only the columns you need and compressing them well—ideas that are now standard in analytics databases and cloud warehouses.

Vertica took column-store research ideas into a commercially viable massively parallel processing (MPP) SQL engine designed for big analytic queries. This pattern repeats across the industry: a research prototype validates a concept; a product hardens it for reliability, tooling, and real customer constraints.

Later work expanded into stream processing and workload-specific engines—arguing that one general-purpose database rarely wins everywhere.

A prototype is built to test a hypothesis quickly; a product must prioritize operability: upgrades, monitoring, security, predictable performance, and support. Stonebraker’s influence shows up because many prototype ideas graduated into commercial databases as default capabilities rather than niche options.

Ingres (short for INteractive Graphics REtrieval System) was Stonebraker’s early proof that the relational model could be more than an elegant theory. At the time, many systems were built around custom access methods and application-specific data paths.

Ingres set out to solve a simple, business-friendly problem:

How do you let people ask flexible questions of data without rewriting the software every time the question changes?

Relational databases promised you could describe what you want (e.g., “customers in California with overdue invoices”) rather than how to fetch it step by step. But making that promise real required a system that could:

Ingres was a major step toward that “practical” version of relational computing—one that could run on the hardware of the day and still feel responsive.

Ingres helped popularize the idea that a database should do the hard work of planning queries. Instead of developers hand-tuning every data access path, the system could choose strategies like which table to read first, which indexes to use, and how to join tables.

This helped SQL-style thinking spread: when you can write declarative queries, you can iterate faster, and more people can ask questions directly—analysts, product teams, even finance—without waiting for bespoke reports.

The big practical insight is cost-based optimization: pick the query plan with the lowest expected “cost” (usually a mix of I/O, CPU, and memory), based on statistics about the data.

That matters because it often means:

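Ingres didn’t invent every piece of modern optimization (IBM’s System R, for example, did pioneering work on cost-based plan selection), but it helped establish the pattern: a declarative query language plus an optimizer is what makes relational systems scale from “nice idea” to daily tool.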
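To make cost-based optimization concrete, here is a toy sketch that compares two plans for one filtered table scan. The cost constants, the `TableStats` fields, and the two-plan choice are simplifying assumptions for illustration, not how Ingres or any particular engine computes costs. Real optimizers weigh many more alternatives (join orders, join algorithms) using far richer statistics.

```python
from dataclasses import dataclass

# Hypothetical relative costs: a random page read is assumed to be
# 4x as expensive as a sequential one; per-row CPU work is cheap.
SEQ_PAGE_COST = 1.0
RANDOM_PAGE_COST = 4.0
CPU_ROW_COST = 0.01


@dataclass
class TableStats:
    rows: int   # estimated row count (from collected statistics)
    pages: int  # estimated number of on-disk pages


def seq_scan_cost(stats: TableStats) -> float:
    # Read every page once and test the filter on every row.
    return stats.pages * SEQ_PAGE_COST + stats.rows * CPU_ROW_COST


def index_scan_cost(stats: TableStats, selectivity: float) -> float:
    # Pessimistic assumption: one random page read per matching row.
    matching = stats.rows * selectivity
    return matching * (RANDOM_PAGE_COST + CPU_ROW_COST)


def choose_plan(stats: TableStats, selectivity: float) -> str:
    costs = {
        "seq_scan": seq_scan_cost(stats),
        "index_scan": index_scan_cost(stats, selectivity),
    }
    # Pick the plan with the lowest estimated cost.
    return min(costs, key=costs.get)


orders = TableStats(rows=1_000_000, pages=10_000)
print(choose_plan(orders, selectivity=0.0001))  # index_scan: ~100 matching rows
print(choose_plan(orders, selectivity=0.5))     # seq_scan: half the table matches
```

The same query gets different plans depending on what the statistics say about the data, which is exactly why stale statistics can lead to slow plans.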

Early relational databases tended to assume a fixed set of data types (numbers, text, dates) and a fixed set of operations (filter, join, aggregate). That worked well—until teams started storing new kinds of information (geography, logs, time series, domain-specific identifiers) or needed specialized performance features.

With a rigid design, every new requirement turns into a bad choice: force-fit the data into text blobs, bolt on a separate system, or wait for a vendor to add support.

Postgres pushed a different idea: a database should be extensible—meaning you can add new capabilities in a controlled way, without breaking the safety and correctness you expect from SQL.

In plain language, extensibility is like adding certified attachments to a power tool rather than rewiring the motor yourself. You can teach the database “new tricks,” while still keeping transactions, permissions, and query optimization working as a coherent whole.

That mindset shows up clearly in today’s PostgreSQL ecosystem (and many Postgres-inspired systems). Instead of waiting for a core feature, teams can adopt vetted extensions that integrate cleanly with SQL and operational tooling.

Common high-level examples include:

The key is that Postgres treated “changing what the database can do” as a design goal—not an afterthought—and that idea still influences how modern data platforms evolve.
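One way to picture extensibility is a registry: the engine looks up types and functions by name instead of hard-coding them, so an extension can add new ones that flow through the same code paths as built-ins. This is a deliberately tiny Python illustration; `TinyEngine`, `register_type`, and the point extension are hypothetical names. Real PostgreSQL extensions are typically written against its C API and wired in with SQL commands such as `CREATE TYPE` and `CREATE FUNCTION`.

```python
from typing import Any, Callable, Dict, Tuple


class TinyEngine:
    """Hypothetical engine that looks up types and functions in registries
    instead of hard-coding them, so extensions can add new ones."""

    def __init__(self) -> None:
        self.types: Dict[str, Callable[[str], Any]] = {"int": int, "text": str}
        self.functions: Dict[str, Callable[..., Any]] = {"upper": str.upper}

    def register_type(self, name: str, parser: Callable[[str], Any]) -> None:
        # Refuse to silently replace built-ins or another extension's type.
        if name in self.types:
            raise ValueError(f"type {name!r} already exists")
        self.types[name] = parser

    def register_function(self, name: str, fn: Callable[..., Any]) -> None:
        if name in self.functions:
            raise ValueError(f"function {name!r} already exists")
        self.functions[name] = fn

    def cast(self, literal: str, type_name: str) -> Any:
        # Built-in and extension types go through the same parsing path.
        return self.types[type_name](literal)

    def call(self, fn_name: str, *args: Any) -> Any:
        return self.functions[fn_name](*args)


# A hypothetical "extension": a 2-D point type plus a distance function.
def parse_point(literal: str) -> Tuple[float, float]:
    x, y = literal.strip("()").split(",")
    return (float(x), float(y))


def distance(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    return ((a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2) ** 0.5


engine = TinyEngine()
engine.register_type("point", parse_point)
engine.register_function("distance", distance)

p = engine.cast("(0,0)", "point")
q = engine.cast("(3,4)", "point")
print(engine.call("distance", p, q))  # 5.0
```

Rejecting duplicate names is one small example of extending “in a controlled way”: new capabilities are added, but existing behavior cannot be silently overwritten.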

Databases aren’t just about storing information—they’re about making sure the information stays right, even when many things happen at once. That’s what transactions and concurrency control are for, and it’s a major reason SQL systems became trusted for real business work.

A transaction is a group of changes that must succeed or fail as a unit.

If you transfer money between accounts, place an order, or update inventory, you can’t afford “half-finished” results. A transaction ensures you don’t end up with an order that charged a customer but didn’t reserve stock—or stock that was reduced without an order being recorded.
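A quick way to see atomicity in action is Python’s built-in `sqlite3` module. In this sketch (the table and function names are illustrative), the transfer credits the receiver before debiting the sender, so when the debit violates a `CHECK` constraint, the rollback visibly undoes the credit as well.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    " id TEXT PRIMARY KEY,"
    " balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()


def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int) -> bool:
    """Move `amount` from src to dst as one unit; return True if it committed."""
    try:
        # The connection context manager commits on success, rolls back on error.
        with conn:
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        # The CHECK constraint rejected the debit; the credit above was undone too.
        return False


def balances(conn: sqlite3.Connection) -> dict:
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))


print(transfer(conn, "alice", "bob", 30), balances(conn))   # True {'alice': 70, 'bob': 80}
print(transfer(conn, "alice", "bob", 500), balances(conn))  # False {'alice': 70, 'bob': 80}
```

The failed transfer leaves no trace: Bob is not credited 500 for money Alice never had.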

In practical terms, transactions give you:

Concurrency means many people (and apps) reading and changing data at the same time: customer checkouts, support agents editing accounts, background jobs updating statuses, analysts running reports.

Without careful rules, concurrency creates problems like:

One influential approach is MVCC (Multi-Version Concurrency Control). Conceptually, MVCC keeps multiple versions of a row for a short time, so readers can keep reading a stable snapshot while writers are making updates.

The big benefit is that reads don’t block writes as often, and writers don’t constantly stall behind long-running queries. You still get correctness, but with less waiting.
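The snapshot idea can be sketched in a few lines of Python. This is a toy model under strong simplifying assumptions (single process, no write-write conflict detection, no cleanup of old versions), not how PostgreSQL or any other engine implements MVCC; `MVCCStore` and its methods are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Optional, Set


@dataclass
class Version:
    value: str
    created_by: int  # id of the transaction that wrote this version


@dataclass
class MVCCStore:
    """Toy multi-version store: writes append versions instead of overwriting."""
    versions: Dict[str, List[Version]] = field(default_factory=dict)
    committed: Set[int] = field(default_factory=set)
    next_txn: int = 1

    def begin(self) -> int:
        txn = self.next_txn
        self.next_txn += 1
        return txn

    def snapshot(self) -> FrozenSet[int]:
        # A reader sees exactly the transactions committed when it started.
        return frozenset(self.committed)

    def write(self, txn: int, key: str, value: str) -> None:
        self.versions.setdefault(key, []).append(Version(value, txn))

    def commit(self, txn: int) -> None:
        self.committed.add(txn)

    def read(self, snap: FrozenSet[int], key: str) -> Optional[str]:
        # Newest version visible in the snapshot wins. Versions from
        # uncommitted or later transactions are skipped, not waited on.
        for version in reversed(self.versions.get(key, [])):
            if version.created_by in snap:
                return version.value
        return None


store = MVCCStore()
t1 = store.begin()
store.write(t1, "order:1", "pending")
store.commit(t1)

report = store.snapshot()              # a long-running report starts here
t2 = store.begin()
store.write(t2, "order:1", "shipped")  # the writer does not wait for the report
store.commit(t2)

print(store.read(report, "order:1"))            # pending (stable snapshot)
print(store.read(store.snapshot(), "order:1"))  # shipped (new snapshot)
```

Neither side blocks the other: the report keeps a consistent view, and the writer proceeds immediately. The cost is keeping old versions around until no snapshot needs them.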

Today’s databases often serve mixed workloads: high-volume app writes plus frequent reads for dashboards, customer views, and operational analytics. Modern SQL systems lean on techniques like MVCC, smarter locking, and isolation levels to balance speed with correctness—so you can scale activity without trading away trust in the data.

Row-oriented databases were built for transaction processing: lots of small reads and writes, typically touching one customer, one order, one account at a time. That design is great when you need to fetch or update an entire record quickly.

Think of a spreadsheet. A row store is like filing each row as its own folder: when you need “everything about Order #123,” you pull one folder and you’re done. A column store is like filing by column: one drawer for “order_total,” another for “order_date,” another for “customer_region.”

For analytics, you rarely need the whole folder—you’re usually asking questions like “What was total revenue by region last quarter?” That query might touch only a few fields across millions of records.

Analytics queries often:

With columnar storage, the engine can read only the columns referenced in the query, skipping the rest. Less data read from disk (and less moved through memory) is often the biggest performance win.
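Here is a small Python sketch of the “folders vs. drawers” picture, reusing the field names from the analogy with made-up data. Python lists stand in for on-disk pages, so this illustrates the access pattern rather than real performance.

```python
from typing import Dict, List

# Illustrative orders stored row-wise: one record ("folder") per order.
rows: List[dict] = [
    {"order_id": 1, "order_date": "2025-01-05", "customer_region": "west", "order_total": 120.0},
    {"order_id": 2, "order_date": "2025-01-06", "customer_region": "east", "order_total": 80.0},
    {"order_id": 3, "order_date": "2025-01-07", "customer_region": "west", "order_total": 45.0},
]

# The same data stored column-wise: one list ("drawer") per column.
columns: Dict[str, list] = {name: [r[name] for r in rows] for name in rows[0]}


def revenue_by_region_rows(rows: List[dict]) -> Dict[str, float]:
    # Row layout: a real row store would fetch every whole record,
    # including order_id and order_date, which this query never uses.
    totals: Dict[str, float] = {}
    for r in rows:
        region = r["customer_region"]
        totals[region] = totals.get(region, 0.0) + r["order_total"]
    return totals


def revenue_by_region_columns(columns: Dict[str, list]) -> Dict[str, float]:
    # Column layout: only the two referenced columns are touched.
    totals: Dict[str, float] = {}
    for region, total in zip(columns["customer_region"], columns["order_total"]):
        totals[region] = totals.get(region, 0.0) + total
    return totals


print(revenue_by_region_columns(columns))  # {'west': 165.0, 'east': 80.0}
```

Both layouts give the same answer; the difference is how much unrelated data must be read to get it, which grows with the number of columns the query ignores.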

Columns tend to have repetitive values (regions, statuses, categories). That makes them highly compressible—and compression can speed analytics because the system reads fewer bytes and can sometimes operate on compressed data more efficiently.
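Run-length encoding (RLE) is one of the simplest compression schemes a column store can apply to repetitive values. This Python sketch is illustrative only; real engines typically offer several encodings and pick one per column. It also shows “operating on compressed data”: the count needs one comparison per run, not one per row.

```python
from itertools import groupby
from typing import List, Tuple


def rle_encode(values: List[str]) -> List[Tuple[str, int]]:
    # Collapse consecutive repeats into (value, run_length) pairs.
    return [(value, len(list(group))) for value, group in groupby(values)]


def count_matching(encoded: List[Tuple[str, int]], target: str) -> int:
    # Work directly on the compressed form: one comparison per run.
    return sum(run_length for value, run_length in encoded if value == target)


status = ["shipped"] * 4 + ["pending"] * 2 + ["shipped"] * 3
encoded = rle_encode(status)
print(encoded)                             # [('shipped', 4), ('pending', 2), ('shipped', 3)]
print(count_matching(encoded, "shipped"))  # 7
```

RLE pays off most when equal values sit next to each other, which is one reason column stores often sort data on low-cardinality columns.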

Column stores helped mark the move from OLTP-first databases toward analytics-first engines, where scanning, compression, and fast aggregates became primary design goals rather than afterthoughts.

Vertica is one of the clearest “real-world” examples of how Stonebraker’s ideas about analytics databases turned into a product teams could run in production. It took lessons from columnar storage and paired them with a distributed design aimed at a specific problem: answering big analytical SQL queries fast, even when data volumes grow beyond a single server.

MPP stands for massively parallel processing. The simplest way to think about it is: many machines work on one SQL query at the same time.

Instead of one database server reading all the data and doing all the grouping and sorting, the data is split across nodes. Each node processes its slice in parallel, and the system combines the partial results into a final answer.

This is how a query that would take minutes on one box can drop to seconds when spread across a cluster—assuming the data is distributed well and the query can be parallelized.
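The split, process-in-parallel, combine flow can be sketched in Python. Threads stand in for cluster nodes, so this shows the shape of the computation (partial aggregates merged by a coordinator), not a real speedup. The function names and the round-robin distribution are illustrative assumptions.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

Row = Tuple[str, float]  # (region, amount)


def partition(rows: List[Row], num_nodes: int) -> List[List[Row]]:
    # Distribute rows across "nodes" round-robin (real systems often hash a key).
    slices: List[List[Row]] = [[] for _ in range(num_nodes)]
    for i, row in enumerate(rows):
        slices[i % num_nodes].append(row)
    return slices


def local_aggregate(slice_: List[Row]) -> Counter:
    # Each node computes a partial SUM(amount) GROUP BY region over its slice.
    partial: Counter = Counter()
    for region, amount in slice_:
        partial[region] += amount
    return partial


def mpp_sum_by_region(rows: List[Row], num_nodes: int = 4) -> Dict[str, float]:
    slices = partition(rows, num_nodes)
    # Threads stand in for separate machines working at the same time.
    with ThreadPoolExecutor(max_workers=num_nodes) as pool:
        partials = list(pool.map(local_aggregate, slices))
    # Coordinator step: merge the partial results into the final answer.
    final: Counter = Counter()
    for partial in partials:
        final.update(partial)
    return dict(final)


sales = [("west", 10.0), ("east", 5.0), ("west", 2.5), ("north", 1.0), ("east", 4.0)]
print(mpp_sum_by_region(sales, num_nodes=2))
```

This works because SUM can be split into partial sums and recombined. Aggregates that cannot be decomposed this way (for example, an exact median) need more data movement between nodes.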

Vertica-style MPP analytics systems shine when you have lots of rows and want to scan, filter, and aggregate them efficiently. Typical use cases include:

MPP analytics engines are not a drop-in replacement for transactional (OLTP) systems. They’re optimized for reading many rows and computing summaries, not for handling lots of small updates.

That leads to common trade-offs:

The key idea is focus: Vertica and similar systems earn their speed by tuning storage, compression, and parallel execution for analytics—then accepting constraints that transactional systems are designed to avoid.

A database can “store and query” data and still feel slow for analytics. The difference is often not the SQL you write, but how the engine executes it: how it reads pages, moves data through the CPU, uses memory, and minimizes wasted work.

Stonebraker’s analytics-focused projects pushed the idea that query performance is an execution problem as much as a storage problem. This thinking helped shift teams from optimizing single-row lookups to optimizing long scans, joins, and aggregations over millions (or billions) of rows.

Many older engines process queries “tuple-at-a-time” (row-by-row), which creates lots of function calls and overhead. Vectorized execution flips that model: the engine processes a batch (a vector) of values in a tight loop.

In plain terms, it’s like moving groceries with a cart instead of carrying one item per trip. Batching reduces overhead and lets modern CPUs do what they’re good at: predictable loops, fewer branches, and better cache use.
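The difference is easiest to see as two tiny operator pipelines (scan, then filter, then sum). Pure Python won’t show real CPU-cache effects, so treat this as a sketch of the control flow only; the function names and the default batch size of 1,024 are illustrative choices.

```python
from typing import Iterator, List


# Tuple-at-a-time: each operator hands over one value per call.
def scan_rows(column: List[float]) -> Iterator[float]:
    for value in column:
        yield value


def filter_rows(child: Iterator[float], threshold: float) -> Iterator[float]:
    for value in child:
        if value > threshold:
            yield value


# Vectorized: each operator hands over a whole batch per call.
def scan_batches(column: List[float], batch_size: int) -> Iterator[List[float]]:
    for start in range(0, len(column), batch_size):
        yield column[start:start + batch_size]


def filter_batches(child: Iterator[List[float]], threshold: float) -> Iterator[List[float]]:
    for batch in child:
        # One tight loop over the batch instead of one hand-off per value.
        yield [value for value in batch if value > threshold]


def sum_tuple_at_a_time(column: List[float], threshold: float) -> float:
    return sum(filter_rows(scan_rows(column), threshold))


def sum_vectorized(column: List[float], threshold: float, batch_size: int = 1024) -> float:
    batches = filter_batches(scan_batches(column, batch_size), threshold)
    return sum(sum(batch) for batch in batches)


values = [float(i) for i in range(10)]
print(sum_tuple_at_a_time(values, 4.5), sum_vectorized(values, 4.5, batch_size=4))  # 35.0 35.0
```

Both pipelines compute the same result. With a million values and batches of 1,024, the vectorized version makes roughly a thousand hand-offs between operators instead of roughly a million, which is where the per-call overhead savings come from.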

Fast analytics engines are obsessed with staying CPU- and cache-efficient. Execution innovations commonly focus on:

These ideas matter because analytics queries are often limited by memory bandwidth and cache misses, not by raw disk speed.

Modern data warehouses and SQL engines—cloud warehouses, MPP systems, and fast in-process analytics tools—frequently use vectorized execution, compression-aware operators, and cache-friendly pipelines as standard practice.

Even when vendors market features like “autoscaling” or “separation of storage and compute,” the day-to-day speed you feel still depends heavily on these execution choices.

If you’re evaluating platforms, ask not only what they store, but how they run joins and aggregates under the hood—and whether their execution model is built for analytics rather than transactional workloads.

Streaming data is simply data that arrives continuously as a sequence of events—think “a new thing just happened” messages. A credit-card swipe, a sensor reading, a click on a product page, a package scan, a log line: each one shows up in real time and keeps coming.

Traditional databases and batch pipelines are great when you can wait: load yesterday’s data, run reports, publish dashboards. But real-time needs don’t wait for the next hourly job.

If you only process data in batches, you often end up with:

Streaming systems are designed around the idea that computations can run continuously as events arrive.

A continuous query is like a SQL query that never “finishes.” Instead of returning a result once, it updates the result as new events come in.

Because streams are unbounded (they don’t end), streaming systems use windows to make calculations manageable. A window is a slice of time or events, such as “the last 5 minutes,” “each minute,” or “the last 1,000 events.” This lets you compute rolling counts, averages, or top-N lists without needing to reprocess everything.
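A windowed continuous query can be sketched as a loop that updates its state on every event and emits a result whenever a window closes. This Python version assumes one-minute tumbling (non-overlapping) windows and events that arrive in timestamp order; the names and event format are hypothetical, and production stream processors must also deal with late or out-of-order events.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Optional, Tuple

Event = Tuple[int, str]  # (timestamp in seconds, event type)


def tumbling_counts(
    events: Iterable[Event], window_seconds: int = 60
) -> Iterator[Tuple[int, Dict[str, int]]]:
    """Count events per type in fixed windows, yielding (window_start, counts)
    each time a window closes. Assumes events arrive in timestamp order."""
    current_window: Optional[int] = None
    counts: Dict[str, int] = defaultdict(int)
    for ts, kind in events:
        window_start = ts - ts % window_seconds
        if current_window is not None and window_start != current_window:
            yield current_window, dict(counts)  # emit the finished window
            counts = defaultdict(int)
        current_window = window_start
        counts[kind] += 1  # incremental update; nothing is reprocessed
    if current_window is not None:
        # Only reached because this demo stream is finite.
        yield current_window, dict(counts)


clicks = [(5, "click"), (30, "view"), (59, "click"), (61, "click"), (130, "view")]
for start, counts in tumbling_counts(clicks):
    print(start, counts)
# 0 {'click': 2, 'view': 1}
# 60 {'click': 1}
# 120 {'view': 1}
```

The state held at any moment is just the current window’s counts, which is what keeps a computation over an unbounded stream bounded.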

Real-time streaming is most valuable when timing matters:

Stonebraker has argued for decades that databases shouldn’t all be built as general-purpose “do everything” machines. The reason is simple: different workloads reward different design choices. If you optimize hard for one job (say, tiny transactional updates), you usually make another job slower (like scanning billions of rows for a report).

Most modern stacks use more than one data system because the business asks for more than one kind of answer:

That’s “one size doesn’t fit all” in practice: you pick engines that match the shape of the work.

Use this quick filter when choosing (or justifying) another system:

Multiple engines can be healthy, but only when each one has a clear workload. A new tool should earn its place by cutting cost, latency, or risk—not by adding novelty.

Prefer fewer systems with strong operational ownership, and retire components that don’t have a crisp, measurable purpose.

Stonebraker’s research threads—relational foundations, extensibility, column stores, MPP execution, and “right tool for the job”—are visible in the default shapes of modern data platforms.

The warehouse reflects decades of work on SQL optimization, columnar storage, and parallel execution. When you see fast dashboards on huge tables, you’re often seeing column-oriented formats plus vectorized processing and MPP-style scaling.

The lakehouse borrows warehouse ideas (schemas, statistics, caching, cost-based optimization) but places them on open file formats and object storage. The “storage is cheap, compute is elastic” shift is new; the query and transaction thinking underneath is not.

MPP analytics systems (shared-nothing clusters) are direct descendants of research that proved you can scale SQL by partitioning data, moving computation to data, and carefully managing data movement during joins and aggregations.
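To see why data movement during joins matters, here is a Python sketch of a repartition (“shuffle”) join: both tables are hash-partitioned on the join key so that matching rows meet on the same node, and each node then joins its slice locally. Lists stand in for nodes and the function names are illustrative; real MPP engines also consider alternatives such as broadcasting a small table to every node.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def hash_partition(rows: List[tuple], key_index: int, num_nodes: int) -> List[List[tuple]]:
    # Send each row to the node that "owns" its join key. Rows with equal
    # keys always land on the same node, so each node can join locally.
    nodes: List[List[tuple]] = [[] for _ in range(num_nodes)]
    for row in rows:
        nodes[hash(row[key_index]) % num_nodes].append(row)
    return nodes


def local_hash_join(left: List[tuple], right: List[tuple]) -> List[tuple]:
    # Classic hash join on the first column of each side.
    table: Dict[object, List[tuple]] = defaultdict(list)
    for row in left:
        table[row[0]].append(row)
    return [l + r[1:] for r in right for l in table.get(r[0], [])]


def distributed_join(left: List[tuple], right: List[tuple], num_nodes: int = 3) -> List[tuple]:
    # The shuffle: this repartitioning is the network traffic in a real cluster.
    left_parts = hash_partition(left, 0, num_nodes)
    right_parts = hash_partition(right, 0, num_nodes)
    result: List[tuple] = []
    for l_part, r_part in zip(left_parts, right_parts):
        result.extend(local_hash_join(l_part, r_part))  # independent per node
    return result


customers = [(1, "Ada"), (2, "Grace"), (3, "Edsger")]
orders = [(1, 120.0), (3, 45.0), (1, 30.0)]
print(sorted(distributed_join(customers, orders)))
# [(1, 'Ada', 30.0), (1, 'Ada', 120.0), (3, 'Edsger', 45.0)]
```

If both tables were already distributed on the join key, the shuffle step could be skipped entirely, which is why choosing distribution keys matters so much in MPP systems.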

SQL has become the common interface across warehouses, MPP engines, and even “lake” query layers. Teams rely on it as:

Even when execution happens in different engines (batch, interactive, streaming), SQL often remains the user-facing language.

Flexible storage doesn’t eliminate the need for structure. Clear schemas, documented meaning, and controlled evolution reduce downstream breakage.

Good governance is less about bureaucracy and more about making data reliable: consistent definitions, ownership, quality checks, and access controls.

When evaluating platforms, ask:

If a vendor can’t map their product to these basics in plain language, the “innovation” might be mostly packaging.

Stonebraker’s through-line is simple: databases work best when they’re designed for a specific job—and when they can evolve as that job changes.

Before comparing features, write down what you actually need to do:

A useful rule: if you can’t describe your workload in a few sentences (query patterns, data size, latency needs, concurrency), you’ll end up shopping by buzzwords.

Teams underestimate how often requirements shift: new data types, new metrics, new compliance rules, new consumers.

Favor platforms and data models that make change routine rather than risky:

Fast answers are only valuable if they’re the right answers. When evaluating options, ask how the system handles:

Run a small “proof with your data,” not just a demo:

A lot of database guidance stops at “pick the right engine,” but teams also have to ship apps and internal tools around that engine: admin panels, metrics dashboards, ingestion services, and back-office workflows.

If you want to prototype these quickly without reinventing your whole pipeline, a vibe-coding platform like Koder.ai can help you spin up web apps (React), backend services (Go + PostgreSQL), and even mobile clients (Flutter) from a chat-driven workflow. That’s often useful when you’re iterating on schema design, building a small internal “data product,” or validating how a workload actually behaves before committing to long-term infrastructure.

If you want to go deeper, look up columnar storage, MVCC, MPP execution, and stream processing. More explainers live in /blog.

His work is a rare case of research systems becoming real product DNA. Ideas proven in Ingres (declarative querying + query optimization), Postgres (extensibility + MVCC thinking), and Vertica (columnar + MPP analytics) show up today in how warehouses, OLTP databases, and streaming platforms are built and marketed.

SQL won because it lets you describe what you want, while the database figures out how to get it efficiently. That separation enabled:

A cost-based optimizer uses table statistics to compare possible query plans and pick the one with the lowest expected cost (I/O, CPU, memory). Practically, it helps you:

MVCC (Multi-Version Concurrency Control) keeps multiple versions of rows so readers can see a consistent snapshot while writers update. In day-to-day terms:

Extensibility means the database can safely grow new capabilities—types, functions, indexes—without you forking or rewriting the engine. It’s useful when you need to:

The operational rule: treat extensions like dependencies—version them, test upgrades, and limit who can install them.

Row stores are great when you often read or write whole records (OLTP). Column stores shine when you scan many rows but touch a few fields (analytics).

A simple heuristic:

MPP (massively parallel processing) splits data across nodes so many machines execute one SQL query together. It’s a strong fit for:

Watch for trade-offs like data distribution choices, shuffle costs during joins, and weaker ergonomics for high-frequency single-row updates.

Vectorized execution processes data in batches (vectors) instead of one row at a time, reducing overhead and using CPU caches better. You’ll usually notice it as:

Batch systems run jobs periodically, so “fresh” data can lag. Streaming systems treat events as continuous input and compute results incrementally.

Common places streaming pays off:

To keep computations bounded, streaming uses windows (e.g., last 5 minutes) rather than “all time.”

Use multiple systems when each has a clear workload boundary and measurable benefit (cost, latency, reliability). To avoid sprawl:

If you need a selection framework, reuse the checklist mindset described in the post and related pieces in /blog.