Sep 23, 2025·8 min

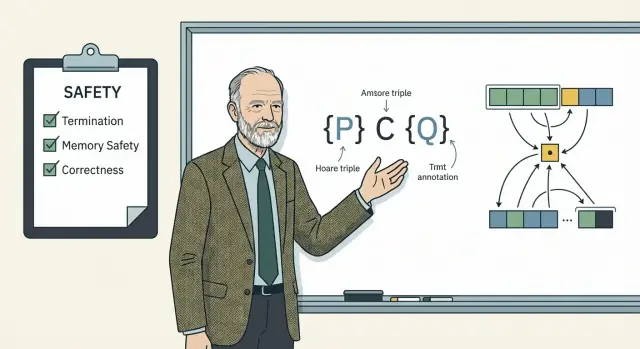

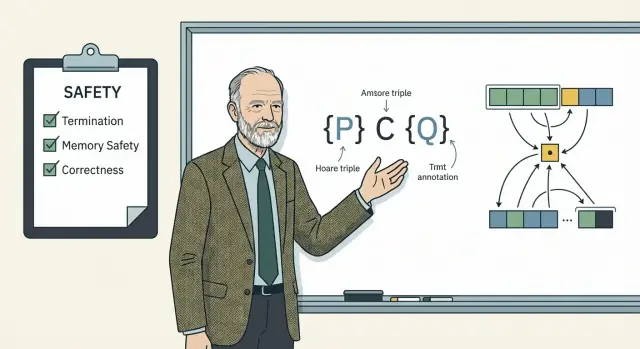

Tony Hoare’s Correctness Ideas: From Logic to Safe Code

Learn how Tony Hoare’s work on Hoare logic, Quicksort, and safety thinking shaped practical techniques for writing and reviewing correct software.

Learn how Tony Hoare’s work on Hoare logic, Quicksort, and safety thinking shaped practical techniques for writing and reviewing correct software.

When people say a program is “correct,” they often mean: “I ran it a few times and the output looked right.” That’s a useful signal—but it’s not correctness. In plain terms, correctness means the program meets its specification: for every allowed input, it produces the required result and respects any rules about state changes, timing, and errors.

The catch is that “meets its spec” is harder than it sounds.

First, specifications are often ambiguous. A product requirement might say “sort the list,” but does that mean stable sorting? What about duplicate values, empty lists, or non-numeric items? If the spec doesn’t say, different people will assume different answers.

Second, edge cases aren’t rare—they’re just less frequently tested. Null values, overflow, off-by-one boundaries, unusual user sequences, and unexpected external failures can turn “it seems to work” into “it failed in production.”

Third, requirements change. A program can be correct relative to yesterday’s spec and incorrect relative to today’s.

Tony Hoare’s big contribution wasn’t the claim that we should prove everything all the time. It was the idea that we can be more precise about what code is supposed to do—and reason about it in a disciplined way.

In this post, we’ll follow three connected threads:

Most teams won’t write full formal proofs. But even partial, “proof-style” thinking can make bugs easier to spot, reviews sharper, and behavior clearer before code ships.

Tony Hoare is one of those rare computer scientists whose work didn’t stay in papers or classrooms. He moved between academia and industry, and he cared about a practical question that every team still faces: how do we know a program does what we think it does—especially when the stakes are high?

This article focuses on a few Hoare ideas that keep showing up in real codebases:

{P} C {Q}.You won’t find deep mathematical formalism here, and we won’t attempt a complete, machine-checkable proof of Quicksort. The goal is to keep the concepts approachable: enough structure to make your reasoning clearer, without turning your code review into a graduate seminar.

Hoare’s ideas translate into ordinary decisions: what assumptions a function relies on, what it guarantees to callers, what must stay true halfway through a loop, and how to spot “almost correct” changes during reviews. Even when you never write {P} C {Q} explicitly, thinking in that shape improves APIs, tests, and the quality of discussions about tricky code.

Hoare’s view is stricter than “it passed a few examples”: correctness is about meeting an agreed promise, not about looking right on a small sample.

Bugs often happen when teams skip the middle step: they jump from requirements straight to code, leaving the “promise” fuzzy.

Two different claims frequently get mixed together:

For real systems, “never finishing” can be as harmful as “finishing with the wrong answer.”

Correctness statements are never universal; they rely on assumptions about:

Being explicit about assumptions turns “works on my machine” into something others can reason about.

Consider a function sortedCopy(xs).

A useful spec could be: “Returns a new list ys such that (1) ys is sorted ascending, and (2) ys contains exactly the same elements as xs (same counts), and (3) xs is unchanged.”

Now “correct” means the code satisfies those three points under the stated assumptions—not just that the output looks sorted in a quick test.

Hoare logic is a way to talk about code with the same clarity you’d use to talk about a contract: if you start in a state that satisfies certain assumptions, and you run this piece of code, you’ll end in a state that satisfies certain guarantees.

The core notation is the Hoare triple:

{precondition} program {postcondition}

A precondition states what must be true before the program fragment runs. This isn’t about what you hope is true; it’s what the code needs to be true.

Example: suppose a function returns the average of two numbers without overflow checks.

a + b fits in the integer typeavg = (a + b) / 2avg equals the mathematical average of a and bIf the precondition doesn’t hold (overflow is possible), the postcondition promise no longer applies. The triple forces you to say that out loud.

A postcondition states what will be true after the code runs—assuming the precondition was met. Good postconditions are concrete and checkable. Instead of “result is valid,” say what “valid” means: sorted, non-negative, within bounds, unchanged except for specific fields, etc.

Hoare logic scales from tiny statements to multi-step code:

x = x + 1, what facts about x are now true?The point isn’t to sprinkle curly braces everywhere. It’s to make intent readable: clear assumptions, clear outcomes, and fewer “it seems to work” conversations in reviews.

A loop invariant is a statement that is true before the loop starts, remains true after every iteration, and is still true when the loop finishes. It’s a simple idea with a big payoff: it replaces “it seems to work” with a claim you can actually check at each step.

Without an invariant, a review often sounds like: “We iterate over the list and gradually fix things.” An invariant forces precision: what exactly is already correct right now, even though the loop isn’t done? Once you can say that clearly, off-by-one errors and missing cases become easier to spot, because they show up as moments where the invariant would be broken.

Most day-to-day code can use a few reliable templates.

1) Bounds / index safety

Keep indices in a safe range.

0 <= i <= nlow <= left <= right <= highThis type of invariant is great for preventing out-of-range access and for making array reasoning concrete.

2) Processed vs. unprocessed items

Split your data into a “done” region and a “not yet” region.

a[0..i) have been examined.”result satisfies the filter predicate.”This turns vague progress into a clear contract about what “processed” means.

3) Sorted prefix (or partitioned prefix)

Common in sorting, merging, and partitioning.

a[0..i) is sorted.”a[0..i) are <= pivot, and all items in a[j..n) are >= pivot.”Even if the full array isn’t sorted yet, you’ve pinned down what is.

Correctness isn’t just about being right; the loop must also finish. A simple way to argue that is to name a measure (often called a variant) that decreases each iteration and can’t decrease forever.

Examples:

n - i shrinks by 1 each time.”If you can’t find a shrinking measure, you may have discovered a real risk: an infinite loop on some inputs.

Quicksort has a simple promise: given a slice (or array segment), rearrange its elements so they end up in non-decreasing order, without losing or inventing any values. The algorithm’s high-level shape is easy to summarize:

It’s a great teaching example for correctness because it’s small enough to hold in your head, but rich enough to show where informal reasoning fails. A Quicksort that “seems to work” on a few random tests can still be wrong in ways that only show up under specific inputs or boundary conditions.

A few issues cause most bugs:

To argue correctness in a Hoare-style way, you typically separate the proof into two parts:

This separation keeps the reasoning manageable: get partition right, then build sorting correctness on top of it.

Quicksort’s speed depends on one deceptively small routine: partition. If partition is even slightly wrong, Quicksort can mis-sort, loop forever, or crash on edge cases.

We’ll use the classic Hoare partition scheme (two pointers moving inward).

Input: an array slice A[lo..hi] and a chosen pivot value (often A[lo]).

Output: an index p such that:

A[lo..p] is <= pivotA[p+1..hi] is >= pivotNotice what’s not promised: the pivot doesn’t necessarily end up at position p, and elements equal to the pivot may appear on either side. That’s okay—Quicksort only needs a correct split.

As the algorithm advances two indices—i from the left, j from the right—good reasoning focuses on what is already “locked in.” A practical set of invariants is:

A[lo..i-1] are <= pivot (left side is clean)A[j+1..hi] are >= pivot (right side is clean)A[i..j] is unclassified (still to be checked)When we find A[i] >= pivot and A[j] <= pivot, swapping them preserves those invariants and shrinks the unclassified middle.

i runs to the right; partition must still terminate and return a sensible p.j runs to the left; same termination concern.< vs <=), pointers can stall. Hoare’s scheme relies on a consistent rule so progress continues.Different partition schemes exist (Lomuto, Hoare, three-way partitioning). The key is to pick one, state its contract, and review the code against that contract consistently.

Recursion is easiest to trust when you can answer two questions clearly: when does it stop? and why is each step valid? Hoare-style thinking helps because it forces you to state what must be true before a call, and what will be true after it returns.

A recursive function needs at least one base case where it does no further recursive calls and still satisfies the promised result.

For sorting, a typical base case is “arrays of length 0 or 1 are already sorted.” Here, “sorted” should be explicit: for an ordering relation ≤, the output array is sorted if for every index i < j, we have a[i] ≤ a[j]. (Whether equal elements keep their original order is a separate property called stability; Quicksort is not usually stable unless you design it to be.)

Every recursive step should call itself on a strictly smaller input. This “shrinking” is your termination argument: if the size decreases and cannot go below 0, you can’t recurse forever.

Shrinking also matters for stack safety. Even correct code can crash if recursion depth gets too large. In Quicksort, unbalanced partitions can produce deep recursion. That’s a termination-proof plus a practical reminder to consider worst-case depth.

Quicksort’s worst-case time can degrade to O(n²) when partitions are very unbalanced, but that’s a performance concern—not a correctness failure. The reasoning goal here is: assuming the partition step preserves elements and splits them according to the pivot, recursive sorting of the smaller parts implies the whole array meets the definition of sortedness.

Testing and proof-style reasoning aim at the same goal—confidence—but they get there differently.

Tests are excellent at catching concrete mistakes: an off-by-one, a missing edge case, a regression. But a test suite can only sample the input space. Even “100% coverage” doesn’t mean “all behaviors checked”; it mostly means “all lines executed.”

Proof-style thinking (Hoare-style reasoning in particular) starts from a specification and asks: if these preconditions hold, does the code always establish the postconditions? When you do that well, you don’t just find a bug—you can often eliminate an entire category of bugs (like “array access stays in bounds” or “the loop never breaks the partition property”).

A clear spec is a test generator.

If your postcondition says “output is sorted and is a permutation of the input,” you automatically get test ideas:

The spec tells you what “correct” means, and the tests check that reality matches it.

Property-based testing sits between proofs and examples. Instead of hand-picking a few cases, you state properties and let a tool generate many inputs.

For sorting, two simple properties go a long way:

These properties are essentially postconditions written as executable checks.

A lightweight routine that scales:

If you want a place to institutionalize this, make “spec + reasoning notes + tests” part of your PR template or code review checklist (see also /blog/code-review-checklist).

If you’re using a vibe-coding workflow (generating code from a chat-based interface), the same discipline applies—arguably more so. In Koder.ai, for example, you can start in Planning Mode to pin down preconditions/postconditions before any code is generated, then iterate with snapshots and rollback while you add property-based tests. The tool speeds up implementation, but the spec is still what keeps “fast” from turning into “fragile.”

Correctness isn’t only about “the program returns the right value.” Safety thinking asks a different question: what outcomes are unacceptable, and how do we prevent them—even when code is stressed, misused, or partially failing? In practice, safety is correctness with a priority system: some failures are merely annoying, others can cause financial loss, privacy breaches, or physical harm.

A bug is a defect in the code or design. A hazard is a situation that can lead to an unacceptable outcome. One bug can be harmless in one context and dangerous in another.

Example: an off-by-one error in a photo gallery might mislabel an image; the same error in a medication dosage calculator could harm a patient. Safety thinking forces you to connect code behavior to consequences, not just to “spec compliance.”

You don’t need heavy formal methods to get immediate safety benefits. Teams can adopt small, repeatable practices:

These techniques pair naturally with Hoare-style reasoning: you make preconditions explicit (what inputs are acceptable) and ensure postconditions include safety properties (what must never happen).

Safety-oriented checks cost something—CPU time, complexity, or occasional false rejections.

Safety thinking is less about proving elegance and more about preventing the failure modes you can’t afford.

Code reviews are where correctness thinking pays off fastest, because you can spot missing assumptions long before bugs reach production. Hoare’s core move—stating what must be true before and what will be true after—translates neatly into review questions.

When you read a change, try framing each key function as a tiny promise:

A simple reviewer habit: if you can’t say the pre/post conditions in one sentence, the code likely needs clearer structure.

For risky or central functions, add a small contract comment right above the signature. Keep it concrete: inputs, outputs, side effects, and errors.

def withdraw(account, amount):

"""Contract:

Pre: amount is an integer > 0; account is active.

Post (success): returns new_balance; account.balance decreased by amount.

Post (failure): raises InsufficientFunds; account.balance unchanged.

"""

...

These comments are not formal proofs, but they give reviewers something precise to check against.

Be extra explicit when reviewing code that handles:

If the change touches any of these, ask: “What are the preconditions, and where are they enforced?” and “What guarantees do we provide even when something fails?”

Formal reasoning doesn’t have to mean turning your whole codebase into a math paper. The goal is to spend extra certainty where it pays off: places where “looks fine in tests” isn’t enough.

They’re a strong fit when you have a small, critical module that everything else depends on (auth, payment rules, permissions, safety interlocks), or a tricky algorithm where off-by-one mistakes hide for months (parsers, schedulers, caching/eviction, concurrency primitives, partition-style code, boundary-heavy data transformations).

A useful rule: if a bug can cause real harm, large financial loss, or silent data corruption, you want more than ordinary review + tests.

You can choose from “lightweight” to “heavyweight,” and often the best results come from combining them:

Decide the depth of formality by weighing:

In practice, you can also treat “formality” as something you incrementally add: start with explicit contracts and invariants, then let automation keep you honest. For teams building quickly on Koder.ai—where generating a React front end, a Go backend, and Postgres schema can happen in a tight loop—snapshots/rollback and source code export make it easier to iterate fast while still enforcing contracts via tests and static analysis in your usual CI.

Use this as a quick “should we formalize more?” gate in planning or code review:

Further reading topics: design-by-contract, property-based testing, model checking for state machines, static analyzers for your language, and introductory material on proof assistants and formal specification.

Correctness means the program satisfies an agreed specification: for every allowed input and relevant system state, it produces the required outputs and side effects (and handles errors as promised). “It seems to work” usually means you only checked a few examples, not the whole input space or the tricky boundary conditions.

Requirements are the business goal (“sort the list for display”). A specification is the precise, checkable promise (“returns a new list sorted ascending, same multiset of elements, input unchanged”). The implementation is the code. Bugs often happen when teams jump straight from requirements to implementation and never write down the checkable promise.

Partial correctness: if the code returns, the result is correct. Total correctness: the code returns and the result is correct—so termination is part of the claim.

In practice, total correctness matters whenever “hanging forever” is a user-visible failure, a resource leak, or a safety risk.

A Hoare triple {P} C {Q} reads like a contract:

P (precondition): what must be true before running CC: the code fragmentPreconditions are what the code needs (e.g., “indices are in range”, “elements are comparable”, “lock is held”). If a precondition can be violated by callers, either:

Otherwise, your postconditions become wishful thinking.

A loop invariant is a statement that is true before the loop starts, stays true after every iteration, and is still true when the loop ends. Useful templates include:

0 <= i <= n)If you can’t articulate an invariant, it’s a sign the loop is doing too many things at once or the boundaries are unclear.

You typically name a measure (variant) that decreases each iteration and can’t decrease forever, such as:

n - i shrinking by 1If you can’t find a decreasing measure, you may have discovered a real non-termination risk (especially with duplicates or stalled pointers).

In Quicksort, partition is the small routine everything depends on. If partition is slightly wrong, you can get:

That’s why it helps to state partition’s contract explicitly: what must be true on the left side, on the right side, and that elements are only rearranged (a permutation).

Duplicates and “equal to pivot” handling are common failure points. Practical rules:

i/j)If duplicates are frequent, consider three-way partitioning to reduce both bugs and recursion depth.

Testing samples behaviors; reasoning can rule out whole classes of bugs (bounds safety, preservation of invariants, termination). A practical hybrid workflow is:

For sorting, two high-value properties are:

Q (postcondition): what will be true after C finishes, assuming P heldYou don’t have to write the notation in code—using the structure in reviews (“assumptions in, guarantees out”) is the practical win.