Sep 14, 2025·7 min

Trustworthy product reviews: moderation, photo proof, and incentives

Build trustworthy product reviews with clear anti-spam rules, photo proof checks, and transparent incentives that keep shoppers confident.

Why shoppers stop trusting reviews

Most people don't read every review. They scan for a pattern, then decide if that pattern feels honest. When it doesn't, they stop trusting the whole review section, even if many reviews are real.

Fake reviews are often easy to spot. They sound like ads, repeat the same phrases, or promise perfect results with no details. Low-effort reviews do a different kind of damage: one-word ratings, vague praise, and copy-paste comments create noise that buries the useful stories buyers need.

Bias can be just as harmful. If only the happiest customers are prompted to review, or unhappy buyers feel ignored, the page starts to look curated. Shoppers notice when every review is glowing and none mention tradeoffs, shipping, sizing, setup time, or who the product isn't for.

The business impact shows up quickly. Shoppers hesitate or leave to check other sites, returns rise because expectations are wrong, and support teams get flooded with basic questions reviews should have answered. Over time, brand trust erodes, and that's harder to rebuild than a single lost sale.

The goal isn't "more 5-star reviews." The goal is a stronger signal: a mix of experiences, specific details, and clear context. The most believable reviews often include a small negative, a practical tip, and a clear description of how the item was used.

Set expectations internally, too: you won't prevent all abuse. Some spam slips through, and some real reviews look suspicious at first. What you can do is reduce junk, make manipulation harder, and make honest reviews easier to recognize.

A simple example: if a product gets 20 reviews in one afternoon and most say "Amazing!!! Best purchase ever!!!" with no photos and no mention of sizing or delivery, shoppers assume something is off. Even buyers who were ready to purchase may pause, and that pause is where sales are lost.

Treat reviews like a quality system, not a vanity metric. You'll protect trust, lower avoidable returns, and make the product page feel safer to buy from.

What makes a review feel real

A review feels trustworthy when it's specific, lines up with what others report, and seems to come from a real person who could answer a follow-up question. It doesn't need perfect writing. It needs believable detail.

Context is the biggest credibility boost. Real shoppers usually mention what they bought, how they used it, and what happened after a few days or weeks. Even happy customers tend to mention at least one downside.

The strongest signals are simple: proof the person had access (like a verified purchase or active subscription), concrete details (size, color, model, plan level, version), and a clear use case ("I used it for client work" or "I set it up for my team of 5"). Balance matters, too: one clear pro and one clear con is often more convincing than pure praise.

Some reviews look positive but don't build trust because they read like advertising or quota-filling. Common red flags include generic praise with no specifics, repeated copy-paste text across products, extreme wording with no evidence, strange timing bursts (many reviews within minutes), and claims that contradict basics like price, features, or availability.
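The copy-paste red flag is one of the easier ones to automate. Below is a minimal sketch in Python, assuming reviews arrive as a dict of review id to text. It compares word 3-grams ("shingles") using Jaccard similarity and flags pairs above a threshold. The function names and the 0.6 threshold are illustrative assumptions, not tuned values.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set:
    """Return the set of lowercase word k-grams in `text` (punctuation ignored)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Very short reviews: treat the whole text as a single shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Share of shingles two reviews have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def near_duplicate_pairs(reviews: dict, threshold: float = 0.6) -> list:
    """Return (id_a, id_b, score) for every pair at or above `threshold`."""
    sigs = {rid: shingles(text) for rid, text in reviews.items()}
    pairs = []
    for a, b in combinations(sorted(sigs), 2):
        score = jaccard(sigs[a], sigs[b])
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return pairs


reviews = {
    "r1": "Amazing!!! Best purchase ever, changed my life",
    "r2": "amazing best purchase ever... changed my life!!",
    "r3": "Runs a size small and the zipper stuck after two weeks.",
}
print(near_duplicate_pairs(reviews))  # [('r1', 'r2', 1.0)]
```

Comparing every pair is quadratic. That is fine for a daily batch on one product. For catalog-wide checks, MinHash with locality-sensitive hashing is the usual way to scale the same idea.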

What "real" looks like also depends on the product. For physical goods, people talk about fit, materials, and packaging, and they show photos in normal lighting. For subscriptions, they mention billing, cancellation, support, and whether the value held up after a month. For apps (for example, an app builder such as Koder.ai), readers look for workflow details: what the user tried to build, how long it took, what broke, and what they did next.

Anti-spam rules you should write down

A review system is only as believable as its rules. If you want reviews people trust, write an anti-spam policy in plain language and apply it the same way every time.

Start with clear bans for patterns that often signal spam: repeated text across many reviews, copy-paste templates, keyword stuffing (lots of product terms but no real experience), and off-topic rants. Harassment, hate, threats, and personal data (phone numbers, addresses, screenshots showing private info) should be removed fast.

A simple reject checklist helps moderators stay consistent. Reject a review, or send it back for edits, when it has:

- Duplicate or near-duplicate wording across accounts

- No product experience (only shipping complaints or unrelated issues)

- Promotional content (coupon codes, competitor mentions, "DM me" offers)

- Abusive language

- Text that reads like SEO instead of a human
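Parts of that checklist can be pre-screened with simple patterns before a moderator looks. Here is a rough sketch. The two rule names and their regexes are hypothetical and would need tuning on your own data. The function only reports which rules a review trips and leaves the decision to your workflow.

```python
import re

# Hypothetical patterns; expect to tune them against your own queue.
RULES = {
    # Email addresses or phone-number-like digit runs.
    "personal_data": re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+|\+?\d[\d\s().-]{7,}\d"),
    # Promotional phrasing: coupon codes, "DM me" offers.
    "promotional": re.compile(
        r"\b(coupon|promo code|discount code|use code|dm me)\b", re.IGNORECASE
    ),
}


def reject_reasons(text: str) -> list:
    """Return the names of every rule the review text trips, in RULES order."""
    return [name for name, pattern in RULES.items() if pattern.search(text)]


print(reject_reasons("Great kettle. DM me for a discount code!"))  # ['promotional']
print(reject_reasons("Text me at 555-123-4567 for details"))       # ['personal_data']
print(reject_reasons("Boils fast, but the lid feels flimsy."))     # []
```

Patterns like these produce false positives, since a model number can look like a phone number. A match is better used to queue a review than to reject it automatically.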

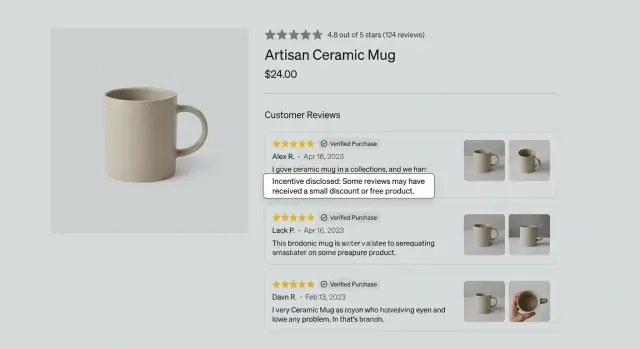

Incentives need special handling. Discounts, free items, credits, and giveaways can produce reviews that look honest but are still biased. If there's any incentive, require disclosure inside the review. If timing suggests a campaign (many 5-star reviews minutes after an incentive email), treat it as a campaign in your reporting and label those reviews clearly.
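The campaign-timing rule can be made mechanical. This sketch assumes each review records whether the reviewer disclosed an incentive, and that you know when the incentive email went out. The two-hour window and the label strings are placeholders.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Review:
    id: str
    posted_at: datetime
    disclosed_incentive: bool  # reviewer included an incentive disclosure


def campaign_labels(reviews, email_sent_at, window=timedelta(hours=2)):
    """Label reviews tied to an incentive send.

    Disclosed reviews get the public label; undisclosed reviews that land
    inside the window after the incentive email are marked for a check.
    """
    labels = {}
    for r in reviews:
        in_window = email_sent_at <= r.posted_at <= email_sent_at + window
        if r.disclosed_incentive:
            labels[r.id] = "Incentive disclosed"
        elif in_window:
            labels[r.id] = "Campaign window: check disclosure"
        else:
            labels[r.id] = None
    return labels


sent = datetime(2025, 9, 1, 10, 0)
batch = [
    Review("a", sent + timedelta(minutes=5), disclosed_incentive=True),
    Review("b", sent + timedelta(minutes=7), disclosed_incentive=False),
    Review("c", sent - timedelta(days=3), disclosed_incentive=False),
]
print(campaign_labels(batch, sent))
# {'a': 'Incentive disclosed', 'b': 'Campaign window: check disclosure', 'c': None}
```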

Define conflicts of interest upfront. Employees, contractors, brand partners, affiliates, family members, and direct competitors shouldn't post "normal" customer reviews. If you allow certain groups, mark them clearly so shoppers understand the context.

Refunds and "review for support" situations also need a firm rule: support can't ask for a positive review to unlock help, refunds, replacements, or faster service. If a review is tied to a dispute (chargeback, return, refund request), decide whether to pause publishing until the case is resolved, or publish with a neutral label such as "Order issue reported" without exposing private details.

When rules are broken, be consistent about outcomes. Most teams only need a few actions they can defend:

- Reject before publish (with a short reason)

- Remove after publish (with an internal log)

- Keep but label (for disclosed incentives or known relationships)

- Limit account actions (rate limits or bans)

- Escalate for organized fraud or harassment

Example: if 30 reviews arrive in one hour and 25 share the same phrase, pause publishing, check account creation dates and purchase verification, and reject the coordinated set. Keep only what is unique and verifiable.
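That example combines two signals: volume well above normal, and a large share of reviews repeating one phrase. Here is a minimal sketch, assuming you know a baseline hourly rate. The 5x spike factor, the 50% shared-phrase ratio, and the 4-word phrase length are hypothetical defaults.

```python
import re
from collections import Counter


def top_shared_phrase(texts, n=4):
    """Most common n-word phrase and how many reviews contain it."""
    counts = Counter()
    for text in texts:
        words = re.findall(r"[a-z']+", text.lower())
        # A set, so each review counts a phrase at most once.
        counts.update({" ".join(words[i:i + n]) for i in range(len(words) - n + 1)})
    return counts.most_common(1)[0] if counts else ("", 0)


def should_pause(texts, baseline_per_hour, spike_factor=5.0, shared_ratio=0.5):
    """Pause publishing when volume spikes AND many reviews share one phrase."""
    if not texts:
        return False
    _, hits = top_shared_phrase(texts)
    volume_spike = len(texts) >= spike_factor * max(baseline_per_hour, 1.0)
    coordinated = hits / len(texts) >= shared_ratio
    return volume_spike and coordinated


# The scenario above: 30 reviews in one hour, 25 sharing the same phrase.
last_hour = ["Best purchase ever, highly recommend!"] * 25 + [
    "Strap is thinner than expected",
    "Arrived late but fits well",
    "Color matches the photos",
    "Zipper sticks a little",
    "Good size for a laptop",
]
print(should_pause(last_hour, baseline_per_hour=1))  # True
```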

Step-by-step moderation workflow

A good workflow approves the helpful majority quickly and uses human time only where risk is higher.

1) Set auto-approve vs queue rules

Decide what you can safely publish right away and what needs a quick look.

Auto-approve works best for low-risk cases, like established accounts with verified purchases and normal language. Queue a review when it's higher risk: a first-time reviewer, a brand-new account, unusual timing (like a review posted minutes after purchase), repeated phrasing that matches other reviews, or any content that includes coupon codes, contact details, or safety/medical/legal claims.
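These routing rules fit in one small function. This is a sketch under stated assumptions: the `Submission` fields, the flag names (imagined as outputs of earlier text checks), the 7-day "new account" cutoff, and the 60-minute "posted right after purchase" cutoff are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Flags assumed to come from earlier text checks (hypothetical names).
RISKY_FLAGS = {"personal_data", "promotional", "near_duplicate", "safety_medical_legal"}


@dataclass
class Submission:
    verified_purchase: bool
    account_age_days: int
    prior_reviews: int
    minutes_since_purchase: Optional[float] = None  # None when no order matched
    flags: List[str] = field(default_factory=list)


def route(s: Submission, min_minutes_after_purchase: float = 60) -> Tuple[str, List[str]]:
    """Return ("auto_approve", []) or ("queue", reasons)."""
    reasons = []
    if not s.verified_purchase:
        reasons.append("no verified purchase")
    if s.prior_reviews == 0:
        reasons.append("first-time reviewer")
    if s.account_age_days < 7:
        reasons.append("new account")
    if (s.minutes_since_purchase is not None
            and s.minutes_since_purchase < min_minutes_after_purchase):
        reasons.append("posted minutes after purchase")
    reasons.extend(f"flag: {name}" for name in sorted(RISKY_FLAGS.intersection(s.flags)))
    return ("queue", reasons) if reasons else ("auto_approve", [])


print(route(Submission(True, 400, 12, minutes_since_purchase=20160)))
# ('auto_approve', [])
print(route(Submission(True, 2, 0, minutes_since_purchase=5)))
# ('queue', ['first-time reviewer', 'new account', 'posted minutes after purchase'])
```

Returning the reasons, not just the decision, gives moderators a head start and feeds the reusable notes described later in this workflow.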

2) Triage in three buckets

When a review lands in the queue, sort it into one of three buckets: obvious spam, unclear, or acceptable.

Obvious spam includes random text, promotions, off-topic rants, and competitor sabotage. Acceptable reviews can be approved quickly, even if they're short or negative. The unclear middle group is where you do one extra check.
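The three buckets can be expressed as a small decision function. This sketch assumes that flags from earlier checks are passed in as a set of hypothetical names. The star rating and review length are deliberately not inputs, so short or negative reviews are not penalized.

```python
from enum import Enum


class Bucket(Enum):
    SPAM = "obvious spam"
    UNCLEAR = "unclear"
    ACCEPTABLE = "acceptable"


# Hypothetical flag names for the "obvious spam" patterns listed above.
SPAM_FLAGS = {"promotional", "near_duplicate", "off_topic", "gibberish"}


def triage(flags: set, verified_purchase: bool) -> Bucket:
    """Sort a queued review into one of three buckets."""
    if flags & SPAM_FLAGS:
        return Bucket.SPAM
    if flags or not verified_purchase:
        # Anything else that raised a flag, or has no purchase match,
        # gets one extra check instead of a snap decision.
        return Bucket.UNCLEAR
    return Bucket.ACCEPTABLE


print(triage({"promotional"}, verified_purchase=True))  # Bucket.SPAM
print(triage(set(), verified_purchase=True))            # Bucket.ACCEPTABLE
```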

3) Use reusable moderator notes

Moderation gets inconsistent when every decision is written from scratch. Create a small set of internal note templates that explain the reason in plain language, such as "Removed: includes personal contact info" or "Queued: needs order verification." If you message the reviewer, keep it calm and specific.

4) Response times and escalation rules

Set a time target so the queue doesn't become a black hole. Same day or within 24 hours is often enough.

Escalate immediately when you see threats or harassment, personal data (phone, address, email), defamation claims or legal threats, safety issues (injury reports, hazards), or payment/account takeover claims.
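Here is a sketch of how the time target and the escalation list might be combined. It assumes issues are tagged with hypothetical names and uses a 24-hour target.

```python
from datetime import datetime, timedelta

# Hypothetical tags that skip the normal queue entirely.
ESCALATE_NOW = {
    "threat", "harassment", "personal_data",
    "defamation_or_legal", "safety", "payment_or_takeover",
}


def next_action(tags: set, queued_at: datetime, now: datetime,
                target: timedelta = timedelta(hours=24)) -> str:
    """Return "escalate", "overdue", or "in_queue" for a queued review."""
    if tags & ESCALATE_NOW:
        return "escalate"
    if now - queued_at > target:
        return "overdue"
    return "in_queue"


t0 = datetime(2025, 9, 14, 9, 0)
print(next_action({"safety"}, t0, t0))                   # escalate
print(next_action(set(), t0, t0 + timedelta(hours=30)))  # overdue
print(next_action(set(), t0, t0 + timedelta(hours=2)))   # in_queue
```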

5) Add an appeal and resubmission path

If you reject a review, tell the person exactly what to fix and allow resubmission. For example: "Please remove the phone number and keep the review focused on the product." Appeals protect honest customers and reduce angry repeat posts.

Applied consistently, this approach makes it clear you moderate for safety and accuracy, not for perfect ratings.

Photo proof: how to collect it and keep it safe

Photos and short videos can turn "I love it" into something shoppers believe. They also help you spot fake posts faster. The key is to ask at the right time and handle uploads carefully so you don't create privacy problems.

Ask for photos when the review carries extra risk. That usually means high-return categories (beauty, supplements, luxury items, tickets) or claims that feel too good to be true. It can also make sense when an account is new but the review is unusually detailed, or when a product suddenly gets a wave of perfect scores.
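Those triggers can be turned into a checklist the system evaluates before it asks for a photo. This is a minimal sketch. The category set mirrors the examples above, while the 7-day, 150-word, and 90% thresholds are assumptions you would adjust. It returns reasons instead of a bare yes or no, so the request to the customer can explain why.

```python
# Categories taken from the examples above; extend for your catalog.
HIGH_RISK_CATEGORIES = {"beauty", "supplements", "luxury", "tickets"}


def photo_request_reasons(category, account_age_days, review_words,
                          recent_five_star_share, too_good_flag=False):
    """Return reasons to ask for photo proof; an empty list means don't ask."""
    reasons = []
    if category in HIGH_RISK_CATEGORIES:
        reasons.append("high-risk category")
    if account_age_days < 7 and review_words >= 150:
        reasons.append("new account with an unusually detailed review")
    if recent_five_star_share >= 0.9:
        reasons.append("sudden wave of perfect scores")
    if too_good_flag:  # set by a moderator; hard to detect automatically
        reasons.append("claim that seems too good to be true")
    return reasons


print(photo_request_reasons("kitchen", 400, 60, 0.55))  # []
print(photo_request_reasons("beauty", 2, 220, 0.95))
```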

Define what counts as acceptable proof so customers aren't guessing. Keep it simple: a product-in-use photo, packaging with the item visible, a receipt or order confirmation screenshot with sensitive fields covered, a short video showing multiple angles, or issue photos for complaints (damage, missing parts, wrong color).

Privacy is where many review programs fail. People upload more than they realize, and you're responsible for what you publish. Set clear rules and use light redaction before anything goes live: hide addresses, phone numbers, emails, and full names; blur faces; blur license plates and apartment numbers; remove metadata that can include location; and limit staff access.

You also need basic fraud checks. Look for duplicate images across accounts, repeated backgrounds, watermarks from other sites, and "template" photos that show up across many products.
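Exact duplicate images across accounts are cheap to catch with a content hash. This sketch uses SHA-256 from Python's standard library and assumes uploads are available as (account id, raw bytes) pairs.

```python
import hashlib
from collections import defaultdict


def duplicate_images(uploads):
    """Map each image digest used by more than one account to those accounts.

    `uploads` is an iterable of (account_id, image_bytes) pairs.
    """
    accounts_by_digest = defaultdict(set)
    for account_id, data in uploads:
        accounts_by_digest[hashlib.sha256(data).hexdigest()].add(account_id)
    return {
        digest: sorted(accounts)
        for digest, accounts in accounts_by_digest.items()
        if len(accounts) > 1
    }


uploads = [
    ("acct1", b"img-A"),
    ("acct2", b"img-A"),  # same bytes from a different account
    ("acct3", b"img-B"),
    ("acct1", b"img-A"),  # re-upload by the same account is not cross-account reuse
]
print(list(duplicate_images(uploads).values()))  # [['acct1', 'acct2']]
```

A byte-level hash only catches identical files. Resized, cropped, or re-compressed copies need perceptual hashing, which usually means a third-party image library and is not shown here.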

For display, make photo proof helpful but not distracting. A small "photo attached" badge near the star rating and thumbnails inside the review card usually work well, with an optional gallery for shoppers who want more.

Incentives without losing credibility

Incentives aren't automatically bad. The problem is when shoppers feel like the rating was bought. A simple rule keeps you honest: you can reward participation, but you can't reward a specific outcome.

Separate these ideas in your policy language:

- Rewarded for purchase: points or store credit for buying (no review required).

- Rewarded for review: a thank-you for leaving feedback (a review is required, the rating is not).

That split reduces suspicion because shoppers can see you're not paying for praise.

Make disclosure obvious and consistent

If a review is part of a campaign (free sample, discount, early access, affiliate relationship), require a short disclosure sentence and add your own label on the review card. Don't hide it in a tooltip or at the bottom of the page. Use the same wording everywhere.

Good disclosure is plain and specific, like: "I received a free sample in exchange for an honest review." Avoid vague language that reads like a cover story.

Set guardrails that prevent credibility loss

Some incentive patterns destroy trust fast and should be banned:

- Only 5-star reviews get rewarded.

- We'll refund you if you leave a review.

- Change your review and we'll send a coupon.

- We'll remove your bad review if you contact support.

What's safe is rewarding the act of sharing feedback, regardless of rating. Pay the same amount for 1 star or 5 stars, and allow negative reviews to stand if they follow your rules.

Example: you run a 200-unit sampling program for a new blender. Every participant gets the same $10 credit after submitting a review, whether they loved it or hated it. On the product page, those reviews show a clear "Incentivized: free sample" label. Shoppers may disagree with the opinions, but they won't doubt where the reviews came from.
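The budget math shows why this design is easy to defend: 200 participants × $10 = $2,000, fixed before a single review is read. Here is a minimal sketch of the payout step, assuming a hypothetical `SampleReview` record. The rating is stored but never consulted when computing the credit.

```python
from dataclasses import dataclass

FLAT_CREDIT_USD = 10  # the blender example's credit


@dataclass
class SampleReview:
    participant: str
    rating: int  # 1 to 5; stored for display, never read for payout


def settle_campaign(reviews):
    """Give every submitted review the same credit and the same public label."""
    payouts = {r.participant: FLAT_CREDIT_USD for r in reviews}
    labels = {r.participant: "Incentivized: free sample" for r in reviews}
    return payouts, labels


batch = [SampleReview(f"p{i}", rating=(i % 5) + 1) for i in range(200)]
payouts, labels = settle_campaign(batch)
print(sum(payouts.values()))  # 2000
```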

Handling campaigns (sampling, beta testers, affiliates)

Campaigns are fine if you keep them clearly separate from regular buyers. Label sampling reviews, label early access feedback, and require affiliate disclosure. Don't mark these as "verified purchase" unless the reviewer actually bought the item. Staff and friends-and-family should not post customer reviews at all; if you collect their feedback, place it in a separate "team feedback" area.
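One way to keep badges honest is to derive them from data instead of letting campaigns choose them. This sketch assumes each review carries a hypothetical `source` value and a flag for whether a matching order exists. The label strings are placeholders.

```python
from typing import List

# Hypothetical campaign sources and their public labels.
CAMPAIGN_LABELS = {
    "sample": "Incentivized: free sample",
    "early_access": "Early access feedback",
    "affiliate": "Affiliate relationship disclosed",
}


def review_badges(source: str, has_matching_order: bool) -> List[str]:
    """Badges for a review card, derived from data rather than chosen by hand."""
    if source == "staff":
        # Staff and friends-and-family feedback belongs in a separate area.
        raise ValueError("route staff feedback to the team feedback section")
    badges = []
    if has_matching_order:  # only a real order earns "Verified purchase"
        badges.append("Verified purchase")
    if source in CAMPAIGN_LABELS:
        badges.append(CAMPAIGN_LABELS[source])
    return badges


print(review_badges("sample", has_matching_order=False))  # ['Incentivized: free sample']
print(review_badges("customer", has_matching_order=True))  # ['Verified purchase']
```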

The goal isn't to avoid incentives. It's to make relationships obvious so readers can judge opinions with context instead of feeling tricked.

What to show on the product page

A review section earns trust when it answers two questions quickly: who is speaking, and what exactly happened.

Make context visible next to each review. "Verified purchase" is the big one. If someone received a discount, free sample, or store credit, show an "Incentive disclosed" label on the review card. Always show the review date. Location can be optional, but keep it broad if you include it.

Add light structure so reviews are comparable

Free text is valuable, but a little structure reduces vague hype and helps shoppers scan. A few fields go a long way: short pros and cons, usage duration ("Used for 3 weeks"), the product variant (size, color, model, subscription plan), and a simple "Would you recommend?" toggle.

Sorting matters, too. Offer "Most helpful," "Newest," and rating filters. Avoid defaults that bury negatives. If you use "Most helpful," make sure detailed critical reviews can rise to the top.
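One common way to keep "Most helpful" from favoring reviews with only a few votes is to rank them by the lower bound of the Wilson score interval on the helpful-vote share. This is a standard statistical technique that the section does not name. The sketch assumes each review has counts of helpful votes and total votes, and it uses z = 1.96 (about 95% confidence).

```python
import math


def wilson_lower_bound(helpful: int, total: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval for the helpful-vote share."""
    if total == 0:
        return 0.0
    p = helpful / total
    denom = 1 + z * z / total
    centre = p + z * z / (2 * total)
    margin = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total))
    return (centre - margin) / denom


def most_helpful(reviews: list) -> list:
    """Sort by confidence in helpfulness; the star rating plays no part."""
    return sorted(reviews, key=lambda r: wilson_lower_bound(r["helpful"], r["votes"]),
                  reverse=True)


reviews = [
    {"id": "five_star_short", "stars": 5, "helpful": 3, "votes": 3},
    {"id": "two_star_detailed", "stars": 2, "helpful": 45, "votes": 50},
]
print([r["id"] for r in most_helpful(reviews)])  # ['two_star_detailed', 'five_star_short']
```

With this ordering, a detailed 2-star review rated helpful by 45 of 50 voters (lower bound about 0.79) ranks above a 5-star review with 3 of 3 (about 0.44), because three votes prove very little.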

Give shoppers two lightweight controls: "Was this helpful?" and "Report abuse." Protect both from brigading by rate-limiting suspicious bursts.
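Rate limiting votes can be as simple as a sliding window per voter. This sketch assumes the key is an account id (it could equally be a device or IP signal) and uses hypothetical limits. The `now` argument is injectable so the behavior is easy to test.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class VoteRateLimiter:
    """Allow at most `max_votes` per key within a sliding time window."""

    def __init__(self, max_votes: int = 20, window_seconds: float = 3600.0):
        self.max_votes = max_votes
        self.window = window_seconds
        self._events = defaultdict(deque)  # key -> timestamps of accepted votes

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        events = self._events[key]
        # Forget votes that have slid out of the window.
        while events and now - events[0] >= self.window:
            events.popleft()
        if len(events) >= self.max_votes:
            return False
        events.append(now)
        return True


limiter = VoteRateLimiter(max_votes=3, window_seconds=60)
print([limiter.allow("acct-42", now=t) for t in (0, 1, 2, 3)])  # [True, True, True, False]
```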

Be transparent about moderation

If you remove or edit a review, leave a short factual note like "Removed: included personal information" or "Edited: removed profanity." This signals you're moderating for safety, not for perfect ratings.

Example: handling a suspicious review spike

On Tuesday morning, a small skincare store wakes up to 47 new reviews on a single bestseller overnight. Last week, that product averaged 2 to 3 reviews per day. The rating jumps from 4.2 to 4.8 in a few hours.

A quick scan shows patterns that match the store's anti-spam policy: most reviews come from brand-new accounts, several use the same phrases word-for-word, many lack purchase verification, a cluster shares device and location signals, and almost none include photos.

Moderation decisions follow written rules, not gut feelings. The team takes three actions.

First, they hold the entire spike for review so the product page doesn't swing instantly. Shoppers still see the previous rating and a note that some new reviews are being checked.

Second, they split reviews into lanes: reject repeated text and coordinated posts, request proof for unique reviews without a purchase match, approve with labels for verified purchases, and approve older accounts with consistent history.
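The lane split follows directly from the signals the team checked. Here is a sketch with hypothetical field names. It also assumes that an established account with approved history can skip the proof request, which is one reading of the fourth lane, and it uses a 180-day cutoff for "older account".

```python
from dataclasses import dataclass


@dataclass
class SpikeReview:
    id: str
    repeated_text: bool       # matched other reviews in the spike
    in_signal_cluster: bool   # shares device/location signals with the cluster
    verified_purchase: bool
    account_age_days: int
    prior_approved_reviews: int


def assign_lane(r: SpikeReview, established_days: int = 180) -> str:
    """Return "reject", "approve_with_labels", "approve_history", or "request_proof"."""
    if r.repeated_text or r.in_signal_cluster:
        return "reject"
    if r.verified_purchase:
        return "approve_with_labels"
    if r.account_age_days >= established_days and r.prior_approved_reviews > 0:
        return "approve_history"
    return "request_proof"


print(assign_lane(SpikeReview("r7", False, False, False, 1, 0)))  # request_proof
```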

Third, they send a short proof request to the "request proof" group: reply with an order number, delivery zip code, or a photo of the item in hand with personal details covered. If proof arrives, the review is approved and marked as verified. If not, it stays hidden.

One edge case matters: a genuine customer leaves a short review with no photo, and there's no purchase match because they bought as a guest. The store requests proof, the customer replies with a delivery email and date, and the review is approved. It stays short, unglamorous, and believable, which is the point.
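The guest-checkout case is a reminder to accept more than one kind of proof. Below is a sketch of the matching step, assuming orders are stored as dicts with hypothetical `order_number`, `email`, and `delivery_date` keys. An exact order number, or a delivery email plus delivery date as in the guest example, counts as a match. Zip codes and photos are left to a moderator in this sketch.

```python
def proof_matches(proof: dict, orders: list) -> bool:
    """True if the reviewer's reply matches a stored order."""
    number = (proof.get("order_number") or "").strip()
    email = (proof.get("email") or "").strip().lower()
    delivery_date = proof.get("delivery_date")
    for order in orders:
        # Guard with `number` so a missing value never matches a missing value.
        if number and number == order.get("order_number"):
            return True
        same_email = email and email == (order.get("email") or "").lower()
        if same_email and delivery_date and delivery_date == order.get("delivery_date"):
            return True
    return False


orders = [{"order_number": "A1001", "email": "sam@example.com", "delivery_date": "2025-09-10"}]
print(proof_matches({"email": "Sam@Example.com", "delivery_date": "2025-09-10"}, orders))  # True
```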

Common mistakes that backfire

The fastest way to lose trust is to look like you're managing the story instead of managing quality. Shoppers notice patterns, and once they suspect manipulation, even honest feedback starts to feel questionable.

A common trigger is a rating spread that looks too perfect. If a page has almost no 2 to 4 star reviews, people assume negatives were filtered out. A healthier approach is to publish critical reviews that follow your rules, then respond calmly and explain fixes.
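A quick way to see your page the way a skeptical shopper does is to measure how much of the distribution sits in the middle. Here is a sketch. The 5% alert threshold and the 50-review minimum are assumptions, not benchmarks.

```python
def middle_share(counts: dict) -> float:
    """Share of 2-, 3-, and 4-star reviews; `counts` maps stars to number of reviews."""
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return sum(counts.get(stars, 0) for stars in (2, 3, 4)) / total


def looks_too_perfect(counts: dict, min_share: float = 0.05, min_reviews: int = 50) -> bool:
    """Flag pages with enough reviews but almost nothing in the middle."""
    return sum(counts.values()) >= min_reviews and middle_share(counts) < min_share


print(looks_too_perfect({5: 190, 4: 4, 1: 6}))                # True (2% in the middle)
print(looks_too_perfect({5: 120, 4: 45, 3: 18, 2: 9, 1: 8}))  # False (36%)
```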

Another mistake is letting customer support rewrite reviews. Even small edits can make a review feel scripted. If something is unclear or includes private info, ask the reviewer to resubmit instead.

Incentives also backfire when treated like a secret. If you offer discounts, points, or giveaways, label those reviews clearly from day one. Adding labels later often creates more suspicion than the incentive itself.

Photo proof is powerful, but making it mandatory for every review is a trap. Many legitimate buyers won't upload images for privacy or time reasons. Keep photos optional, and reserve stricter photo requirements for high-risk categories or unusually high-value claims.

Don't treat "helpful" votes as automatically safe, either. They can be bought or brigaded just like reviews, which quietly pushes spam to the top.

Quick checklist and next steps

Trust builds when rules are clear, checks are consistent, and shoppers can see enough context to judge reviews for themselves.

Keep a short checklist near your moderation queue:

- Write an anti-spam policy in plain language (no copied text, no irrelevant content, no harassment, no impersonation).

- Use consistent disclosure for incentives and show it next to the review.

- Offer proof options (verified purchase when possible, optional photo uploads, and a "used it for X days" field).

- Define enforcement steps (warn for minor issues, remove for clear abuse, block repeat offenders) and log actions internally.

- Run quick fraud checks before approving (timing spikes, repeated phrases, brand-new accounts posting only 5-star reviews, reviews that don't match an order or variant).

To avoid "we're censoring bad reviews" accusations, track a few basics on a schedule: approval rate by category, top removal reasons, review-to-order ratio, photo rate, and time-to-approve.
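These numbers can come straight from a moderation log. Here is a minimal sketch, assuming each final decision is logged as a dict with hypothetical keys and that you know the order count for the same period.

```python
from collections import Counter, defaultdict
from statistics import median


def moderation_report(decisions: list, order_count: int) -> dict:
    """Summarize final moderation decisions for one reporting period.

    Each decision is a dict with hypothetical keys: "category", "status"
    ("approved", "rejected", or "removed"), "reason", "has_photo",
    and "minutes_to_decision".
    """
    per_category = defaultdict(lambda: [0, 0])  # category -> [approved, total]
    removal_reasons = Counter()
    approve_minutes = []
    with_photo = 0
    for d in decisions:
        counts = per_category[d["category"]]
        counts[1] += 1
        if d["status"] == "approved":
            counts[0] += 1
            approve_minutes.append(d["minutes_to_decision"])
        else:
            removal_reasons[d["reason"]] += 1
        with_photo += bool(d["has_photo"])
    n = len(decisions)
    return {
        "approval_rate_by_category": {c: a / t for c, (a, t) in per_category.items()},
        "top_removal_reasons": removal_reasons.most_common(3),
        "review_to_order_ratio": n / order_count if order_count else 0.0,
        "photo_rate": with_photo / n if n else 0.0,
        "median_minutes_to_approve": median(approve_minutes) if approve_minutes else None,
    }
```

Tracking these on a fixed schedule matters more than the exact definitions: a sudden drop in approval rate for one category is a prompt to check whether a rule is too strict, not proof that it is.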

A rollout plan that works: start light. Add labels (verified purchase, incentive disclosed) and a basic moderation queue first. Tighten rules based on what you actually see in your data.

If you want to prototype the review flow and the internal moderation tools quickly, Koder.ai (koder.ai) can help you build the review UX and an admin queue from a chat-based plan, then iterate as your policy and edge cases become clearer.

FAQ

What are the easiest signs that a review section is being manipulated?

Start by scanning for patterns, not individual opinions. Red flags include repeated phrases across reviews, extreme praise with no details, lots of first-time accounts, and big timing bursts (many reviews in minutes or hours).

If the page looks “too perfect” (almost no 2–4 star reviews), shoppers often assume negatives were filtered out.

What makes a review feel real to shoppers?

Aim for a stronger signal, not a higher average rating. Encourage reviews that include:

- What was bought (variant/model/plan)

- How it was used and for how long

- One clear pro and one clear con

- Any tradeoffs (setup time, sizing, shipping, learning curve)

A balanced review usually feels more believable than pure praise.

What anti-spam rules should we write down first?

Keep it short and specific, and apply it the same way every time. A practical baseline:

- Ban duplicate/copy-paste templates and keyword-stuffed text

- Remove harassment, hate, threats, and personal data

- Reject promo content (coupon codes, “DM me,” competitor pitches)

- Require disclosure for any incentive

Consistency matters more than perfection.

How do we decide which reviews to auto-approve and which to send to moderation?

A simple default is:

- Auto-approve: verified purchase + normal language + no risky claims

- Queue: new accounts, unusual timing (minutes after purchase), repeated phrasing, contact info, coupon codes, or safety/medical/legal claims

This keeps most honest reviews fast while focusing human effort where risk is higher.

What’s a simple moderation workflow that won’t overwhelm a small team?

Use three buckets:

- Obvious spam: promotions, off-topic rants, nonsense text, coordinated copy-paste

- Unclear: no purchase match, suspicious timing, very extreme claims with no context

- Acceptable: relevant experience (even if short or negative)

Only the “unclear” bucket should consume extra checks.

When should we request photo proof, and when should we avoid it?

Default: keep photos optional. Ask for them when the risk is higher, such as:

- High-return categories or high-value items

- A sudden wave of perfect reviews

- Unusually detailed reviews from brand-new accounts

- Claims that sound too good to be true

Offer easy proof options (product-in-use photo, packaging photo, issue photo for damage) so customers aren’t guessing.

How do we handle privacy and safety with photo and video reviews?

Assume people will accidentally upload sensitive info. Before publishing, use light redaction:

- Blur addresses, phone numbers, emails, full names

- Blur faces, license plates, apartment numbers

- Remove location metadata

- Limit staff access to original files

Also watch for fraud signals like the same image reused across multiple accounts.

How can we use incentives without destroying review credibility?

Reward the act of leaving feedback, not the rating. Two safe defaults:

- Everyone gets the same reward for submitting a review (1 star or 5 stars)

- Incentivized reviews must include a clear disclosure, and you label them on the review card

Never offer rewards only for positive reviews or for changing a negative review.

What should we display on the product page to make reviews more trustworthy?

Show enough context for quick judgment:

- Verified purchase (or subscription) status

- Incentive disclosure label when applicable

- Review date

- Variant/plan/model and usage duration

Add light structure (pros/cons, “used for X weeks”) plus controls like “Was this helpful?” and “Report abuse,” with rate limits to prevent brigading.

What should we do when we get a sudden spike of suspicious reviews?

Pause publishing the spike so the rating doesn’t swing instantly, then triage:

- Reject coordinated duplicates

- Request proof for unique reviews without purchase matches

- Approve verified purchases (and label incentives)

If someone bought as a guest, accept alternate proof (order number, delivery details, or a redacted confirmation) so real customers aren’t punished.